UW’s AI Purple (2025): How Campus AI Platforms Advance Responsible Adoption

Explore UW’s AI Purple launch (2025): a governed, secure campus AI platform. This article covers official guidelines, practical rollout steps, and tips for monitoring institutional reputation.

Updated on 2025-10-09. Details such as launch date, access scope, and technical controls (e.g., data retention, logging) are evolving. We’ll refresh this article as UW publishes additional specifics.

The quick take: What “Purple” is and why it matters now

The University of Washington (UW) is preparing to launch “Purple,” a secure, campus‑wide generative AI platform operated by UW‑IT. UW‑IT’s roadmap positions Purple as an in‑house solution “available to all UW users” with essential, broad‑based capabilities—explicitly including text‑based interactions—under institutionally governed privacy, security, and ethical standards, per the 2024–2025 AI Strategy. See the description in UW‑IT’s AI Strategy and “AI Tools on the Rise” pages for the clearest official framing.

- According to the UW‑IT Technology Strategy Roadmap’s AI Strategy (2025), Purple is described as “in‑house AI … available to all UW users offering essential, broad‑based GenAI capabilities including text‑based …” (UW‑IT AI Strategy).

- UW‑IT’s “AI Tools on the Rise” adds: “Purple will be the University of Washington’s own generative AI platform, operated securely by UW‑IT … adhering to institutional standards for privacy, data security, and ethical use,” and notes its “coming soon” status in Fall 2025 (UW‑IT Upcoming AI Tools).

Why this matters: A university‑managed platform with clear governance can move higher education past generic “policy statements” toward operational responsibility—tying access, training, and monitoring to ethical commitments. With Purple, UW is signaling readiness to scale generative AI while keeping equity, privacy, and transparency front and center.

The governance foundation behind Purple

UW’s governance scaffold predates Purple’s launch, and it’s central to understanding how “responsible AI” becomes practice.

- The Office of the Provost’s AI Task Force stated in September 2024 that “the UW has a responsibility to lead … not only in potential applications, but in their ethical and equitable use.” Its working groups span teaching and learning, student services, research, infrastructure, and administration (Provost AI Task Force update, 2024). A campus‑wide survey and town halls in late 2024 informed the recommendations.

- UW‑IT’s AI Strategy sets staged milestones: publish initial UW AI guidelines (≈3 months), develop a university‑wide data strategy (≈6 months), scale computing and launch a vetted AI portfolio (≈1 year), and build proficiency via hiring, training, and communities of practice (≈2 years) (UW‑IT AI Strategy, 2025).

- Guardrails to anchor day‑one use appear in UW‑IT’s Generative AI General Use Guidelines, including: use only UW‑approved services with university data; comply with relevant policies and standards; remember that GenAI augments—not replaces—human judgment; and avoid uses that reinforce bias or barriers for underrepresented groups (UW‑IT General Use Guidelines, 2025).

Taken together, this governance stack—principles, guidelines, staged strategy—helps UW frame Purple not as “another tool,” but as a governed capability set that aligns operations with values.

From policy to platform: situating Purple in UW’s AI ecosystem

Purple is intended to complement a broader set of UW‑supported services, rather than replace them. UW‑IT’s Artificial Intelligence overview points to tailored solutions and supported tools such as Microsoft 365 Copilot Chat, Copilot for Microsoft 365 (Premium), GitHub Copilot, Workday, Zoom, and Adobe Creative Suite (UW‑IT Artificial Intelligence overview, 2025). The “AI Tools on the Rise” page also highlights Tillicum, the next‑generation AI‑accelerated research computing platform.

Teaching@UW emphasized in mid‑2025 that “currently, the only UW‑supported generative AI tool is Microsoft Copilot,” reflecting pre‑Purple conditions; the same guidance points to added protections available through UW’s Microsoft agreement (Teaching@UW AI+Teaching, June 2025). Purple’s “coming soon” status signals a shift toward a campus‑managed gen‑AI environment, but until UW‑IT publishes specifics, avoid assuming feature parity or integrations beyond what is documented.

A practical rollout playbook universities can adapt

What should peer institutions take from UW’s approach? Beyond the press‑release layer, responsible adoption hinges on disciplined rollout mechanics.

Governance and representation

- Establish an AI steering committee with representation from academic units, IT security, research computing, accessibility, compliance, and student voices.

- Publish campus AI guidelines alongside a clear data governance statement. Clarify acceptable use, disclosure norms in coursework, and assessment integrity.

Platform and security controls

- Prefer institution‑managed gen‑AI platforms with role‑based access, auditability, and data classification controls. Keep an “allowed tools” registry and review it quarterly.

- Coordinate with research computing to support high‑end needs (e.g., GPU access via platforms akin to UW’s Tillicum).
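
One way to make the “allowed tools” registry concrete is a small lookup that denies unregistered tools by default and flags entries overdue for review. The sketch below is illustrative only: the tool names, the three classification labels, and the 90‑day review interval are assumptions for the example, not UW’s actual registry or data classification scheme.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical classification labels, ordered from least to most sensitive.
CLASSIFICATION_ORDER = ["public", "restricted", "confidential"]


@dataclass
class ApprovedTool:
    name: str
    max_classification: str  # most sensitive data class the tool is approved for
    last_reviewed: date


def is_use_allowed(registry, tool_name, data_classification):
    """Return True only if the tool is registered and approved for this data class."""
    tool = registry.get(tool_name)
    if tool is None:
        return False  # unregistered tools are denied by default
    requested = CLASSIFICATION_ORDER.index(data_classification)
    approved = CLASSIFICATION_ORDER.index(tool.max_classification)
    return requested <= approved


def tools_due_for_review(registry, today, interval_days=90):
    """List tools whose last review is older than the assumed quarterly interval."""
    cutoff = today - timedelta(days=interval_days)
    return sorted(t.name for t in registry.values() if t.last_reviewed < cutoff)


# Illustrative entries only; both tool names are made up.
registry = {
    "campus-genai": ApprovedTool("campus-genai", "confidential", date(2025, 9, 1)),
    "vendor-chat": ApprovedTool("vendor-chat", "public", date(2025, 5, 15)),
}

allowed = is_use_allowed(registry, "vendor-chat", "confidential")
overdue = tools_due_for_review(registry, today=date(2025, 10, 9))
```

Denying by default keeps the registry authoritative: a tool is usable with university data only after someone has added and classified it, and the review check turns the quarterly cadence into a list someone can act on.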

Training and enablement

- Tier training by audience: faculty, staff, students. Use scenario‑based modules focused on privacy, bias mitigation, accessibility, and discipline‑specific workflows.

- Provide opt‑out mechanisms and accommodations, ensuring equitable access to training and tools.

Monitoring and measurement

- Define KPIs before launch: adoption rates by unit, policy compliance, incident reporting, equity impacts, and qualitative satisfaction.

- Run pilots in diverse departments; document lessons openly and update guidelines accordingly.
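
As a rough illustration of defining a KPI before launch, the sketch below computes adoption rate by unit from a list of usage events and flags units below a target. The unit names, event format, and 1% target are assumptions made for the example; a real measurement plan would draw on whatever usage data the platform actually exposes.

```python
from collections import defaultdict


def adoption_rate_by_unit(eligible_counts, usage_events):
    """Share of eligible users per unit who used the platform at least once.

    eligible_counts: dict mapping unit name -> number of eligible users
    usage_events: iterable of (unit, user_id) pairs, one per recorded use
    """
    active_users = defaultdict(set)
    for unit, user_id in usage_events:
        active_users[unit].add(user_id)  # a set counts each user once
    return {
        unit: (len(active_users[unit]) / count if count else 0.0)
        for unit, count in eligible_counts.items()
    }


def units_below_target(rates, target):
    """Return unit names whose adoption rate falls below the target, sorted."""
    return sorted(unit for unit, rate in rates.items() if rate < target)


# Made-up sample data for illustration.
eligible_counts = {"Biology": 200, "Libraries": 40}
usage_events = [
    ("Libraries", "u1"),
    ("Libraries", "u1"),  # repeat use by the same person
    ("Libraries", "u2"),
    ("Biology", "u3"),
]

rates = adoption_rate_by_unit(eligible_counts, usage_events)
lagging = units_below_target(rates, target=0.01)
```

Counting distinct users rather than raw events keeps a few heavy users from masking low uptake, which matters when adoption is also being read as an equity signal.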

Making “responsible” tangible: UW Radiology’s governance example

To ground the concept, UW Medicine’s Radiology unit outlines a detailed AI governance lifecycle—covering acquisition, installation, clinical deployment approvals, continuous monitoring, and documentation—with alignment to AAPM TG‑273 and ARCH‑AI recommendations. This illustrates how domain‑specific controls can coexist with campus‑wide principles, ensuring accountability among clinicians, IT, and leadership (UW Radiology AI governance, 2025). For research contexts, UW’s IRB guidance emphasizes bias assessment, data monitoring plans, and transparent consent materials when AI is involved (UW HSD IRB guidance on research using AI).

Monitoring external AI surfaces: the communications layer

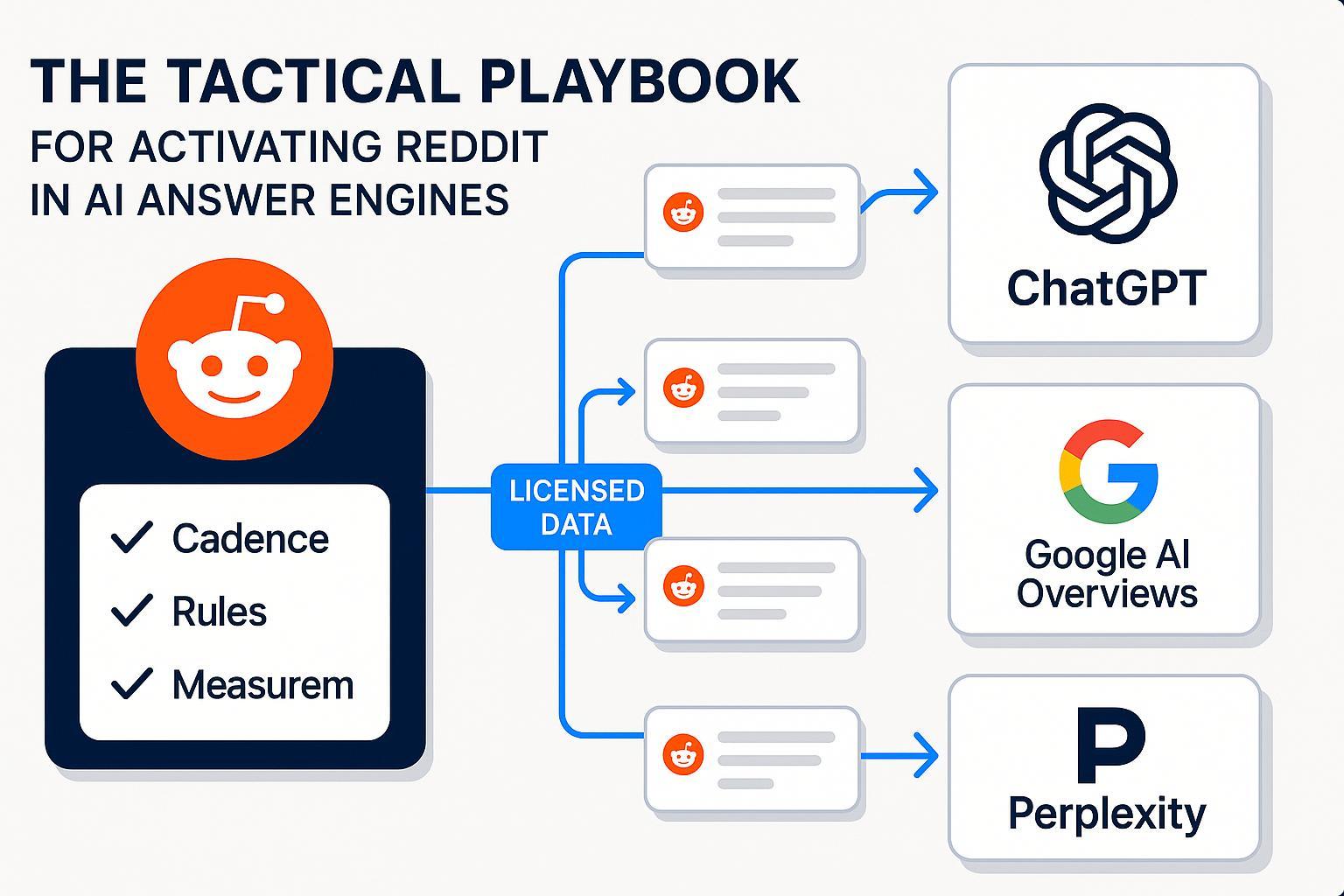

Even with strong internal governance, how your institution appears in consumer AI answers (ChatGPT, Perplexity, Google’s AI Overviews) shapes public understanding. Communications teams can use tools that track brand mentions and sentiment across AI responses to check that policies and programs are represented accurately. One such option is Geneo, an AI search visibility platform that monitors brand exposure and sentiment across generative engines. Disclosure: Geneo is our product.

For teams new to AI search visibility, two helpful starters:

- Understand the components of an AI Search Visibility Score and how to apply it to your institution’s narratives (definition and components).

- Get a foundation in Generative Engine Optimization (GEO) to influence how AI systems summarize your official resources (GEO ultimate guide).

Use these concepts to set a lightweight monitoring cadence: monthly snapshots of how AI surfaces answer mission‑critical queries (e.g., “UW AI policy,” “campus generative AI tool,” “student privacy with AI”), and route discrepancies back to the governance committee for correction via public documentation, FAQs, and outreach.
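
The monthly snapshot described above can start as something very simple. The sketch below assumes a team has collected answer text by hand (or exported it from a monitoring tool) and checks each answer for phrases the institution expects to see. The `Snapshot` structure, the surface labels, and the expected phrases are all hypothetical, and plain substring matching is a crude stand‑in for a real accuracy review.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    query: str    # the mission-critical question that was asked
    surface: str  # label for the AI product the answer came from
    answer: str   # answer text collected by the communications team


def find_discrepancies(snapshots, expected_phrases):
    """Return (query, surface, missing_phrases) for answers lacking expected phrases."""
    issues = []
    for snap in snapshots:
        text = snap.answer.lower()
        missing = [
            phrase
            for phrase in expected_phrases.get(snap.query, [])
            if phrase.lower() not in text
        ]
        if missing:
            issues.append((snap.query, snap.surface, missing))
    return issues


# Made-up sample data for illustration.
expected_phrases = {"campus generative AI tool": ["Purple", "UW-IT"]}
snapshots = [
    Snapshot("campus generative AI tool", "surface-a",
             "UW-IT is preparing Purple, a campus platform."),
    Snapshot("campus generative AI tool", "surface-b",
             "The university has no announced platform."),
]

issues = find_discrepancies(snapshots, expected_phrases)
```

Each entry in `issues` names the query, the surface, and what was missing, which is enough detail to route to the governance committee for a documentation or FAQ fix.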

Balanced perspective: peers pursuing campus‑managed GenAI

UW isn’t alone in pursuing institution‑managed platforms. The University of Michigan’s U‑M GPT offers access to multiple large models (e.g., GPT‑4o, Llama 3, Claude 3.5 Haiku) with community‑wide availability and FERPA‑aligned guidance, as documented across ITS pages in 2025 (U‑M GenAI main site). UT Austin’s “UT Spark” is positioned as an all‑in‑one AI platform for current faculty, staff, and students, powered by OpenAI and Microsoft Azure (UT Austin CTL – Generative AI tools). These examples point to a broader trend: universities are building governed, campus‑accessible GenAI to balance innovation with responsibility.

What to watch next (and a mini change‑log)

Fast‑moving details for Purple include launch date, access mechanics, feature scope, data handling specifics, and how it relates to existing services (e.g., Microsoft Copilot Chat, Tillicum). Expect updates to UW‑IT’s AI pages as the platform rolls out.

Mini change‑log (high‑level)

- 2025‑10‑09: Initial publication. Purple status marked “coming soon” per UW‑IT pages; capabilities referenced as text‑based per AI Strategy; governance sources linked.

Closing thoughts

Responsible campus AI isn’t a single announcement; it’s a sustained practice across governance, platforms, training, and monitoring. UW’s Purple—framed by the Provost’s task force and UW‑IT strategy—offers a timely case for peers to adapt. Start with governance and guidelines, stand up a vetted platform, teach responsibly, monitor outcomes (including how AI systems describe you), and iterate in the open.