Best Practices for Hyper-Personalized Content at Scale with Generative AI (2025)

Discover proven 2025 tactics for scalable, hyper-personalized content using generative AI. Step-by-step frameworks, governance, channel playbooks, and ROI for professional marketers.

If you want personalization that actually moves revenue in 2025, treat it as a governed, measurable, multichannel program rather than a string of one-off AI prompts. Teams that operationalize the full chain (data → segments → prompt systems → orchestration → QA → measurement) tend to see more durable gains, a theme echoed in McKinsey’s 2025 executive insights on AI-powered personalization.

Below is the field-tested playbook I use to help B2C and B2B teams ship hyper‑personalized content at scale—without breaking governance or SEO.

1) Build the program like a product: an end‑to‑end blueprint

The most reliable outcomes come from an assembly line you can audit and improve. Here’s the blueprint I’ve found repeatable across industries.

Data readiness and identity

- Unify first‑party data in a CDP (or equivalent) so you can build consent-aware profiles and address users across channels. A succinct explainer of identity resolution patterns is IBM’s overview of AI personalization building blocks (IBM, 2024+).

- Maintain consent state and preference flags per user; keep deterministic IDs (email, mobile advertising ID) and privacy‑preserving linkages.

- Data hygiene: set freshness SLAs (e.g., <24 hours for behavioral events), deduplicate profiles, and validate segment reachability.
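
As a rough illustration of the hygiene checks above, the sketch below deduplicates profiles, applies a freshness SLA, and filters to reachable users. The profile schema (`email`, `consent`, `last_event_at`) and the helper names are assumptions made for this example, not a CDP API; a real merge would also reconcile consent across duplicates rather than simply keep the newest record.

```python
from datetime import datetime, timedelta, timezone

FRESHNESS_SLA = timedelta(hours=24)  # mirrors the "<24 hours" example; adjust per event type


def dedupe_profiles(profiles):
    """Collapse profiles that share a normalized email, keeping the newest record."""
    merged = {}
    for profile in profiles:
        key = profile["email"].strip().lower()
        current = merged.get(key)
        if current is None or profile["last_event_at"] > current["last_event_at"]:
            merged[key] = profile
    return list(merged.values())


def is_fresh(profile, now):
    """True when the latest behavioral event is inside the SLA window."""
    return now - profile["last_event_at"] < FRESHNESS_SLA


def is_reachable(profile):
    """A profile is reachable only with consent and a usable address."""
    return profile["consent"] and bool(profile["email"].strip())


now = datetime(2025, 1, 10, 12, 0, tzinfo=timezone.utc)
profiles = [
    {"email": "Ana@example.com", "consent": True, "last_event_at": now - timedelta(hours=2)},
    {"email": "ana@example.com ", "consent": True, "last_event_at": now - timedelta(hours=30)},
    {"email": "bo@example.com", "consent": False, "last_event_at": now - timedelta(hours=1)},
]
clean = dedupe_profiles(profiles)
targetable = [p for p in clean if is_fresh(p, now) and is_reachable(p)]
```

Here the two "ana" records collapse into one, and the profile without consent is excluded from targeting even though its data is fresh.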

Segmentation ladder (crawl → walk → run)

- Crawl: Deterministic segments (e.g., lifecycle stage, category affinity) derived from first‑party interactions.

- Walk: Predictive scores (likelihood to purchase/churn, price sensitivity) to prioritize and tailor offers.

- Run: Contextual bandits/uplift modeling to adapt in real time while learning causal effects; this aligns with the direction of modern experimentation platforms and the emergence of adaptive personalization noted in Optimizely’s product advances (2024).
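
The first two rungs of the ladder can be sketched in a few lines. The field names (`orders`, `purchase_score`) and thresholds are hypothetical; the score is assumed to come from a model trained elsewhere.

```python
def deterministic_segment(user):
    """Crawl: rule-based lifecycle segment from first-party order history."""
    if user["orders"] == 0:
        return "prospect"
    if user["orders"] == 1:
        return "first_time_buyer"
    return "loyalist"


def prioritize(users, min_score=0.5):
    """Walk: keep users above a propensity threshold, highest score first."""
    eligible = [u for u in users if u["purchase_score"] >= min_score]
    return sorted(eligible, key=lambda u: u["purchase_score"], reverse=True)


users = [
    {"id": "u1", "orders": 0, "purchase_score": 0.72},
    {"id": "u2", "orders": 1, "purchase_score": 0.31},
    {"id": "u3", "orders": 6, "purchase_score": 0.88},
]
segments = {u["id"]: deterministic_segment(u) for u in users}
ranked_ids = [u["id"] for u in prioritize(users)]
```

The "run" rung replaces these static rules with adaptive allocation, which needs live feedback and is harder to show in isolation.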

Prompt systems, not ad‑hoc prompts

- Create reusable prompt templates with variable slots: {segment}, {persona pain}, {offer}, {channel}, {tone}, {compliance flags}.

- Ground generation with retrieval (RAG) over your product catalog, help center, or thought leadership to minimize hallucinations and keep claims accurate.

- Maintain a prompt library with versioning, examples of good/poor outputs, and guardrails for sensitive categories (claims, finance, health).
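
A minimal sketch of a versioned prompt template with variable slots follows. The registry layout, the `render_prompt` helper, and the `grounding_facts` slot are illustrative assumptions; the point is that a missing slot fails loudly instead of producing a half-filled prompt.

```python
from string import Formatter

# Hypothetical in-memory registry keyed by (template name, version).
PROMPT_TEMPLATES = {
    ("email_subject", "v2"): (
        "Write one email subject line for the {segment} segment.\n"
        "Pain point: {persona_pain}\n"
        "Offer: {offer}\n"
        "Channel: {channel}\n"
        "Tone: {tone}\n"
        "Compliance flags: {compliance_flags}\n"
        "Use only these facts:\n{grounding_facts}"
    ),
}


def render_prompt(name, version, **slots):
    """Fill a template, raising if any slot it declares is missing."""
    template = PROMPT_TEMPLATES[(name, version)]
    required = {field for _, field, _, _ in Formatter().parse(template) if field}
    missing = required - set(slots)
    if missing:
        raise ValueError(f"missing slots: {sorted(missing)}")
    return template.format(**slots)


prompt = render_prompt(
    "email_subject",
    "v2",
    segment="loyalist",
    persona_pain="running out of refills",
    offer="free shipping on subscriptions",
    channel="email",
    tone="warm, concise",
    compliance_flags="no health claims",
    grounding_facts="- Subscription ships every 30 days",
)
```

Keeping the version in the lookup key makes it possible to log exactly which template produced a given output.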

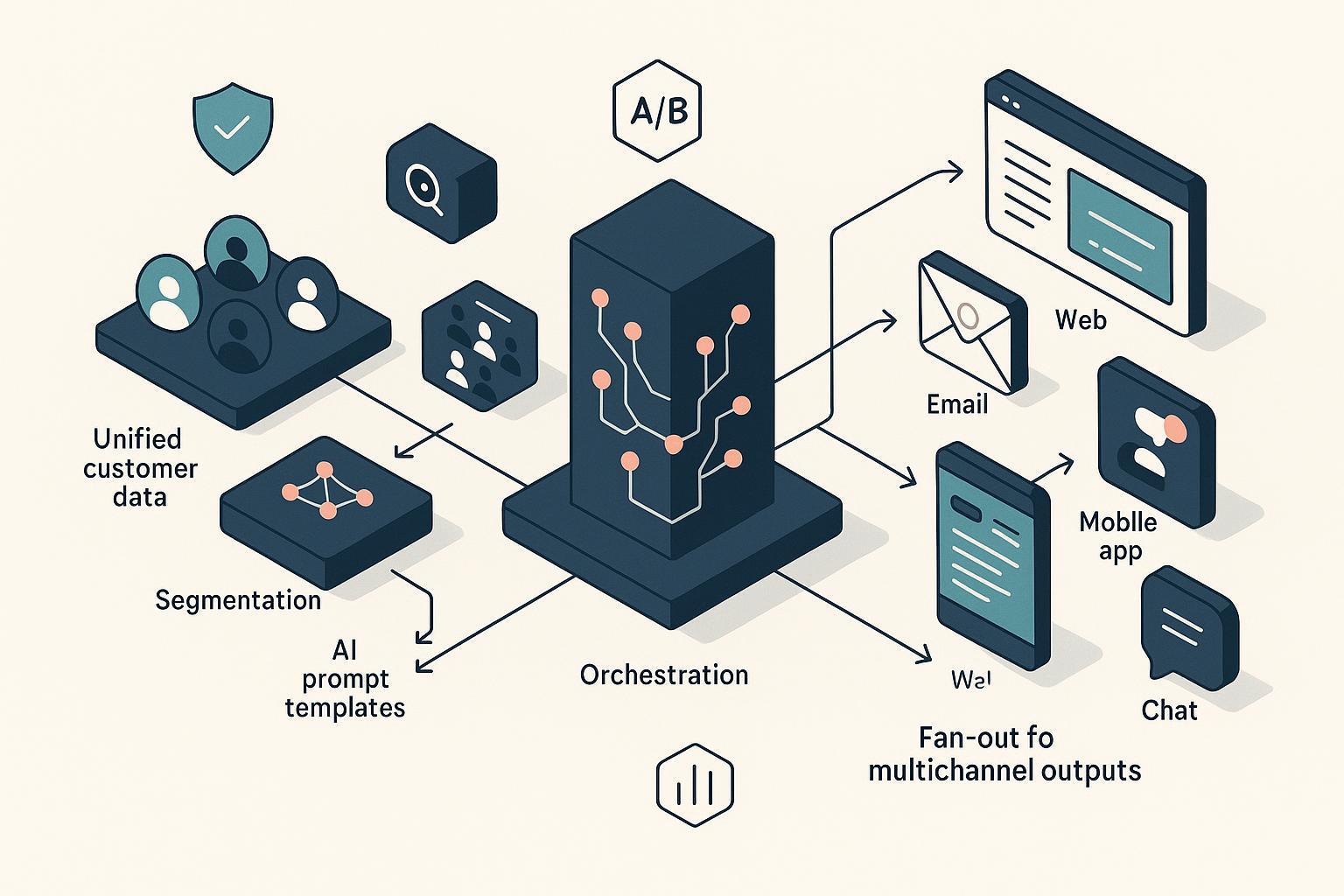

Workflow orchestration and delivery

- Connect your CDP, CMS, ESP, ad platforms, and app push provider via an automation layer; coordinate frequency capping and conversion suppression across channels.

- Use server‑side delivery where possible to avoid flicker and to maintain robust measurement, especially on the web.

- Ensure cross‑device consistency: if a user converts on mobile, suppress redundant desktop offers. Omnichannel playbooks such as Algonomy’s omnichannel personalization guidance (2024) emphasize synchronized activation and dynamic content.
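
Cross-channel frequency capping and conversion suppression reduce to one shared decision function that every channel consults before sending. This is a sketch under assumed values (three touches per seven days) and an assumed in-memory touch log; in practice the log would live in the orchestration layer.

```python
from datetime import datetime, timedelta

MAX_TOUCHES = 3             # assumed cap across all channels
WINDOW = timedelta(days=7)  # assumed rolling window


def should_send(user_id, now, touch_log, converted_users):
    """Apply conversion suppression first, then the cross-channel cap."""
    if user_id in converted_users:
        return False
    recent = [t for t in touch_log.get(user_id, []) if now - t["sent_at"] <= WINDOW]
    return len(recent) < MAX_TOUCHES


now = datetime(2025, 3, 10, 9, 0)
touch_log = {
    "u1": [
        {"channel": "email", "sent_at": now - timedelta(days=1)},
        {"channel": "push", "sent_at": now - timedelta(days=2)},
        {"channel": "ads", "sent_at": now - timedelta(days=3)},
    ],
    "u2": [{"channel": "email", "sent_at": now - timedelta(days=10)}],
}
converted_users = {"u3"}
decisions = {u: should_send(u, now, touch_log, converted_users) for u in ["u1", "u2", "u3", "u4"]}
```

Because the log mixes email, push, and ad touches, a user capped on one channel is capped on all of them.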

QA and governance, baked in

- Adopt the NIST AI Risk Management Framework’s Govern–Map–Measure–Manage cycle as your backbone for generative content programs; see the NIST AI RMF official page (2024–2025) for scope and artifacts.

- Align lifecycle risk controls to ISO/IEC 23894 (AI risk management) for processes like bias checks, transparency, and continuous improvement; see this practical ISO/IEC 23894 explainer (itSMF, 2023) for structure.

- Content QA gates: brand voice check, claims substantiation, sensitive-category review, and synthetic‑media disclosure policy.
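
Some of these gates can be automated as a pre-publish check. The sketch below covers claims, sensitive categories, and synthetic-media disclosure; the pattern lists are placeholders that brand and legal teams would own, and a brand voice check is left out because it usually needs a classifier or human review.

```python
import re

# Hypothetical rules for illustration only.
BANNED_CLAIMS = [r"\bguaranteed?\b", r"\brisk[- ]free\b", r"\bcures?\b"]
SENSITIVE_TERMS = {"loan", "diagnosis", "credit score"}


def run_qa_gates(copy, uses_synthetic_media=False, has_disclosure=False):
    """Return the names of failed gates; an empty list means the copy may ship."""
    failures = []
    lowered = copy.lower()
    if any(re.search(pattern, lowered) for pattern in BANNED_CLAIMS):
        failures.append("claims_substantiation")
    if any(term in lowered for term in SENSITIVE_TERMS):
        failures.append("sensitive_category_review")
    if uses_synthetic_media and not has_disclosure:
        failures.append("synthetic_media_disclosure")
    return failures


flagged = run_qa_gates("Guaranteed results on your loan")
clean_copy = run_qa_gates("Fresh picks for your weekend run")
undisclosed = run_qa_gates("Meet your new guide", uses_synthetic_media=True)
```

A failed gate should route the variant to a human reviewer rather than silently drop it, so the defect gets logged.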

Measurement and experimentation discipline

- Define primary KPIs (e.g., conversion rate, revenue/visitor) and guardrails (e.g., bounce, latency, complaint rate) per channel.

- Use holdouts and statistical power planning to avoid false positives. Guidance from Optimizely and practitioners underscores disciplined run times (letting each test reach its planned duration) and explicit significance thresholds; see this digest of Optimizely web experimentation practices (2024) for a refresher.

- Maintain an experiment registry and standardized KPI definitions so results are comparable across teams and quarters.
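
Power planning can be done with the standard library. The function below uses the usual normal-approximation sample size formula for comparing two conversion rates with a two-sided test; the baseline and target rates are made-up inputs, and an experimentation platform may use a different (for example, sequential) method.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(baseline_cr, expected_cr, alpha=0.05, power=0.80):
    """Visitors needed per arm to detect baseline_cr -> expected_cr."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    pooled = (baseline_cr + expected_cr) / 2
    numerator = (
        z_alpha * sqrt(2 * pooled * (1 - pooled))
        + z_beta * sqrt(
            baseline_cr * (1 - baseline_cr) + expected_cr * (1 - expected_cr)
        )
    ) ** 2
    return ceil(numerator / (expected_cr - baseline_cr) ** 2)


n_large_effect = sample_size_per_arm(0.04, 0.05)   # 4% -> 5%
n_small_effect = sample_size_per_arm(0.04, 0.044)  # 4% -> 4.4%
```

Detecting a one-point lift from a 4% baseline already needs several thousand visitors per arm, and smaller expected lifts need far more, which is why low-traffic placements rarely support many simultaneous variants.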

Pro tip: Document the entire chain (segments → prompts → outputs → variants → results). This single source of truth is invaluable for audits, training, and post‑mortems.

2) Channel playbooks: what to personalize and how to measure it

To scale responsibly, tune your playbook by channel. Here’s what typically works and how to avoid common traps.

Web/SEO

- Personalized modules: hero headlines, dynamic product/category blocks, case study tiles, FAQs by segment.

- Technical delivery: use server‑side rendering or edge functions to reduce layout shift and measurement bias.

- Measurement: run A/B/n tests with holdouts; attribute revenue impact; keep an eye on crawlability and canonicalization as variants proliferate. For SEO‑safe content generation workflows and variant hygiene, see our practical guide on Generative Engine Optimization for beginners.

Email

- Personalize subject lines, hero copy, product grids, and CTAs based on segment and real‑time behavior.

- Guardrails: frequency caps by user; deliverability monitoring (seed tests, spam‑trap watchlists); coordinate suppression post‑conversion.

- Experimentation: deploy multi‑armed bandits to explore templates while converging on winners.
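
One common multi-armed bandit is Thompson sampling with a Beta prior per template. The sketch below is a simulation: the class name, template names, and "true" conversion rates are invented for illustration, and real feedback (opens, clicks) arrives with delay that this toy loop ignores.

```python
import random


class ThompsonSampler:
    """Beta-Bernoulli Thompson sampling over a fixed set of arms."""

    def __init__(self, arms, seed=None):
        # [alpha, beta] per arm, starting from a uniform Beta(1, 1) prior.
        self.stats = {arm: [1, 1] for arm in arms}
        self.rng = random.Random(seed)

    def choose(self):
        """Sample a plausible rate for each arm and play the best draw."""
        draws = {
            arm: self.rng.betavariate(alpha, beta)
            for arm, (alpha, beta) in self.stats.items()
        }
        return max(draws, key=draws.get)

    def update(self, arm, converted):
        """Record a success (alpha += 1) or a failure (beta += 1)."""
        self.stats[arm][0 if converted else 1] += 1


true_rates = {"template_a": 0.05, "template_b": 0.20}  # simulated, not real data
sampler = ThompsonSampler(list(true_rates), seed=7)
environment = random.Random(11)
pulls = {arm: 0 for arm in true_rates}
for _ in range(3000):
    arm = sampler.choose()
    pulls[arm] += 1
    sampler.update(arm, environment.random() < true_rates[arm])
```

Over the run, sends shift toward the stronger template while the weaker one still receives occasional exploration traffic.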

Social and paid ads

- Use DCO (dynamic creative optimization) to mix/match elements by audience; enforce brand safety with pre‑flight filters.

- Suppression lists: align with owned channels to avoid waste. Measure incremental lift using geo or audience‑level holdouts.

Mobile/app

- Personalize onboarding sequences, in‑app recommendations, and triggered push/in‑app messages.

- Respect device constraints (battery, data) and honor OS‑level tracking preferences (for example, App Tracking Transparency on iOS).

Chat/assistants

- Retrieval‑grounded assistants that remember context across sessions provide tailored help and post‑purchase guidance.

- Safety rails: escalation to human agents for sensitive issues; log and review conversations for QA and prompt improvements.

Reference documentation from Adobe on personalization orchestration is a solid foundation for teams building cross‑channel governance and measurement, e.g., the Adobe Journey Optimizer personalization overview (Adobe, 2024/25).

3) What “good” looks like: quantified case evidence

Vendor‑verified customer stories offer concrete, defensible benchmarks to calibrate expectations.

- Coca‑Cola with Adobe Journey Optimizer reported a 117% increase in clicks and a 36% revenue lift from 1:1 product recommendations, among other gains, per the official Adobe Coca‑Cola case study (Adobe, 2024/25).

- Signify (Philips Hue) saw +55% net merchandised value and 50% faster page loads while compressing campaign cycles from months to days, per the Adobe Signify case (Adobe, 2024/25).

Treat these as directional targets; your lift depends on data readiness, offer quality, and test rigor.

4) Advanced techniques that separate leaders from dabblers

Contextual bandits and uplift modeling

- Use adaptive allocation to explore and exploit creative variants while directly optimizing for incremental outcomes. Compared with fixed-split A/B testing, this sends less traffic to losing variants and speeds up learning.
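
The simplest form of uplift estimation is a per-segment difference in conversion between randomized treatment and control groups. The sketch below assumes records from a properly randomized test; the record schema and the synthetic counts are illustrative, and production uplift models typically use many features rather than a single segment label.

```python
from collections import defaultdict


def segment_uplift(records):
    """Treatment minus control conversion rate for each segment."""
    counts = defaultdict(lambda: {"t_n": 0, "t_conv": 0, "c_n": 0, "c_conv": 0})
    for record in records:
        bucket = counts[record["segment"]]
        prefix = "t" if record["treated"] else "c"
        bucket[f"{prefix}_n"] += 1
        bucket[f"{prefix}_conv"] += int(record["converted"])
    uplift = {}
    for segment, c in counts.items():
        if c["t_n"] and c["c_n"]:  # need both groups to estimate uplift
            uplift[segment] = c["t_conv"] / c["t_n"] - c["c_conv"] / c["c_n"]
    return uplift


def make_records(segment, treated, n, conversions):
    """Build n synthetic records, the first `conversions` of which converted."""
    return [
        {"segment": segment, "treated": treated, "converted": i < conversions}
        for i in range(n)
    ]


records = (
    make_records("new", True, 100, 12)
    + make_records("new", False, 100, 8)
    + make_records("loyal", True, 100, 20)
    + make_records("loyal", False, 100, 21)
)
uplift = segment_uplift(records)
targets = [segment for segment, value in uplift.items() if value > 0]
```

In this synthetic data the loyal segment converts more often overall but gains nothing from the treatment, so only the new segment is worth targeting.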

Retrieval‑Augmented Generation (RAG)

- Ground generative copy on your catalog, knowledge base, and policies to reduce hallucinations and keep claims compliant.
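
The retrieval step can be illustrated with a toy keyword-overlap ranker. This is a deliberate simplification: production RAG systems usually rank with embeddings and a vector index, and the documents, IDs, and function names here are invented for the example.

```python
import re


def tokenize(text):
    """Lowercase alphanumeric tokens as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Rank documents by token overlap with the query; drop non-matches."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(d["text"])), d) for d in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_grounded_prompt(task, facts):
    """Put retrieved facts in the prompt and restrict the model to them."""
    fact_lines = "\n".join(f"- [{d['id']}] {d['text']}" for d in facts)
    return f"{task}\nUse only these facts and cite their IDs:\n{fact_lines}"


documents = [
    {"id": "sku-101", "text": "Trail running shoe with waterproof membrane, 280 g"},
    {"id": "policy-returns", "text": "Returns accepted within 30 days with receipt"},
    {"id": "sku-205", "text": "Road running shoe, lightweight mesh upper"},
]
facts = retrieve("waterproof trail running shoe", documents)
grounded_prompt = build_grounded_prompt("Write a product blurb for trail runners.", facts)
```

Carrying source IDs into the prompt makes it easier to trace a published claim back to the catalog entry that supports it.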

Multimodal creative

- Pair tailored copy with personalized images or short video intros at scale for premium accounts or high‑value cohorts.

Privacy‑by‑design

- Explore federated learning to keep data local; apply differential privacy where appropriate. Both fit the privacy and risk-treatment expectations of frameworks such as the NIST AI RMF and ISO/IEC 23894.

5) Governance, risk, and compliance you can’t ignore in 2025

Personalization is only as scalable as your risk controls.

Program governance

- Use the NIST AI RMF to structure roles, risk identification, metrics, and continuous improvement (2024–2025).

- Map your lifecycle controls to ISO/IEC 23894’s risk management processes; the ISO/IEC 23894 practical overview (2023) is a helpful primer.

Regulatory timelines

- The EU AI Act entered into force in 2024 with phased obligations through 2027; practitioners should track near‑term dates in 2025/2026. See a clear legal timeline explainer in Goodwin’s EU AI Act implementation timeline (2024).

- In the U.S., existing advertising law applies to AI‑generated or targeted content; ensure disclosures are clear and avoid deceptive claims per the FTC’s Advertising & Marketing business guidance (ongoing).

Content integrity and disclosure

- Establish a policy for synthetic‑media disclosure and watermarking where feasible. Keep model cards/fact sheets noting intended use, limitations, and known failure modes.

Data rights and consent

- Centralize consent and ensure propagation to all downstream systems. Keep data minimization and purpose limitation front and center.
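
Consent propagation can be audited by comparing the central consent record with each downstream audience. The system names and data shapes below are assumptions; a real audit would pull audience exports from each platform.

```python
def consent_violations(consent, downstream_audiences):
    """List users present in a downstream audience without recorded consent."""
    violations = {}
    for system, audience in downstream_audiences.items():
        # Users missing from the central record are treated as not consented.
        leaked = sorted(u for u in audience if not consent.get(u, False))
        if leaked:
            violations[system] = leaked
    return violations


central_consent = {"u1": True, "u2": False, "u3": True}
downstream_audiences = {
    "esp": {"u1", "u3"},
    "ad_platform": {"u1", "u2"},
    "push_provider": {"u3", "u9"},
}
violations = consent_violations(central_consent, downstream_audiences)
```

Treating unknown users as non-consented is the conservative default; here it surfaces both an opted-out user still in an ad audience and an untracked user in the push list.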

6) The operating model: who does what, and how to scale

A durable program needs a cross‑functional pod with a clear product owner for personalization.

Core pod

- Growth/Marketing lead: owns the P&L for personalization.

- Content lead: brand voice and templates.

- Data science/ML: segmentation and uplift models.

- Marketing ops: orchestration and delivery.

- Legal/Privacy: governance.

- Design/Creative: assets and templates.

- Engineering: integrations and performance.

Working rhythms

- Weekly standups on experiment pipelines and QA defects; monthly governance reviews; quarterly roadmap updates.

- Training: office hours for prompt reviews, template libraries, and brand voice tune‑ups.

Stack patterns I’ve found reliable

- CDP + experimentation + orchestration + CMS/ESP/ad platforms + prompt/version management + analytics.

- For content teams that want AI-native writing, SEO optimization, multilingual generation, and WordPress publishing in one place, platforms like QuickCreator combine AI drafting with block-based editing, SERP-aware optimization, and team workflows. Use this kind of system to standardize prompt templates, enforce QA gates, and operationalize publishing while your CDP governs data and audiences.

For practitioners who need a primer before tackling hyper‑personalization, this step-by-step overview of using AI for content creation can help teammates get up to speed on core workflows.

7) Measurement frameworks and ROI math you can take to finance

Incremental revenue lift

- Incremental revenue = (Treatment CR − Control CR) × traffic per period × AOV × number of periods, where CR is conversion rate and AOV is average order value.
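
A worked instance of the incremental revenue formula, using invented numbers (a 4.0% control rate, a 4.6% treatment rate, 50,000 weekly visitors, an 80-unit average order value, four weeks):

```python
def incremental_revenue(treatment_cr, control_cr, traffic_per_period, aov, periods):
    """Extra revenue attributable to the treatment over the test window."""
    return (treatment_cr - control_cr) * traffic_per_period * aov * periods


revenue_lift = incremental_revenue(
    treatment_cr=0.046,
    control_cr=0.040,
    traffic_per_period=50_000,  # visitors per week (assumed)
    aov=80.0,                   # average order value (assumed)
    periods=4,                  # weeks
)
```

With these inputs the lift is about 300 extra orders per week, or roughly 96,000 in revenue over the four weeks. Traffic should count only visitors exposed to the treatment, not total site traffic.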

Segment‑level value

- Measure heterogeneous effects: a 2‑pt lift on high‑LTV cohorts often beats a 5‑pt lift on low‑value traffic.
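
To make the comparison concrete, the sketch below values a lift by cohort. The cohort sizes and per-conversion values are illustrative assumptions, and "lift points" means percentage points of conversion rate.

```python
def incremental_value(users, lift_points, value_per_conversion):
    """Expected extra value from a conversion lift given in percentage points."""
    return users * (lift_points / 100) * value_per_conversion


high_ltv = incremental_value(users=10_000, lift_points=2, value_per_conversion=1200)
low_value = incremental_value(users=10_000, lift_points=5, value_per_conversion=150)
```

With equal cohort sizes, the 2-point lift on the high-LTV cohort is worth about 240,000 against about 75,000 for the 5-point lift on low-value traffic.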

ROI

- ROI = (Incremental profit − Program cost) / Program cost, where costs include platform licenses, model/API usage, data/engineering labor, and creative ops.
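
The same calculation in code, with made-up cost lines and an assumed 40% gross margin to convert incremental revenue into incremental profit:

```python
def program_roi(incremental_revenue, gross_margin, costs):
    """(Incremental profit - program cost) / program cost."""
    incremental_profit = incremental_revenue * gross_margin
    program_cost = sum(costs.values())
    return (incremental_profit - program_cost) / program_cost


costs = {
    "platform_licenses": 8_000,
    "model_api_usage": 1_500,
    "data_engineering_labor": 9_000,
    "creative_ops": 2_500,
}
roi = program_roi(incremental_revenue=96_000, gross_margin=0.40, costs=costs)
```

Here incremental profit is 38,400 against 21,000 in costs, an ROI of roughly 0.83. Reporting profit rather than revenue keeps the figure defensible with finance.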

Longitudinal validation

- Use rolling holdouts and cohort analysis to detect novelty effects and to verify sustained uplift.
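
One simple way to spot a novelty effect is to compare early and late lift against a persistent holdout. The window and decay threshold below are arbitrary assumptions, and the weekly rates are synthetic.

```python
def weekly_lift(treatment_cr, holdout_cr):
    """Per-week difference between treatment and holdout conversion rates."""
    return [t - h for t, h in zip(treatment_cr, holdout_cr)]


def novelty_suspected(lifts, window=2, decay_threshold=0.5):
    """Flag when late lift falls below decay_threshold times early lift."""
    if len(lifts) < 2 * window:
        return False  # not enough weeks to compare
    early = sum(lifts[:window]) / window
    late = sum(lifts[-window:]) / window
    return early > 0 and late < decay_threshold * early


fading = weekly_lift([0.052, 0.050, 0.046, 0.043, 0.042, 0.041], [0.040] * 6)
stable = weekly_lift([0.050, 0.051, 0.049, 0.050], [0.040] * 4)
fading_flag = novelty_suspected(fading)
stable_flag = novelty_suspected(stable)
```

A flag is a prompt to investigate, not a verdict: seasonality or a traffic mix shift can produce the same pattern.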

If you need a practical walkthrough on instrumenting revenue impact in enterprise analytics, see Adobe’s guide to automating revenue impact calculation (Adobe, 2024).

8) Foundational checklist to launch in 30–60 days

Week 1–2: Data and governance

- Align on KPIs and guardrails; set up an experiment registry.

- Audit data freshness, consent propagation, and identity resolution.

- Define QA gates (brand, legal, claims) and a disclosure policy for synthetic media.

Week 2–3: Templates and segments

- Draft 6–10 reusable prompt templates with variable slots for your top three segments.

- Build 3–5 deterministic segments (e.g., “first‑time buyer,” “loyalist,” “enterprise evaluator”).

- Prepare retrieval sources (catalog, docs, top FAQs) for grounding.

Week 3–5: Orchestration and delivery

- Connect CDP → CMS/ESP/ad platforms; implement conversion suppression and frequency caps.

- Launch two controlled web tests and one email test with guardrails and holdouts.

- Stand up a QA checklist and review process; log defects and remediation.

Week 5–8: Scale and refine

- Introduce predictive scores or simple contextual bandits for one high‑value placement.

- Add a second channel (e.g., paid social DCO) with shared suppression lists.

- Review results, refine prompts/templates, and publish learnings.

To streamline publishing ops as you scale variants, align your CMS and WordPress workflows. If you need a primer on the mechanics, this guide to WordPress publishing and team workflows outlines practical steps for coordination.

9) Common failure modes (and how to avoid them)

Underpowered tests and novelty bias

- Symptom: big early wins that fade. Fix: power your tests, run them to the planned sample size instead of stopping at the first significant reading, and validate longitudinally with holdouts.

Consent drift and channel misalignment

- Symptom: users continue receiving ads or emails after opt‑out. Fix: centralize consent and propagate to all endpoints; audit suppression joins weekly.

Brand voice and claims inconsistency

- Symptom: tone whiplash, risky promises. Fix: maintain voice/tone guardrails in prompts; route sensitive claims through legal.

Fragmented measurement

- Symptom: conflicting results across teams. Fix: standardize KPI definitions and instrument revenue impact the same way everywhere.

Over‑automation without human review

- Symptom: subtle errors at scale. Fix: human‑in‑the‑loop for sensitive segments, with escalation to domain experts.

10) When (and why) to push personalization further

Once your foundation is stable, push toward:

- Real‑time triggers tied to user actions and context.

- Multimodal creative (copy + image/video) for key accounts or moments.

- Assistant‑style experiences that extend beyond conversion into onboarding and support.

This is where the strategic upside highlighted in McKinsey’s 2025 perspective on the next frontier of personalization becomes tangible—but only if you keep governance and measurement tight.

11) Appendix: guardrails and references worth bookmarking

Governance frameworks

- NIST AI Risk Management Framework (2024–2025)

- ISO/IEC 23894 risk management explainer (2023)

Regulatory and integrity

- EU AI Act implementation timeline (2024)

- FTC Advertising & Marketing guidance (ongoing)

Execution patterns

If your team is still building AI content foundations, the beginner-friendly primer on AI content creation steps to get started will accelerate onboarding before you layer on hyper‑personalization.