AI-Optimized FAQ & HowTo Content for Generative Search (2025)

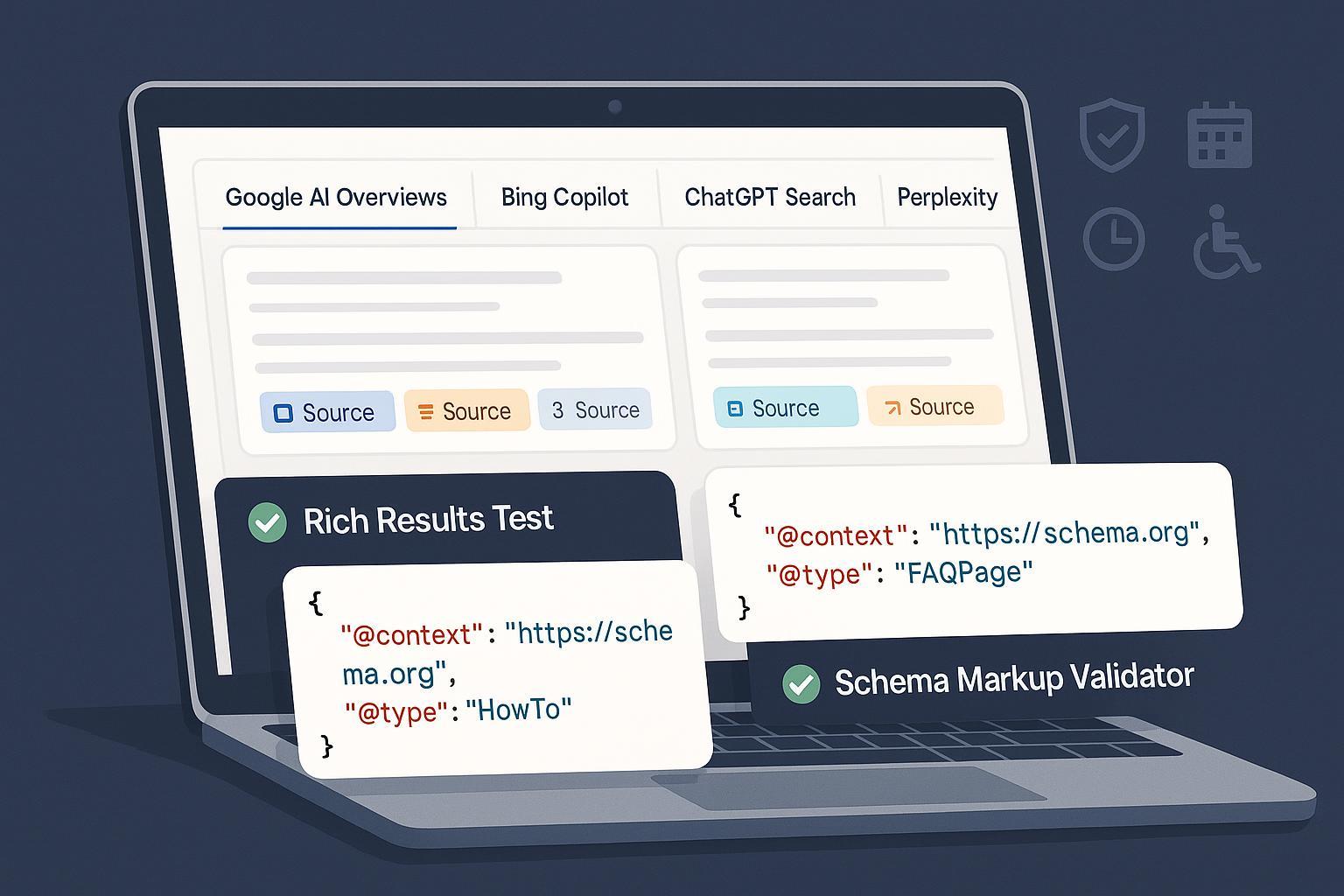

Discover 2025 best practices for FAQPage and HowTo schema, validation workflows, and monitoring to get cited in Google AI Overviews, Bing Copilot, ChatGPT, and Perplexity.

What makes FAQs and HowTos show up in AI Overviews, Copilot, ChatGPT Search, or Perplexity?

Short answer: Content that’s clearly written for people, technically accessible to crawlers, and unambiguously structured is most likely to be cited. There’s no submission switch. You earn inclusion by being useful, understandable, and trustworthy.

Deeper context:

- For Google: Inclusion in AI features is quality-driven; ensure pages are crawlable, indexable, and helpful. Google reiterates there’s no separate “AI Overviews” markup—focus on content quality and technical accessibility, as described in the 2025 guidance in the Search Central documentation on AI features and your website.

- For Bing Copilot: Generative answers show citations; make sure your content is authoritative, readable, and answers the query directly. Microsoft’s April 2025 announcement highlights Copilot Search’s publisher-friendly citation behavior in the Bing blog on Copilot Search.

- For ChatGPT Search: OpenAI confirms results include links to relevant sources—your job is to provide succinct, trustworthy explanations and well-structured answers so the model can attribute you. See the OpenAI announcement on ChatGPT Search.

- For Perplexity: Its Deep Research runs many searches and produces source-backed reports, which means clear, evidence-supported content earns visibility. Read the Perplexity Deep Research overview.

Practical takeaway: Align page intent to user intent (a question deserves a concise, direct answer; a task deserves step-by-step instructions). Use plain language, surface your evidence and credentials, and make the page technically simple to crawl.

Does FAQPage markup still help in 2025 given Google’s rich result restrictions?

Short answer: Yes—use FAQPage markup when you have genuine on-page Q&A. Even though rich result eligibility narrowed in 2023, FAQPage still helps search engines and LLMs correctly interpret your content and can support inclusion in generative answers.

Details:

- Google reduced FAQ rich results primarily to well-known government and health sites in 2023, but the underlying schema remains valid and useful when used properly. See Google’s 2025 documentation on FAQPage structured data.

- Only mark up Q&A that is visible to users and non-promotional. Make sure each question has a direct, single accepted answer.

- On-page: Q&A is visible and matches the JSON-LD text exactly.

- Schema: mainEntity is an array of Question objects; each Question has name and acceptedAnswer with Answer.text.

- Tools: Validate with the Rich Results Test and then with the Schema Markup Validator.
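The schema bullet above can be checked mechanically before you reach for the validators. The following is a minimal sketch, not a substitute for the Rich Results Test: `check_faq_jsonld` is a hypothetical helper name, and it only covers the shape described in the checklist (a `mainEntity` array of `Question` objects, each with `name` and `acceptedAnswer.text`).

```python
import json


def check_faq_jsonld(raw: str) -> list[str]:
    """Return the problems found in a FAQPage JSON-LD string (empty list = none found)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict) or data.get("@type") != "FAQPage":
        return ["top-level object is not a FAQPage"]

    entities = data.get("mainEntity")
    if not isinstance(entities, list) or not entities:
        return ["mainEntity must be a non-empty array of Question objects"]

    problems = []
    for i, question in enumerate(entities):
        if not isinstance(question, dict) or question.get("@type") != "Question":
            problems.append(f"mainEntity[{i}] is not a Question")
            continue
        name = question.get("name")
        if not isinstance(name, str) or not name.strip():
            problems.append(f"mainEntity[{i}] has no name")
        answer = question.get("acceptedAnswer")
        text = answer.get("text") if isinstance(answer, dict) else None
        if not isinstance(text, str) or not text.strip():
            problems.append(f"mainEntity[{i}] has no acceptedAnswer.text")
    return problems


# Illustrative input: one Question whose Answer is missing its text.
broken = json.dumps({
    "@context": "https://schema.org",
    "@type": "FAQPage",
    "mainEntity": [
        {"@type": "Question", "name": "What is schema markup?",
         "acceptedAnswer": {"@type": "Answer"}},
    ],
})
print(check_faq_jsonld(broken))
```

An empty list only means this sketch found nothing; eligibility and policy checks still belong to the official tools.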

Which HowTo properties matter most in 2025, and how should I write steps?

Short answer: Provide a clear task name, ordered steps with names plus human-readable instructions, and add images/tools/supplies/total time when relevant. Keep the steps short and imperative.

Key requirements and tips:

- Use HowTo with ordered HowToStep (or HowToSection) objects. Each step needs a name and text that matches what users see on the page.

- Enrich where applicable: images for steps, tools and supplies lists, totalTime, and results.

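- Use the schema.org HowTo type definition as your reference. Google retired HowTo rich results in 2023, so the markup no longer produces a rich result there; its remaining value is in describing the procedure unambiguously.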

- Steps: Each HowToStep has name and text; order is logical and matches the UI.

- Enrichment: Include totalTime, tool, supply, and step images when helpful and accurate.

- Tools: Validate with the Schema Markup Validator. The Rich Results Test reports only types that Google still supports as rich results, so it may not surface HowTo.
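One way to keep steps ordered and complete is to generate the markup from the same step list that renders the page. This is a sketch under stated assumptions: `build_howto` and `iso_duration` are hypothetical helpers, steps arrive as `(name, text)` pairs in display order, and `position` is added as an optional schema.org `ListItem` property.

```python
import json


def iso_duration(total_minutes: int) -> str:
    """Format whole minutes as an ISO 8601 duration, e.g. 30 -> 'PT30M', 90 -> 'PT1H30M'."""
    if total_minutes < 0:
        raise ValueError("total_minutes must not be negative")
    hours, minutes = divmod(total_minutes, 60)
    if hours and minutes:
        return f"PT{hours}H{minutes}M"
    if hours:
        return f"PT{hours}H"
    return f"PT{minutes}M"


def build_howto(name, steps, total_minutes=None, tools=(), supplies=()):
    """Build a HowTo dict from (step_name, step_text) pairs, keeping the given order."""
    if not steps:
        raise ValueError("a HowTo needs at least one step")
    doc = {"@context": "https://schema.org", "@type": "HowTo", "name": name}
    if total_minutes is not None:
        doc["totalTime"] = iso_duration(total_minutes)
    if tools:
        doc["tool"] = list(tools)
    if supplies:
        doc["supply"] = list(supplies)
    doc["step"] = []
    for position, (step_name, step_text) in enumerate(steps, start=1):
        # Every step needs both a short name and human-readable instructions.
        if not step_name.strip() or not step_text.strip():
            raise ValueError(f"step {position} needs both a name and text")
        doc["step"].append({
            "@type": "HowToStep",
            "position": position,
            "name": step_name.strip(),
            "text": step_text.strip(),
        })
    return doc


howto = build_howto(
    "Run a 30-minute schema audit",
    [("Crawl your site", "Export all URLs and detect existing JSON-LD."),
     ("Validate priority pages", "Use Rich Results Test on top landing pages by organic sessions.")],
    total_minutes=30,
    tools=["Crawler", "Text Editor"],
)
print(json.dumps(howto, indent=2))
```

Building from one source list means the markup cannot drift from the order users see, provided the page template reads the same list.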

Can you show a minimal, correct JSON-LD for FAQPage and HowTo?

Quick examples you can copy and adapt. Ensure your on-page content matches the markup exactly.

FAQPage (minimal, compliant):

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What is schema markup?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Schema markup is structured data that helps search engines understand your content."
      }
    },
    {
      "@type": "Question",
      "name": "How do I fix FAQ schema errors?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Ensure all required fields are included and validate your JSON-LD syntax in the Rich Results Test."
      }
    }
  ]
}
```

HowTo (ordered steps; enriched):

```json
{
  "@context": "https://schema.org",
  "@type": "HowTo",
  "name": "Run a 30-minute schema audit",
  "totalTime": "PT30M",
  "tool": ["Crawler", "Text Editor"],
  "supply": ["Sitemap", "URL List"],
  "step": [
    {"@type": "HowToStep", "name": "Crawl your site", "text": "Export all URLs and detect existing JSON-LD."},
    {"@type": "HowToStep", "name": "Validate priority pages", "text": "Use Rich Results Test on top landing pages by organic sessions."},
    {"@type": "HowToStep", "name": "Fix errors and connect entities", "text": "Add @id links for Organization and Person across pages."}
  ]
}
```

Do/Don’t:

- Do ensure your JSON-LD is present when the page first renders, ideally in the initial HTML response, and that it mirrors the visible content.

- Don’t mark up promotional blurbs or content that doesn’t actually answer a user question or describe a procedure.

How should I phrase questions and answers so LLMs can extract them reliably?

Short answer: Write like a great support engineer—concise question, direct answer in the first sentence, then optional detail or caveats.

- Keep one intent per question. Avoid multi-part questions unless you split them.

- Put the “bottom line up front.” One crisp answer sentence, then elaboration.

- Use simple language and avoid brand jargon. If a term is necessary, define it once.

- Add context examples when ambiguity exists (e.g., for “pricing,” specify region or plan).

- Use accessible headings (H2/H3) and anchor links for each Q&A block so models can deep-link if needed.

Cross-check: Ensure the Q&A text in your HTML exactly matches the JSON-LD Answer.text. This alignment reduces misinterpretation.
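The cross-check above can be automated with the standard library alone. This is a rough sketch, assuming the page HTML is already available as a string and that whitespace differences are acceptable; `unmatched_answers` is a hypothetical helper that reports FAQ questions whose `Answer.text` does not appear in the visible text.

```python
import json
import re
from html.parser import HTMLParser


class _PageText(HTMLParser):
    """Collect visible text and the raw contents of JSON-LD script tags."""

    def __init__(self):
        super().__init__()
        self.visible = []   # text found outside <script> and <style>
        self.jsonld = []    # one string per application/ld+json script
        self._mode = None   # None = visible text; "jsonld" or "skip" inside script/style
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            is_jsonld = dict(attrs).get("type") == "application/ld+json"
            self._mode, self._buffer = ("jsonld" if is_jsonld else "skip"), []
        elif tag == "style":
            self._mode = "skip"

    def handle_endtag(self, tag):
        if tag == "script" and self._mode == "jsonld":
            self.jsonld.append("".join(self._buffer))
        if tag in ("script", "style"):
            self._mode = None

    def handle_data(self, data):
        if self._mode == "jsonld":
            self._buffer.append(data)
        elif self._mode is None:
            self.visible.append(data)


def _normalize(text):
    """Collapse runs of whitespace so line wrapping does not cause false mismatches."""
    return re.sub(r"\s+", " ", text).strip()


def unmatched_answers(html):
    """Return names of FAQ questions whose Answer.text is not found in the visible text."""
    parser = _PageText()
    parser.feed(html)
    parser.close()
    visible = _normalize("".join(parser.visible))
    missing = []
    for raw in parser.jsonld:
        data = json.loads(raw)
        if not isinstance(data, dict) or data.get("@type") != "FAQPage":
            continue
        for question in data.get("mainEntity", []):
            answer = _normalize(question.get("acceptedAnswer", {}).get("text", ""))
            if not answer or answer not in visible:
                missing.append(question.get("name", ""))
    return missing
```

Call it with the rendered HTML of a page; any question name it returns points at copy that has drifted from the markup. The sketch compares text only after whitespace normalization, so punctuation or wording changes still count as mismatches, which is the intent.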

What credibility (E‑E‑A‑T) signals matter for generative citations?

Short answer: Real authors, real expertise, transparent sourcing, and consistent entity markup.

Implementable checklist:

- Authors: Use clear bylines with bios and link to authoritative profiles. Consider Article markup that names the author as a Person and the publisher as an Organization, following Google’s Article structured data documentation (updated 2024–2025).

- Entity consistency: Use Organization markup with logo and sameAs; connect author pages to the org and to public profiles.

- Citations: When you state facts, link to primary sources. Keep it sparse but strong.

- Editorial standards: Show update logs, review processes, and contact/complaint paths on your site.

Why it matters: Google’s focus on people-first content and experience remains central in 2025 (see Search Central’s 2025 guidance on AI features and your website). Other engines also reward clarity, evidence, and accountable authorship.
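The entity-consistency items in the checklist can be expressed as one JSON-LD `@graph` in which the Article points at the author and publisher by `@id`. This is an illustrative sketch: `build_entity_graph` is a hypothetical helper, the `#organization` and `#person` fragment identifiers are a naming convention assumed here, and every URL in the usage example is a placeholder.

```python
import json


def build_entity_graph(site, org_name, logo, org_profiles,
                       author_name, author_path, author_profiles,
                       headline, page_path):
    """Return a JSON-LD graph linking an Article to its author and publisher by @id."""
    org_id = f"{site}/#organization"          # assumed @id convention
    person_id = f"{site}{author_path}#person"  # assumed @id convention
    return {
        "@context": "https://schema.org",
        "@graph": [
            {
                "@type": "Organization",
                "@id": org_id,
                "name": org_name,
                "url": site,
                "logo": logo,
                "sameAs": list(org_profiles),
            },
            {
                "@type": "Person",
                "@id": person_id,
                "name": author_name,
                "url": f"{site}{author_path}",
                "worksFor": {"@id": org_id},
                "sameAs": list(author_profiles),
            },
            {
                "@type": "Article",
                "headline": headline,
                "url": f"{site}{page_path}",
                "author": {"@id": person_id},
                "publisher": {"@id": org_id},
            },
        ],
    }


# Placeholder values for illustration only.
graph = build_entity_graph(
    site="https://example.com",
    org_name="Example Co",
    logo="https://example.com/logo.png",
    org_profiles=["https://www.linkedin.com/company/example"],
    author_name="Jane Doe",
    author_path="/authors/jane-doe",
    author_profiles=["https://www.linkedin.com/in/janedoe"],
    headline="How to audit your schema",
    page_path="/guides/schema-audit",
)
print(json.dumps(graph, indent=2))
```

Reusing the same `@id` values on every page is what lets a parser recognize that the author and organization are the same entities across the site.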

How often should I update FAQs/HowTos, and how do dates factor into eligibility?

Short answer: Update when facts change or when you add meaningfully better explanations. Keep a single, prominent date and align it with structured data.

Operational tips:

- Show one primary date (Published or Updated) to users. Show both only when the distinction helps the reader, and label each one clearly.

- Align on-page date with JSON-LD datePublished/dateModified. Avoid future dates and misleading timestamps. Google’s date guidance explains best practices in the Publication dates documentation.

- Maintain a brief change log for high-value pages so readers and models can see what changed and when.

- Refresh Q&As that reference policies, pricing, or procedures at least quarterly—or sooner if accuracy drifts.
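The date rules above lend themselves to a small consistency check. This is a sketch under simple assumptions: all three values are plain `YYYY-MM-DD` strings, `check_dates` is a hypothetical helper, and `today` is injectable so the check can be tested.

```python
from datetime import date


def check_dates(visible, published, modified=None, today=None):
    """Return problems with a page's visible date versus datePublished/dateModified."""
    today = today or date.today()
    shown = date.fromisoformat(visible)
    pub = date.fromisoformat(published)
    mod = date.fromisoformat(modified) if modified else None

    problems = []
    if pub > today:
        problems.append("datePublished is in the future")
    if mod and mod > today:
        problems.append("dateModified is in the future")
    if mod and mod < pub:
        problems.append("dateModified is earlier than datePublished")
    if shown not in {pub, mod}:
        problems.append("visible date matches neither datePublished nor dateModified")
    return problems


print(check_dates("2025-03-10", "2024-11-02", "2025-03-10", today=date(2025, 6, 1)))
# -> []
print(check_dates("2025-05-01", "2024-11-02", "2025-03-10", today=date(2025, 6, 1)))
# -> ['visible date matches neither datePublished nor dateModified']
```

If your markup carries full timestamps rather than calendar dates, you would need to parse them with `datetime.fromisoformat` and compare the date parts instead.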

How do I validate implementation and catch errors before shipping?

Short answer: Use a two-step validation: Google’s Rich Results Test first, then the Schema Markup Validator. Confirm crawlability and indexability before you publish.

Preflight checklist:

- Validate page-level JSON-LD syntax and eligibility in the Rich Results Test.

- Confirm Schema.org compliance in the Schema Markup Validator.

- Inspect the URL with Google Search Console to confirm it returns HTTP 200 and that neither robots.txt rules nor a noindex directive blocks it.

- Test your staging and production versions after every CMS/theme update.

- Re-validate top templates quarterly.

Common failure modes and fixes:

- Missing Answer.text in FAQPage → Add the missing property and re-validate.

- Steps without text in HowTo → Add clear, human-readable instructions per step.

- Markup not matching visible content → Align the HTML copy and JSON-LD exactly.
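Part of the preflight checklist can run offline, before a page ever reaches Search Console. The sketch below assumes you already have the robots.txt body and the page HTML as strings; `crawl_blockers` is a hypothetical helper, and it does not cover an `X-Robots-Tag` HTTP header or the HTTP status code, which still need a live request.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class _RobotsMeta(HTMLParser):
    """Detect <meta name="robots" content="...noindex..."> (or name="googlebot")."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        content = (attr.get("content") or "").lower()
        if name in ("robots", "googlebot") and "noindex" in content:
            self.noindex = True


def crawl_blockers(url, robots_txt, html, user_agent="Googlebot"):
    """Return reasons a crawler might skip this URL, based on robots.txt and meta tags."""
    blockers = []

    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    if not rules.can_fetch(user_agent, url):
        blockers.append("robots.txt disallows this URL")

    meta = _RobotsMeta()
    meta.feed(html)
    meta.close()
    if meta.noindex:
        blockers.append("meta robots tag contains noindex")
    return blockers


robots = "User-agent: *\nDisallow: /drafts/\n"
page = '<html><head><meta name="robots" content="noindex, follow"></head><body></body></html>'
print(crawl_blockers("https://example.com/drafts/faq", robots, page))
# -> ['robots.txt disallows this URL', 'meta robots tag contains noindex']
```

Running this against staging output after each CMS or theme update is a cheap way to catch a stray noindex before it ships.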

How can I measure whether my pages are getting cited in AI answers across engines?

Short answer: Combine manual checks, analytics, and optional third-party monitors.

Practical workflow:

- Manual spot checks: Run a weekly list of priority queries in Google (with AI features), Bing Copilot, ChatGPT Search, and Perplexity; record screenshots and the cited domains. Bing’s behavior is detailed in the 2025 Copilot Search blog announcement, and Perplexity’s source panels are described in their Deep Research post. ChatGPT’s sourcing is confirmed in the OpenAI ChatGPT Search announcement.

- Analytics: Build GA4 custom channel groupings to capture traffic from these platforms where referrers exist; triangulate with direct traffic lifts around content updates.

- Server logs: Check for distinct referrers; note that some AI engines may obscure them.

- Optional tools: Platforms like Geneo can be used to monitor multi-engine AI citations and brand mentions across ChatGPT, Perplexity, and Google’s AI features. Disclosure: Geneo is our product.
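For the analytics and server-log steps above, a small classifier can bucket referrer URLs by engine. This is only a sketch: the hostnames in `AI_REFERRER_DOMAINS` are assumptions to verify against your own logs, the function names are hypothetical, and visits from Google's AI features generally cannot be separated from ordinary Google referrals this way.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer hostnames; confirm against real log data before relying on them.
AI_REFERRER_DOMAINS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer):
    """Return the engine name for a full referrer URL, or None if it is not recognized."""
    host = (urlparse(referrer).hostname or "").lower()
    for domain, engine in AI_REFERRER_DOMAINS.items():
        # Match the domain itself or any subdomain, but not look-alike hosts.
        if host == domain or host.endswith("." + domain):
            return engine
    return None


def tally_ai_referrers(referrers):
    """Count recognized AI-engine referrals in an iterable of referrer URLs."""
    return Counter(engine for engine in map(classify_referrer, referrers) if engine)


sample = [
    "https://www.perplexity.ai/search?q=faq+schema",
    "https://chatgpt.com/",
    "https://perplexity.ai/",
    "https://www.google.com/",
    "",
]
print(tally_ai_referrers(sample))
```

Empty or missing referrers fall through to `None`, which matches the caveat that some engines obscure them.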

Optional extended reading: For distribution strategies that can influence LLM sourcing (e.g., high-signal community discussions), see our guide on Reddit communities best practices for AI search citations.

What KPIs should I watch as I iterate?

Short answer: Track visibility and quality, not just clicks.

KPI set to consider:

- Inclusion coverage: Number of target queries where your domain appears as a cited source across engines.

- Content accuracy: Rate of corrections/updates per month; time-to-fix after policy changes.

- Engagement: On-page engagement for FAQ/HowTo landing pages; scroll depth; time on page.

- Assistance signals: Reduction in support tickets for topics covered by new/updated FAQs.

- SEO health: Indexation status and structured data error counts in GSC.

Tip: Add annotations when you ship significant content or schema changes so you can correlate KPI movements with releases.
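The inclusion coverage KPI is simple enough to compute from a spreadsheet export of your spot checks. A minimal sketch, assuming each record is an `(engine, query, cited_domains)` tuple; the record shape and the `inclusion_coverage` name are illustrative, not a standard.

```python
from collections import defaultdict


def inclusion_coverage(checks, domain):
    """Return {engine: (queries_cited, queries_checked, share)} for one domain."""
    checked = defaultdict(set)  # engine -> queries that were run
    cited = defaultdict(set)    # engine -> queries where the domain was cited
    for engine, query, cited_domains in checks:
        checked[engine].add(query)
        if domain in cited_domains:
            cited[engine].add(query)
    return {
        engine: (len(cited[engine]), len(queries), len(cited[engine]) / len(queries))
        for engine, queries in checked.items()
    }


# Illustrative spot-check log; domains and queries are placeholders.
log = [
    ("Google", "what is faq schema", ["example.com", "schema.org"]),
    ("Google", "howto schema steps", ["schema.org"]),
    ("Perplexity", "what is faq schema", ["example.com"]),
]
print(inclusion_coverage(log, "example.com"))
# -> {'Google': (1, 2, 0.5), 'Perplexity': (1, 1, 1.0)}
```

Tracking the share per engine over time, alongside release annotations, makes it easier to tell whether a content or schema change moved the number.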

Are there differences in how I should optimize for Google vs. Bing vs. ChatGPT vs. Perplexity?

Short answer: The fundamentals are the same—useful, accurate content in accessible formats—but the presentation and citation patterns differ.

Practical distinctions:

- Google: No special markup for AI Overviews; lean into helpful content, clean structure, and clear Q&A/HowTo patterns as outlined in AI features guidance.

- Bing Copilot: Expect visible citations; aim for succinct, quotable passages and authoritative signals, as discussed in the Bing Copilot Search blog (2025).

- ChatGPT Search: Provide definitive, well-cited explanations so the model can attribute you (see OpenAI’s announcement).

- Perplexity: Its Deep Research favors comprehensive, source-backed material; clarity and citations matter (see Perplexity’s Deep Research post).

How do I handle advanced scenarios like pages with both FAQs and HowTos or multimedia steps?

Short answer: Keep intent clear. If a page genuinely contains both Q&A and a procedure, you can use multiple schema types—just ensure each block is distinct and accurate.

Guidelines:

- Multi-entity pages: It’s acceptable to include FAQPage and HowTo JSON-LD on the same URL if both are present and visible. Keep them separate and accurate.

- Multimedia: Add ImageObject or VideoObject for steps where visuals materially improve understanding. Ensure alt text is descriptive and that images are compressed and accessible.

- Disambiguation: Use precise names for steps and questions. Where terms can mean different things, add a short definition or scope note in the first sentence.

- Accessibility: Use semantic headings, ARIA landmarks where appropriate, and descriptive link text so assistive technologies and LLMs can parse structure reliably.

Troubleshooting tip: If validation passes but visibility is low, reassess whether the page cleanly satisfies a focused intent or if it’s trying to do too much at once.
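For pages that carry both types, one straightforward approach is to emit each schema object in its own script tag. The sketch below assumes the FAQPage and HowTo objects already exist as Python dicts; `render_jsonld_scripts` is a hypothetical helper, and the escaping of `</` is a defensive choice so that answer text cannot close the script tag early.

```python
import json


def render_jsonld_scripts(*docs):
    """Render each JSON-LD document as its own <script type="application/ld+json"> tag."""
    tags = []
    for doc in docs:
        # "<\/" is still valid JSON and prevents a literal "</script>" inside a string
        # from terminating the tag.
        payload = json.dumps(doc, ensure_ascii=False, indent=2).replace("</", "<\\/")
        tags.append(f'<script type="application/ld+json">\n{payload}\n</script>')
    return "\n".join(tags)


faq = {
    "@context": "https://schema.org",
    "@type": "FAQPage",
    "mainEntity": [{
        "@type": "Question",
        "name": "What is schema markup?",
        "acceptedAnswer": {"@type": "Answer", "text": "Structured data for search engines."},
    }],
}
howto = {
    "@context": "https://schema.org",
    "@type": "HowTo",
    "name": "Run a 30-minute schema audit",
    "step": [{"@type": "HowToStep", "name": "Crawl your site",
              "text": "Export all URLs and detect existing JSON-LD."}],
}
print(render_jsonld_scripts(faq, howto))
```

Keeping the two objects in separate tags mirrors the guideline to keep each block distinct, and makes it easy to remove one type later without touching the other.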

What are the most common mistakes that prevent inclusion—and how do I avoid them?

Short answer: Misaligned markup, promotional or thin Q&A, and unvalidated changes are the usual culprits.

Do/Don’t quick list:

- Do mirror visible content in JSON-LD exactly; don’t auto-generate mismatched markup.

- Do write a one-sentence direct answer; don’t bury the lede under fluff.

- Do validate in both the Rich Results Test and the Schema Markup Validator; don’t skip validation after CMS updates.

- Do provide author/org/entity signals; don’t publish faceless pages with no accountability.

- Do maintain dates and change logs; don’t “refresh” dates without meaningful updates.

If you’re auditing a failing page: Start with the Rich Results Test, then the Schema Markup Validator, and confirm your page aligns with Google’s FAQPage and HowTo documentation.

Can community distribution improve my odds of being cited?

Short answer: Yes, thoughtfully participating in relevant discussions can surface your content to LLMs that reference popular, well-cited sources.

How to do it well:

- Share genuinely helpful explanations or checklists in communities where your audience already learns (e.g., specialized forums or subreddits), and reference your fuller guide when it adds value.

- Ensure your content stands on its own—posts should be useful even without the click.

- Avoid spammy link drops; focus on credibility and helpfulness.

Further reading: If you’re exploring community strategy, see this overview of Reddit communities best practices for AI search citations.

Where can I learn about changes to eligibility and policies?

Short answer: Monitor official documentation first, then supplement with high-quality industry analyses.

Go-to sources:

- Google Search Central’s living documentation on AI features and your website and specific schema pages for FAQPage and HowTo.

- Validators you should bookmark: Google’s Rich Results Test and the Schema Markup Validator.

- Platform announcements: Microsoft’s Bing Copilot Search blog (April 2025), OpenAI’s ChatGPT Search announcement, and Perplexity’s Deep Research post.

Note: When researching monitoring tools, compare several analyses of the landscape. For a vendor-by-vendor snapshot of the 2025 market with pros and cons, see our Profound review with alternative recommendations.

If you implement the above, keep a simple ops loop: write for people, structure for machines, validate before shipping, and measure what shows up. Iterate quarterly—or faster if your facts change—and you’ll steadily improve your odds of being cited across generative search experiences.