Synthetic Indexing Breakthrough 2025: AI-Friendly Site Architecture Best Practices

Discover field-tested best practices for synthetic indexing in 2025. Learn how AI-friendly site architecture enhances content discoverability on AI search engines with actionable workflow steps.

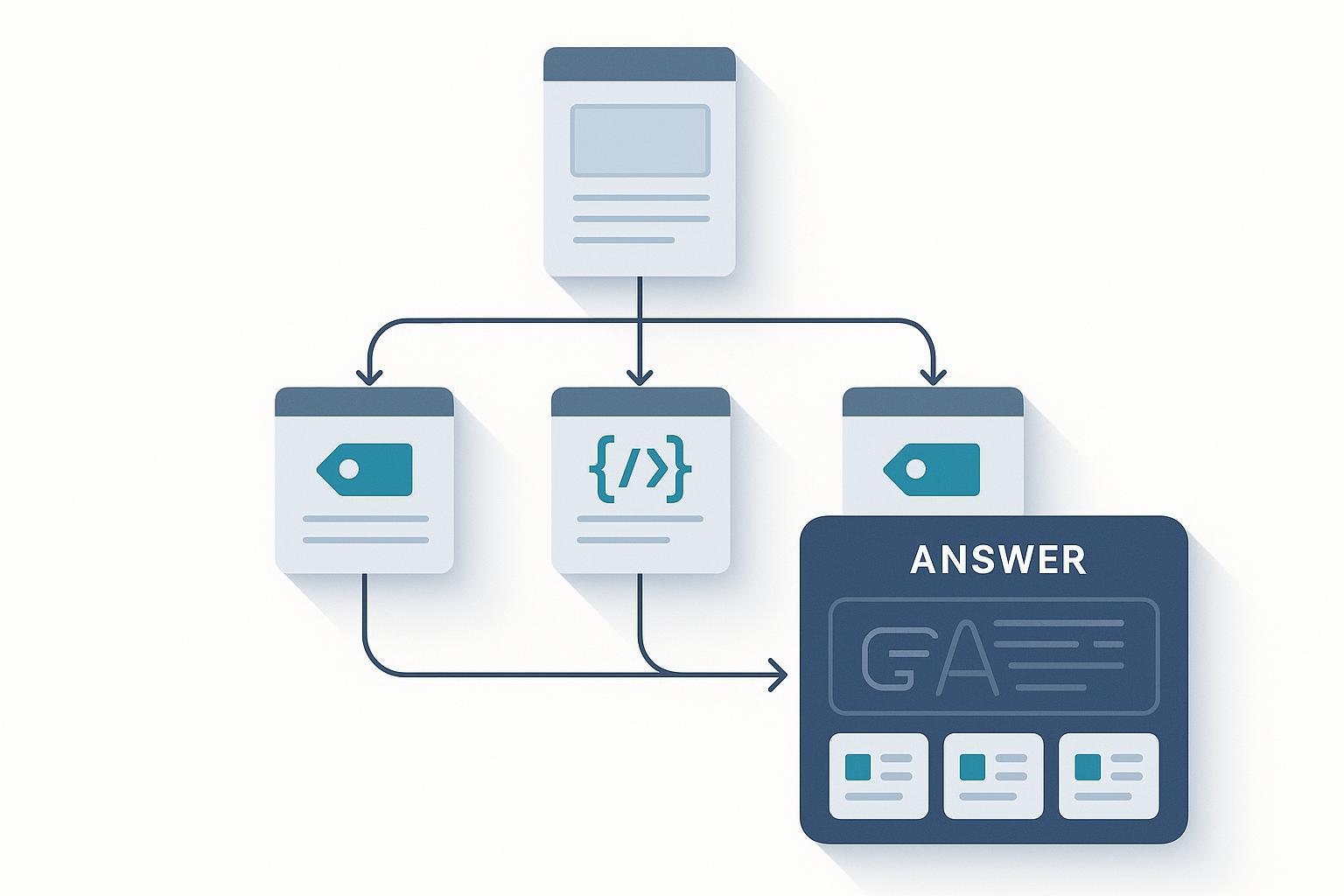

If you design for traditional SEO alone, you’ll miss the layer that now decides whether your content is quoted, summarized, or ignored by AI answer engines. Synthetic indexing is the emergent layer where engines assemble answers from passages, entities, structured data, and embeddings rather than just ranking whole pages. The shift is measurable: according to the Semrush AI Overviews study (2025), Google’s AI Overviews appeared in about 13.14% of queries in March 2025, up from 6.49% in January, with the strongest impact on informational searches.

This article distills the architecture patterns we’ve used to make sites legible to AI systems—so your pages become the sources those systems cite.

What “AI-friendly site architecture” actually means

AI-friendly architecture prioritizes passage-level clarity, entity disambiguation, and structured meaning. The goal isn’t just to rank—it’s to be quotable and attributable when an LLM composes an answer.

- Semantic HTML ensures machines can locate and scope the right blocks (definitions, steps, lists) without guessing.

- Chunkable sections surface “liftable” passages. Not too small, not too big—coherent units that can stand alone.

- Structured data (JSON-LD) and entity signals tell models who/what your content is about and why it’s trustworthy.

- Topic clusters and internal links establish context and resolve ambiguity across related pages.

- Conversational patterns (FAQ/HowTo) map to how users ask questions and how answer engines prefer to quote.

For teams getting started, Google’s guidance on AI search emphasizes that there’s no special markup required for eligibility—pages must simply be indexable and eligible for a search snippet. See the official note in Google’s “AI features and your website” (2025). That said, in practice, extractable passages, solid schema, and clean semantics materially improve your odds of appearing as a supporting link.

1) Semantic HTML and passage extractability

What to do:

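- Use HTML5 landmarks such as `<header>`, `<nav>`, `<main>`, `<article>`, `<section>`, `<aside>`, and `<footer>`.

- Mark up timestamps with the `<time>` element and a machine-readable `datetime` attribute.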

- Keep critical content server-rendered. Avoid JS-only rendering for core copy that engines need to read and quote.

- Embed concise, self-contained definitions, lists, and tables for key facts; these elements are ideal “grab points” for answer engines.
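The checks above can be partly automated. The following is a minimal sketch, assuming you already have the server-rendered HTML as a string; the landmark set and the choice to require `main` and `article` are illustrative assumptions, not a rule from any engine.

```python
from html.parser import HTMLParser

# Elements treated as landmarks here; this set is an assumption for illustration.
LANDMARKS = {"header", "nav", "main", "article", "section", "aside", "footer"}
# Landmarks this sketch treats as mandatory (hypothetical policy).
REQUIRED = {"main", "article"}


class LandmarkAudit(HTMLParser):
    """Counts landmark elements and <time> elements that carry a datetime attribute."""

    def __init__(self):
        super().__init__()
        self.landmarks = {}
        self.machine_readable_times = 0

    def handle_starttag(self, tag, attrs):
        if tag in LANDMARKS:
            self.landmarks[tag] = self.landmarks.get(tag, 0) + 1
        elif tag == "time" and dict(attrs).get("datetime"):
            self.machine_readable_times += 1


def audit_html(html):
    """Return landmark counts, missing required landmarks, and <time datetime> count."""
    parser = LandmarkAudit()
    parser.feed(html)
    return {
        "landmarks": parser.landmarks,
        "missing": sorted(REQUIRED - set(parser.landmarks)),
        "machine_readable_times": parser.machine_readable_times,
    }


sample = """
<main>
  <article>
    <h1>What is synthetic indexing?</h1>
    <p>Updated <time datetime="2025-10-05">5 October 2025</time></p>
  </article>
</main>
"""
print(audit_html(sample))
```

Running this against the raw HTTP response (before any JavaScript executes) also gives a rough signal of whether the landmarks are server-rendered.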

For deeper architectural reasoning from an engineering lens, iPullRank’s analysis is worth reviewing in the AI Search Architecture Deep Dive (2025).

2) Chunkable, coherent content blocks

LLMs don’t want orphaned sentences; they prefer coherent paragraphs that carry enough context to stand alone. In retrieval-augmented systems, overly small chunks can raise irrelevance and hallucination risks. NVIDIA’s applied guidance highlights that “micro-chunking” often harms retrieval; section or paragraph-level chunks tend to perform better, as discussed in NVIDIA’s chunking strategy overview (2024).

Practical guardrails:

- Size for sense: Aim for 80–200 word sections with one clear idea and an explicit subhead.

- Use question-style H2/H3s when feasible (“How does X work?”, “What is Y vs Z?”). These map naturally to user prompts.

- Keep references and definitions adjacent to claims so passages can be quoted without losing attribution.
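A rough way to apply these guardrails during editing is to measure each section. The sketch below assumes the draft is authored in Markdown with `##` and `###` subheads (an assumption about your workflow); the function names are hypothetical, and the 80–200 word range comes from the guardrail above.

```python
import re

MIN_WORDS, MAX_WORDS = 80, 200  # range taken from the guardrail above


def split_sections(markdown):
    """Split Markdown text into (heading, body) pairs at H2/H3 headings."""
    sections = []
    heading, lines = None, []
    for line in markdown.splitlines():
        match = re.match(r"^#{2,3}\s+(.*)", line)
        if match:
            if heading is not None:
                sections.append((heading, " ".join(lines)))
            heading, lines = match.group(1).strip(), []
        elif heading is not None:
            lines.append(line.strip())
    if heading is not None:
        sections.append((heading, " ".join(lines)))
    return sections


def review_section(heading, body):
    """Report word count, whether it is in range, and whether the subhead is a question."""
    words = len(body.split())
    return {
        "heading": heading,
        "words": words,
        "size_ok": MIN_WORDS <= words <= MAX_WORDS,
        "question_heading": heading.endswith("?"),
    }
```

Word count is only a proxy for coherence; a section that passes the size check can still fail to stand alone, so treat the output as a prompt for editorial review.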

3) Structured data and entity clarity

Implement JSON-LD consistently for Organization, WebSite, WebPage, Article/BlogPosting, Person (authors), FAQPage, and Product/Service or LocalBusiness as applicable. Align your entities with external identifiers using sameAs (Wikidata, Wikipedia, LinkedIn for authors, Crunchbase where relevant). Keep schema truthful to visible content.

Example: minimal Article + Person JSON-LD block

    {
      "@context": "https://schema.org",
      "@type": "Article",
      "headline": "Synthetic indexing breakthrough (2025): How AI-friendly site architecture boosts content discoverability",
      "datePublished": "2025-10-05",
      "dateModified": "2025-10-05",
      "author": {
        "@type": "Person",
        "name": "Your Name",
        "sameAs": [
          "https://www.linkedin.com/in/your-profile"
        ]
      },
      "publisher": {
        "@type": "Organization",
        "name": "Your Brand"
      }
    }
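If your templates are rendered server-side, a block like the one above can be generated from page metadata instead of being hand-written. This is a minimal sketch; the function names and parameters are hypothetical, and the field set simply mirrors the example.

```python
import json


def article_jsonld(headline, published, modified, author_name, author_same_as, publisher):
    """Build an Article + Person JSON-LD dict mirroring the example above."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published,
        "dateModified": modified,
        "author": {
            "@type": "Person",
            "name": author_name,
            "sameAs": list(author_same_as),
        },
        "publisher": {"@type": "Organization", "name": publisher},
    }


def as_script_tag(data):
    """Serialize JSON-LD for embedding in HTML.

    "</" is escaped as "<\\/" (a valid JSON escape) so that a value
    containing "</script>" cannot close the tag early.
    """
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(as_script_tag(article_jsonld(
    "Example headline", "2025-10-05", "2025-10-05",
    "Your Name", ["https://www.linkedin.com/in/your-profile"], "Your Brand",
)))
```

Generating the block from the same fields that drive the visible byline and dates helps keep schema truthful to on-page content.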

4) Topic clusters and internal linking that reinforce meaning

Build pillar pages that define a primary entity/topic comprehensively and connect them to subpages for distinct intents (what/how/why/compare/cost/tools/case studies). Ensure reciprocal links across the cluster and keep important content within 3–4 clicks from the homepage. Use descriptive, human-readable URLs and add breadcrumbs to clarify context for crawlers and users.
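Click depth is easy to measure once you have a crawl export. The sketch below assumes the internal link graph is available as a dict mapping each URL path to the paths it links to (a hypothetical format; adapt it to your crawler's output) and uses a breadth-first search from the homepage.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search: minimum number of clicks from the homepage to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, max_depth=4, home="/"):
    """Pages deeper than max_depth, including pages unreachable from the homepage."""
    depths = click_depths(links, home)
    pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(p for p in pages if depths.get(p, float("inf")) > max_depth)


site = {
    "/": ["/pillar"],
    "/pillar": ["/pillar/what", "/pillar/how"],
    "/pillar/what": ["/pillar"],
    "/orphan": ["/"],
}
print(too_deep(site))  # "/orphan" links out but nothing links to it
```

Unreachable pages are reported alongside deep ones because, from a crawler's point of view, both are hard to discover through links.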

5) FAQ and How-To patterns for conversational queries

Include concise Q&A blocks that answer one question directly in 2–4 sentences. Apply FAQPage schema. For procedural content, use HowTo schema and express steps in ordered lists. These patterns match how engines assemble step-by-step or definition-style answers.
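FAQPage markup follows a fixed shape in schema.org: a `mainEntity` list of `Question` items, each with an `acceptedAnswer` of type `Answer`. A minimal sketch of generating it from the same question/answer pairs that appear on the page (the function name is hypothetical):

```python
import json


def faq_jsonld(pairs):
    """Build FAQPage JSON-LD from (question, answer) pairs shown on the page."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_jsonld([
    ("What is synthetic indexing?",
     "It is the layer where engines assemble answers from passages, "
     "entities, structured data, and embeddings."),
])
print(json.dumps(faq, indent=2))
```

Feeding the builder from the visible Q&A content, rather than a separate data source, keeps the markup and the page from drifting apart.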

6) Freshness and technical hygiene

- Keep XML sitemaps accurate; expose lastmod and reference sitemaps in robots.txt.

- Maintain canonical control; avoid soft 404s and redirect chains.

- Watch Core Web Vitals; slow pages tend to be crawled less often and may be quoted less reliably.

- Display visible published/updated timestamps and editorial standards to support E‑E‑A‑T.
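For the sitemap item, here is a minimal sketch of emitting a sitemap with `lastmod` values and the matching robots.txt line. It assumes you can list each URL with its last-modified date from your CMS; the function names are hypothetical, and the output omits the XML declaration for brevity.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs; lastmod uses YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


def robots_sitemap_line(sitemap_url):
    """The robots.txt directive that points crawlers at the sitemap."""
    return f"Sitemap: {sitemap_url}"


print(build_sitemap([("https://example.com/guide", "2025-10-05")]))
print(robots_sitemap_line("https://example.com/sitemap.xml"))
```

Only update `lastmod` when the page content materially changes; a date that moves on every deploy stops being a useful freshness signal.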

Google’s 2025 communications reiterate that well-structured, helpful content remains the input; see the guidance in Google’s “Succeeding in AI search” blog (2025).

Role-based workflows: who does what

A durable practice assigns responsibilities across disciplines.

- Site architect / UX lead

  - Model topic clusters and navigation. Keep priority content ≤4 clicks deep.

  - Wireframe templates with clear H1, intro answer, Q&A subsections, lists/tables, FAQ, sources, author bio, and updated timestamp.

  - Ensure server-rendered critical content and semantic landmarks in templates.

- Technical SEO / engineering

  - Implement schema via server-side includes or a schema registry. Enforce sameAs and consistent identifiers.

  - Validate indexability: HTTP 200, non-blocked robots, correct canonicals, mobile rendering.

  - Build QA scripts to check passage “liftability” (e.g., headings present, answer block length, list/table presence).

- Content lead / editors

  - Author passage-complete sections with precise definitions, steps, and comparisons.

  - Maintain outbound citations to authoritative sources where claims are made.

  - Refresh and annotate updates quarterly or when facts change; track edit history.

Platform nuances that matter in practice

Google AI Overviews / AI Mode

What’s official: Google states there’s no special markup for inclusion; pages need to be indexed and snippet-eligible. See Google’s AI features and your website (2025). In practice, we see higher inclusion when pages:

- Provide direct, unambiguous answers near question-style subheads

- Carry robust Organization/Person/Article schema with author credentials and sameAs links

- Offer comprehensive pillars that cover multiple related intents and link subtopics clearly

Google’s 2025 blog post reiterates fundamentals: clear structure, helpfulness, and technical hygiene improve performance in AI search experiences; see Google’s “Succeeding in AI search” blog (2025).

Bing Copilot / Deep Search

Observed behavior (from practitioner studies and audits) suggests Copilot commonly cites sources that already demonstrate authority and extractable passages. Passage-friendly formatting—definitions, lists, concise tables—helps. iPullRank’s engineering view provides useful mental models in the AI Search Architecture Deep Dive (2025). Maintain Bing Webmaster Tools coverage, clean sitemaps, and structured data.

Perplexity

Perplexity consistently displays inline citations and values freshness/credibility. To test visibility without the LLM layer, developers can query the Search API, as outlined in the Perplexity Search Quickstart (docs). Practical tips:

- Ensure crawlability and clear author/org metadata

- Keep content updated with visible timestamps

- Use FAQ/HowTo patterns for concise, liftable answers

ChatGPT with Browsing / Deep Research

OpenAI’s Deep Research performs multi-step retrieval with citations. Sites with stable, canonical URLs, accessible HTML, and clear metadata tend to fare better. See the behavior described in OpenAI’s “Introducing Deep Research” (2025).

Implementation workflow: Audit → Model → Execute → Validate → Monitor

1) Audit (2–3 weeks for mid-size sites)

- Crawl the site (Screaming Frog/Sitebulb) to inventory indexability, render status, canonicals, and schema presence.

- Evaluate passage extractability: spot-check top URLs for question-style subheads, direct answers, lists/tables, and FAQ blocks.

- Map entities: Organization, authors, products/services, key concepts. Add sameAs targets to disambiguate.

- Verify sitemaps (XML, lastmod), robots.txt allowances, and Google/Bing coverage. Fix any 4xx/5xx, soft 404s, and chain redirects.
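The robots.txt part of the audit can be scripted with Python's standard `urllib.robotparser`. This sketch assumes you have already downloaded the robots.txt body as text; `check_robots` is a hypothetical helper name, and `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser


def check_robots(robots_txt, urls, user_agent="*"):
    """Report which URLs are disallowed for a user agent and which sitemaps are declared."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        "blocked": [u for u in urls if not parser.can_fetch(user_agent, u)],
        "sitemaps": parser.site_maps() or [],
    }


robots = """\
User-agent: *
Disallow: /private/

Sitemap: https://example.com/sitemap.xml
"""
report = check_robots(
    robots,
    ["https://example.com/guide", "https://example.com/private/draft"],
)
print(report)
```

Run the check once per crawler you care about by passing its user-agent token, since rules can differ between agents.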

Deliverables: audit report, prioritized issue list, entity map, and gap analysis against priority topics.

2) Information architecture modeling (1–2 weeks)

- Define clusters around key themes. For mature topics, 15–30 subtopics per pillar is common.

- Plan internal links: pillar → subpages and reciprocal links, plus contextual links between sibling subtopics.

- Wireframe a content template with: H1, 2–4 sentence intro answer, Q&A subsections, lists/tables, FAQ, sources, author bio, updated date.

- Design JSON-LD blocks for Organization, Person, WebPage, Article/BlogPosting, and FAQPage; standardize property names and sameAs conventions.
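The internal-link plan in this step can be verified against a crawl. A minimal sketch, assuming the link graph is a dict mapping each page to the set of pages it links to (a hypothetical format) and that you know which subpages belong to each pillar:

```python
def missing_reciprocal_links(pillar, subpages, links):
    """Return (source, target) pairs where a pillar/subpage link is absent."""
    issues = []
    for sub in subpages:
        if sub not in links.get(pillar, set()):
            issues.append((pillar, sub))  # pillar does not link down to the subpage
        if pillar not in links.get(sub, set()):
            issues.append((sub, pillar))  # subpage does not link back to the pillar
    return issues


links = {
    "/schema": {"/schema/faq", "/schema/article"},
    "/schema/faq": {"/schema"},
    "/schema/article": set(),
}
print(missing_reciprocal_links("/schema", ["/schema/faq", "/schema/article"], links))
# → [('/schema/article', '/schema')]
```

Sibling-to-sibling contextual links are not checked here; they depend on editorial relevance and are better reviewed by hand.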

3) Execution and schema deployment (rolling, by cluster)

- Implement componentized templates; inject JSON-LD server-side. Build a schema registry to keep properties consistent across pages.

- Rewrite or expand content to meet passage completeness. Add tables for “at-a-glance” facts where appropriate.

- Validate with Google’s Rich Results Test and structured data validators during staging and after release.
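One lightweight interpretation of a schema registry is a single table of the properties your team requires per type, checked before release. The sketch below is an assumption about how such a registry might look; the required-property lists are an in-house policy example, not the requirements of Google or schema.org.

```python
# Hypothetical in-house policy: properties each type must carry.
REGISTRY = {
    "Article": {"headline", "datePublished", "dateModified", "author", "publisher"},
    "Person": {"name", "sameAs"},
    "Organization": {"name"},
}


def missing_properties(node, registry=REGISTRY):
    """Recursively collect (type, property) pairs the registry requires but the node lacks."""
    problems = []
    if isinstance(node, dict):
        node_type = node.get("@type")
        required = registry.get(node_type, set()) if isinstance(node_type, str) else set()
        problems += [(node_type, prop) for prop in sorted(required - set(node))]
        for value in node.values():
            problems += missing_properties(value, registry)
    elif isinstance(node, list):
        for item in node:
            problems += missing_properties(item, registry)
    return problems


article = {
    "@type": "Article",
    "headline": "Example",
    "datePublished": "2025-10-05",
    "dateModified": "2025-10-05",
    "author": {"@type": "Person", "name": "Your Name"},
    "publisher": {"@type": "Organization", "name": "Your Brand"},
}
print(missing_properties(article))  # → [('Person', 'sameAs')]
```

A check like this complements, rather than replaces, the Rich Results Test: it enforces your own conventions, while the external validators check eligibility.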

4) Validation and passage testing (1–2 weeks per release)

- Technical QA: confirm 200 status, canonical correctness, robots/sitemaps, mobile rendering, and CWV.

- Passage QA: test a sample of your key questions in Perplexity and Bing. For Perplexity, optionally verify whether your URLs appear among raw ranked results via the Search Quickstart.

- Entity QA: check that author profiles and brand entities resolve correctly in knowledge sources; adjust sameAs links where ambiguous.
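Parts of the technical QA step lend themselves to a scripted check. This is a sketch under stated assumptions: you supply the fetched URL, its HTTP status, and the response HTML yourself, and the helper names are hypothetical. It covers status, robots meta directives, and canonical correctness only.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Extracts the canonical link and the robots meta directive from a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = ""

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.robots = (attrs.get("content") or "").lower()


def indexability_issues(url, status, html):
    """Return a list of human-readable problems; an empty list means the checks passed."""
    signals = HeadSignals()
    signals.feed(html)
    issues = []
    if status != 200:
        issues.append(f"status {status}")
    for directive in ("noindex", "nosnippet"):
        if directive in signals.robots:
            issues.append(directive)
    if signals.canonical is None:
        issues.append("missing canonical")
    elif signals.canonical != url:
        issues.append(f"canonical points to {signals.canonical}")
    return issues
```

The `nosnippet` check is included because snippet eligibility is part of Google's stated requirement for appearing in AI features; directives sent via HTTP headers are outside the scope of this sketch.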

5) Monitoring and iteration (ongoing)

- Track inclusion/citations in AI Overviews, Copilot, Perplexity, and ChatGPT browsing where feasible.

- Watch for “entity drift” in AI summaries (incorrect descriptions, outdated facts). Correct with clarifying passages, updated schema, and fresh timestamps.

- Refresh top pages quarterly or upon material changes; log updates and measure citation deltas.

Common pitfalls (and how to fix them)

- Over-chunking content into tiny fragments that lose context

  - Fix: favor paragraph/section granularity and keep definitions/claims self-contained; align with the guidance summarized in NVIDIA’s chunking strategy overview (2024).

- Schema that contradicts visible content or omits disambiguation

  - Fix: maintain a schema registry; validate on deployment; add sameAs for authors/organizations and prominent entities.

- Heavy client-side rendering that hides critical copy from crawlers

  - Fix: server-render the main content; defer non-critical JS; ensure bots receive equivalent HTML.

- Thin passages that aren’t quote-worthy

  - Fix: rewrite sections to include definitions, steps, comparisons, and data points. Add tables and lists where extractability helps.

- Sloppy information architecture

  - Fix: tighten cluster modeling and reciprocal links; add breadcrumbs; reduce click depth for priority content.
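The client-side rendering pitfall is one of the easier ones to detect automatically: fetch the raw HTML without executing JavaScript and check whether your key passages are present as visible text. A minimal sketch, assuming you pass in the raw response body and a list of phrases you expect to be quotable (both are inputs you supply; the names are hypothetical):

```python
from html.parser import HTMLParser

SKIPPED = {"script", "style"}  # contents of these elements are not visible copy


class VisibleText(HTMLParser):
    """Collects words that appear outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words.extend(data.split())


def contains_phrases(raw_html, phrases):
    """Map each phrase to True if it appears in the visible text of the raw HTML."""
    parser = VisibleText()
    parser.feed(raw_html)
    text = " ".join(parser.words).lower()
    return {phrase: phrase.lower() in text for phrase in phrases}
```

A phrase that only shows up inside a script payload is reported as absent, which is the situation the server-rendering fix is meant to address.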

Metrics that matter for synthetic indexing

Leading indicators

- Inclusion as a supporting link in AI Overviews/AI Mode

- Bing Copilot citations and Deep Search appearances

- Presence in Perplexity’s raw Search results for priority questions

- Passage extraction rate per page template (how many sections meet “liftable” criteria)

- Schema validation pass rate
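Two of these indicators are simple ratios. As a sketch, the passage extraction rate per template can be computed from audit records of the form (template name, whether the section met your liftable criteria); the record format and function name are assumptions for illustration.

```python
from collections import defaultdict


def extraction_rate_by_template(sections):
    """Share of sections per template that met the "liftable" criteria.

    `sections` is an iterable of (template, is_liftable) pairs.
    """
    totals = defaultdict(lambda: [0, 0])  # template -> [liftable count, section count]
    for template, liftable in sections:
        totals[template][0] += int(liftable)
        totals[template][1] += 1
    return {template: hits / count for template, (hits, count) in totals.items()}


audit = [("guide", True), ("guide", False), ("faq", True)]
print(extraction_rate_by_template(audit))  # → {'guide': 0.5, 'faq': 1.0}
```

The same function works for the schema validation pass rate if you feed it (template, passed_validation) pairs instead.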

Outcome metrics

- AI-assisted referral visits and assisted conversions

- Accuracy of branded entity summaries in AI answers

- Changes in CTR/visibility for informational queries where Overviews appear

Cadence and governance

- Quarterly refresh for top clusters; ad hoc updates on fact changes

- Release notes with timestamps; page-level change logs in your CMS

- Standing review of entity maps and sameAs consistency across the site

Compact adoption checklist

Architecture and templates

- [ ] Semantic landmarks and strict heading hierarchy

- [ ] Server-rendered critical content; clean CWV and mobile UX

- [ ] Page template with intro answer, Q&A subsections, lists/tables, FAQ, sources, author bio, updated date

Structured data and entities

- [ ] JSON-LD for Organization, Person, WebPage, Article/BlogPosting, FAQPage as applicable

- [ ] sameAs identifiers for brand, authors, and key entities

- [ ] Validation in staging and production; schema registry maintained

Content and clusters

- [ ] Pillar → subtopic clusters with reciprocal links

- [ ] Sections sized for sense (80–200 words), question-style subheads where natural

- [ ] Tables/lists for extractability; concise, quotable definitions and steps

Monitoring and iteration

- [ ] Track AI Overviews/Copilot/Perplexity/ChatGPT browsing citations

- [ ] Watch for entity drift; correct with clarifying content/schema

- [ ] Quarterly refresh cadence; log changes with timestamps

Closing next steps

If you need to continuously track where your brand is cited or summarized across AI engines, consider using Geneo to monitor AI visibility and entity accuracy over time. Disclosure: the preceding mention is informational; we are affiliated with the product. Use any monitoring workflow you trust if it better fits your stack.

References and further reading

- Official eligibility and mechanics for AI features: Google’s “AI features and your website” (2025)

- Practical guidance for performing well in AI search: Google’s “Succeeding in AI search” blog (2025)

- Architectural perspective on AI search: iPullRank’s AI Search Architecture Deep Dive (2025)

- Prevalence of AI Overviews: Semrush AI Overviews study (2025)

- Passage chunking pitfalls and best practices: NVIDIA’s chunking strategy overview (2024)

- Testing raw visibility in Perplexity: Perplexity Search Quickstart (docs)

- Multi-step browsing behavior context: OpenAI’s “Introducing Deep Research” (2025)