Prompt Guardrails to Prevent Hallucinated Financial Advice

Learn how prompt guardrails prevent hallucinated financial advice, ensuring AI delivers accurate, reliable, and compliant insights for financial decisions.

AI tools are transforming the financial world, but they come with risks. Preventing hallucinated financial advice (wrong or misleading answers from AI that can lead to significant losses) is crucial.

One study put global losses from AI mistakes at $67.4 billion in 2024. For instance, one analyst relied on incorrect AI predictions, resulting in a $2.3 million error in spending.

Errors like these can distort financial decisions. Prompt guardrails work like safety protocols: they help AI provide accurate, fair, and reliable advice. By using them, you can prevent hallucinated financial advice and protect your business and clients from costly mistakes.

Key Takeaways

Prompt guardrails help make sure AI gives correct money advice. They work like safety nets to stop wrong information.

Checking inputs, like using Retrieval-Augmented Generation, helps AI use current data. This lowers the chance of mistakes.

Testing and updating guardrails often keeps AI systems dependable and ready for changes in money matters.

When AI developers and money experts work together, guardrails get better. This makes sure rules are followed and advice stays fair.

Strong filters and checks keep your money choices safe. They also help people trust advice from AI.

Understanding Prompt Guardrails to Prevent Hallucinated Financial Advice

What Are Prompt Guardrails?

Prompt guardrails are rules that guide AI to give correct answers. They act like safety nets, making sure AI responses meet user needs and follow rules. For example, morality guardrails stop harmful or unfair outputs. Compliance guardrails ensure AI follows privacy laws like GDPR and HIPAA.

In finance, these guardrails are very important for trust. They stop AI from giving wrong or misleading information, called hallucinations. Experts say guardrails are key to keeping AI systems accurate and protecting private data.

| Type of Guardrail | Purpose |
|---|---|
| Morality guardrails | Stops harmful or unfair outputs, ensuring ethical and fair results. |
| Security guardrails | Protects against leaks or false information, keeping data safe. |
| Compliance guardrails | Follows privacy laws like GDPR, CPRA, and HIPAA to protect personal data. |
| Contextual guardrails | Makes sure AI gives answers that fit the situation and are accurate. |

Using these rules helps stop bad financial advice and ensures AI gives reliable answers.

Why They Matter in Finance

In finance, even small mistakes can cause big problems. Imagine AI suggesting a bad investment or getting tax rules wrong. These errors could cost money or lead to legal trouble. Prompt guardrails help by checking and fixing AI outputs.

For example, Amazon Bedrock Guardrails use checks to block bad content and confirm facts. They use structured formats like JSON to keep AI answers clear and correct. Regular testing and feedback improve AI over time.

Chatbots give wrong facts in 27% of answers.

76% of quotes from AI have made-up sources.

Good prompts can make AI 30% more accurate.

These numbers show why strong guardrails are needed in finance. Adding these safeguards protects clients and builds trust in AI systems.
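One way to apply the structured-format idea mentioned above is to require the model to reply in JSON and reject any reply that fails a schema check. The sketch below is a minimal illustration using only the Python standard library. The field names (`recommendation`, `risk_level`, `sources`) and the function `parse_advice` are hypothetical; they are not part of Amazon Bedrock Guardrails or any other product.

```python
import json

# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS = {"recommendation": str, "risk_level": str, "sources": list}
ALLOWED_RISK_LEVELS = {"low", "medium", "high"}


def parse_advice(raw_reply: str) -> dict:
    """Parse a model reply and raise ValueError if it breaks the schema."""
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or mistyped field: {field}")
    if data["risk_level"] not in ALLOWED_RISK_LEVELS:
        raise ValueError(f"unknown risk_level: {data['risk_level']}")
    if not data["sources"]:
        raise ValueError("advice must cite at least one source")
    return data
```

A reply that is free text, omits a field, or cites no sources raises `ValueError`, so the calling code can retry or escalate instead of showing the answer to a user.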

Risks of Hallucinated Financial Advice

Factual Errors and Money Problems

AI can make mistakes that cost businesses a lot of money. Wrong predictions or bad data from AI can cause legal troubles, unhappy customers, or poor decisions. A study by Gartner showed 23% of companies had big problems because of AI errors. These problems included breaking rules or messing up customer processes.

The savings from fixing these mistakes show how expensive they can be. JPMorgan Chase saved $28.7 million each year by fixing AI compliance reports, a 670% return on investment. UnitedHealth Group saved $43.2 million by stopping wrong treatment approvals, improving costs and care. These examples show why stopping bad AI advice is important to save money and make better choices.

| Source | Evidence | Financial Impact |
|---|---|---|
| Gartner Research, 2023 | 23% of organizations faced negative consequences | Regulatory compliance issues, customer errors |
| JPMorgan Chase Annual Report, 2023 | $28.7 million saved annually | 670% ROI on AI compliance reports |
| UnitedHealth Group, 2023 | $43.2 million reduction in inappropriate authorizations | Improved cost management and care quality |

Ethical and Legal Problems

Bad AI advice can cause ethical and legal troubles. Misleading AI outputs have led to fines and rule-breaking. The SEC has penalized firms, especially smaller ones, for false claims about their AI systems. Violations of Section 206(2) of the Advisers Act, which does not require proof of intent to defraud, led to fines between $175,000 and $225,000.

These cases show why AI must follow ethical rules. Wrong or dishonest advice can break laws and hurt reputations. Adding strong guardrails helps AI follow rules and avoid legal problems.

| Evidence Type | Description |
|---|---|
| SEC Enforcement Actions | Firms penalized for false claims about AI usage |
| Legal Violations | Violations of Section 206(2) of the Advisers Act |
| Financial Penalties | Civil penalties ranging from $175,000 to $225,000 |

Losing Trust in AI

Trust is key in finance. Bad AI advice makes people doubt its reliability. Businesses and clients may avoid AI tools if they think the information is wrong or fake. Studies show chatbots give wrong facts 27% of the time, and 76% of AI quotes include made-up sources.

To rebuild trust, focus on being accurate and clear. Prompt guardrails help AI give good financial advice. By stopping bad advice, you protect clients and make AI more trustworthy in finance.

Practical Strategies to Prevent Hallucinated Financial Advice

Input Validation Techniques

Input validation helps AI give correct financial advice. It checks the data you provide to the AI. This step ensures the information is complete, clear, and properly formatted. For example, if you want investment advice, give the AI accurate market data and clear instructions.
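As a rough illustration of input validation, the sketch below checks a market quote before it is passed to a model. The `MarketQuote` type, the ticker rule (one to five uppercase letters), and the one-day freshness limit are all assumptions made for this example, not industry standards.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class MarketQuote:
    ticker: str
    price: float
    as_of: date


def validate_quote(quote: MarketQuote, today: date, max_age_days: int = 1) -> list[str]:
    """Return a list of problems; an empty list means the input looks usable."""
    problems = []
    # Assumed ticker format for this sketch: 1-5 uppercase letters.
    if not (quote.ticker.isalpha() and quote.ticker.isupper() and len(quote.ticker) <= 5):
        problems.append("ticker must be 1-5 uppercase letters")
    if quote.price <= 0:
        problems.append("price must be positive")
    if quote.as_of > today:
        problems.append("quote date is in the future")
    elif today - quote.as_of > timedelta(days=max_age_days):
        problems.append("quote is stale")
    return problems
```

Returning every problem at once, rather than stopping at the first, makes it easier to tell the user exactly what to fix before the question reaches the AI.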

A useful method is Retrieval-Augmented Generation (RAG). This technique lets AI use real-time data from trusted sources. It avoids relying only on outdated memory. RAG uses facts to reduce mistakes. For instance, if you ask about stock prices, the AI can pull live data from reliable databases.

| Strategy | Description |
|---|---|
| Retrieval-Augmented Generation (RAG) | Uses real-time data from trusted sources to avoid outdated memory. |
| Advanced Prompting Techniques | Helps AI think step-by-step to catch errors early. |
| Consensus and Cross-Verification | Compares answers from multiple AI models to ensure agreement. |

By checking inputs and using methods like RAG, you can stop AI mistakes and get better advice.
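The retrieval step in RAG can be sketched in a few lines. The example below is a toy version under stated assumptions: the documents in `TRUSTED_DOCS` are invented, scoring is plain word overlap rather than the embedding search a production system would likely use, and no model is actually called. It only shows how retrieved facts end up inside the prompt.

```python
import re

# Hypothetical in-memory "trusted source"; a real system would query a vetted database or API.
TRUSTED_DOCS = [
    {"id": "doc-1", "text": "ACME Corp closed at 101.25 USD on 2024-06-03."},
    {"id": "doc-2", "text": "The ACME Corp dividend for Q2 2024 was 0.40 USD per share."},
    {"id": "doc-3", "text": "Globex Inc reported Q1 2024 revenue of 2.1 billion USD."},
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[dict], top_k: int = 2) -> list[dict]:
    """Rank documents by how many question words they share (toy scoring)."""
    q_tokens = tokenize(question)
    scored = [(len(q_tokens & tokenize(d["text"])), d) for d in docs]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]


def build_grounded_prompt(question: str, docs: list[dict]) -> str:
    """Put retrieved facts in the prompt and tell the model to stay within them."""
    context = "\n".join(f"[{d['id']}] {d['text']}" for d in retrieve(question, docs))
    return (
        "Answer using only the sources below and cite their ids. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```

Calling `build_grounded_prompt("What was the ACME dividend in Q2?", TRUSTED_DOCS)` produces a prompt that lists the two ACME documents and leaves out the unrelated Globex one.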

Output Filtering and Verification

AI outputs can still have errors, even with good inputs. Output filtering and verification help catch these mistakes. This step reviews AI answers to ensure they match facts and industry rules. External checks flag problems before users see them.

For example, verification guardrails compare AI answers to trusted data ranges. If the AI suggests an investment based on figures outside those ranges, the guardrails flag the mistake so it can be fixed. Another method is consensus and cross-verification, where multiple AI models check each other’s answers. This reduces errors by requiring agreement before accepting results.
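A range check of this kind might look like the sketch below. The metric names and the ranges in `TRUSTED_RANGES` are placeholder values chosen for illustration, not real market data, and the function names are hypothetical.

```python
# Hypothetical trusted ranges, e.g. maintained by a compliance team.
TRUSTED_RANGES = {
    "savings_apy_percent": (0.0, 6.0),
    "mortgage_rate_percent": (2.0, 10.0),
}


def verify_claims(claims: dict[str, float]) -> dict[str, str]:
    """Label each numeric claim as 'ok', 'out_of_range', or 'unverifiable'."""
    results = {}
    for name, value in claims.items():
        if name not in TRUSTED_RANGES:
            results[name] = "unverifiable"
            continue
        low, high = TRUSTED_RANGES[name]
        results[name] = "ok" if low <= value <= high else "out_of_range"
    return results


def should_release(claims: dict[str, float]) -> bool:
    """Release an answer only if every extracted claim was checked and passed."""
    return all(status == "ok" for status in verify_claims(claims).values())
```

Treating unknown metrics as `unverifiable` rather than `ok` is a deliberately cautious choice: an answer with a figure nobody can check is held back along with answers that are clearly wrong.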

Tip: Always check AI financial advice against trusted sources like market reports or expert opinions. This builds trust and ensures accuracy.

Using strong filtering and verification steps protects your decisions and avoids costly errors.
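Consensus checking can be sketched as a simple vote. In the example below, the answers are assumed to be short labels already collected from several models; the function name `consensus_answer` and the two-thirds threshold are illustrative choices, not a standard.

```python
from collections import Counter
from typing import Optional


def consensus_answer(answers: list[str], min_agreement: float = 2 / 3) -> Optional[str]:
    """Return the most common answer if enough models agree, else None."""
    if not answers:
        return None
    # Normalize case and whitespace so "Hold" and "hold " count as the same vote.
    normalized = [a.strip().lower() for a in answers]
    top_answer, votes = Counter(normalized).most_common(1)[0]
    return top_answer if votes / len(normalized) >= min_agreement else None
```

When the function returns `None`, the models disagree too much, and the question is a good candidate for human review instead of an automatic answer.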

Iterative Prompt Refinement

Improving AI accuracy takes time and practice. Iterative prompt refinement means adjusting prompts based on past results. This helps AI understand your needs better and give clearer answers.

For example, refining prompts improved asset analysis scores from 68% to 92%. Step-by-step adjustments help AI catch mistakes early. Techniques like chain-of-thought prompting guide AI to think through problems carefully.

Here’s how to refine prompts:

Start with a simple, clear question.

Check the AI’s answer for mistakes.

Change the prompt to fix those mistakes.

Repeat until the AI gives accurate answers.
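The four steps above can be sketched as a loop. Everything here is a stand-in: `fake_model` imitates a model call, `missing_source` is a deliberately simple checker, and appending "Requirement:" lines is just one possible way to revise a prompt.

```python
from typing import Callable


def refine_prompt(
    prompt: str,
    ask_model: Callable[[str], str],
    find_issues: Callable[[str], list[str]],
    max_rounds: int = 3,
) -> tuple[str, str, bool]:
    """Ask, check, revise, and repeat; return (final prompt, last answer, passed)."""
    answer = ""
    for _ in range(max_rounds):
        answer = ask_model(prompt)
        issues = find_issues(answer)
        if not issues:
            return prompt, answer, True
        # Revise the prompt by stating each detected problem as an explicit requirement.
        prompt += "\n" + "\n".join(f"Requirement: {issue}" for issue in issues)
    return prompt, answer, False


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call: it only cites a source when told to.
    if "cite a source" in prompt.lower():
        return "Revenue grew 4% (source: 2023 annual report)."
    return "Revenue grew 4%."


def missing_source(answer: str) -> list[str]:
    # Toy checker: flags any answer that does not name a source.
    return [] if "source:" in answer else ["cite a source for every figure"]
```

With these stand-ins, `refine_prompt("How did revenue change?", fake_model, missing_source)` fails the check on the first round, adds the citation requirement, and passes on the second. The `max_rounds` cap keeps the loop from running forever when a prompt cannot be fixed automatically.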

Note: Refining prompts improves accuracy and helps AI handle complex financial tasks.

By improving prompts over time, you can make AI more reliable and get advice that fits your needs.

Advanced Methods for Reliable Financial Advice

Contextual Grounding with External Knowledge

AI works better when it understands your specific question. Contextual grounding helps AI match its answers to the situation or data you give. This reduces mistakes and makes financial advice more useful.

For example, some AI models can use both text and images. You could upload a financial statement with your question. The AI would study the document and give advice based on the details. Tools like financial calculators also help AI make exact calculations, improving its suggestions.

These methods make financial advice more accurate. By using real-world data and tools, AI can give better answers that fit your needs.
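A financial calculator tool can be as small as one function the AI system calls instead of doing arithmetic in text. The sketch below computes a compound-interest future value with Python's `decimal` module to avoid floating-point rounding surprises. The function name and parameters are hypothetical, and how a model would invoke the tool is left out.

```python
from decimal import Decimal, ROUND_HALF_UP


def future_value(
    principal: str,
    annual_rate_percent: str,
    years: int,
    periods_per_year: int = 12,
) -> Decimal:
    """Compound-interest future value, rounded to cents."""
    # Amounts are passed as strings so Decimal stores them exactly.
    p = Decimal(principal)
    rate_per_period = Decimal(annual_rate_percent) / Decimal(100) / Decimal(periods_per_year)
    value = p * (Decimal(1) + rate_per_period) ** (years * periods_per_year)
    return value.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```

For example, `future_value("1000", "10", 2, periods_per_year=1)` returns `Decimal('1210.00')`, which is 1000 grown by 10% twice.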

Real-Time Monitoring and Feedback Systems

Real-time monitoring checks AI answers as they are created. It finds mistakes before they reach you. This keeps the advice accurate and helpful.

For example, monitoring systems have improved AI response quality by 91%. They also make customer service faster and better. Feedback loops help AI learn from past errors and improve over time.

Tip: Use real-time monitoring to stop errors and build trust in AI advice.
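A minimal monitoring sketch, assuming some upstream check already decides whether each response is flagged: the class below keeps a sliding window of recent results and signals when the flag rate passes a threshold. The class name, window size, and alert rate are illustrative assumptions.

```python
from collections import deque


class ResponseMonitor:
    """Track the recent flag rate and signal when it crosses a threshold."""

    def __init__(self, window: int = 100, alert_rate: float = 0.05):
        self.recent = deque(maxlen=window)  # True means the response was flagged
        self.alert_rate = alert_rate

    def record(self, flagged: bool) -> None:
        """Store the outcome of one response; the oldest entry drops out when full."""
        self.recent.append(flagged)

    def flag_rate(self) -> float:
        """Share of flagged responses in the current window (0.0 when empty)."""
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def needs_review(self) -> bool:
        """True when the recent flag rate is above the alert threshold."""
        return self.flag_rate() > self.alert_rate
```

The flagged responses themselves are the raw material for the feedback loop: reviewing them shows which prompts or guardrails need to change.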

Domain-Specific Model Customization

Customizing AI for finance makes it more reliable. General AI models don’t always know enough about complex financial topics. Fine-tuning models with financial data improves their accuracy.

For example, Palmyra-Fin scored 73% on a tough finance exam, beating the average score of 60%. FinBERT also set records in analyzing financial texts after fine-tuning. These examples show why adding financial knowledge to AI is important.

Fine-tuning models like FinBERT helps them understand financial texts better.

Using special datasets ensures AI advice follows industry rules.

Customizing AI for finance stops bad advice and makes its guidance trustworthy.

Continuous Improvement in Guardrail Design

Regular Testing and Updates

Testing often keeps AI systems working well and staying reliable. By checking guardrails regularly, you can find weak spots and fix them. This also helps handle new problems like changing rules or risks in AI behavior.

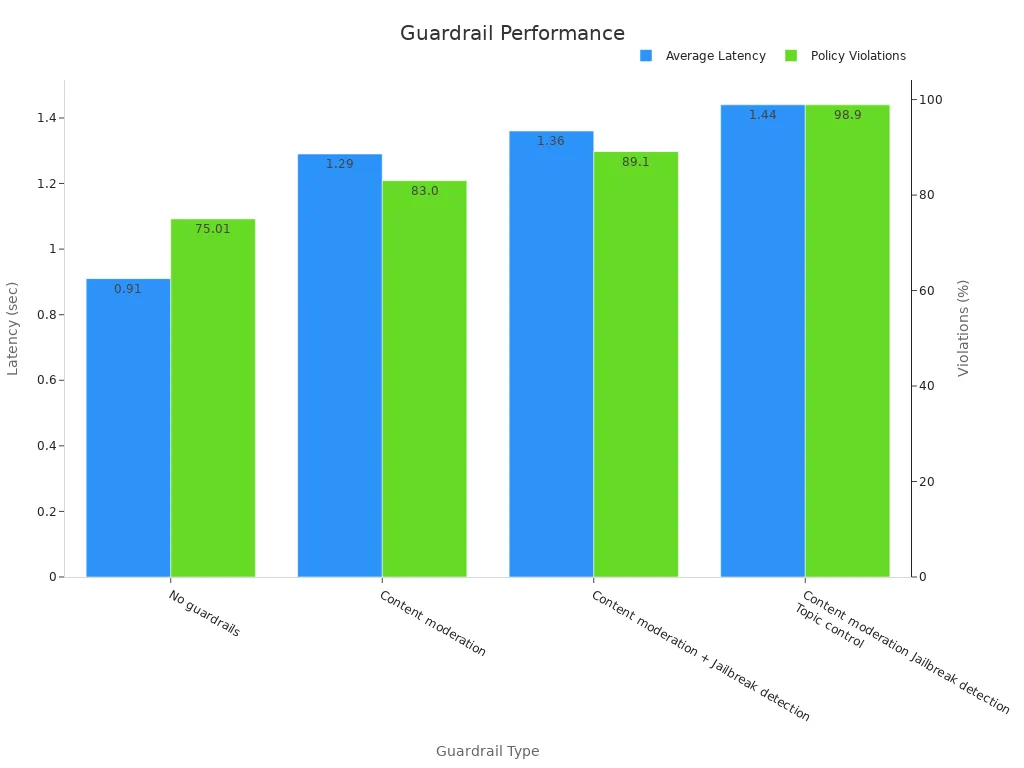

For example, some guardrails work better than others. Systems with tools like content moderation and topic control catch 98.9% of rule-breaking. Systems without these tools only catch 75.01%. But advanced systems may slow down responses, increasing time from 0.91 seconds to 1.44 seconds.
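The trade-off in these figures is easier to judge when worked out. The helper below is a small sketch (the function name and output keys are invented for this example) that turns the two catch rates and two response times quoted above into differences.

```python
def compare_guardrails(
    base_catch: float,
    guarded_catch: float,
    base_latency_s: float,
    guarded_latency_s: float,
) -> dict[str, float]:
    """Summarize what the extra guardrails buy and what they cost."""
    return {
        "catch_gain_points": round(guarded_catch - base_catch, 2),
        "missed_before_percent": round(100 - base_catch, 2),
        "missed_after_percent": round(100 - guarded_catch, 2),
        "added_latency_s": round(guarded_latency_s - base_latency_s, 2),
        "latency_increase_percent": round((guarded_latency_s / base_latency_s - 1) * 100, 1),
    }


summary = compare_guardrails(75.01, 98.9, 0.91, 1.44)
```

With the numbers above, the catch rate rises by 23.89 percentage points and missed violations fall from 24.99% to 1.1%, at a cost of 0.53 extra seconds per response, which is roughly a 58% increase in response time.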

By testing and updating guardrails, you can keep accuracy high and stop bad financial advice from spreading.

Adapting to Financial Industry Changes

The financial world changes fast with new rules, tools, and trends. To keep AI useful, guardrails must adjust to these changes. Adding reasoning models can help AI spot harmful actions better. These models do well on safety tests compared to others.

Using more data also helps reasoning models improve. But after a point, adding more data doesn’t help as much. This shows the need to balance data use with system speed.

| Measure | Description | Findings |
|---|---|---|
| Reasoning Guard Models | Models that spot harmful actions | |
| Data Efficiency | Checks how well models use data | More data improves results, but gains slow down after a limit |

By keeping up with changes and using better models, AI can give accurate and safe financial advice.

Collaboration Between AI Developers and Financial Experts

AI developers and financial experts need to work together to make good guardrails. Developers know the tech, while financial experts understand rules and needs. Together, they can build systems that meet both tech and finance standards.

For example, financial experts can point out risky areas like tax advice or investments. Developers can then create guardrails to fix these issues. This teamwork makes AI systems both smart and safe for the financial world.
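One way this division of labor could look in practice: experts maintain a list of risky topics, and developers turn it into a routing rule. The sketch below uses keyword patterns for simplicity. The topic list, the patterns, and the function name are hypothetical, and a real system would likely need a more robust classifier than keyword matching.

```python
import re

# Hypothetical risk map agreed between financial experts and developers.
RISKY_TOPICS = {
    "tax": re.compile(r"\b(tax|taxes|deduction|irs)\b", re.IGNORECASE),
    "investment": re.compile(r"\b(invest|investment|stock|stocks|portfolio)\b", re.IGNORECASE),
}


def route_question(question: str) -> dict:
    """Tag risky topics and decide whether a human expert must review the answer."""
    topics = sorted(name for name, pattern in RISKY_TOPICS.items() if pattern.search(question))
    return {"topics": topics, "needs_expert_review": bool(topics)}
```

Keeping the topic map in one data structure means financial experts can propose changes to it without touching the routing logic.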

By working together, you can create AI systems that are reliable and trusted, reducing mistakes and boosting user trust.

Stopping bad financial advice from AI is very important. It helps keep trust and makes sure AI gives correct answers. Prompt guardrails are like safety rules. They lower risks and make AI more reliable, especially in finance.

AI mistakes, called hallucinations, sound real but are wrong. These errors can cost a lot of money. Tools like coherence scorers check if AI answers match the topic, making them better.

Companies using special methods, like custom AI for finance and live checks, often save time and make more money over time.

When AI creators, finance experts, and rule-makers work together, they create better systems. This teamwork also helps follow rules and stay ethical.

Using these ideas, you can make AI tools that give good advice and stay ahead in business.

FAQ

What is hallucinated financial advice, and why does it matter?

Hallucinated financial advice happens when AI gives wrong answers that seem correct. This matters because these mistakes can cause bad choices, money loss, or legal trouble. Guardrails stop these errors and keep you safe.

How do prompt guardrails make AI more accurate?

Prompt guardrails help AI give correct and useful answers. They check inputs, review outputs, and improve prompts step by step. These actions make sure AI avoids giving wrong financial advice and meets your needs.

Can AI adjust to new financial rules?

Yes, AI can change by updating its guardrails often. Developers and finance experts work together to add new rules and trends. This keeps AI reliable and following the latest financial guidelines.

Why are financial experts important in making guardrails?

Financial experts find risks and share their knowledge about the industry. Their input helps developers build guardrails for tricky financial situations. This teamwork ensures AI follows both tech and finance rules.

How can you trust AI’s financial advice?

You can trust AI by checking inputs, reviewing outputs, and using AI models made for finance. Real-time checks and feedback also improve accuracy. Always compare AI advice with trusted sources to be sure.

Tip: Choose AI tools with strong guardrails to stay safe and get better results.
