How to Rank Content in Traditional Search & AI Responses: Best Practices 2025

Proven 2025 best practices for creating content that ranks in both traditional search and AI-powered generative responses. Actionable dual-channel SEO workflows, authority signals, measurement, and common pitfalls.

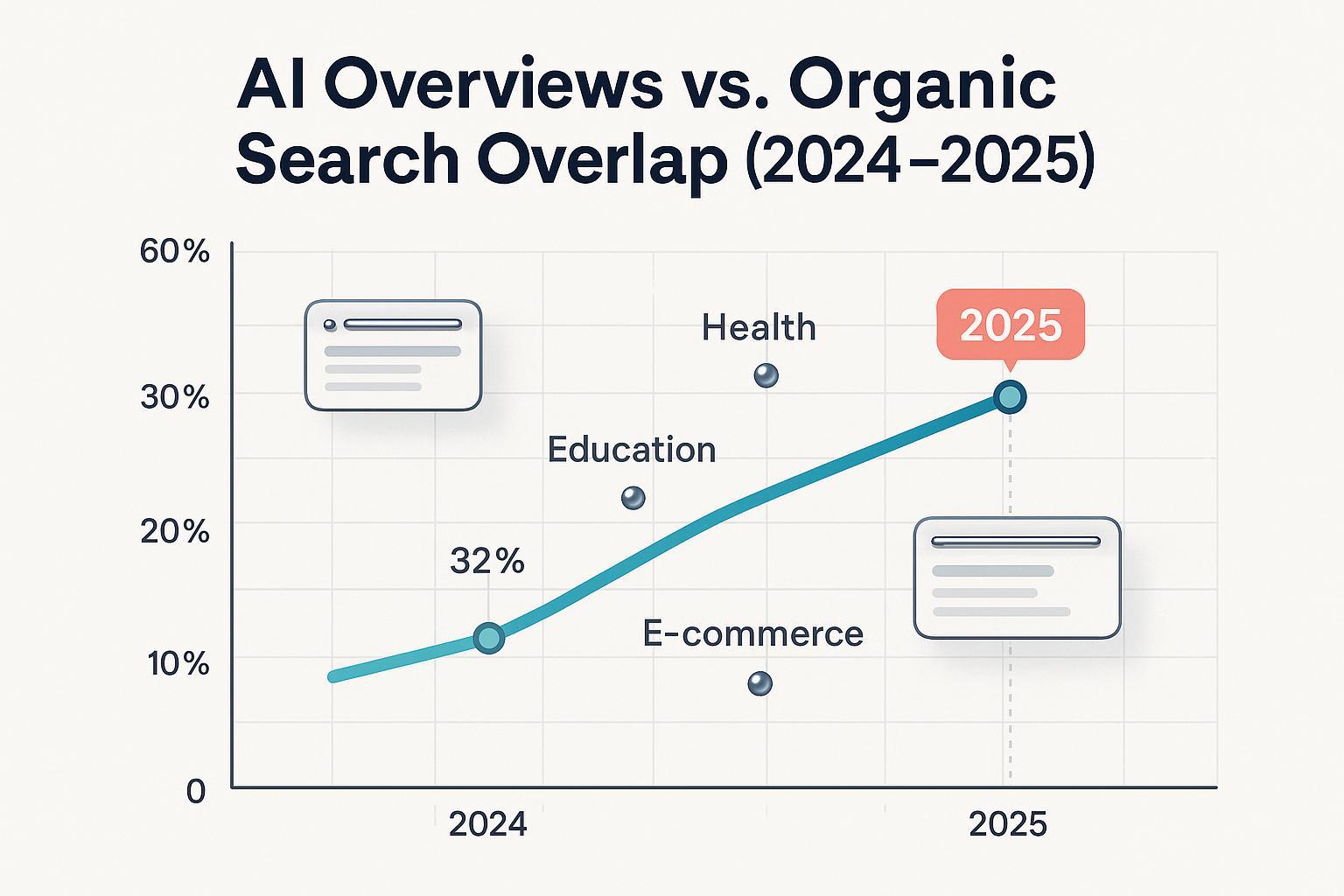

If you’ve felt your hard-won SEO gains wobble as AI answers expand, you’re not alone. In 2025, the most resilient teams are optimizing for two discovery surfaces at once: classic search results and AI-generated responses. The good news? What wins is still helpful, reliable content—augmented with machine readability and clear evidence.

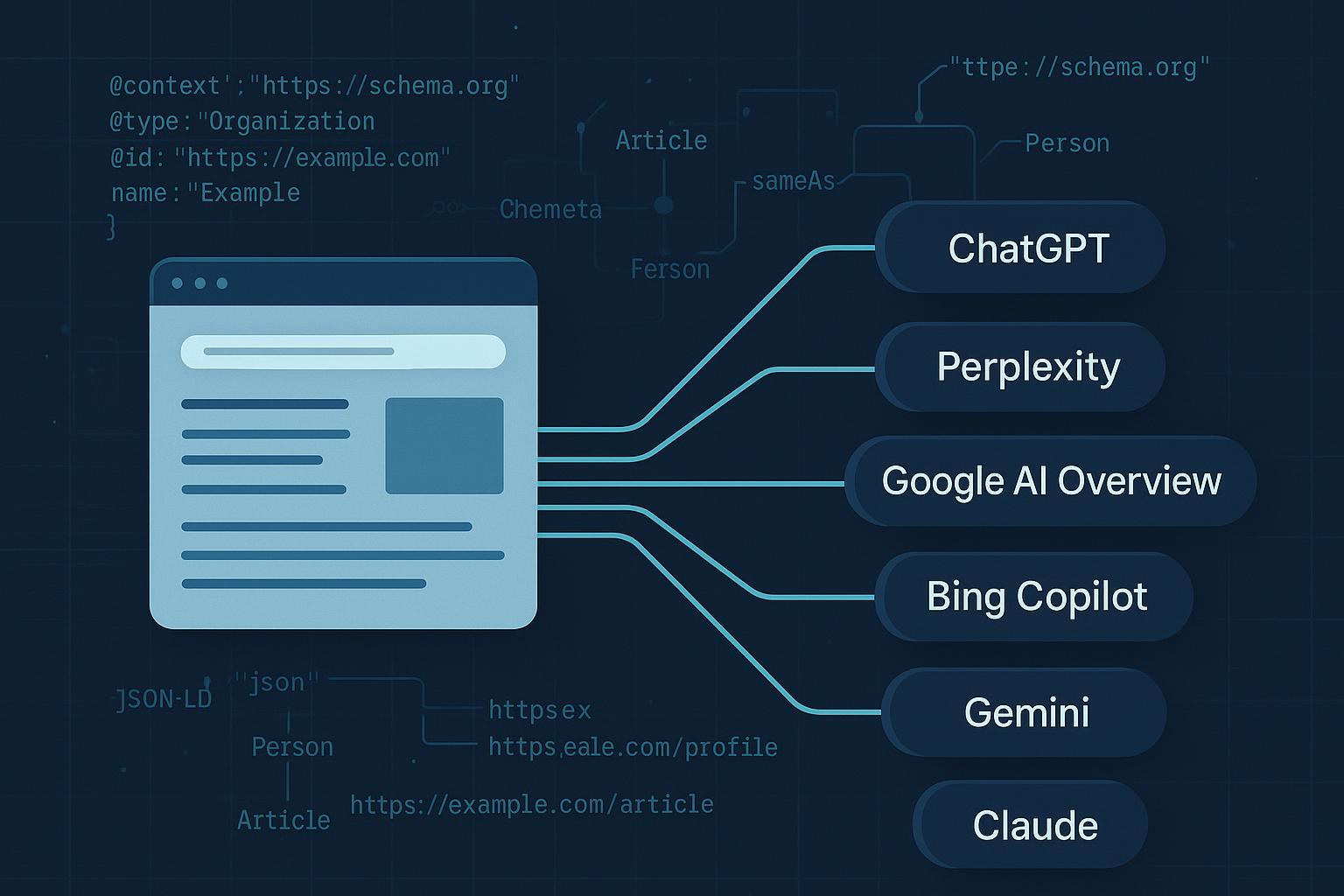

According to Google’s May 2025 guidance, pages that perform well in AI search experiences are those that meet Search Essentials and deliver “helpful, satisfying content.” Structured data aids machine understanding but does not guarantee inclusion, as described in the Google Search Central post Top ways to ensure your content performs well in Google’s AI search experiences (2025). Engines that ground their answers in citations follow similar principles. Microsoft, for example, explains in Copilot in Bing: Our approach to Responsible AI (2024) that Copilot’s responses include references and a “Learn more” section for transparency.

This guide distills what consistently works in the field: a dual-channel workflow you can adopt today, with pitfalls to avoid and a practical example you can replicate.

1) Research User Intent for Two Channels

Traditional SEO research alone won’t cut it. Generative engines favor concise, direct, and up-to-date explanations they can quote, while classic SERPs reward comprehensive coverage and authority. Start with two complementary lists:

- Traditional keywords (e.g., “best CRM for healthcare,” “schema markup guide”).

- Conversational prompts and questions (e.g., “What is the best CRM for a mid-sized hospital?” “How do I add Product schema without breaking my page?”).

Add entities and comparisons that engines can easily ground: brand names, product names, pricing tiers, version years, locations, regulatory terms, and canonical definitions.

Practical steps I’ve found effective:

- Build a seed list of 30–50 head and long-tail keywords from your usual tools (Search Console, keyword platforms).

- Convert each into 2–3 conversational questions users actually ask in sales calls or community forums.

- Add comparison prompts (“vs” and alternatives) and decision criteria (“budget under $X,” “HIPAA compliant,” “open-source”).

- Group by intent: define, compare, decide, implement, troubleshoot.
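If you have dozens of questions to sort, a rough first pass can be automated. The Python sketch below buckets questions into the five intents using simple keyword triggers. The trigger phrases and the `classify_intent`/`group_by_intent` names are hypothetical, and anything the script labels should still be reviewed by a person.

```python
# Hypothetical trigger phrases per intent, checked in this order.
INTENT_TRIGGERS = {
    "troubleshoot": ("not working", "error", "fix ", "broken", "why does", "why is"),
    "compare": (" vs ", " vs. ", "versus", "alternative", "difference between"),
    "decide": ("best ", "should i", "which ", "worth it", "under $"),
    "implement": ("how do i", "how to", "set up", "install", "add "),
    "define": ("what is", "what are", "what's", "meaning of", "definition of"),
}


def classify_intent(question):
    """Return the first intent whose trigger phrase appears in the question."""
    text = f" {question.lower()} "  # pad so " vs " matches at the edges
    for intent, triggers in INTENT_TRIGGERS.items():
        if any(trigger in text for trigger in triggers):
            return intent
    return "unclassified"


def group_by_intent(questions):
    """Bucket questions by intent, preserving input order within each bucket."""
    groups = {}
    for question in questions:
        groups.setdefault(classify_intent(question), []).append(question)
    return groups
```

Order matters: “What is the best CRM for a mid-sized hospital?” lands in decide, not define, because decision triggers are checked before definition triggers.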

If your team is new to the differences, this primer on Traditional SEO vs GEO is a helpful orientation to how classic optimization and generative engine optimization diverge and overlap.

2) Structure Content for Extractability and Depth

Generative engines need cleanly extractable answers; search still rewards thoroughness. Your page should deliver both:

- Lead with a 1–2 sentence direct answer.

- Follow with key facts in 3–5 bullets.

- Expand with sections that map to common sub-questions (H2/H3 in Q&A form).

- Include a comparison table and a concise, factual FAQ block. Keep the FAQ even though FAQ rich results are now restricted (more on that in section 3).

A practical organizing approach is the three-level content framework discussed by Search Engine Land in Organizing content for AI search: A 3-level framework (2025): a crisp summary, structured details, and deep-dive modules. In practice, that means your top section answers instantly; the middle offers lists, steps, and tables; and the bottom provides comprehensive coverage for those who need it.

Blueprint you can copy:

- Direct answer: 40–60 words; define, decide, or summarize.

- Key facts: 3–5 bullets with verifiable, dated data points where relevant.

- Table: comparison across 5–7 criteria (price, features, compliance, integrations, support).

- Steps or checklist: how to execute in 5–9 steps.

- FAQs: 4–6 questions phrased as users ask them; answer in 1–3 sentences each.

- Evidence: inline citations to primary sources wherever you make specific claims.

- Author and last updated date: visible for trust and freshness.

If you want a concise creator-focused checklist for scannability and clarity, see these GEO content best practices.

3) Technical Foundations That Matter in 2025

Classic technical SEO remains the backbone for both channels. Two principles dominate outcomes:

- Make it discoverable and understandable by crawlers.

- Make it unambiguous for machines to parse your entities and claims.

Critical items to implement:

- Indexability and clean HTML: Ensure pages are indexable with valid canonicalization and no accidental noindex on key templates; use semantic headings and lists.

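- Core Web Vitals and accessibility: Fast, stable, accessible layouts, with Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS) in the “good” range.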

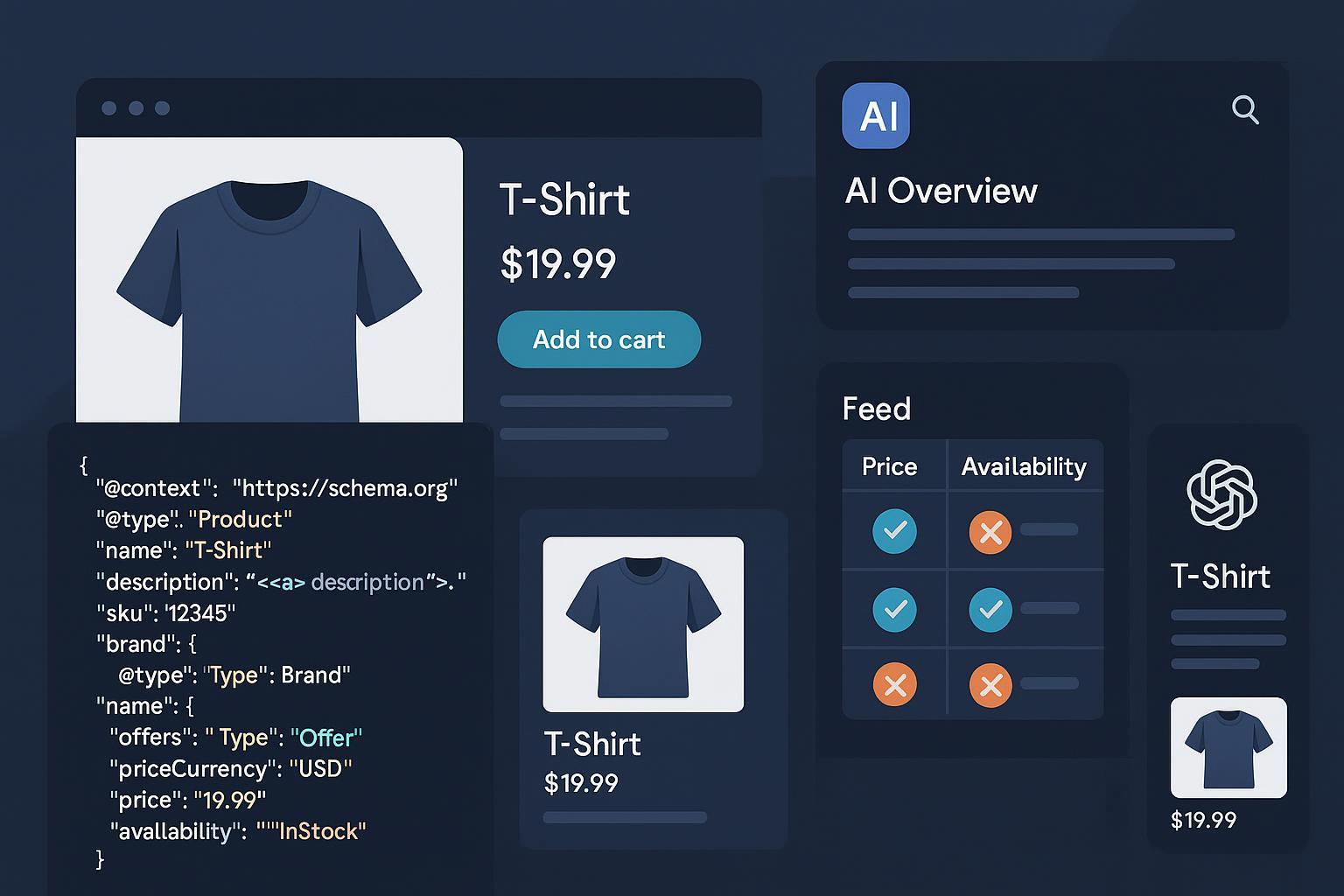

- Structured data: Article, Organization, Person, Product, Review, and other relevant types. Google reiterates that structured data improves machine readability but does not guarantee visibility in AI features; see AI Features and Your Website (Google Search Central, 2025).

- FAQ/HowTo caveats: Google restricted FAQ rich results for most sites and deprecated HowTo rich results. You can still use FAQPage and HowTo markup for semantic clarity, but don’t expect visual rich results. Search Engine Journal recapped the shift in 2024, and Google’s documentation updates page (2025) reflects it.

- Freshness signals: Updated dates, changelogs, version numbers, and release notes help engines select current, trustworthy sources.

- Clear authorship: Tie content to real people with credentials and links to professional profiles and citations—this strengthens E-E-A-T signals that search systems and AI answer engines both value, per the E-E-A-T guide on Search Engine Land (2025).
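To illustrate the structured-data, freshness, and authorship items together, here is a Python sketch that emits Article JSON-LD with a Person author, sameAs profile links, and both published and modified dates. The property names come from schema.org; the function name, example values, and URLs are hypothetical placeholders. Validate the output with Google’s Rich Results Test before shipping.

```python
import json


def article_jsonld(headline, author_name, author_url, author_profiles,
                   published, modified, publisher_name):
    """Build an Article JSON-LD <script> tag with a Person author and dates."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published,  # ISO 8601 date strings
        "dateModified": modified,    # keep in sync with the visible "last updated" date
        "author": {
            "@type": "Person",
            "name": author_name,
            "url": author_url,          # author bio page on your own site
            "sameAs": author_profiles,  # verifiable professional profiles
        },
        "publisher": {"@type": "Organization", "name": publisher_name},
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2, ensure_ascii=False)
            + "\n</script>")


if __name__ == "__main__":
    print(article_jsonld(
        headline="SIEM vs XDR: Key Differences",
        author_name="Jane Doe",  # hypothetical author
        author_url="https://www.example.com/authors/jane-doe",
        author_profiles=["https://www.linkedin.com/in/example"],
        published="2025-03-10",
        modified="2025-06-02",
        publisher_name="Example Security Inc.",
    ))
```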

4) Authority Signals: E-E-A-T for Humans and Machines

Experience, expertise, authoritativeness, and trustworthiness now function as both human trust markers and machine-disambiguation aids. Practical ways to implement:

- Credentials in context: Add a concise author bio with relevant certifications and experience; link to verifiable profiles.

- Transparent sourcing: Cite primary, dated sources for statistics and definitions; avoid circular references.

- Real-world evidence: Case notes, screenshots (redacted as needed), and step-by-step methods.

- Brand mention strategy: Earn consistent mentions in credible publications, community roundups, and review sites—answer engines frequently cite recognizable sources, and engines like Copilot highlight “verifiable citations” in their approach (Microsoft’s 2024 explanation).

- Structured reputation: Organization schema with sameAs links to your official profiles; Review and Product schema where appropriate with honest rating aggregation.
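For the structured reputation item, this sketch builds Organization markup with sameAs links and, separately, Product markup whose AggregateRating is computed from every collected review score rather than a hand-picked subset. It assumes you already store ratings on a 1–5 scale; the function names and the example brand are hypothetical.

```python
import json


def organization_jsonld(name, url, official_profiles):
    """Organization markup; sameAs should list only profiles you control."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": sorted(set(official_profiles)),  # de-duplicated, stable order
    }


def product_jsonld(name, ratings, best_rating=5):
    """Product markup; aggregateRating is derived from every collected rating."""
    data = {"@context": "https://schema.org", "@type": "Product", "name": name}
    if ratings:  # with no reviews, omit the rating instead of inventing one
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": round(sum(ratings) / len(ratings), 1),
            "reviewCount": len(ratings),
            "bestRating": best_rating,
        }
    return data


if __name__ == "__main__":
    org = organization_jsonld(
        "Example Security Inc.",  # hypothetical brand
        "https://www.example.com",
        ["https://www.linkedin.com/company/example", "https://github.com/example"],
    )
    print(json.dumps(org, indent=2))
    print(json.dumps(product_jsonld("Example XDR", [5, 4, 4, 3]), indent=2))
```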

5) Publish, Interlink, and Build Topic Hubs

Generative engines and search systems both prefer coherent topic coverage. Build tightly scoped hubs:

- One hub page that summarizes and links to core subtopics.

- Supporting articles that each answer one intent thoroughly.

- Contextual interlinks using descriptive anchors (no “click here”).
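A quick audit can catch generic anchors before publishing. This sketch uses Python’s standard-library `html.parser` to collect link text and flags anchors that match a small, hypothetical list of generic phrases; extend the list to match your own style guide.

```python
from html.parser import HTMLParser

# Hypothetical list of anchor texts that tell readers (and parsers) nothing.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "more", "this page", "link"}


class AnchorCollector(HTMLParser):
    """Collects (href, visible text) pairs for every <a href=...> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._chunks = []

    def handle_data(self, data):
        if self._href is not None:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._chunks).split())  # normalize whitespace
            self.links.append((self._href, text))
            self._href = None


def generic_anchors(html):
    """Return links whose anchor text is generic rather than descriptive."""
    collector = AnchorCollector()
    collector.feed(html)
    collector.close()
    return [(href, text) for href, text in collector.links
            if text.lower() in GENERIC_ANCHORS]
```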

If you’re targeting SERP modules that often correlate with answer inclusion, a practical next read is this guide, Optimize AI Overviews & Featured Snippets.

6) Measure What Matters in Two Channels

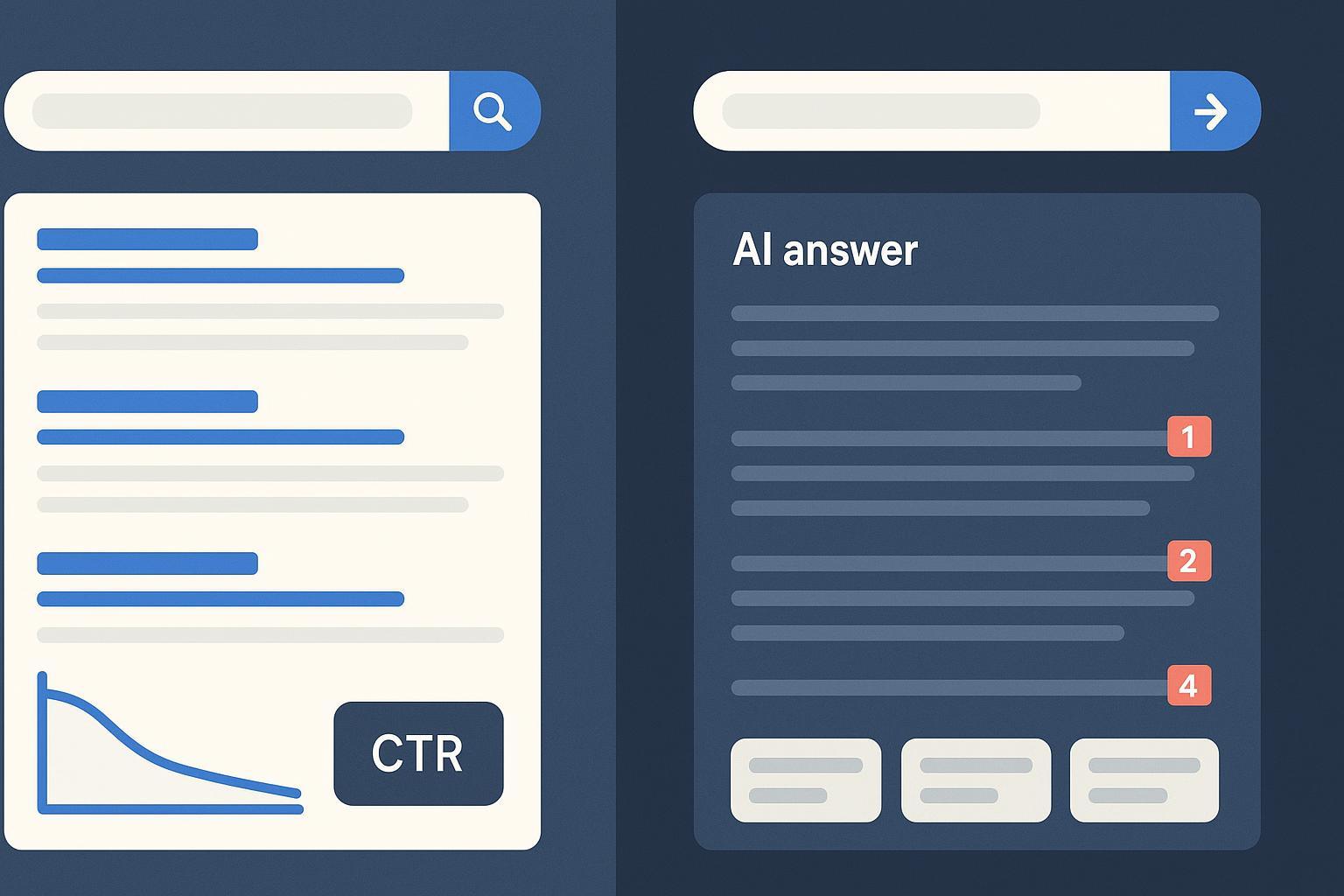

Expect fewer direct clicks from some queries as AI answers satisfy intent, but more visibility and assisted conversions when you’re cited. Track both traditional and AI-specific signals.

Traditional SEO KPIs:

- Rankings for target keywords

- Impressions and CTR (Search Console)

- Organic sessions and conversions (GA4)

- Featured Snippets and People Also Ask captures

AI visibility KPIs:

- Citation frequency in AI answers (linked and unlinked)

- AI Share of Voice across your priority prompts (percentage of answers mentioning you)

- Sentiment and context quality (positive, neutral, negative)

- Zero-click impression share (queries likely satisfied on the results page, without a click)

- Volatility of inclusion across weeks and query variants
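Vendors define these metrics differently, so it helps to compute your own baseline from a prompt log. The sketch assumes a hypothetical log format: one record per prompt, engine, and week, with a `mentioned` flag you record manually or export from a tool. It calculates AI Share of Voice as the percentage of logged answers that mention you, and volatility as the share of week-to-week checks where inclusion flipped. Both definitions are assumptions, not industry standards.

```python
from collections import defaultdict


def share_of_voice(records):
    """Percent of logged AI answers that mention the brand."""
    if not records:
        return 0.0
    return 100.0 * sum(1 for r in records if r["mentioned"]) / len(records)


def inclusion_volatility(records):
    """Share of week-to-week transitions, per (prompt, engine), where inclusion flipped."""
    series = defaultdict(dict)  # (prompt, engine) -> {week: mentioned}
    for r in records:
        series[(r["prompt"], r["engine"])][r["week"]] = r["mentioned"]
    flips = transitions = 0
    for by_week in series.values():
        weeks = sorted(by_week)
        for prev, cur in zip(weeks, weeks[1:]):
            transitions += 1
            if by_week[prev] != by_week[cur]:
                flips += 1
    return flips / transitions if transitions else 0.0


if __name__ == "__main__":
    log = [  # hypothetical weekly checks
        {"week": 1, "prompt": "siem vs xdr", "engine": "engine-a", "mentioned": True},
        {"week": 2, "prompt": "siem vs xdr", "engine": "engine-a", "mentioned": False},
        {"week": 3, "prompt": "siem vs xdr", "engine": "engine-a", "mentioned": False},
        {"week": 1, "prompt": "is siem obsolete", "engine": "engine-a", "mentioned": True},
        {"week": 2, "prompt": "is siem obsolete", "engine": "engine-a", "mentioned": True},
    ]
    print(f"Share of voice: {share_of_voice(log):.0f}%")
    print(f"Inclusion volatility: {inclusion_volatility(log):.2f}")
```

With this sample log, the script reports a 60% share of voice and a volatility of 0.33 (one flip across three week-to-week checks).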

Two useful overviews of measurement approaches and tactics for AI Overviews are SE Ranking’s how-to article (2025) and Single Grain’s 2025 guide to ranking in AI Overviews. Treat vendor metrics as directional, not standardized benchmarks.

Finally, remember Google’s baseline condition: to be shown as a supporting link in AI features, a page must be indexed and eligible to appear in Google Search with a snippet, as noted in AI Features and Your Website (2025).
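One common way pages lose snippet eligibility is a stray robots directive on a template. This sketch scans a page’s HTML for `robots` or `googlebot` meta tags and reports directives that block indexing or snippets (`noindex`, `none`, `nosnippet`, `max-snippet:0`). It does not check the `X-Robots-Tag` HTTP header, `data-nosnippet` attributes, or robots.txt, so a clean result rules out only one class of problems.

```python
from html.parser import HTMLParser

# Directives that remove a page from the index or suppress its snippet.
BLOCKING_DIRECTIVES = {"noindex", "none", "nosnippet", "max-snippet:0"}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            for part in attr.get("content", "").split(","):
                # Normalize spacing so "max-snippet: 0" becomes "max-snippet:0".
                self.directives.add(part.strip().lower().replace(" ", ""))


def snippet_blockers(html):
    """Return blocking directives found in robots meta tags, sorted."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return sorted(parser.directives & BLOCKING_DIRECTIVES)
```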

7) Practical Workflow Example: From Research to AI Citations (B2B SaaS)

Scenario: You’re a B2B SaaS company with a mid-market security product. You want to rank for “SIEM vs XDR” and be cited in AI answers to “What’s the difference between SIEM and XDR?”

Steps:

- Build a dual list: Collect 10 target keywords from Search Console and competitors; generate 20 conversational questions from sales calls and forums.

- Draft your page: Lead with a 50-word definition and a 6-row SIEM vs XDR table (criteria: telemetry scope, detection method, storage, compliance, deployment, pricing model). Add FAQs: “Is SIEM obsolete?” “Can XDR replace SIEM?”

- Add evidence: Cite dated standards (e.g., NIST SP references where appropriate) and vendor-neutral definitions. Include an author bio with security credentials.

- Implement schema: Article, Organization, and Product (if you have a relevant module). Validate with the Rich Results Test.

- Publish and interlink: Link from your security hub page and related implementation guides.

- Monitor weekly for 8 weeks: Track both SERP positions and AI answer citations across your prompt set. Log screenshots and exact prompts.

- Iterate: If AI answers omit your nuance (e.g., compliance logging), add a clearly labeled section with a brief checklist and updated date. Re-test prompts.
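For the iterate step, it helps to see which of your key points the logged answers leave out. This minimal sketch, assuming you have saved the answer text for each prompt, counts how many answers omit each nuance phrase. It uses exact, case-insensitive substring matching, so it will miss paraphrases; the function names and example answers are hypothetical.

```python
def missing_nuances(answer_text, nuances):
    """Nuance phrases that do not appear (case-insensitively) in one answer."""
    text = answer_text.lower()
    return [n for n in nuances if n.lower() not in text]


def omission_report(answers, nuances):
    """Count, for each nuance phrase, how many logged answers omit it."""
    counts = {n: 0 for n in nuances}
    for answer in answers:
        for n in missing_nuances(answer, nuances):
            counts[n] += 1
    return counts


if __name__ == "__main__":
    answers = [  # hypothetical saved AI answers for one prompt
        "SIEM centralizes logs and supports compliance logging and retention.",
        "XDR correlates endpoint, network, and cloud telemetry for detection.",
        "XDR extends detection and response across multiple security layers.",
    ]
    nuances = ["compliance logging", "telemetry"]
    report = omission_report(answers, nuances)
    # Nuances missing from at least half the answers are candidates for a new section.
    print([n for n, missed in report.items() if missed >= len(answers) / 2])
```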

Where tool support helps:

- Use your regular SEO stack for rankings and snippets.

- For AI answer monitoring and sentiment, try a brand visibility tracker that logs citations across LLM-powered engines and summarizes sentiment and share of voice. For example, Geneo can monitor multi-platform AI citations, track sentiment, and maintain historical query logs so you can compare how often and how positively you’re referenced over time. Disclosure: Geneo is our product.

Keep the workflow objective: even if a tool shows you’re omitted, don’t chase the tool; update the content to be more direct, current, and extractable, then reassess.

8) Handling Inaccurate or Negative AI Mentions

You will encounter hallucinations, outdated claims, or unflattering summaries. A disciplined response playbook protects your brand:

- Monitor and log: Save screenshots, prompts, dates, and engines when you find issues. Track sentiment shifts over time.

- Publish corrections: Create a clear, cited explainer addressing the specific misconception. Use dated evidence and plain language.

- Submit feedback: Use built-in feedback forms where available (e.g., Google’s AI features feedback, Perplexity feedback buttons, Microsoft channels).

- Reinforce authority: Earn third-party corroboration (industry bodies, standards orgs, recognized analysts). Engines tend to prefer recognizable, credible sources.

- Re-test periodically: Prompts and answers evolve. Re-run your prompt set monthly.
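To spot sentiment shifts across monthly re-tests, you can compare each prompt and engine’s latest run with the run before it. The sketch assumes a hypothetical log in which each entry records a prompt, an engine label, a date, and a sentiment label of negative, neutral, or positive (assigned by a reviewer or a monitoring tool).

```python
from datetime import date

# Ordinal scale so "worse" has a meaning; labels come from your own review.
SENTIMENT_RANK = {"negative": 0, "neutral": 1, "positive": 2}


def sentiment_drops(log):
    """Return (prompt, engine, previous, latest) where the latest run got worse."""
    history = {}
    for entry in sorted(log, key=lambda e: e["date"]):
        key = (entry["prompt"], entry["engine"])
        history.setdefault(key, []).append(entry["sentiment"])
    drops = []
    for (prompt, engine), labels in history.items():
        if len(labels) >= 2 and SENTIMENT_RANK[labels[-1]] < SENTIMENT_RANK[labels[-2]]:
            drops.append((prompt, engine, labels[-2], labels[-1]))
    return drops


if __name__ == "__main__":
    log = [  # hypothetical monthly re-test entries
        {"prompt": "Is SIEM obsolete?", "engine": "engine-a",
         "date": date(2025, 5, 1), "sentiment": "positive"},
        {"prompt": "Is SIEM obsolete?", "engine": "engine-a",
         "date": date(2025, 6, 1), "sentiment": "negative"},
        {"prompt": "Can XDR replace SIEM?", "engine": "engine-b",
         "date": date(2025, 5, 1), "sentiment": "neutral"},
        {"prompt": "Can XDR replace SIEM?", "engine": "engine-b",
         "date": date(2025, 6, 1), "sentiment": "positive"},
    ]
    for drop in sentiment_drops(log):
        print(drop)
```

A flagged drop is a prompt to investigate and log, not proof of a problem; re-run the prompt before publishing a correction.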

For context on brand presence variance across Google’s modes in 2025, see the Search Engine Land study on brands in AI Mode vs AI Overviews (July 2025). For choosing and evaluating monitoring tools for AI search, Authoritas provides a useful roundup (March 2025).

9) Common Pitfalls and Trade-offs

- Over-optimizing FAQs or HowTo in hopes of rich results: As of 2024–2025, FAQ rich results are restricted and HowTo rich results deprecated. Keep them for semantic clarity, not SERP decoration.

- Thin “answer-first” pages with no depth: You might earn short-term citations but lose user trust and backlinks. Always follow concise answers with depth and evidence.

- Ignoring authorship and dates: E-E-A-T signals matter for both humans and machines; ensure visible bios and updated timestamps.

- Skipping schema: While no guarantee, omitting relevant structured data reduces machine clarity.

- Treating AI visibility as traffic-only: Many AI mentions are unlinked; measure brand presence, sentiment, and assisted conversions, not just sessions.

- Chasing volatility: AI answers fluctuate. Iterate methodically on clarity, freshness, and authority rather than rewriting wholesale with every change.

10) Dual-Optimization Checklist (Copy/Paste)

Use this to pressure-test a page before and after publishing.

Research

- [ ] 30–50 keywords mapped to 60–150 conversational questions (2–3 per keyword)

- [ ] Entities and comparisons added (brands, products, versions, geographies)

- [ ] Intent clusters: define/compare/decide/implement/troubleshoot

Structure

- [ ] 40–60 word direct answer at top

- [ ] 3–5 key-fact bullets with dated sources

- [ ] 1 comparison table with 5–7 criteria

- [ ] 5–9 step process or checklist

- [ ] 4–6 concise FAQs phrased like real questions

Technical

- [ ] Indexable, clean HTML, proper headings

- [ ] Core Web Vitals in the “good” range, accessible design

- [ ] Structured data: Article, Organization, Person, Product (as relevant)

- [ ] Author bio + last updated date visible

Authority

- [ ] Primary sources cited inline where claims are made

- [ ] Real examples or mini case notes included

- [ ] Reputation signals: reviews/mentions, org schema sameAs links

Publishing & Hubs

- [ ] Linked from relevant hub pages

- [ ] Contextual internal links with descriptive anchors

Measurement & Iteration

- [ ] Track rankings, snippets, impressions, CTR

- [ ] Track AI citations, share of voice, sentiment, zero-click share

- [ ] Log screenshots/prompts weekly for 8 weeks and iterate

If you need a foundation before tackling all of the above, start with the Beginner’s guide to GEO and follow it with these Essential GEO best practices.

11) Staying Current in 2025

A few platform notes to keep your playbook current:

- Google’s AI Mode and AI Overviews continue expanding; product notes describe AI Mode as offering “more advanced reasoning and multimodality,” with helpful links to the web (Google, 2025). Treat this as an opportunity: your concise summaries plus depth are more valuable than ever.

- Google’s documentation clarifies that inclusion in AI experiences aligns with Search Essentials; structured data is useful but not a guarantee (Search Central, 2025). Build for users first, then add machine clarity.

- Perplexity’s exact source selection mechanics aren’t formally documented; observed patterns indicate a preference for recent, conversational sources and sentence-level citations (2024–2025 observations). Prioritize freshness, clarity, and recognizable authority.

Final Thought

There’s no silver bullet. Teams that win at dual-channel visibility consistently do three things: they answer questions directly, they back claims with evidence and structure, and they measure and iterate across both SERPs and AI answers. If you make that your operating system, 2025’s search shifts become less a disruption and more an advantage.

Sources and further reading (selected)

- Google Search Central: Top ways to ensure your content performs well in Google’s AI search experiences (2025)

- Google Search Central docs: AI Features and Your Website (2025)

- Google product blog: AI Mode update (2025)

- Search Engine Land: Organizing content for AI search: A 3-level framework (2025)

- Search Engine Land: An SEO guide to understanding E-E-A-T (2025)

- Microsoft Support: Copilot in Bing: Our approach to Responsible AI (2024)

- Search Engine Journal: Rich results policy changes recap (2024)

- SE Ranking: How to optimize for AI Overviews (2025)

- Single Grain: Google AI Overviews: The ultimate guide to ranking in 2025

- Authoritas: How to choose the right AI brand monitoring tools (2025)