Best Practices for Structured Data & Semantic Clarity to Win AI Citations in 2025

Discover 2025 best practices for structured data and semantic clarity to boost AI engine citations—field-tested workflow and actionable strategies for SEO professionals.

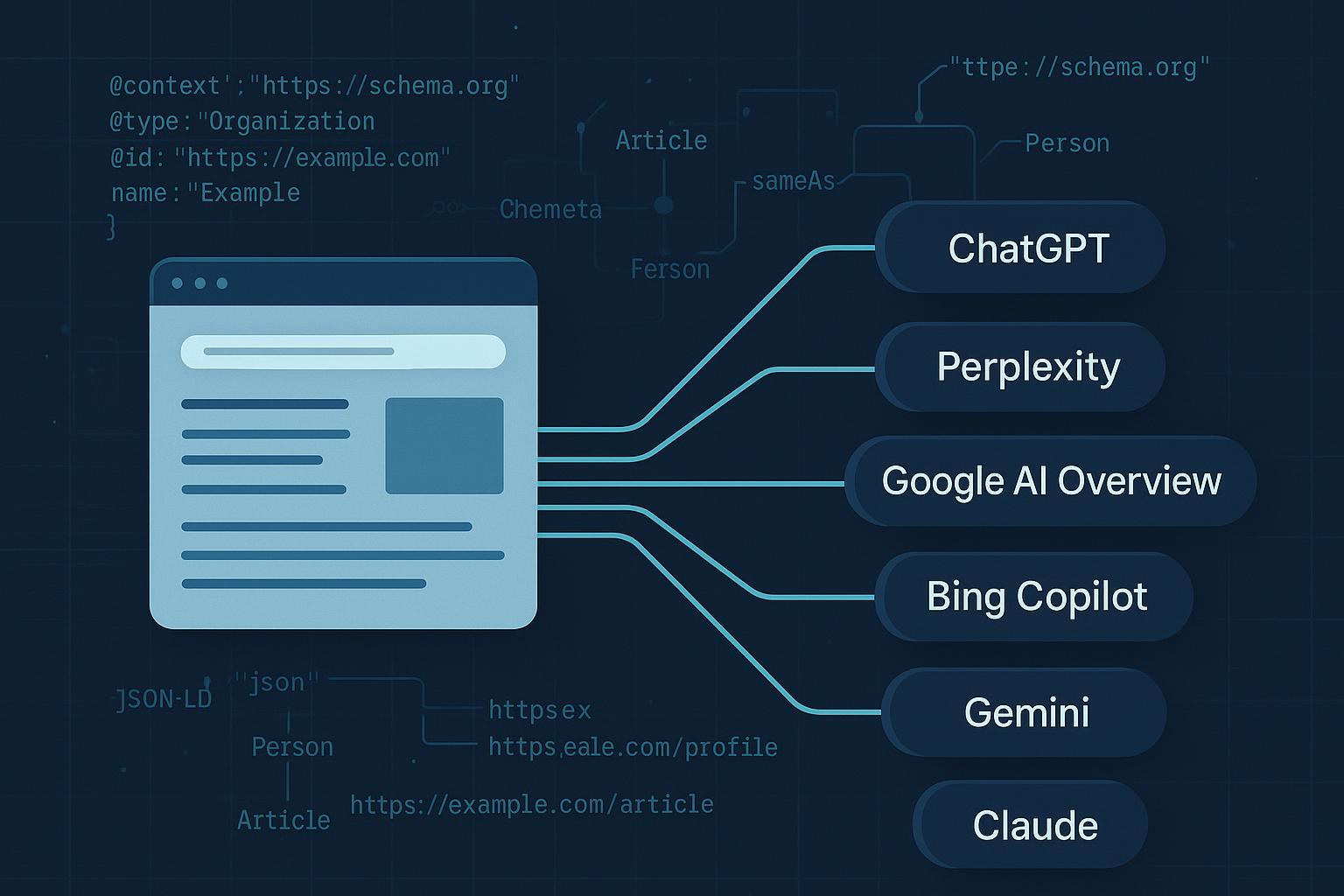

If your goal in 2025 is to be the source AI engines cite—across Google’s AI Overviews, Bing Copilot, Perplexity, Gemini, and ChatGPT—the fastest path is not magic keywords. It’s well-implemented structured data and ruthlessly clear semantics that make your pages easy to ground, quote, and trust. Based on field work through 2024–2025, I’ll share what actually moves the needle: how engines pick citations, the markup that helps, the content patterns that get reused, and a pragmatic workflow to test and measure results. No silver bullets—these are enabling practices that, when paired with authoritative content, materially improve your odds.

What engines actually rely on to pick citations

- Google AI Overviews: Google states that to be eligible as a supporting link, a page must be indexed and eligible to be shown in Search with a snippet. There are no additional technical requirements beyond standard Search fundamentals. Structured data should match the on-page content, and it helps Google understand what the page means. See Google’s official guidance in the Search Central article “AI features and your website” (2025).

- Bing Copilot: Microsoft explains that Copilot grounds its answers in top Bing results by combining a metaprompt with search context. It shows citations both inline and in a “Learn more” section. Details are outlined in the Bing blog post “Introducing Copilot Search in Bing” (2025).

- OpenAI/ChatGPT: If you want exposure in ChatGPT search, make sure your robots.txt allows OAI-SearchBot, the crawler OpenAI uses to surface sites in search answers. GPTBot is a separate crawler that collects content for model training. OpenAI documents its user agents, robots.txt controls, and IP verification on the OpenAI “GPTBot” page (2025). ChatGPT may include citations when it uses external content, but there’s no public spec guaranteeing consistent citation formatting.

- Perplexity: The product emphasizes real-time answers with visible source links, and transparency is a core behavior. See the Perplexity Hub post on Enterprise Pro and product transparency (2025) for positioning. Treat discoverability and trust signals as you would for search.

- Gemini: Google’s Gemini app and API support grounding with Google Search and can surface web citations. For developer use cases, review the Gemini API documentation “Grounding with Google Search” (2025) to see how grounded responses map segments of answer text back to the web sources they cite.
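To see why self-contained, quotable sentences matter in Gemini’s case, it helps to look at how a grounded response ties answer text to sources. The sketch below walks a dict shaped like the `groundingMetadata` object described in the Gemini API grounding documentation (`groundingChunks` holding web `uri`/`title`, and `groundingSupports` mapping a text `segment` to chunk indices) and appends numbered citation markers. The function name and the sample metadata are hypothetical and hand-written; check field names and the offset unit against the current API reference before relying on them.

```python
def add_citations(text: str, metadata: dict) -> str:
    """Append [n] markers after each grounded segment and list the cited sources.

    `metadata` is assumed to follow the REST `groundingMetadata` shape. `endIndex`
    is treated as a character offset; confirm the offset unit for non-ASCII text.
    """
    supports = sorted(
        metadata.get("groundingSupports", []),
        key=lambda s: s["segment"]["endIndex"],
        reverse=True,  # insert from the end so earlier offsets stay valid
    )
    for support in supports:
        end = support["segment"]["endIndex"]
        markers = "".join(f"[{i + 1}]" for i in support.get("groundingChunkIndices", []))
        text = text[:end] + markers + text[end:]
    sources = [
        f"[{i + 1}] {chunk['web']['title']} <{chunk['web']['uri']}>"
        for i, chunk in enumerate(metadata.get("groundingChunks", []))
        if "web" in chunk
    ]
    return text + "\n\nSources:\n" + "\n".join(sources)


if __name__ == "__main__":
    first = "Structured data helps engines parse pages."
    answer = first + " Selection still depends on content quality."
    # Hand-written, hypothetical metadata; real responses come from the Gemini API.
    metadata = {
        "groundingChunks": [
            {"web": {"uri": "https://example.com/guide", "title": "example.com"}},
            {"web": {"uri": "https://example.org/study", "title": "example.org"}},
        ],
        "groundingSupports": [
            {"segment": {"endIndex": len(first)}, "groundingChunkIndices": [0]},
            {"segment": {"endIndex": len(answer)}, "groundingChunkIndices": [0, 1]},
        ],
    }
    print(add_citations(answer, metadata))
```

Each support covers a span of answer text matched to one or more sources, so a sentence that carries its own claim and context maps cleanly onto a citation. That is a plausible reading of the data structure, not a documented ranking rule.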

Bottom line: eligibility, crawlability, and clarity come first. Structured data and semantics strengthen machine understanding, but content quality, authority, and quotability drive selection.

Minimum viable technical readiness (foundation)

Before polishing markup, make sure your pages can be seen and quoted.

- Crawlability and indexing

  - Return a 200 status for canonical URLs, and avoid heavy client-side rendering that hides content from bots.

  - Google snippet eligibility: check pages with the URL Inspection tool and make sure they meet Google’s technical requirements.

- Robots and crawler access

  - Allow OAI-SearchBot (ChatGPT search), GPTBot (OpenAI training), and ClaudeBot if you want to be considered in those ecosystems. Confirm access via server logs and user agent strings; because user agents can be spoofed, also verify against published IP ranges where the provider offers them.

- Entity disambiguation and consistency

  - Use consistent names for your organization, products, and people across sites and profiles, and reference authoritative IDs (e.g., Wikidata) via sameAs.

- Timestamp hygiene

  - Keep datePublished and dateModified accurate. Volatile topics need a visible “last updated” note.
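To confirm that your robots.txt rules do what you intend for each crawler, you can test them offline with Python’s standard-library `urllib.robotparser`. The robots.txt content, the URLs, and the helper name `check_access` are hypothetical examples. This only evaluates your rules; it cannot tell you whether a crawler actually visits, so keep checking server logs.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: search crawlers allowed, GPTBot kept out of /private/.
ROBOTS_TXT = """\
User-agent: OAI-SearchBot
Allow: /

User-agent: GPTBot
Disallow: /private/

User-agent: ClaudeBot
Allow: /

User-agent: *
Disallow: /admin/
"""


def check_access(robots_txt: str, agents: list[str], urls: list[str]) -> dict[tuple[str, str], bool]:
    """Return {(agent, url): allowed} for every agent/URL pair."""
    parser = RobotFileParser()
    # parse() also records a load time, so can_fetch() works without read().
    parser.parse(robots_txt.splitlines())
    return {(agent, url): parser.can_fetch(agent, url) for agent in agents for url in urls}


if __name__ == "__main__":
    results = check_access(
        ROBOTS_TXT,
        agents=["OAI-SearchBot", "GPTBot", "ClaudeBot", "SomeOtherBot"],
        urls=[
            "https://example.com/articles/ai-citations-2025",
            "https://example.com/private/draft",
            "https://example.com/admin/",
        ],
    )
    for (agent, url), allowed in results.items():
        print(f"{agent:14} {'allow' if allowed else 'block'}  {url}")
```

Pass the short product token (for example `GPTBot`) rather than a full browser-style user agent string, because `robotparser` matches agents on the text before the first slash.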

Semantic clarity patterns that win citations

Most engines extract and ground to passages that are self-contained, specific, and trustworthy. From experience, the following patterns consistently improve passage reuse:

- Answer-first structure

  - Place the definition, key number, or conclusion in the first 1–2 paragraphs, then follow with evidence, method, and constraints.

- Clean heading hierarchy

  - Use descriptive H2/H3 headings and avoid clever phrasing. Engines prefer headings that explain “what” and “how.”

- Bullet lists and step-by-step sections

  - Summaries, checklists, and numbered steps are highly quotable. Keep each bullet self-contained.

- Evidence-first claims

  - When you present a number or comparative statement, cite the primary source and include the year. Engines favor grounded content.

- FAQs and definitions

  - Add an FAQ section for common queries, with succinct answers that stand alone.

- Passage-level quotability

  - Write 2–4 sentence paragraphs that can be lifted without losing context. Avoid burying facts inside long narratives.
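The paragraph-length and answer-first guidance above can be approximated with a rough automated check. The sketch below is a hypothetical triage heuristic, not how any engine scores passages. It splits plain text into paragraphs on blank lines and counts sentences with a naive regex. It then flags paragraphs outside the 2–4 sentence range and treats a digit in the opening paragraph as a crude proxy for a key number stated up front.

```python
import re

# Naive sentence end: ., ! or ? followed by whitespace or end of text.
# Abbreviations such as "e.g." will be miscounted; use this for triage only.
SENTENCE_END = re.compile(r"[.!?](?=\s|$)")


def sentence_count(paragraph: str) -> int:
    return len(SENTENCE_END.findall(paragraph.strip()))


def audit_passages(text: str, min_sentences: int = 2, max_sentences: int = 4) -> dict:
    """Flag paragraphs outside the target length and check for a number up front."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    counts = [sentence_count(p) for p in paragraphs]
    flagged = [
        (index, n)  # (paragraph index, sentence count)
        for index, n in enumerate(counts)
        if not min_sentences <= n <= max_sentences
    ]
    opening = paragraphs[0] if paragraphs else ""
    return {
        "paragraphs": len(paragraphs),
        "flagged": flagged,
        "opening_has_number": bool(re.search(r"\d", opening)),
    }


if __name__ == "__main__":
    sample = (
        "Structured data helps engines parse a page. It does not guarantee citations.\n\n"
        "Short paragraph.\n\n"
        "One. Two. Three. Four. Five."
    )
    print(audit_passages(sample))
```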

Structured data that matters in 2025 (with examples)

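Use JSON-LD and keep markup aligned with visible content. Prioritize Organization, Person (author), Article/BlogPosting, Product/Offer, FAQPage, and HowTo where relevant, and include @id and sameAs for entity clarity. Since 2023, Google shows FAQ rich results only for well-known, authoritative government and health sites and no longer shows HowTo rich results, so treat those two types as aids to machine understanding rather than routes to a search feature. The URLs, names, and IDs in the examples below are placeholders; replace them with your own.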

Article/BlogPosting with author and entity disambiguation

```json
{
  "@context": "https://schema.org",
  "@type": "Article",
  "@id": "https://example.com/articles/ai-citations-2025#article",
  "mainEntityOfPage": "https://example.com/articles/ai-citations-2025",
  "headline": "How to leverage structured data and semantic clarity to win AI engine citations in 2025",
  "description": "Field-tested practices for earning citations across AI engines with structured data and semantic clarity.",
  "datePublished": "2025-10-04",
  "dateModified": "2025-10-04",
  "author": {
    "@type": "Person",
    "@id": "https://example.com/#author",
    "name": "Alex Rivera",
    "sameAs": [
      "https://www.linkedin.com/in/alexrivera",
      "https://wikidata.org/wiki/Q42"
    ]
  },
  "publisher": {
    "@type": "Organization",
    "@id": "https://example.com/#org",
    "name": "Example Media",
    "logo": {
      "@type": "ImageObject",
      "url": "https://example.com/assets/logo.png"
    },
    "sameAs": [
      "https://twitter.com/example",
      "https://crunchbase.com/organization/example-media"
    ]
  }
}
```

Organization baseline

```json
{
  "@context": "https://schema.org",
  "@type": "Organization",
  "@id": "https://example.com/#org",
  "name": "Example Media",
  "url": "https://example.com/",
  "sameAs": [
    "https://www.linkedin.com/company/example-media",
    "https://wikidata.org/wiki/Q123456"
  ]
}
```
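Because the Article’s publisher and the Organization baseline share the same @id, you can also publish the entities together in a single @graph and link them by @id instead of repeating their properties. The Python sketch below assembles such a graph from the example values above. The function name `build_graph` is hypothetical, and whether you nest entities or reference them by @id is a design choice; both are valid JSON-LD.

```python
import json

ORG_ID = "https://example.com/#org"
AUTHOR_ID = "https://example.com/#author"
PAGE_URL = "https://example.com/articles/ai-citations-2025"


def build_graph() -> dict:
    """Declare each entity once and link them by @id."""
    organization = {
        "@type": "Organization",
        "@id": ORG_ID,
        "name": "Example Media",
        "url": "https://example.com/",
        "sameAs": ["https://www.linkedin.com/company/example-media"],
    }
    author = {
        "@type": "Person",
        "@id": AUTHOR_ID,
        "name": "Alex Rivera",
        "sameAs": ["https://www.linkedin.com/in/alexrivera"],
    }
    article = {
        "@type": "Article",
        "@id": f"{PAGE_URL}#article",
        "mainEntityOfPage": PAGE_URL,
        "headline": "How to leverage structured data and semantic clarity to win AI engine citations in 2025",
        "datePublished": "2025-10-04",
        "dateModified": "2025-10-04",
        # References, not copies: a JSON-LD processor resolves them to the nodes above.
        "author": {"@id": AUTHOR_ID},
        "publisher": {"@id": ORG_ID},
    }
    return {"@context": "https://schema.org", "@graph": [organization, author, article]}


if __name__ == "__main__":
    print(json.dumps(build_graph(), indent=2))
```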

FAQPage for quotable answers

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "@id": "https://example.com/articles/ai-citations-2025#faq",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What structured data types help AI citations?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Article/BlogPosting, Organization, Person, FAQPage, HowTo, and Product/Offer where relevant. Always align with on-page content."
      }
    },
    {
      "@type": "Question",
      "name": "Does structured data guarantee inclusion in AI Overviews?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "No. Structured data enables understanding and eligibility, but selection depends on content quality, authority, and quotability."
      }
    }
  ]
}
```
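One practical way to keep FAQPage markup in sync with the page is to generate it from the same question-and-answer data that renders the visible FAQ. This Python sketch assumes a hypothetical list of (question, answer) pairs as that single source of truth and builds the FAQPage object shown above; `faq_page` and `FAQ` are illustrative names.

```python
import json


def faq_page(page_url: str, pairs: list[tuple[str, str]]) -> dict:
    """Build FAQPage JSON-LD from the same (question, answer) pairs the page renders."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "@id": f"{page_url}#faq",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Hypothetical single source of truth, also used to render the visible FAQ.
FAQ = [
    (
        "Does structured data guarantee inclusion in AI Overviews?",
        "No. Structured data enables understanding and eligibility, but selection "
        "depends on content quality, authority, and quotability.",
    ),
]

if __name__ == "__main__":
    markup = faq_page("https://example.com/articles/ai-citations-2025", FAQ)
    print(json.dumps(markup, indent=2, ensure_ascii=False))
```

If the page template renders its FAQ from the same `FAQ` list, editors can only change an answer in one place, so markup and visible text cannot drift apart.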

Implementation notes:

- Place the JSON-LD script in the head or just before the closing body tag.

- Validate syntax and vocabulary; ensure visible text mirrors markup to avoid trust issues.

- Use @id for persistent entity identifiers; prefer canonical URLs.
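To catch drift between markup and page copy, a simple check is to confirm that every FAQ question and answer in the JSON-LD also appears in the page’s visible text. The sketch below is a hypothetical helper that assumes you already have the rendered text (for example, extracted from your HTML). It uses exact substring matching after whitespace and case normalization, so it will also flag harmless rewordings.

```python
import re


def _normalize(text: str) -> str:
    # Collapse whitespace and case so formatting differences don't count as mismatches.
    return re.sub(r"\s+", " ", text).strip().lower()


def unmatched_faq_text(jsonld: dict, visible_text: str) -> list[str]:
    """Return FAQPage question/answer strings that do not appear in the visible page text."""
    page = _normalize(visible_text)
    missing = []
    for item in jsonld.get("mainEntity", []):
        answer = item.get("acceptedAnswer", {}).get("text", "")
        for snippet in (item.get("name", ""), answer):
            if snippet and _normalize(snippet) not in page:
                missing.append(snippet)
    return missing
```

An empty list means every marked-up string was found on the page; anything returned is a candidate mismatch to review by hand.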

Engine-specific refinements (practical)

- Google AI Overviews

  - Ensure indexing and snippet eligibility. Align JSON-LD with visible content (Article, FAQPage, HowTo, Product/Offer). Strengthen E-E-A-T signals with author bios, editorial standards, and references.

- Bing Copilot

  - Write clean, quotable passages and cite primary sources directly in your content. Include a “last updated” note for volatile topics. Use Organization and Person schema for provenance.

- Perplexity

  - Put key facts and summary bullets near the top. Keep canonical URLs clean and avoid parameter-based duplicates. Clear titles and meta descriptions improve discoverability.

- Gemini (app/API)

  - Segment content with headings and place explicit references near claims. If you build apps, use Search grounding, and make fragments easy to quote.

- ChatGPT/Claude ecosystems

  - Stay opted in via robots.txt (allow OAI-SearchBot and GPTBot for OpenAI, ClaudeBot for Anthropic), keep pages fast and lightweight for easier ingestion, and avoid heavy scripts that block crawler parsing.
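Parameter duplicates usually come from tracking or session parameters appended to the same page. As a rough audit aid, the Python sketch below normalizes URLs by dropping an assumed list of tracking parameters, sorting the rest, and removing fragments. It then groups crawled URLs that collapse to the same form. Which parameters are safe to drop depends on your site, so treat `TRACKING_PREFIXES` and `TRACKING_PARAMS` as hypothetical defaults to adjust.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed tracking parameters; adjust for your analytics setup.
TRACKING_PREFIXES = ("utm_",)
TRACKING_PARAMS = {"gclid", "fbclid", "ref"}


def normalize_url(url: str) -> str:
    """Lowercase scheme/host, drop tracking params and fragment, sort remaining params."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS and not key.startswith(TRACKING_PREFIXES)
    ]
    return urlunsplit((
        parts.scheme.lower(),
        parts.netloc.lower(),
        parts.path or "/",
        urlencode(sorted(query)),
        "",  # fragments never reach the server, so drop them
    ))


def duplicate_groups(urls: list[str]) -> dict[str, list[str]]:
    """Group URLs that normalize to the same address; return only groups with 2+ members."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return {normalized: members for normalized, members in groups.items() if len(members) > 1}
```

Each returned group is a set of crawlable variants that should resolve to one canonical URL, through a rel=canonical tag, a redirect, or by not linking the tracked variants internally.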

Implementation workflow (2025)

- Audit

  - Indexability: Crawl with your preferred site auditor and fix 4xx/5xx errors, canonical issues, and rendering blockers. Confirm snippet eligibility.

  - Content structure: Score pages on answer-first structure, headings, list density, and passage quotability. Identify core entities and missing disambiguation.

  - Schema inventory: Extract the current JSON-LD and validate it.

- Enrich

  - Add or upgrade Organization, Person, Article/BlogPosting, FAQPage/HowTo, and Product/Offer markup. Include @id, sameAs, and correct timestamps.

  - E-E-A-T: Strengthen author bios, credentials, references, editorial policy, and corrections.

- Validate

  - Use the Schema Markup Validator (schema.org, 2025) for vocabulary correctness and the Google Rich Results Test (2025) for feature eligibility.

- Multi-engine testing

  - Manually query target questions across Google (AIO), Bing Copilot, Perplexity, Gemini, and ChatGPT. Note which URLs appear and capture the cited passages.

  - Passage tuning: Revise headings, summaries, and FAQ entries to sharpen quotability, and avoid keyword stuffing.

- Monitor and iterate

  - Track AIO prevalence and your brand’s presence; corroborate with manual checks to avoid tool bias.

  - Maintain freshness: schedule updates for volatile topics and reflect changes in schema and timestamps.
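For the schema inventory step, a site auditor will usually do this for you, but a minimal standard-library sketch shows the idea. It pulls every `application/ld+json` script out of a page’s HTML, tries to parse it, and records the declared @type values or the JSON error. The class and function names are hypothetical. This checks JSON syntax only; vocabulary correctness and feature eligibility still need the Schema Markup Validator and the Rich Results Test.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw contents of <script type="application/ld+json"> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[str] = []
        self._in_jsonld = False

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data  # script content may arrive in several chunks


def schema_inventory(html: str) -> list[dict]:
    """Parse each JSON-LD block; report its @type values or the JSON error that broke it."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    extractor.close()
    report = []
    for raw in extractor.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            report.append({"ok": False, "error": str(exc)})
            continue
        if isinstance(data, dict):
            nodes = data.get("@graph", [data])
        elif isinstance(data, list):
            nodes = data
        else:
            nodes = []
        report.append({"ok": True, "types": [n.get("@type") for n in nodes if isinstance(n, dict)]})
    return report
```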

Tooling note: For monitoring AI citation visibility across engines, you can use platforms that snapshot AI answers and source lists. One option is Geneo, which focuses on AI search visibility and citation monitoring across ChatGPT, Perplexity, and Google AI Overviews. Disclosure: Geneo is our product.

Measurement framework: prove impact over 4–8 weeks

Because there’s no conclusive public evidence that markup alone guarantees citation uplift, treat experiments like controlled studies.

- Baseline

  - Define a fixed query set per engine (e.g., 50 questions per product category) and document current citation incidence.

- Intervention

  - Implement schema upgrades and semantic refactoring (answer-first structure, quotable bullets, explicit references). Limit changes to a defined batch of pages so you can attribute impact.

- Post-measure

  - Track results for 4–8 weeks. Measure citation frequency per engine, passage extraction rate, the share of citations going to target pages vs. other pages on your domain, and time-to-citation after updates.

- Controls and confounders

  - Account for seasonality, promotions, and new backlinks. If possible, keep a matched control set of pages that you leave unchanged.
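The baseline, post-measure, and control steps reduce to simple arithmetic once each manual or tool-based check is logged. The Python sketch below assumes a hypothetical log format: each `Check` records the engine, the query, the period, whether the query targets changed (test) or unchanged (control) pages, and whether your domain was cited. It computes citation incidence per group and period, then subtracts the control group’s change from the test group’s change. That is a basic difference-in-differences estimate, one reasonable way to net out seasonality rather than a required method.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Check:
    engine: str   # e.g. "perplexity"
    query: str
    period: str   # "baseline" or "post"
    group: str    # "test" (changed pages) or "control" (unchanged pages)
    cited: bool   # did the answer cite one of your URLs?


def incidence(checks: list[Check]) -> dict[tuple[str, str, str], float]:
    """Share of checks with a citation, keyed by (engine, group, period)."""
    hits: dict[tuple[str, str, str], int] = defaultdict(int)
    totals: dict[tuple[str, str, str], int] = defaultdict(int)
    for c in checks:
        key = (c.engine, c.group, c.period)
        totals[key] += 1
        hits[key] += c.cited  # bool counts as 0 or 1
    return {key: hits[key] / totals[key] for key in totals}


def uplift(checks: list[Check], engine: str) -> float:
    """Change in test incidence minus change in control incidence for one engine."""
    rate = incidence(checks)
    test_change = rate[(engine, "test", "post")] - rate[(engine, "test", "baseline")]
    control_change = rate[(engine, "control", "post")] - rate[(engine, "control", "baseline")]
    return test_change - control_change
```

With only a few dozen queries per group, small differences can be noise, so compare the estimate across engines and several weekly snapshots before drawing conclusions.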

Evidence you can cite (context and constraints)

AI Overviews prevalence grew materially in early 2025, increasing the number of queries where citations matter. Semrush reported 13.14% of queries triggered AI Overviews in March 2025 on a 10M+ keyword dataset, per the Semrush “AI Overviews study” (2025). seoClarity observed similar surges—about 10.4% of US desktop keywords in March 2025—with a ~156.7% increase from September 2024 to March 2025, summarized in their seoClarity “AI Overviews impact research” (2025).

Caveat: Studies show engines often cite multiple sources and not strictly the top-ranking pages. That’s why quotable passages, entity clarity, and explicit references on your pages matter.

Common pitfalls and trade-offs

- Over-markup

  - Unsupported or misleading schema produces errors and erodes trust. Google does not reward inaccurate markup and can apply a manual action for spammy structured data.

- Mismatch with visible text

  - Engines cross-check markup against the page; discrepancies undermine eligibility and credibility.

- Entity ambiguity

  - Missing @id/sameAs can lead engines to cite competitors with clearer identity graphs.

- Thin or derivative pages

  - AI engines prefer substantive sources with references; thin summaries rarely earn citations.

- Expectation gap

  - Treat schema and semantics as enablers, not guarantees. Content quality and authority dominate selection.

- llms.txt experiments

  - The proposed /llms.txt standard had not been adopted by major providers as of mid/late 2025. It’s fine to experiment, but don’t expect impact yet. See PPC Land’s overview, “llms.txt adoption stalls” (2025).

30-day action plan

Week 1: Audit

- Crawl key sections; fix technical blockers and snippet-eligibility issues.

- Inventory schema; identify gaps in Organization, Person, Article, FAQPage/HowTo.

- Map entities; add @id and sameAs targets.

Week 2: Enrich and refactor

- Implement JSON-LD updates aligned with visible text.

- Rewrite pages for answer-first clarity; add FAQs and quotable bullets.

- Add author bios, editorial standards, and references.

Week 3: Validate and test

- Validate schema and run the Rich Results Test.

- Query target questions across engines; collect snapshots of citations.

- Tune passages and headings based on what gets reused.

Week 4: Monitor and measure

- Track citation frequency, passage extraction rate, and time-to-citation.

- Schedule updates for volatile topics; maintain timestamps.

- Document learnings and roll improvements site-wide.
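Time-to-citation is easiest to track if you log the date of each page update and the first date a monitoring snapshot or manual check shows that page cited. This sketch assumes two hypothetical dictionaries keyed by URL. Pages with no citation observed on or after their update are reported as pending rather than dropped silently.

```python
from datetime import date
from statistics import median


def days_to_citation(updated: dict[str, date], first_cited: dict[str, date]) -> dict[str, int]:
    """Days from each page's update to its first observed citation on or after that date."""
    return {
        url: (first_cited[url] - updated_on).days
        for url, updated_on in updated.items()
        if url in first_cited and first_cited[url] >= updated_on
    }


def summarize(updated: dict[str, date], first_cited: dict[str, date]) -> dict:
    days = days_to_citation(updated, first_cited)
    return {
        "cited": len(days),
        # Pages with no citation observed on or after their update date.
        "pending": len(updated) - len(days),
        "median_days": median(days.values()) if days else None,
    }


if __name__ == "__main__":
    # Hypothetical log entries for illustration only.
    updated = {"/guide": date(2025, 10, 1), "/faq": date(2025, 10, 1), "/pricing": date(2025, 10, 3)}
    first_cited = {"/guide": date(2025, 10, 8), "/faq": date(2025, 10, 22)}
    print(summarize(updated, first_cited))
```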

Extend your practice

If you’re new to the concept of Generative Engine Optimization (GEO), we share practical workflows and experiments on our blog. Start with our overview of Generative Engine Optimization to see how teams operationalize multi-engine visibility.

Final reminders

- Keep markup honest and aligned with visible content.

- Write for quotability: answer-first, clean headings, bullets, and explicit references.

- Validate, test across engines, and monitor over time—freshness and provenance signals matter as much as schema.

- Expect variability by engine and query type; iterate based on observed citation behavior.