How to Optimize Content for AI Citations: Step-by-Step Guide

Learn actionable steps to boost your site's AI citations and generative search visibility. Follow this step-by-step tutorial to make your content citation-ready.

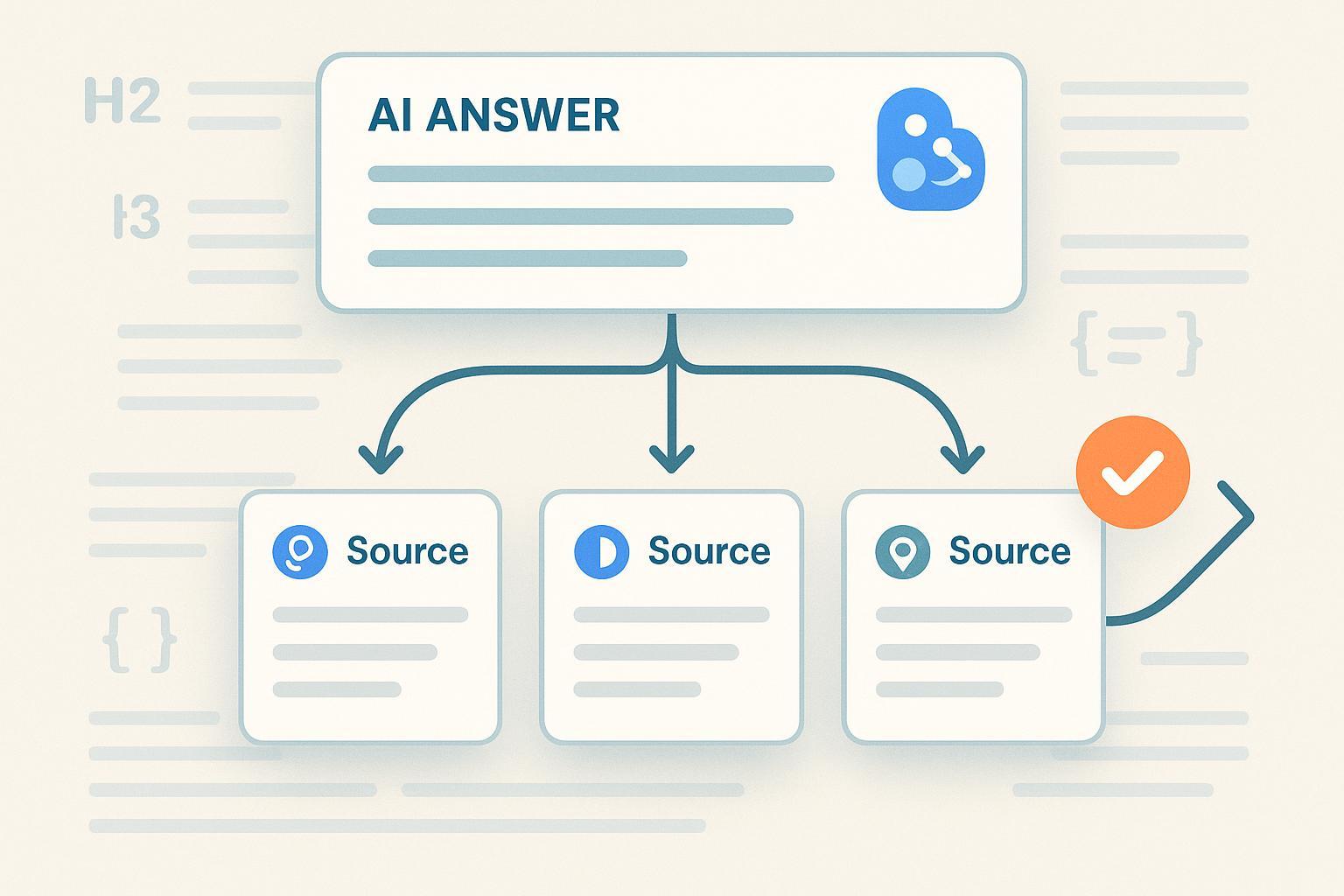

If you want your pages to be cited inside AI Overviews, Bing Copilot answers, or Perplexity results, you’re in the right place. This hands-on guide walks you through exactly how to make your content citation-ready and visible in generative search—updated for 2025 practices. You’ll set a baseline, structure your pages for extraction, add the right schema, shore up technical SEO, and create a simple monitoring loop to keep improving.

What you’ll need:

- Access to your CMS or site repo

- Ability to edit templates (for bylines, “last updated,” and schema)

- Access to Google Search Console and Bing Webmaster Tools (or your SEO platform)

- 90–120 minutes for an initial pass; recurring monthly tune-ups take 30–45 minutes

Before we begin, two key definitions:

- AI citations: The linked attributions shown within AI-generated answers (e.g., Google AI Overviews, Bing Copilot) and answer engines (Perplexity).

- Generative search visibility: The likelihood your content gets surfaced, summarized, and linked to by AI-driven search features.

A quick reality check: Google does not offer any special “opt-in” for AI Overviews. Focus on helpfulness, clarity, and technical readiness, following Google’s guidance in “AI features and your website” and its 2025 best-practices post, “Succeeding in AI search.”

Step 1: Audit your current AI citations and visibility baseline

Your first pass is a simple, repeatable audit. You’ll check a handful of priority queries and pages across major AI surfaces.

- Google Search → look for AI Overviews on your target queries and note any source links.

- Bing (Copilot/AI answers) → examine the inline citations and “learn more” source cards.

- Perplexity → run the same queries and inspect the Sources and Steps views.

Recommended sample set:

- 5–10 high-intent queries (commercial or decision-stage)

- 3–5 educational queries where your guides should be referenced

- Your brand query and a few product/solution queries

Practical example workflow: You can centralize this audit using Geneo. Disclosure: Geneo is our product. In one place, you can log which pages are cited on Google AI Overviews, Bing Copilot, and Perplexity for your chosen queries, and track how that changes over time.

How to test success

- You’ve captured a snapshot of which of your pages are cited across platforms for 10–15 core queries.

- You can point to the specific URL(s) cited per platform and any notable gaps (e.g., Bing cites you, Google doesn’t).
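If you keep the baseline in a script or spreadsheet export rather than a dedicated tool, a very small data structure is enough. The Python sketch below is one hypothetical way to do it: each observation is a (query, platform, cited URL or None) tuple, and the helper lists queries cited on some platforms but not others, plus queries cited nowhere. The platform labels, queries, and example.com URLs are placeholders, not real audit data.

```python
from collections import defaultdict

# Hypothetical platform labels; rename to match how your team logs each surface.
PLATFORMS = ("google_aio", "bing_copilot", "perplexity")

# Placeholder observations: (query, platform, your cited URL or None).
OBSERVATIONS = [
    ("what is generative search visibility", "google_aio", None),
    ("what is generative search visibility", "bing_copilot",
     "https://www.example.com/blog/ai-citations-guide"),
    ("what is generative search visibility", "perplexity",
     "https://www.example.com/blog/ai-citations-guide"),
    ("ai citation tracking", "google_aio", None),
    ("ai citation tracking", "bing_copilot", None),
    ("ai citation tracking", "perplexity", None),
]


def build_baseline(rows):
    """Group observations into {query: {platform: cited URL or None}}."""
    baseline = defaultdict(dict)
    for query, platform, url in rows:
        baseline[query][platform] = url
    return dict(baseline)


def find_gaps(baseline):
    """Split queries into partial coverage (cited somewhere, not everywhere) and uncited."""
    partial, uncited = [], []
    for query, by_platform in sorted(baseline.items()):
        citing = [p for p in PLATFORMS if by_platform.get(p)]
        missing = [p for p in PLATFORMS if not by_platform.get(p)]
        if citing and missing:
            partial.append((query, citing, missing))
        elif not citing:
            uncited.append(query)
    return partial, uncited


if __name__ == "__main__":
    partial, uncited = find_gaps(build_baseline(OBSERVATIONS))
    for query, citing, missing in partial:
        print(f"{query}: cited on {', '.join(citing)}; not on {', '.join(missing)}")
    print("Not cited anywhere:", uncited)
```

The "partial" list is usually the most actionable part of a baseline, because one platform already treats the page as citable.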

What if it fails?

- If you don’t see AI Overviews on Google for your query, try slight variations and check different geographies if possible.

- If nothing cites you, that’s okay—this guide is designed to help you earn those citations. Keep your baseline notes as a “before” picture.

Step 2: Make your pages answer-extraction friendly

AI systems prefer content that clearly answers a focused question and provides scannable support. Two moves make the biggest difference:

- Use question-led headings (H2/H3)
  - Example: “What is generative search visibility?”
  - Immediately follow the heading with a 40–60 word concise answer block.

- Support with lists, tables, and clear definitions
  - Break complex topics into bullet steps or short tables.
  - Define terms once and use them consistently.

This structure aligns with the emphasis on clear, helpful formatting in Google’s 2025 post “Succeeding in AI search” and complements the content-organizing framework published by Search Engine Land.

Add trust and clarity cues

- Visible byline with author expertise

- “Last updated” date near the top

- Brief summary or key definitions above the fold

How to test success

- The “read-back test”: Ask a teammate to skim your headings and first paragraphs. Can they repeat your short answers without scrolling far?

- Paste your short answer block into a snippet simulator or a simple text-only view. Does it stand alone?
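To make the length check repeatable across many pages, you can script it. The Python sketch below counts words in a draft answer block and compares the count with the 40–60 word target used in this guide. The tokenizing rule (letters and digits, with apostrophes or hyphens joining parts of one word) is an assumption; other tools may count slightly differently.

```python
import re

MIN_WORDS, MAX_WORDS = 40, 60  # target range for an on-page answer block

# A "word" is a run of letters/digits, optionally joined by apostrophes or hyphens,
# so "AI-generated" and "isn't" each count once.
WORD = re.compile(r"[A-Za-z0-9]+(?:['’-][A-Za-z0-9]+)*")


def word_count(text: str) -> int:
    return len(WORD.findall(text))


def check_answer_block(text: str) -> str:
    """Return 'ok', 'too short', or 'too long' for the 40-60 word target."""
    n = word_count(text)
    if n < MIN_WORDS:
        return "too short"
    if n > MAX_WORDS:
        return "too long"
    return "ok"


draft = ("Generative search visibility is the likelihood your content is surfaced "
         "and cited inside AI-generated answers across search engines.")
print(word_count(draft), check_answer_block(draft))  # 18 too short
```

A one-sentence definition like this works as a lead-in, but on the page it would need a supporting sentence or two to reach the target range.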

What if it fails?

- Tighten the answer block to one paragraph of 40–60 words.

- Promote supporting bullets closer to the answer—don’t bury them.

- Rename vague headings into natural questions.

Note: For deeper reading on strategy beyond this tutorial, see our internal primer on Generative Engine Optimization insights.

Step 3: Implement and validate structured data (JSON-LD)

Structured data helps machines understand your page. It does not guarantee inclusion in AI features, but it improves clarity.

Suggested types by page purpose

- Guides and explainers: Article and, where applicable, FAQPage

- Tutorials: HowTo

- Company/about pages: Organization and Person (for authors)

Minimal FAQPage example (nest questions/answers under mainEntity):

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What is generative search visibility?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Generative search visibility is the likelihood your content is surfaced and cited inside AI-generated answers across search engines."
      }
    },
    {
      "@type": "Question",
      "name": "How do I get cited by AI Overviews?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "There is no opt-in. Focus on helpful, clear content, answer-focused structure, valid schema, and strong technical SEO."
      }
    }
  ]
}
```
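If your Q&A pairs already live in your CMS, generating this markup avoids hand-editing mistakes. The Python sketch below builds the same FAQPage shape as the example above from (question, answer) pairs and wraps it in a JSON-LD script tag. The function names are hypothetical, and you would still wire the output into your own templates.

```python
import json


def faq_page_jsonld(pairs):
    """Build a schema.org FAQPage dict from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def as_script_tag(data):
    """Wrap a JSON-LD dict in a <script type="application/ld+json"> element."""
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # Escape "<" as \u003c so text like "</script>" inside an answer cannot end the tag
    # early; JSON parsers decode the escape back to "<".
    payload = payload.replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


pairs = [
    ("What is generative search visibility?",
     "Generative search visibility is the likelihood your content is surfaced "
     "and cited inside AI-generated answers across search engines."),
]
print(as_script_tag(faq_page_jsonld(pairs)))
```

Keep the questions and answers in the markup identical to the visible text on the page, so the structured data describes what readers actually see.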

Article with author and dates (attach to the page’s main content):

```json
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "Optimizing Content for AI Citations",
  "author": {
    "@type": "Person",
    "name": "Your Name",
    "url": "https://www.example.com/authors/your-name"
  },
  "datePublished": "2025-09-10",
  "dateModified": "2025-10-07",
  "image": [
    "https://www.example.com/images/ai-citations-cover.jpg"
  ],
  "url": "https://www.example.com/blog/ai-citations-guide"
}
```

Organization and Person basics (entity signals):

```json
{
  "@context": "https://schema.org",
  "@type": "Organization",
  "name": "Your Brand",
  "url": "https://www.example.com/",
  "sameAs": [
    "https://www.linkedin.com/company/your-brand",
    "https://x.com/yourbrand"
  ]
}
```

How to test success

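- Validate with Google’s Rich Results Test and Schema.org’s Schema Markup Validator. The Rich Results Test only covers types eligible for Google rich results (Google retired HowTo rich results in 2023), so rely on the Schema Markup Validator for general schema.org validity.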

- Ensure no critical errors; address warnings where they improve clarity.

What if it fails?

- Confirm the JSON-LD is on the canonical URL and that the page isn’t blocked by robots.txt or a noindex robots meta tag.

- Add missing required properties; include recommended ones when practical.

- Remember: per Google’s “AI features and your website,” structured data aids understanding but doesn’t guarantee inclusion in AI features.
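Validators catch syntax errors, but they won’t flag house-style gaps such as a missing image or a “last updated” date that predates publication. The Python sketch below is a hypothetical pre-flight check you could run on Article JSON-LD before publishing. Its property checklist simply mirrors the Article example in this guide; it is an assumption, not Google’s official required list.

```python
from datetime import date

# Article types Google documents for Article structured data.
ARTICLE_TYPES = {"Article", "NewsArticle", "BlogPosting"}
# House checklist mirroring this guide's Article example (an assumption, not an official list).
EXPECTED = ("headline", "author", "datePublished", "dateModified", "image", "url")


def preflight_article(data):
    """Return a list of problems found in an Article-style JSON-LD dict."""
    problems = []
    if data.get("@type") not in ARTICLE_TYPES:
        problems.append(f"unexpected @type {data.get('@type')!r}")
    for prop in EXPECTED:
        if not data.get(prop):
            problems.append(f"missing {prop}")
    authors = data.get("author") or []
    if not isinstance(authors, list):
        authors = [authors]
    for author in authors:
        if isinstance(author, dict) and not author.get("name"):
            problems.append("author without a name")
    published, modified = data.get("datePublished"), data.get("dateModified")
    if published and modified:
        # Compare only the YYYY-MM-DD part so date-only and full ISO 8601 values both work.
        if date.fromisoformat(modified[:10]) < date.fromisoformat(published[:10]):
            problems.append("dateModified is earlier than datePublished")
    return problems


article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Optimizing Content for AI Citations",
    "author": {"@type": "Person", "name": "Your Name",
               "url": "https://www.example.com/authors/your-name"},
    "datePublished": "2025-09-10",
    "dateModified": "2025-10-07",
    "image": ["https://www.example.com/images/ai-citations-cover.jpg"],
    "url": "https://www.example.com/blog/ai-citations-guide",
}
print(preflight_article(article))  # []
```

An empty list means the markup matches your checklist. You still need the validators for schema.org correctness.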

Step 4: Strengthen technical foundations that influence AI visibility

Crawlability and indexability

- Ensure important pages are indexable (no noindex) and reachable via clean internal links and sitemaps.

- Canonicalize duplicates; avoid conflicting canonicals.

- Make sure critical content (headings, definitions, answer blocks) is present in the initial HTML or rendered reliably server-side.

- Avoid hiding essential content behind user actions or heavy client-side scripts.
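A quick way to check the points above is to inspect the raw HTML a crawler receives before any JavaScript runs. The Python sketch below uses the standard library’s HTMLParser to read robots meta directives and the canonical link, and to test whether your answer text is present in that initial HTML. It assumes you have already fetched the unrendered HTML yourself (for example, from view-source or a plain HTTP client). It does not check robots.txt rules or X-Robots-Tag response headers.

```python
from html.parser import HTMLParser

ROBOTS_META_NAMES = {"robots", "googlebot", "bingbot"}


class IndexabilityScanner(HTMLParser):
    """Collect robots meta directives and the canonical link from raw (unrendered) HTML."""

    def __init__(self):
        super().__init__()
        self.directives = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attribute values may be None.
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() in ROBOTS_META_NAMES:
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href") or None


def check_initial_html(html, answer_snippet):
    """Report blockers and whether the answer text exists before any JavaScript runs."""
    scanner = IndexabilityScanner()
    scanner.feed(html)
    scanner.close()
    return {
        "noindex": bool({"noindex", "none"} & scanner.directives),
        "nosnippet": "nosnippet" in scanner.directives,
        "canonical": scanner.canonical,
        # Plain substring match: entities or extra whitespace in the markup can cause misses.
        "answer_in_initial_html": answer_snippet in html,
    }
```

If "answer_in_initial_html" comes back False for a page whose answer is visible in the browser, the text is probably being injected client-side.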

Performance and UX

- Track Core Web Vitals (LCP, INP, CLS). Target thresholds are outlined in Google’s Core Web Vitals overview.
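For reference, Google’s published boundaries are: LCP good at or under 2.5 s and poor above 4 s; INP good at or under 200 ms and poor above 500 ms; CLS good at or under 0.1 and poor above 0.25, each assessed at the 75th percentile of page loads. The Python sketch below applies those boundaries to your own field samples. The nearest-rank percentile is a simplification, and tools such as PageSpeed Insights may aggregate slightly differently.

```python
import math

# (good upper bound, poor lower bound) per Google's Core Web Vitals guidance.
# A value at or below the first number is "good"; above the second is "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def p75(samples):
    """Nearest-rank 75th percentile; field tools may aggregate slightly differently."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def classify(metric, value):
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


lcp_samples = [1.9, 2.2, 2.8, 3.1]  # hypothetical RUM values in seconds
print(classify("LCP", p75(lcp_samples)))  # needs improvement
```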

How to test success

- Use Search Console’s URL Inspection to confirm indexing and canonical status.

- Run PageSpeed Insights or your RUM dashboard for LCP/INP/CLS.

- Spot-check critical templates with a text-only or fetch-and-render tool to confirm visibility of your short answer blocks.

What if it fails?

- Remove accidental blockers (noindex, disallow, or nosnippet) from high-value pages.

- Shift key content server-side or ensure hydration doesn’t delay it.

- Optimize images and critical CSS to improve LCP; minimize long tasks for better INP.

Step 5: Build topical authority and entity signals

To be cited, your page needs both clarity and credibility.

- Topic clustering: Group related content with clear hub-and-spoke internal linking.

- Internal links: Point from higher-authority hubs to specific Q&A pages using descriptive, consistent anchors.

- Author transparency: Add bios, credentials, and role-specific expertise; use Person schema.

- Organization clarity: Publish editorial standards and update policies; use Organization schema.

How to test success

- Create a quick internal link map: Can every spoke page be reached in two clicks from its hub? Are anchors descriptive?

- Check that every article has a visible byline, bio link, and “last updated” date.
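The two-click test is a shortest-path question, so a breadth-first search over your internal links answers it directly. The Python sketch below assumes you have exported links as (source path, target path, anchor text) tuples, for example from a crawler. It reports spokes deeper than two clicks (or unreachable) from the hub, plus links whose anchors say nothing about the target. The paths and the list of generic anchors are illustrative placeholders.

```python
from collections import deque

# Anchor texts that say nothing about the target page (illustrative; extend as needed).
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "this", "link"}


def click_depths(links, hub):
    """Breadth-first search over (source, target, anchor) links; returns min clicks from hub."""
    graph = {}
    for source, target, _anchor in links:
        graph.setdefault(source, []).append(target)
    depths = {hub: 0}
    queue = deque([hub])
    while queue:
        page = queue.popleft()
        for nxt in graph.get(page, []):
            if nxt not in depths:
                depths[nxt] = depths[page] + 1
                queue.append(nxt)
    return depths


def audit_cluster(links, hub, spokes, max_clicks=2):
    """Return spokes deeper than max_clicks (or unreachable) and links with vague anchors."""
    depths = click_depths(links, hub)
    too_deep = sorted(s for s in spokes if depths.get(s, float("inf")) > max_clicks)
    vague = sorted({(src, dst) for src, dst, anchor in links
                    if anchor.strip().lower() in GENERIC_ANCHORS})
    return too_deep, vague


links = [
    ("/hub", "/a", "What is generative search visibility?"),
    ("/a", "/b", "read more"),
    ("/b", "/c", "AI citation audit checklist"),
]
too_deep, vague = audit_cluster(links, "/hub", ["/a", "/b", "/c", "/d"])
print("Too deep or orphaned:", too_deep)
print("Vague anchors:", vague)
```

Here "/c" sits three clicks from the hub and "/d" has no inbound link at all, so both would need a direct link from the hub.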

What if it fails?

- Consolidate thin or overlapping posts into stronger, comprehensive guides.

- Add missing bios and link them consistently sitewide.

- If your space benefits from community validation, consider ethically participating in relevant discussions; for tactics, see our guide on driving AI search citations through Reddit communities.

Step 6: Freshness and transparency (and handling paywalls)

Freshness cues help AI systems and readers trust your page.

- Add a visible “last updated” date and briefly note what changed for sensitive or fast-moving topics.

- Maintain version notes for complex technical or regulatory content.

For paywalled content

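- Use paywalled content structured data (isAccessibleForFree set to false, plus a hasPart element whose cssSelector points to the gated section) so Google can tell which parts are gated and doesn’t mistake them for cloaking; see Google’s “Paywalled content structured data” documentation.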

- Provide a meaningful lead-in or summary outside the paywall to avoid “invisible content” issues.
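The paywall markup is small enough to generate alongside your Article JSON-LD. The Python sketch below builds the isAccessibleForFree and hasPart structure for one gated section. The ".paywall" class is a hypothetical name that must match the class on your gated element, and the class-selector restriction in the code reflects our reading of Google’s documentation, so confirm it against the current version. In practice you would merge these properties into the full Article object (author, dates, image) rather than publish them alone.

```python
import json


def paywalled_article_jsonld(headline, gated_class_selector):
    """Article JSON-LD declaring the element matched by the selector as gated."""
    # Our reading of Google's paywall docs is that only class selectors (".name") are
    # supported for cssSelector; confirm against the current documentation.
    if not gated_class_selector.startswith("."):
        raise ValueError("expected a class selector such as '.paywall'")
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "isAccessibleForFree": False,
        "hasPart": {
            "@type": "WebPageElement",
            "isAccessibleForFree": False,
            "cssSelector": gated_class_selector,
        },
    }


markup = paywalled_article_jsonld("Optimizing Content for AI Citations", ".paywall")
print(json.dumps(markup, indent=2))
```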

How to test success

- Verify that “last updated” displays on key pages and that your paywall markup validates without errors.

What if it fails?

- If AI systems don’t seem to understand your page, add more free summary content above the fold.

- Double-check that your paywall implementation aligns with Google’s guidelines and doesn’t resemble cloaking.

Step 7: Account for platform-specific nuances and run micro-tests

Google AI features

- There is no opt-in tag. Focus on helpful, unique content, clear structure, and technical soundness, as described in Google’s “AI features and your website.”

Bing Copilot

- Bing emphasizes grounded answers with inline source citations, behaviors highlighted in its April 2025 announcement, “Introducing Copilot Search in Bing.”

Perplexity

- Perplexity shows transparent citations via Sources and often a Steps view. For an accessible overview of how it presents sources, see Built In’s explainer, “What is Perplexity AI?”

Micro-tests

- For a small set of queries, compare how each platform cites (or doesn’t) your content.

- Adjust your headings and short answer blocks based on what gets picked up.

How to test success

- You can show at least one improved citation, or a clearer presence in an AI summary, on at least one platform after a round of edits.
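To show that improvement concretely, compare a snapshot taken before your edits with one taken after. The Python sketch below assumes each snapshot maps (query, platform) to the cited URL on your site, or None when you weren’t cited, and reports citations gained, lost, or moved to a different URL. The queries, platform labels, and URLs are placeholders.

```python
def citation_changes(before, after):
    """Compare snapshots mapping (query, platform) -> cited URL (or None if not cited)."""
    gained, lost, moved = [], [], []
    for key in sorted(set(before) | set(after)):
        old, new = before.get(key), after.get(key)
        if new and not old:
            gained.append((key, new))
        elif old and not new:
            lost.append((key, old))
        elif old and new and old != new:
            moved.append((key, old, new))  # still cited, but a different URL
    return gained, lost, moved


before = {
    ("what is generative search visibility", "google_aio"): None,
    ("ai citation tracking", "bing_copilot"): "https://www.example.com/a",
}
after = {
    ("what is generative search visibility", "google_aio"): "https://www.example.com/b",
    ("ai citation tracking", "bing_copilot"): "https://www.example.com/a",
}
gained, lost, moved = citation_changes(before, after)
print("Gained:", gained)
print("Lost:", lost)
print("Moved:", moved)
```

Because AI answers fluctuate, treat a single gained citation as a signal to re-check next cycle rather than as proof the edit caused it.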

What if it fails?

- Tighten your opening definition and elevate key lists/tables.

- Clarify entities (author/org) and add missing schema.

- Expand your topical cluster to fill obvious coverage gaps.

Step 8: Monitor monthly and iterate with a simple loop

Create a recurring 30–45-minute routine:

- Re-run your 10–15 core queries across Google/Bing/Perplexity.

- Note which pages are cited and where structure changes improved outcomes.

- Prioritize updates for pages showing momentum (near-misses or intermittent citations).
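Intermittent citations are easy to spot once you keep a month-by-month history. The Python sketch below assumes a history that maps (query, platform) to a list of monthly True/False results, oldest first. It flags pairs cited in some months but not all, and sorts them so pairs cited last month come first. That priority order is an assumption you can change, and near-misses (for example, a competitor cited where you rank well) still need manual judgment.

```python
def momentum_pages(history):
    """Return (query, platform) pairs cited in some months but not all, highest priority first.

    history maps (query, platform) to monthly booleans, oldest first
    (True = one of your pages was cited that month).
    """
    flagged = []
    for key, months in history.items():
        hits = sum(months)  # True counts as 1
        if 0 < hits < len(months):
            flagged.append((key, hits, len(months), months[-1]))
    # Assumed priority: cited last month first, then more hits, then alphabetical.
    flagged.sort(key=lambda item: (not item[3], -item[1], item[0]))
    return flagged


history = {  # placeholder data for three monthly check-ins
    ("ai citation tracking", "google_aio"): [False, True, False],
    ("what is generative search visibility", "perplexity"): [True, True, True],
    ("faq schema for ai", "bing_copilot"): [False, False, False],
    ("ai overview sources", "perplexity"): [True, False, True],
}
for (query, platform), hits, total, cited_last in momentum_pages(history):
    print(f"{query} on {platform}: cited {hits}/{total} months, last month: {cited_last}")
```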

Optional: Include one concrete reference snapshot in your notes, such as this AI Search Visibility Analysis example, to keep your team aligned on what “good” looks like.

Soft next step

- You can use Geneo to centralize cross-platform citation tracking and trend changes over time with your team’s notes and priorities.

How to test success

- You have a one-page tracker showing queries, platforms, whether you’re cited, and which on-page changes preceded improvements.

What if it fails?

- Reduce scope to 5–7 priority queries until you’ve established a repeatable cadence.

- Focus edits on clarity: short answers, headings, and visible definitions before tackling advanced changes.

Quick closing checklist and common pitfalls

- Does each key page start with a clear, question-led heading and a 40–60 word answer block?

- Have you validated JSON-LD for Article/FAQ/HowTo and added Organization/Person where relevant?

- Are your canonical, indexing, and internal linking states clean and confirmed in Search Console?

- Do you show bylines, bios, and “last updated” dates?

- Are you tracking citations across Google, Bing, and Perplexity monthly and iterating based on results?

Common pitfalls to avoid

- Expecting schema to guarantee inclusion. It improves understanding, not selection.

- Burying the direct answer or definition below multiple screens of context.

- Rendering key content only after heavy client-side scripts, making it slow or invisible to crawlers.

- Ignoring entity signals: missing author bios and Organization clarity.

- Letting content go stale without visible update notes.

References and further reading

- Google Search Central, AI features and your website (ongoing guidance, accessed 2025)

- Google Search Central blog, Succeeding in AI search (2025)

- Search Engine Land, AI search content organizing framework (2024)

- Google Search Central, Core Web Vitals overview (updated through 2025)

- Bing Search Blog, Introducing Copilot Search in Bing (April 2025)

- Built In, What is Perplexity AI? (2024 explainer)