How to Implement GEO: Step-by-Step Guide for Marketing Teams with Geneo

Learn step-by-step how marketing teams can optimize for AI-driven answers using GEO & Geneo. Includes workflow, practical tips, and real success stories.

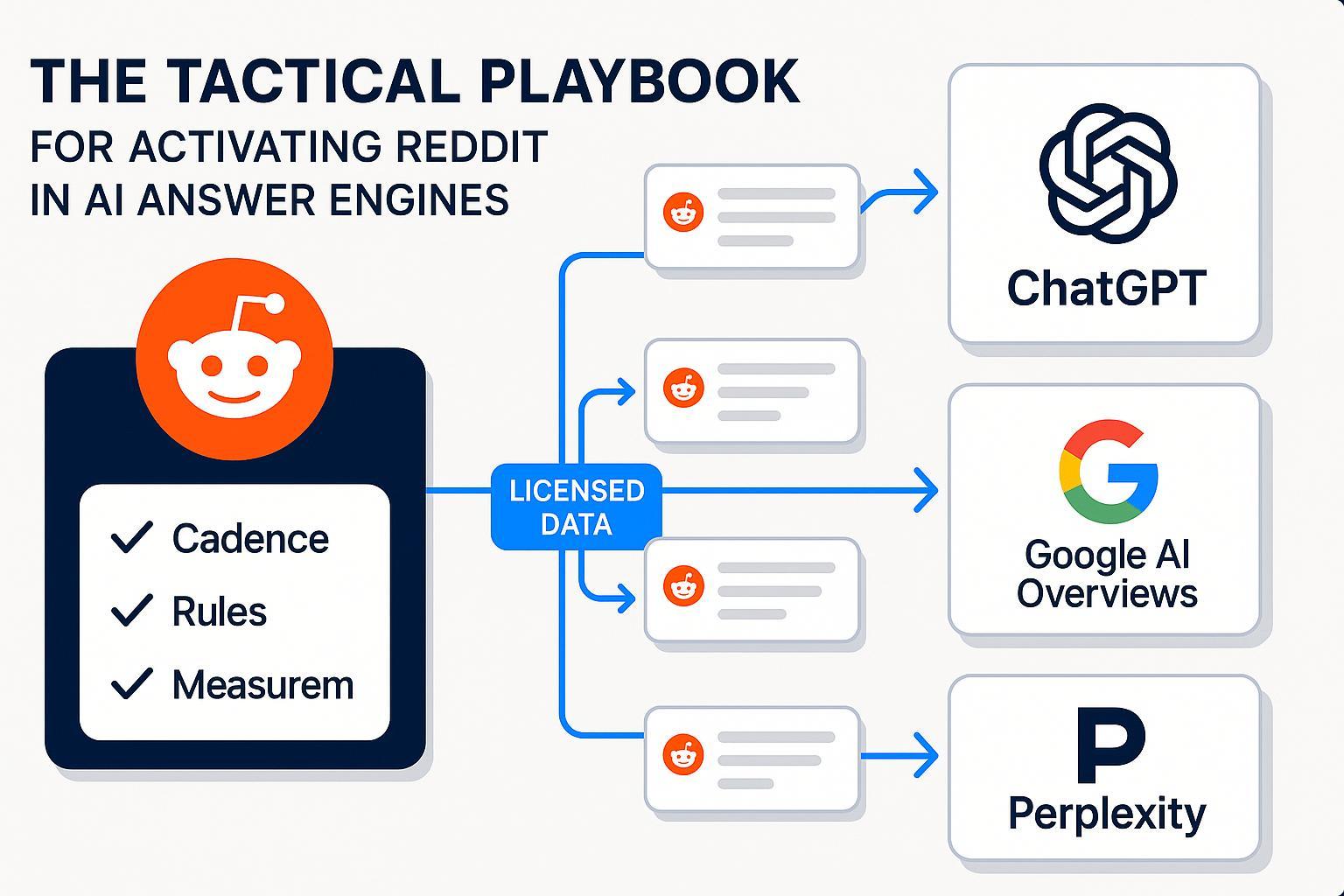

If you’re feeling the shift from traditional SEO to AI-driven answers, you’re not alone. This step-by-step tutorial helps your team implement Generative Engine Optimization (GEO) and Answer Engine Optimization (AEO) so your content earns inclusion and citations across ChatGPT, Perplexity, and Google AI Overviews.

Who it’s for: Marketing/SEO managers, content strategists, and agencies.

Time & difficulty: Plan 2–4 weeks for the first rollout (moderate difficulty), then iterate monthly.

Prerequisites: Access to your CMS, an analytics platform (e.g., Google Analytics), a keyword/intent research tool, and capacity to update content and schema.

You’ll build a practical program: define goals, establish your cross-engine baseline, map answer-focused intent, optimize content and entities, and set up a monitoring cadence with clear reporting.

GEO vs. AEO, in practice

GEO focuses on increasing visibility and citations inside AI-generated answers across engines. A concise definition is outlined in Search Engine Land’s 2024 GEO explainer.

AEO emphasizes structuring content so answer engines can extract and present direct, reliable answers. See the practitioner framing in the CXL 2025 AEO guide.

How engines behave:

Google’s AI Overviews ground responses with source links and aim to assist with multi-step queries, per the Google Blog’s 2024 announcement.

ChatGPT Search provides timely answers with inline citations and a Sources view, according to the OpenAI Help Center (2024).

Perplexity emphasizes clickable citations for public web answers, as described in the Perplexity Hub (2024).

For a quick primer on engine differences and how each cites sources, skim this comparison: Perplexity vs. Google AI Overviews vs. ChatGPT.

Want to go deeper on GEO/AEO fundamentals and workflows? Browse the evolving insights on the Geneo blog hub.

Step 1: Set clear objectives and KPIs

Goal: Align your GEO/AEO program with business outcomes and measurable visibility.

What to do:

Define success for your customer journey. Examples: “Be cited in AI answers for core educational queries” (awareness), “Earn inclusion for comparison/best-of queries” (consideration), “Surface authoritative buying guides” (decision).

Select KPIs you can track:

Share of Voice (SOV) in AI Overviews and answer engine citations.

Number of branded/non-branded citations or mentions across engines.

Sentiment of brand mentions within AI answers (qualitative notes work initially).

Business outcomes: qualified leads, assisted conversions, revenue attribution.

Set thresholds for decision-making. Example: “When SOV drops by more than 10% month over month, trigger a diagnostic.”
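A threshold rule like this is easy to automate once SOV is recorded each month. Here is a minimal sketch, assuming the 10% is read as a relative drop against the previous month (it could equally mean percentage points, so pick one reading and document it). The function name and sample values are illustrative, not part of any tool.

```python
def sov_drop_alert(previous_sov: float, current_sov: float, threshold: float = 0.10) -> bool:
    """Return True when SOV fell by more than `threshold`, relative to the previous period."""
    if previous_sov <= 0:
        return False  # no baseline to compare against
    relative_drop = (previous_sov - current_sov) / previous_sov
    return relative_drop > threshold


# Hypothetical monthly values: 20% SOV last month, 17% this month.
print(sov_drop_alert(0.20, 0.17))  # True: a 15% relative drop exceeds the 10% threshold
```

If your team prefers percentage points, replace the division with a plain difference and adjust the threshold accordingly.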

Validation: Document your goals and KPIs in a one-page brief. If your team can’t explain them to stakeholders in 3–4 bullets, simplify.

Tip: Keep vanity metrics in check by pairing visibility (citations) with business impact (pipeline/revenue trends).

Step 2: Establish your cross-engine baseline

Goal: Understand where you currently appear and who dominates the answers you care about.

What to do:

Build a query set that realistically triggers AI answers. Include informational (“how to…”, “what is…”) and transactional (“best X for Y”, “compare A vs B”).

Check which queries trigger AI Overviews in Google and note the cited domains. Practical workflows for tracking AIO inclusions are outlined in the Advanced Web Ranking help guide (2025).

Log citations across engines and compute SOV. SE Ranking’s research offers a view into typical AIO source behavior—useful context as you record who gets cited, per SE Ranking’s 2024 AIO sources study.

Capture volatility. Recheck weekly and summarize trends monthly; answers can change frequently.

Validation: A spreadsheet (or dashboard) with queries, whether an AI answer appeared, and which domains were cited. Calculate SOV as the number of citations your domain earns divided by the total citations recorded across the query set, and track it over time.
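To make the SOV calculation concrete, here is a short sketch that computes it from a citation log. The log layout (query, engine, cited domain) and the domain names are assumptions for illustration; adapt them to however your spreadsheet is structured.

```python
from collections import Counter

# Hypothetical log rows: (query, engine, cited_domain). One row per citation observed.
citation_log = [
    ("best crm for startups", "google_aio", "example.com"),
    ("best crm for startups", "google_aio", "reviewsite.example"),
    ("best crm for startups", "perplexity", "example.com"),
    ("what is a crm", "chatgpt", "encyclopedia.example"),
]


def share_of_voice(log, domain):
    """SOV = citations of `domain` / total citations across the query set."""
    counts = Counter(cited for _query, _engine, cited in log)
    total = sum(counts.values())
    return counts[domain] / total if total else 0.0


print(share_of_voice(citation_log, "example.com"))  # 2 of 4 citations -> 0.5
```

Filtering the log by engine or by topic cluster before calling the function gives you per-engine or per-cluster SOV with the same formula.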

Gotcha: Don’t confuse featured snippets with AI answers. The former is an excerpt from a single page; the latter is a multi-source answer with citations that can shift based on consensus and freshness.

Step 3: Select your competitor set

Goal: Benchmark against peers and credible aggregators that often capture citations.

What to do:

List direct competitors and high-authority publishers in your niche. For e-commerce, include marketplaces and review sites that frequently appear.

Include “content competitors” you don’t sell against but who dominate answers (think major media, encyclopedic resources, community sites).

Align on 6–12 core domains for routine benchmarking, then add niche sites per topic.

Validation: Confirm you’re not benchmarking only brands like yours. If aggregators and publishers dominate answers, they belong in your set.

Tip: In early stages, a mix of brand sites, industry publications, and review portals gives you a realistic picture of how engines assemble consensus.
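One way to choose the competitor set from evidence rather than instinct is to rank domains by how many of your tracked queries cite them. The sketch below assumes a baseline log of (query, cited domain) pairs; the data and the function name are hypothetical.

```python
from collections import defaultdict

# Hypothetical baseline log: (query, cited_domain).
baseline_log = [
    ("best crm for startups", "reviewsite.example"),
    ("best crm for startups", "example.com"),
    ("crm vs spreadsheet", "reviewsite.example"),
    ("crm vs spreadsheet", "encyclopedia.example"),
    ("what is a crm", "encyclopedia.example"),
    ("what is a crm", "reviewsite.example"),
    ("what is a crm", "forum.example"),
]


def top_cited_domains(log, own_domain, limit=12):
    """Rank other domains by the number of distinct queries that cite them."""
    queries_by_domain = defaultdict(set)
    for query, domain in log:
        if domain != own_domain:
            queries_by_domain[domain].add(query)
    # Sort by query coverage (descending), then by name for a stable order.
    ranked = sorted(queries_by_domain.items(), key=lambda item: (-len(item[1]), item[0]))
    return [(domain, len(queries)) for domain, queries in ranked[:limit]]


for domain, query_count in top_cited_domains(baseline_log, "example.com"):
    print(domain, query_count)
```

The default limit of 12 mirrors the upper end of the 6–12 core domains suggested in this step; aggregators and publishers that surface near the top belong in the set even if you never sell against them.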

Step 4: Map answer-engine intent to content opportunities

Goal: Translate natural-language questions into content that engines can extract and trust.

What to do:

Cluster questions and sub-questions around the same intent. Think conversational: “Is X better than Y for Z?”, “What’s the safest way to…?”, “How does X compare with Y?”

Draft “answer-first” outlines. Begin sections with a 40–60 word direct answer, then expand with supporting detail, lists, and tables.

Create Q&A blocks and concise summaries. Answer engines parse clear headings and structured snippets more reliably (see the AEO framing in the CXL 2025 guide linked above).

Plan corroboration. Identify credible external references you’ll cite to strengthen trust and reduce ambiguity.

Validation: For each target query cluster, you can point to a draft outline that contains a concise answer, supporting sections, and planned citations.

Gotcha: Traditional keyword volumes can mislead here. Prioritize intent clarity and completeness over chasing the highest monthly searches.
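The 40–60 word guideline for answer-first sections can be checked mechanically while drafting. This is a rough sketch that assumes the direct answer is the first paragraph of a section and that paragraphs are separated by blank lines; the function name is illustrative.

```python
def answer_length_ok(section_text, min_words=40, max_words=60):
    """Return (within_range, word_count) for the first paragraph of a section."""
    first_paragraph = section_text.strip().split("\n\n", 1)[0]
    word_count = len(first_paragraph.split())
    return min_words <= word_count <= max_words, word_count


# Hypothetical draft: a 45-word opening answer followed by supporting detail.
draft = " ".join(["word"] * 45) + "\n\nSupporting detail, lists, and tables follow here."
print(answer_length_ok(draft))  # (True, 45)
```

A word count only confirms length. Whether the opening actually answers the question still needs an editor’s read.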

Step 5: Fix entity clarity, schema, and extraction structure

Goal: Reduce ambiguity about who you are and make your answers machine-parsable.

What to do:

Strengthen entity signals:

Use Organization/Brand schema (name, URL, sameAs to authoritative profiles), and ensure consistent naming across properties.

For key topics, link to authoritative knowledge bases (for example, the relevant Wikipedia or Wikidata entry) where appropriate, so engines can tell which entity you mean.

Add applicable structured data:

Frequently used types: FAQPage, HowTo, Product, Review, Article.

Keep markup accurate and aligned with on-page content; validate with testing tools.

Optimize for extraction:

Lead with concise answers.

Use clear H2/H3 headings, bullet lists, tables, and callouts that make parsing easy.

Demonstrate experience and trust: Author bios, date stamps, revision notes, and transparent sourcing.

Validation: Structured data tests pass, on-page answers are clearly scannable, and author credentials are visible.

Tip: Engines favor freshness for many queries—note the last updated date and maintain a predictable refresh cadence for your most important pages.
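For the structured data items in this step, it can help to generate JSON-LD from one source of truth so the markup stays aligned with on-page content. The sketch below builds Organization and FAQPage markup with schema.org vocabulary. The brand name, URLs, and question text are placeholders, and the helper function is hypothetical.

```python
import json

organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Co",                 # placeholder brand name
    "url": "https://www.example.com",     # placeholder URL
    "sameAs": [                           # placeholder authoritative profiles
        "https://www.linkedin.com/company/example-co",
        "https://en.wikipedia.org/wiki/Example_Co",
    ],
}

faq_page = {
    "@context": "https://schema.org",
    "@type": "FAQPage",
    "mainEntity": [
        {
            "@type": "Question",
            "name": "What is Generative Engine Optimization?",
            "acceptedAnswer": {
                "@type": "Answer",
                "text": "GEO focuses on increasing visibility and citations inside AI-generated answers.",
            },
        }
    ],
}


def to_jsonld_script(data):
    """Wrap a dict in the script tag used to embed JSON-LD in a page."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


print(to_jsonld_script(organization))
```

Whatever generates the markup, the question and answer text should match what is visible on the page, and the output should still be run through a structured data testing tool before publishing.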

Step 6: Ensure technical hygiene

Goal: Remove barriers that prevent engines from discovering, understanding, or trusting your content.

What to do:

Confirm crawlability and indexability; avoid accidental noindex/robots exclusions on key pages.

Stabilize performance and rendering; server-side rendering can help ensure reliable parsing.

Keep HTML clean; avoid excessive client-side injection that delays content visibility to crawlers.

Monitor error budgets. If your site routinely serves errors or timeouts, it undermines inclusion in answers.

Validation: A simple monthly technical checklist—crawl stats healthy, index coverage accurate, Core Web Vitals acceptable, markup validated.

Gotcha: JavaScript-heavy setups that hide content until client-side rendering completes, or that rewrite URLs, can make your pages harder for answer engines to crawl and cite reliably.
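Two of the checks in this step, accidental robots exclusions and stray noindex tags, can be scripted with Python’s standard library. The sketch below works on text you have already fetched (a robots.txt body and a page’s HTML), so it makes no network calls. The helper names and sample inputs are assumptions for illustration.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def allowed_by_robots(robots_txt, url, user_agent="*"):
    """Check whether `url` is crawlable under the given robots.txt content."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class _RobotsMetaFinder(HTMLParser):
    """Records whether a <meta name="robots"> tag contains noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            if "noindex" in (attr.get("content") or "").lower():
                self.noindex = True


def has_noindex(html):
    finder = _RobotsMetaFinder()
    finder.feed(html)
    return finder.noindex


# Hypothetical inputs.
robots_txt = "User-agent: *\nDisallow: /private/\n"
page_html = '<html><head><meta name="robots" content="noindex, follow"></head></html>'

print(allowed_by_robots(robots_txt, "https://www.example.com/guides/geo"))
print(has_noindex(page_html))
```

This only covers meta tags and robots.txt. A noindex can also arrive through an HTTP response header, so treat a clean result here as one signal in the monthly checklist rather than proof of indexability.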

Step 7: Publish, corroborate, and earn inclusion

Goal: Make your content the easiest credible source to include.

What to do:

Publish with clear answers at the top and thorough supporting sections below.

Cite credible external sources to strengthen claims and align with consensus.

Cross-link related content to help engines follow your topical map.

Refresh: Update facts, revise sections, and add newly relevant questions as you observe answer patterns.

Validation: For each published page, confirm it has a concise answer, two or more credible citations, and updated meta information.

Tip: After publishing, monitor how engines assemble answers. If authoritative aggregators dominate, expand your content to address missing sub-questions or add clearer comparative tables.
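The “two or more credible citations” part of the validation can be approximated by counting the distinct external domains a page links to. This sketch parses HTML you already have on hand; the class and function names are hypothetical, and it cannot judge credibility, only presence.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class _LinkCollector(HTMLParser):
    """Collects href values from anchor tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def external_citation_domains(html, own_domain):
    """Return the set of linked hostnames that are not `own_domain` or its subdomains."""
    collector = _LinkCollector()
    collector.feed(html)
    domains = set()
    for href in collector.hrefs:
        host = urlparse(href).netloc.lower()
        if host and host != own_domain and not host.endswith("." + own_domain):
            domains.add(host)
    return domains


# Hypothetical page body with two external sources and two internal links.
sample_html = (
    '<p>See <a href="https://schema.org/FAQPage">FAQPage</a> and '
    '<a href="https://developers.google.com/search">Google Search docs</a>. '
    'Related: <a href="/guides/geo">our GEO guide</a> and '
    '<a href="https://blog.example.com/aeo">our AEO post</a>.</p>'
)
cited = external_citation_domains(sample_html, "example.com")
print(len(cited) >= 2, sorted(cited))
```

Relative links and subdomains of your own site are skipped, so the count reflects outside sources only.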

Step 8: Monitor, report, and iterate

Goal: Run a sustainable program with weekly checks and monthly retros.

What to do:

Weekly: Spot-check your core queries across engines and note any inclusion or citation shifts.

Monthly: Report SOV changes, top gains/losses, sentiment notes, and resulting business metrics.

Portfolio approach: Avoid over-optimizing for one engine; track ChatGPT, Perplexity, and AI Overviews together.

Keep stakeholders aware of market shifts. For context, Gartner’s 2024 forecast projected a 25% drop in traditional search engine volume by 2026 as AI chatbots and virtual agents absorb queries; see Search Engine Land’s 2024 Gartner coverage.

Validation: A recurring slide or dashboard with SOV, notable query changes, and prioritized next actions.

Gotcha: Expect volatility. Answers and citations can change rapidly; anchor your program in steady iteration, not one-off wins.
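For the monthly report, the top gains and losses fall out of a simple comparison between two SOV snapshots. A minimal sketch, assuming each snapshot is a mapping of domain to SOV; the figures and the function name are made up for illustration.

```python
def sov_changes(previous, current):
    """Return (domain, change) pairs sorted from biggest gain to biggest loss."""
    domains = set(previous) | set(current)
    changes = {
        domain: round(current.get(domain, 0.0) - previous.get(domain, 0.0), 4)
        for domain in domains
    }
    return sorted(changes.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical snapshots for two consecutive months.
last_month = {"example.com": 0.20, "reviewsite.example": 0.35, "encyclopedia.example": 0.45}
this_month = {"example.com": 0.26, "reviewsite.example": 0.30, "encyclopedia.example": 0.44}

for domain, change in sov_changes(last_month, this_month):
    print(f"{domain}: {change:+.2%}")
```

Domains that appear in only one snapshot are treated as having zero SOV in the other, which makes new entrants and dropouts show up at the extremes of the list.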

Practical example: One workspace, prioritized actions

Imagine consolidating AI Overview SOV, citations by engine, and a prioritized content roadmap in one view. Tools can streamline this; for instance, Geneo brings multi-platform tracking and an Actionable Content Roadmap together. Disclosure: Geneo is our product.

How teams typically set this up:

Create a workspace for your brand and add your primary markets.

Import or define your query set (awareness, consideration, decision) and select engines to monitor.

Add your competitor domains (direct brands plus high-authority publishers/review portals).

Review SOV and citation trends by engine and topic cluster.

Use the Actionable Content Roadmap to produce a prioritized list of pages/queries to improve—e.g., add a direct answer summary, expand comparison tables, fix schema alignment.

Alternative approach: If you’re tool-light, maintain a spreadsheet to record weekly inclusions and a backlog of “next content actions.” The principle is the same—centralize visibility and make prioritization frictionless.
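If you go the spreadsheet route, agreeing on columns up front keeps weekly logging consistent. Here is one possible layout written as CSV. The column names are a suggestion rather than a standard, and the sample row is invented.

```python
import csv
import io

# Suggested columns for a weekly inclusion log (an assumption, adjust to taste).
FIELDS = ["week", "query", "engine", "ai_answer_shown", "cited_domains", "next_action"]


def write_log(rows, handle):
    """Write log rows (dicts keyed by FIELDS) as CSV to an open text handle."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


sample_rows = [
    {
        "week": "2025-W14",
        "query": "best crm for startups",
        "engine": "perplexity",
        "ai_answer_shown": "yes",
        "cited_domains": "reviewsite.example;example.com",
        "next_action": "add comparison table",
    }
]

# An in-memory buffer keeps the example self-contained; for a real file,
# use open(path, "w", newline="") instead.
buffer = io.StringIO()
write_log(sample_rows, buffer)
print(buffer.getvalue())
```

Keeping the “next action” beside each observation means the log doubles as the content backlog, which is the centralization the spreadsheet approach is meant to provide.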

Success stories: What this looks like in the field

E-commerce: Growing AI Overview SOV for a category leader

An online retailer noticed competitors and review aggregators dominating AI Overviews for “best [category] for [use case].” The team:

Cleaned up Organization and Product entities, aligned naming conventions, and added consistent sameAs references.

Refreshed buying guides with answer-first sections and comparison tables; added FAQPage/HowTo schema where relevant.

Published corroborated specifications and safety notes with links to authoritative references.

Monitored weekly and iterated monthly, expanding coverage to adjacent sub-questions.

Outcome: More frequent inclusion across target query clusters and stronger visibility for the brand’s educational content. The key was entity clarity plus genuinely helpful, structured answers.

Agency: Competitive benchmarking wins that sell GEO retainers

A digital agency packaged GEO as a monthly service centered on cross-engine benchmarking:

Built a client-specific query portfolio and competitor set (brands + aggregators).

Reported SOV and citation shifts with a prioritized content backlog.

Ran quarterly “intent gap” workshops with client editors to fill sub-question coverage.

To compare multi-engine monitoring options and package deliverables, see this practitioner overview: Best tools to monitor brand mentions in ChatGPT answers (2025 comparison).

Outcome: The agency won retainers by demonstrating clear visibility metrics and a repeatable improvement plan—no hype, just consistent iteration.

Troubleshooting and guardrails

If visibility drops suddenly:

Reconfirm which queries still trigger AI answers; recalculate SOV and identify domains gaining ground.

Review freshness and corroboration on your pages; add or update citations.

Check technical hygiene (crawlability, rendering, markup validity).

If answers misrepresent your brand:

Strengthen entity clarity (Organization/Brand schema, consistent naming, authoritative profiles).

Publish updated, factual content on your official properties and cite credible references.

Use in-product feedback tools where available (e.g., Google Search feedback, Perplexity support channels) and monitor how answers evolve.

Avoid common pitfalls:

Confusing featured snippets with AI answers—look for multi-source citations.

Chasing keyword volume over intent clarity—answer engines prefer comprehensive, trustworthy coverage.

Over-optimizing for one engine—maintain a portfolio view and cross-engine cadence.

Next steps

You’re ready to run a GEO/AEO program: clear objectives, a cross-engine baseline, intent-mapped content, entity/schema alignment, and a steady monitoring loop.

Start free on Geneo and set up your first AI Visibility Workspace. Build momentum with a shortlist of high-impact pages, and revisit progress every month.