Top 7 Mistakes to Avoid for Generative Search Optimization (2025)

Avoid the 7 most costly mistakes in generative search optimization for 2025. Boost your brand's AI answer engine visibility with these must-know expert tips!

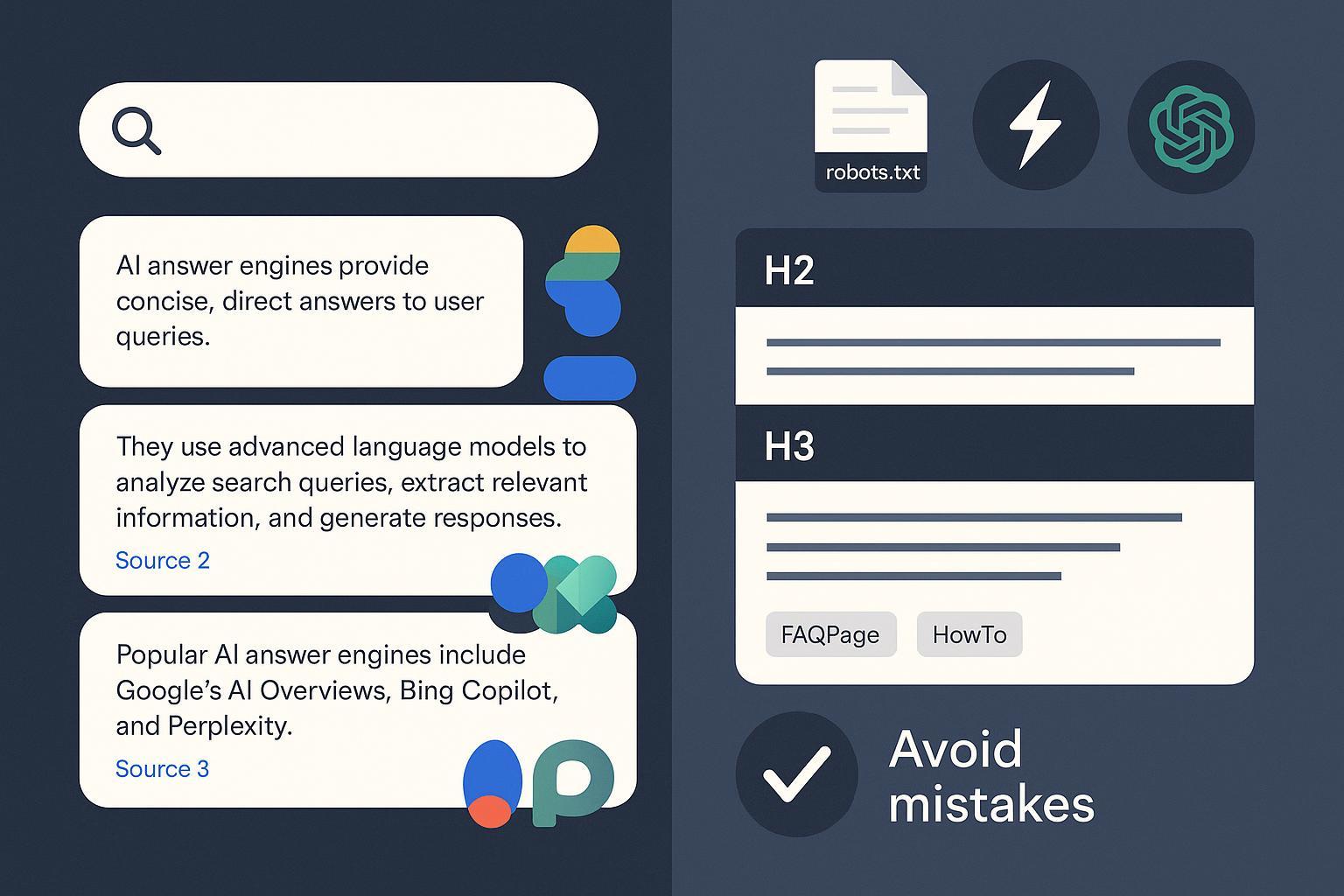

Generative and answer engines (Google AI Search/Overviews, Bing Copilot, Perplexity, and ChatGPT Browse) now synthesize multi-source answers and selectively cite pages. If you’re optimizing for inclusion and citations in 2025, avoid these common pitfalls. Platform behaviors and eligibility criteria can change, so treat the following as working fundamentals and expect to adapt the details.

1) Optimizing for keywords instead of questions and intents

Short, keyword-stuffed sections rarely win in AI answers. These engines assemble conversational responses, often preferring sources that directly address the underlying question and follow-up intents.

Why this hurts in generative engines

- AI systems favor content that is clearly helpful, unique, and answer-first. Google’s May 2025 guidance emphasizes people-first content and clear structure for AI features, including schema and crawlability. See the principles in Google Search Central’s 2025 guidance on succeeding in AI Search.

How to fix it (quick checklist)

- Map real questions and intents; use H2/H3 phrased as user questions.

- Open sections with an answer-first paragraph (40–60 words), then details.

- Add concise lists/tables and an FAQ block that tackles likely follow-ups.

- Keep semantic, readable HTML so passages are easy to parse.
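
The answer-first item in the checklist above can be spot-checked with a few lines of code. The following is a minimal sketch, assuming each section is available as plain text keyed by its question heading; the function names and the sample section are hypothetical, and the 40–60 word window is simply the range suggested in the checklist.

```python
import re

def opening_paragraph(body: str) -> str:
    """Return the text before the first blank line of a section body."""
    return re.split(r"\n\s*\n", body.strip(), maxsplit=1)[0]

def audit_sections(sections: dict, low: int = 40, high: int = 60) -> dict:
    """Map each heading to (word_count, status) for its opening paragraph."""
    report = {}
    for heading, body in sections.items():
        count = len(opening_paragraph(body).split())
        if count < low:
            status = "too short"
        elif count > high:
            status = "too long"
        else:
            status = "ok"
        report[heading] = (count, status)
    return report

# Hypothetical input: one question heading with a very short opening answer.
sample = {"What is generative search optimization?": "It is a practice.\n\nDetails follow."}
print(audit_sections(sample))
```

A word count is only a rough proxy. It flags sections whose opening is too thin or too long to work as a standalone answer, but it says nothing about whether the paragraph actually answers the question.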

2) Publishing thin, unmaintained content with weak freshness signals

Shallow or outdated content is less likely to be trusted or cited. Engines tend to prefer comprehensive, current pages with visible expertise.

Why this hurts in generative engines

- Google’s 2025 documentation stresses unique, helpful content and robust structure for AI features, so thin pages are unlikely to compete. Review the expectations in Google’s “AI features and your website” documentation (2025).

How to fix it (quick checklist)

- Expand thin pages to cover key subtopics; add examples, diagrams, and data.

- Refresh quarterly and note “Updated” dates or changelogs where appropriate.

- Add author credentials and cite primary sources within the copy.

- Prioritize updates for pages that already earn (or almost earn) citations.
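
The refresh and prioritization items above can be turned into a simple work queue. This is a sketch under stated assumptions: the `Page` record, its `citations` field, and the 90-day threshold (a stand-in for "quarterly") are illustrative, and the citation counts would come from your own tracking log.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class Page:
    url: str
    updated: date    # date of the last substantive update
    citations: int   # hypothetical count taken from your own citation log

def refresh_queue(pages, today: date, max_age_days: int = 90):
    """Return URLs of stale pages, most-cited first, then oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [p for p in pages if p.updated < cutoff]
    stale.sort(key=lambda p: (-p.citations, p.updated))
    return [p.url for p in stale]

# Hypothetical inventory; in practice this might come from a CMS export.
pages = [
    Page("/guides/geo-basics", date(2025, 1, 10), 3),
    Page("/guides/schema", date(2025, 5, 20), 9),
    Page("/blog/old-post", date(2024, 11, 1), 0),
]
print(refresh_queue(pages, today=date(2025, 6, 1)))
```

Sorting by citations first reflects the last checklist item: pages that already earn citations are refreshed before pages that have never been cited.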

3) Skipping structured data and parse‑friendly formatting

Missing schema and chaotic layouts make extraction harder. Engines reward modular, well-labeled content they can trust and reuse.

Why this hurts in generative engines

- Structured data improves machine understanding; modular sections boost “snippability.” Microsoft’s 2025 guidance outlines how clear headings, schema, and modular content aid inclusion in AI answers: Microsoft/Bing’s 2025 guidance on inclusion in AI search answers. Also see practical “chunks/passages” guidance in Search Engine Land’s 2025 piece on micro-wins in AI Mode.

How to fix it (quick checklist)

- Add relevant schema types: FAQPage for Q&A blocks; HowTo for stepwise guides; Article with Organization/Person; Product/Review where relevant.

- Use semantic HTML (section, article, aside) and descriptive headings.

- Keep paragraphs tight and scannable. Align visible headings with schema.
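
As an illustration of the FAQPage item above, the sketch below builds schema.org FAQPage markup from question-and-answer pairs and wraps it in a JSON-LD script tag. The helper names and the sample question are hypothetical; the property names (`mainEntity`, `acceptedAnswer`, `text`) follow the schema.org vocabulary. Treat the output as a starting point and validate it with a structured data testing tool before publishing.

```python
import json

def faq_jsonld(pairs):
    """Build a schema.org FAQPage dict from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

def as_script_tag(data) -> str:
    """Serialize the dict as a JSON-LD script element."""
    # Escape "</" so answer text cannot close the script element early.
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

# Hypothetical Q&A pair; the text should match the visible FAQ on the page.
faq = faq_jsonld([
    ("What is an answer engine?", "A search product that synthesizes a cited answer."),
])
print(as_script_tag(faq))
```

Generating the markup from the same source as the visible FAQ copy is one way to keep headings and schema aligned, as the last checklist item recommends.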

4) Breaking discovery with robots.txt, bot throttling, or fragile site performance

If crawlers can’t reliably access your pages—or your site times out—your eligibility for inclusion and citations plummets.

Why this hurts in generative engines

- Robots.txt still governs access; llms.txt is advisory only. As explained in Search Engine Land’s June 2025 clarification on llms.txt vs. robots.txt, access control remains with robots.txt. For speed of discovery on the Bing ecosystem, IndexNow helps notify updates quickly (see Bing Webmaster Blog on IndexNow, 2025).

How to fix it (quick checklist)

- Audit robots.txt to ensure critical paths are crawlable by Googlebot/Bingbot and reputable AI-related bots you want to allow.

- Calibrate WAF/bot rules; avoid harsh rate limits for verified bots.

- Improve performance (TTFB, LCP), fix broken internal links, and ensure stable 200 responses.

- Implement IndexNow to accelerate discovery for new/updated URLs.
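
The robots.txt audit and the IndexNow step can both be prototyped offline. The sketch below uses Python's standard `urllib.robotparser` to check which user agents may fetch which URLs, and builds (without sending) the JSON body that the IndexNow protocol describes. `ExampleAIBot`, the sample robots.txt, the host, and the key are placeholders, not real values.

```python
from urllib.robotparser import RobotFileParser

def audit_robots(robots_txt: str, user_agents, urls):
    """Return {(user_agent, url): allowed} for every combination."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        (agent, url): parser.can_fetch(agent, url)
        for agent in user_agents
        for url in urls
    }

def indexnow_payload(host: str, key: str, urls):
    """Build the JSON body for an IndexNow submission (nothing is sent here)."""
    return {
        "host": host,
        "key": key,
        "keyLocation": f"https://{host}/{key}.txt",  # assumes the key file sits at the site root
        "urlList": list(urls),
    }

# Placeholder rules: /admin/ is blocked for everyone, the whole site for ExampleAIBot.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: ExampleAIBot
Disallow: /
"""

report = audit_robots(
    ROBOTS_TXT,
    ["Googlebot", "Bingbot", "ExampleAIBot"],
    ["https://example.com/guides/geo", "https://example.com/admin/login"],
)
for (agent, url), allowed in sorted(report.items()):
    print(f"{agent:14} {'allow' if allowed else 'BLOCK'} {url}")
```

One caveat: Python's parser applies rules in file order, while Google documents longest-match precedence, so results can differ for files that mix overlapping Allow and Disallow rules. Confirm edge cases with each engine's own testing tools, and check the IndexNow documentation for the current submission endpoint before posting the payload.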

5) Treating GEO/AEO as a shortcut and neglecting SEO fundamentals

Answer engines still lean on the same foundations as traditional search: helpful content, clean architecture, credible links, and great UX.

Why this hurts in generative engines

- Pages that lack E‑E‑A‑T signals, sound information architecture, or technical health struggle to be selected. Google’s 2025 AI Search guidance reiterates helpfulness, original value, and technical readiness—see Google Search Central’s 2025 guidance for AI Search.

How to fix it (quick checklist)

- Build topic clusters with clear internal linking; avoid orphaned pages.

- Demonstrate expertise: author bios, first‑hand insights, original data.

- Keep technical SEO solid: HTTPS, mobile performance, indexability.

- Earn relevant links via high‑quality contributions and partnerships.

6) Optimizing for only one engine instead of multi‑platform reality

Citation behavior and content preferences differ across Google, Bing Copilot, Perplexity, and ChatGPT Browse. Focusing on one engine leaves visibility on the table.

Why this hurts in generative engines

- Transparency and sourcing vary by platform. For instance, a 2025 analysis found that AI engines can be inconsistent in citing news and other sources, which underscores the need to test across ecosystems; see Columbia Journalism Review’s March 2025 comparison of eight AI search engines. Perplexity, by contrast, shows inline citations by design, so you can target it with concise, source-backed passages (see Perplexity’s overview of citation behavior, 2024).

How to fix it (quick checklist)

- Test a fixed query set monthly across AIO, Copilot, Perplexity, and ChatGPT Browse; log inclusion and the exact source URLs.

- Tailor formats slightly (subject to change):

  - Google AIO: authoritative sources, answer-first sections, schema, entity clarity.

  - Bing Copilot: modular lists/sections; use IndexNow for fast discovery.

  - Perplexity: concise, fact-dense passages that cite primary data.

  - ChatGPT Browse: fast, accessible pages with clear definitions and references.

- Use an engine-agnostic monitoring tool to track multi-engine citations and inclusion over time. One option is Geneo, which can monitor brand visibility and citations across AI engines. Disclosure: Geneo is our product.

- If you’re comparing enterprise monitoring platforms, see this practical breakdown of trade‑offs in the Profound vs Brandlight comparison.

7) Underinvesting in brand authority and third‑party citations

Engines look for signals that others trust you: expert quotes, reputable mentions, and consistent entity information. Without off‑site validation, your likelihood of appearing (and being cited) goes down.

Why this hurts in generative engines

- Platforms favor sources that are corroborated elsewhere. Community platforms such as Reddit have signed formal data licensing agreements with major AI companies (2024–2025), which can raise the visibility of credible contributions made there. This context helps explain why authentic community engagement can influence citations; the licensing arrangements are referenced in Reddit’s 2024 SEC filings, which are widely cited in coverage of the agreements.

How to fix it (quick checklist)

- Earn credible third‑party mentions (industry orgs, .edu/.gov where relevant, reputable media) and align Organization/Person schema.

- Publish original data and co‑authored pieces with recognized experts.

- Participate authentically in niche communities; for practical tactics, review this guide to driving AI search citations through Reddit communities.

- Track mentions and citations across engines; prioritize outreach in gaps.

Next steps and how to measure progress

- Build a reproducible query set. Track inclusion rate, citation count/reuse, and time‑to‑inclusion after updates across Google AIO, Bing Copilot, Perplexity, and ChatGPT Browse.

- Trend your AI share of voice by topic cluster, and correlate with refreshes, new links, and performance fixes.

- Document platform‑specific nuances and revisit monthly; behaviors are subject to change.

- Consider using an engine-agnostic monitoring platform like Geneo to centralize AI visibility tracking, sentiment, and historical comparisons. If you already use another enterprise tool, maintain your own audit log so you can validate changes independently.
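
The metrics named in the first step above (inclusion rate and time-to-inclusion) can be computed from a plain audit log. The sketch below assumes you record one observation per engine, query, and check date, along with the source URLs the answer cited; the `Observation` record, the engine labels, and the sample data are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import date
from urllib.parse import urlparse

@dataclass
class Observation:
    checked_on: date
    engine: str
    query: str
    cited_urls: list = field(default_factory=list)  # source URLs shown in the answer

def on_domain(url: str, domain: str) -> bool:
    """True if the URL's host is the domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return host == domain or host.endswith("." + domain)

def inclusion_rate(observations, domain: str) -> dict:
    """Share of checks per engine in which at least one cited URL is on the domain."""
    hits, totals = defaultdict(int), defaultdict(int)
    for obs in observations:
        totals[obs.engine] += 1
        if any(on_domain(u, domain) for u in obs.cited_urls):
            hits[obs.engine] += 1
    return {engine: hits[engine] / totals[engine] for engine in totals}

def time_to_inclusion(observations, url: str, updated_on: date):
    """Days from an update to the first logged citation of the URL, or None."""
    dates = [o.checked_on for o in observations
             if o.checked_on >= updated_on and url in o.cited_urls]
    return (min(dates) - updated_on).days if dates else None

# Hypothetical log entries from two monthly checks.
log = [
    Observation(date(2025, 6, 1), "Perplexity", "what is geo", ["https://example.com/geo"]),
    Observation(date(2025, 6, 1), "Copilot", "what is geo", ["https://other.org/x"]),
    Observation(date(2025, 7, 1), "Copilot", "what is geo", ["https://www.example.com/geo"]),
    Observation(date(2025, 7, 1), "Perplexity", "geo checklist", []),
]
print(inclusion_rate(log, "example.com"))
print(time_to_inclusion(log, "https://example.com/geo", date(2025, 5, 20)))
```

Because time-to-inclusion is measured against check dates, its resolution is limited by how often you run the query set: a monthly cadence can only tell you that inclusion happened some time within the preceding month.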

Source notes and further reading

- Google’s perspective on helpful, structured, AI‑ready content (May 2025): Top ways to ensure your content performs well in Google’s AI Search

- Microsoft/Bing’s practical guide to inclusion in AI answers (Oct 2025): Optimizing Your Content for Inclusion in AI Search Answers

- How to structure “chunks” and passages for AI Mode (June 2025): SEL on micro‑answer optimization

- llms.txt vs. robots.txt (June 2025): SEL’s explanation of advisory vs. access‑control roles

- Speeding up discovery in the Bing ecosystem (May 2025): Bing Webmaster Blog on IndexNow

- Citation transparency challenges (Mar 2025): CJR’s comparison of eight AI engines

- Designing for platforms that always cite (Oct 2024): Perplexity’s overview of clickable citations