Best Practices for Monitoring Core Prompts in AI Visibility

Discover actionable strategies for monitoring core prompts in AI visibility. Tailored workflows for SMBs and brands, KPIs, and prompt categories for practitioners.

AI engines like ChatGPT, Perplexity, and Google’s AI Overview now surface answers that shape discovery, reputation, and conversion. If your brand isn’t visible—or is represented poorly—in those answers, you’ll feel it in traffic quality, branded search demand, and sales conversations. This guide shows you how to define your “core prompts,” set a monitoring cadence, and run a lightweight or enterprise-grade workflow depending on whether you’re an SMB or an established brand.

Why “core prompts” are your visibility backbone

Core prompts are the high-impact queries where you need consistent, accurate inclusion in AI answers. Think of them as your visibility backbone: brand/entity recall, category leadership, competitor comparisons, product/use-case queries, pricing/alternatives, sentiment/reputation, and news/recency.

AI answers are dynamic; engines retrain and re-rank, sources change, and context shifts—what looked fine last week can drift. Multiple industry trackers have documented volatility in AI answer inclusion and source citations across cohorts in 2025; for example, sector analyses reported fluctuating visibility patterns as models updated and publishers adjusted content. Practical takeaway: treat AI visibility like observability, not a one-off audit.

For foundational context on generative engine optimization frameworks, see the definitions overview in Geneo’s GEO/GSVO/GSO/AIO/LLMO explainer (2025). For a workflow-oriented lens from the search industry, Search Engine Land’s GEO content audit template offers a structured way to align content and monitoring.

The 7 core prompt categories to track (with examples)

Below are seven categories with sample prompts and what to log when you monitor. Use them as building blocks; tailor the phrasing to your brand, market, and buyer intent.

1) Brand/entity recall

The goal is accurate recognition and description wherever your name appears. Prompts like “What is [BrandName]?” or “Who offers [solution type] like [BrandName]?” reveal inclusion, placement in the answer, and whether engines explain you correctly. Log inclusion yes/no, whether you’re a primary or secondary mention, description accuracy, which citations the engine uses, and the overall sentiment tone.

2) Category leadership

Category prompts show if you make the cut for “best” and “top” lists in your space. Variations such as “Best [category] platforms for SMBs” and “Top enterprise [category] tools for compliance-heavy teams” help you see how you surface across segments. Record your rank position within the AI answer, your share of category mentions, and the quality of citations (are they authoritative docs and respected publications?).

3) Competitor comparisons

Comparisons are where narratives harden. “[BrandName] vs [Competitor]” and “Alternatives to [Competitor] similar to [BrandName]” should fairly reflect differentiators. Capture whether you’re included, how the framing positions you, any pros/cons attributed, and flags for outdated or inaccurate claims that require follow-up.

4) Product/use-case queries

Use-case prompts map to jobs-to-be-done: “How to track AI answer visibility across ChatGPT and Google AI Overview” or “Monitor sentiment of brand mentions in AI engines.” Check if your product is cited, whether the steps align with your capabilities, and if the recommended approach reflects current best practices.

5) Pricing/alternatives

Pricing shapes demand. A prompt like “[BrandName] pricing and best alternatives” should present up-to-date tiers and fair substitutes. Verify price accuracy, tier names, references to freemium/trials, and whether the alternatives listed match the buyer’s intent.

6) Sentiment/reputation

Visibility without positive framing can still cost you pipeline. Prompts such as “Is [BrandName] reliable for AI visibility?” and “User feedback on [BrandName] AI monitoring” reveal tone and recurring themes (support, accuracy, UX). Track a sentiment score, note patterns, and prioritize remediation content where needed.

7) News/recency

After launches or incidents, recency matters. “What’s new with [BrandName] this month?” and “Recent updates to [BrandName]’s AI monitoring capabilities” should include your latest announcements and correct timelines. Log whether updates appear, if they’re attributed to the right sources, and any misattributions to correct.
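The bracketed placeholders in the sample prompts above lend themselves to simple template expansion. The following is a minimal sketch, not a prescribed implementation: the `TEMPLATES` dictionary, the category keys, and the `expand_prompts` helper are hypothetical names chosen for illustration, and only four of the seven categories are shown.

```python
# Hypothetical templates keyed by category, reusing sample prompts from the
# article with [BrandName]-style placeholders rewritten as format fields.
TEMPLATES = {
    "brand_recall": ["What is {brand}?"],
    "category_leadership": ["Best {category} platforms for SMBs"],
    "competitor_comparison": ["{brand} vs {competitor}"],
    "pricing_alternatives": ["{brand} pricing and best alternatives"],
}


def expand_prompts(brand, category, competitors):
    """Return (category_key, prompt) pairs with every placeholder filled in."""
    prompts = []
    for key, templates in TEMPLATES.items():
        for template in templates:
            if "{competitor}" in template:
                # Comparison templates produce one prompt per competitor.
                for competitor in competitors:
                    prompts.append(
                        (key, template.format(brand=brand, competitor=competitor))
                    )
            else:
                # str.format ignores unused keyword arguments, so passing
                # both brand and category is safe for every other template.
                prompts.append((key, template.format(brand=brand, category=category)))
    return prompts


if __name__ == "__main__":
    for key, prompt in expand_prompts("Acme", "CRM", ["Foo", "Bar"]):
        print(f"{key}: {prompt}")
```

Keeping templates separate from brand, category, and competitor values makes it easier to add phrasing variants later without retyping every prompt.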

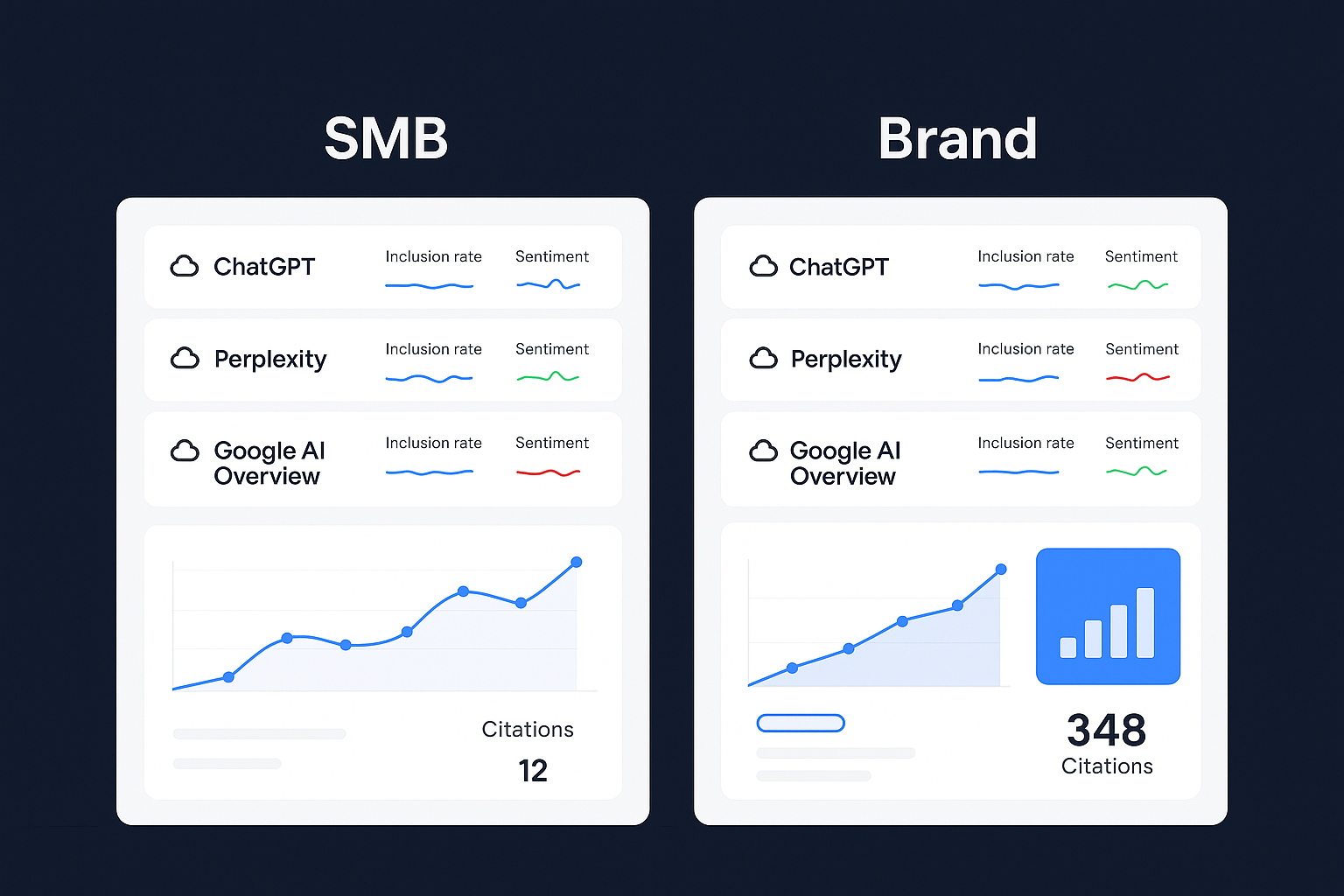

SMB vs. Established Brand: two operating models

SMBs usually need lean tooling and fast iteration. Established brands must balance visibility with governance, sentiment risk, and integration across stacks. Here’s a side-by-side view.

| Aspect | SMB model (tooling/budget, scrappiness) | Brand model (integration/governance, reputation risk) |
|---|---|---|
| Prompt set size | 25–40 core prompts | 60–120 core prompts across markets/products |
| Cadence | Weekly for top 10; biweekly for the rest | Twice weekly for top-tier; weekly for long tail |
| KPIs | Inclusion rate, top-position share, citation quality | All SMB KPIs + sentiment score, stability index, governance compliance |
| Stack | Single platform + spreadsheet log | Integrated platform, data warehouse, CI/CD gates, alerting via Slack/Teams |
| Alerts | Email summaries; threshold-based for top prompts | Real-time alerts; incident playbooks; executive summaries |
| Security | Basic workspace roles | SSO, RBAC, audit trails, prompt change approvals |
| Budget view | Free/low-cost tier; expand as ROI appears | Enterprise licenses; cross-department cost centers |

Step-by-step monitoring workflow

Design a repeatable workflow that fits your segment. Think of it like setting up observability for your brand’s AI presence.

1) Select and scope prompts. Start with the seven categories above. For SMBs, prioritize 10–15 brand, category, and competitor prompts. For brands, map prompts per product line and region, including phrasing variants like “best,” “top,” and “recommended.”

2) Log and version. Maintain a prompt register with owner, category, language, last run, and notes. Version prompts when wording changes and keep diffs so you can spot prompt drift. Store the AI answers, citations, and screenshots or JSON when available.

3) Schedule runs. Set a cadence aligned to business impact. High-value prompts get more frequent runs. Consider day-of-week and time variability. Automate where possible; manual spot checks still catch oddities.

4) Capture structured metrics. Track inclusion yes/no, rank position within the answer, share of mentions, citation domains, and sentiment tone. Compute a stability index across runs to flag volatility.

5) Tag sentiment and themes. Classify answer tone and narrative themes (accuracy, ease of use, support, governance). Negative shifts should open incidents.

6) Trigger alerts and incidents. Define thresholds: e.g., inclusion drop >20% week-over-week, sentiment turns negative for two consecutive runs, or incorrect pricing appears. For SMBs, a concise checklist suffices; for brands, route incidents to PR/legal/support as needed.

7) Governance and security (Brand). Implement role-based approvals for prompt changes, audit trails, and secure storage of logs. Gate releases: content updates or site changes shouldn’t ship if core prompts are failing; integrate a visibility check into CI/CD.
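The release gate described in the governance step can be sketched as a small check that returns a process exit code. This is an illustration under stated assumptions: the result fields (`prompt`, `tier`, `inclusion_rate`), the `gate` function, and the 0.8 inclusion floor are all hypothetical, and "failing" is interpreted here as a tier-1 prompt whose inclusion rate sits below that floor.

```python
def failing_tier1(results, min_inclusion_rate=0.8):
    """Return tier-1 prompts whose inclusion rate is below the floor."""
    return [
        r["prompt"]
        for r in results
        if r["tier"] == 1 and r["inclusion_rate"] < min_inclusion_rate
    ]


def gate(results, min_inclusion_rate=0.8):
    """Return 0 to allow the release, 1 to block it."""
    failures = failing_tier1(results, min_inclusion_rate)
    for prompt in failures:
        print(f"BLOCKED: tier-1 prompt below inclusion floor: {prompt}")
    return 1 if failures else 0


# Illustrative data only; real values would come from your monitoring log.
latest_results = [
    {"prompt": "What is Acme?", "tier": 1, "inclusion_rate": 0.95},
    {"prompt": "Acme vs Foo", "tier": 1, "inclusion_rate": 0.60},
    {"prompt": "Acme pricing and best alternatives", "tier": 2, "inclusion_rate": 0.40},
]
exit_code = gate(latest_results)
```

In a pipeline step, passing the returned value to `sys.exit` would fail the job when any tier-1 prompt is below the floor. Tier-2 prompts are deliberately ignored here so that long-tail volatility does not block releases.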

Where a platform fits: A solution like Geneo supports multi-engine tracking (ChatGPT, Perplexity, Google AI Overview), prompt history and versioning, and sentiment analysis, helping teams capture structured metrics and trigger alerts without heavy custom scripts.

Cadence, KPIs, and alerting

Your cadence should reflect risk and opportunity. For SMBs, weekly checks for the top 10 prompts and biweekly for the remainder usually strike the right balance, with a monthly executive summary. Established brands benefit from twice-weekly checks for tier‑1 prompts, weekly for tier‑2, and monthly roll‑ups per region and product line.
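The cadence above can be expressed as a lookup from segment and tier to a run interval. The sketch below assumes a register of dictionaries with hypothetical `prompt`, `tier`, and `last_run` fields, and it approximates "twice weekly" as every three days, which is a simplification rather than something the cadence prescribes.

```python
from datetime import date, timedelta

# Run intervals in days, keyed by (segment, tier).
# "Twice weekly" is approximated as every 3 days (an assumption).
CADENCE_DAYS = {
    ("smb", 1): 7,     # weekly for the top 10 prompts
    ("smb", 2): 14,    # biweekly for the remainder
    ("brand", 1): 3,   # roughly twice weekly for tier-1 prompts
    ("brand", 2): 7,   # weekly for tier-2 prompts
}


def due_prompts(register, segment, today):
    """Return prompts that have never run or whose interval has elapsed."""
    due = []
    for entry in register:
        interval = timedelta(days=CADENCE_DAYS[(segment, entry["tier"])])
        last_run = entry["last_run"]
        if last_run is None or today - last_run >= interval:
            due.append(entry["prompt"])
    return due


# Illustrative register entries.
register = [
    {"prompt": "What is Acme?", "tier": 1, "last_run": date(2025, 11, 10)},
    {"prompt": "Acme vs Foo", "tier": 2, "last_run": date(2025, 11, 10)},
    {"prompt": "Best CRM platforms for SMBs", "tier": 1, "last_run": None},
]
print(due_prompts(register, "smb", date(2025, 11, 17)))
```

A scheduler (cron, a workflow tool, or a manual weekly review) could call `due_prompts` once a day and run only what it returns.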

Across segments, anchor on five core KPIs: inclusion rate (percentage of runs where you appear), top-position share (how often you lead the answer), citation quality (distribution of authoritative sources vs. weak references), sentiment score (aggregate tone), and a stability index (variance of inclusion/position over time). Establish alert thresholds tied to business impact: a >20% inclusion drop on tier‑1 prompts should open an incident and trigger content freshness checks; two consecutive negative sentiment runs call for a root-cause review and remediation content; incorrect pricing or outdated claims require corrections to owned content and, when appropriate, outreach to influential sources.
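Three of these KPIs and two of the alert thresholds can be computed from a simple run log. The sketch below makes several assumptions: each run is a dictionary with hypothetical `included`, `position`, and `sentiment` fields; sentiment is a number where values below zero count as negative; the stability index is taken as the population variance of the 0/1 inclusion flag; and the ">20% inclusion drop" is read as a relative week-over-week drop rather than percentage points.

```python
from statistics import pvariance


def inclusion_rate(runs):
    """Share of runs in which the brand appears in the answer."""
    return sum(r["included"] for r in runs) / len(runs)


def top_position_share(runs):
    """Share of runs in which the brand leads the answer (position 1)."""
    return sum(r["position"] == 1 for r in runs) / len(runs)


def stability_index(runs):
    """Population variance of the inclusion flag; 0 means no volatility."""
    return pvariance([1 if r["included"] else 0 for r in runs])


def alerts(last_week, this_week, drop_threshold=0.20):
    """Return alert names triggered by comparing two weeks of runs."""
    found = []
    before, after = inclusion_rate(last_week), inclusion_rate(this_week)
    # Relative drop in inclusion rate, week over week (an interpretation).
    if before > 0 and (before - after) / before > drop_threshold:
        found.append("inclusion_drop")
    # Two consecutive runs with negative sentiment.
    tones = [r["sentiment"] for r in this_week]
    if any(a < 0 and b < 0 for a, b in zip(tones, tones[1:])):
        found.append("negative_sentiment_streak")
    return found


# Illustrative data for one tier-1 prompt.
last_week = [
    {"included": True, "position": 1, "sentiment": 0.5},
    {"included": True, "position": 1, "sentiment": 0.4},
    {"included": True, "position": 2, "sentiment": 0.5},
    {"included": True, "position": 1, "sentiment": 0.6},
]
this_week = [
    {"included": True, "position": 1, "sentiment": 0.2},
    {"included": True, "position": 3, "sentiment": -0.1},
    {"included": False, "position": None, "sentiment": -0.3},
    {"included": False, "position": None, "sentiment": 0.1},
]
print(alerts(last_week, this_week))
```

Citation quality and pricing accuracy are left out because they depend on how you classify sources and where your reference prices live; both would plug into `alerts` as additional checks.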

To see how algorithm changes can affect visibility, the write‑up on the Google Algorithm Update October 2025 outlines practical implications for content monitoring and answer inclusion.

Evolving your prompt set

Core prompts aren’t static. Add prompts when you launch features, expand into new regions, change pricing, or when competitors pivot. Demote or remove prompts as demand falls or when visibility stabilizes and you need to reallocate attention. Tie monitoring to campaign calendars and peak seasons; after major releases, temporarily increase cadence. Keep owned sources—docs, product pages, explainers—clear, structured, and current. Engines tend to favor authoritative, well-structured sources; stale content invites drift and third‑party narratives.

Common pitfalls (and how to avoid them)

Over-focusing on a single engine is risky. Monitor at least ChatGPT, Perplexity, and Google AI Overview; cross‑engine discrepancies reveal where your source strategy needs work. Don’t overlook sentiment: visibility framed negatively still costs pipeline, so track tone and themes and publish support docs, tutorials, and case studies to shift the narrative. Lack of version control is another trap; without prompt history you can’t attribute changes to wording versus model updates—keep diffs and timestamps. Governance often gets deprioritized in large organizations: require approvals for prompt changes and run pre‑release checks, treating visibility incidents like performance regressions. Finally, thin internal sources hurt you; if docs are vague or dated, engines will lean on third parties. Invest in clear, structured updates and validate improvements in your next run.
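The version-control point above can be illustrated with a small prompt record that keeps timestamped versions and a word-level diff. This is a sketch using Python's standard `difflib` module; the `PromptRecord` class and its field names are hypothetical, loosely following the register fields mentioned earlier (owner, category).

```python
import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PromptRecord:
    prompt_id: str
    owner: str
    category: str
    # Each version is a (timestamp, text) tuple, oldest first.
    versions: list = field(default_factory=list)

    def update(self, text, when=None):
        """Append a new version only when the wording actually changed."""
        if self.versions and self.versions[-1][1] == text:
            return False
        self.versions.append((when or datetime.now(timezone.utc), text))
        return True

    def latest_diff(self):
        """Word-level additions and removals between the last two versions."""
        if len(self.versions) < 2:
            return []
        old, new = self.versions[-2][1], self.versions[-1][1]
        # ndiff prefixes removed words with "- " and added words with "+ ".
        return [d for d in difflib.ndiff(old.split(), new.split()) if d[0] in "+-"]


record = PromptRecord("cat-001", "marketing", "category_leadership")
record.update("Best CRM platforms for SMBs")
record.update("Top CRM platforms for SMBs")
print(record.latest_diff())
```

With timestamps on every version, a visibility change that follows a wording edit can be separated from one that appears while the prompt text stayed the same, which points toward a model or source update instead.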

Putting it all together: a simple operating rhythm

Define and tier your prompts, log and version every change, run scheduled checks that capture structured metrics, tag sentiment and open incidents when thresholds trip, update sources based on what you learn, and validate fixes in the next run. Close the loop with monthly roll‑ups and a quarterly review to evolve the prompt set. Run this rhythm consistently and visibility stops being guesswork.

A platform can streamline this. Geneo helps teams track visibility across engines, analyze sentiment, and maintain prompt history so marketers can act quickly when answers drift.

Next steps

If you’re an SMB, start with 25–40 prompts across the seven categories, log results weekly for your top 10 prompts and biweekly for the rest, and focus on inclusion rate, top-position share, and citation quality. If you’re an established brand, integrate visibility checks into release pipelines, track sentiment across regions, and enforce governance.

Ready to operationalize AI visibility? Start a free trial of Geneo and put your core prompt monitoring on solid ground.