ChatGPT Plugins to GPT Store: 2025 AI Search Optimization Guide

Discover how OpenAI's 2025 shift from Plugins to GPTs rewrites AI search optimization. Get expert analysis, measurement strategies, and actionable steps.

Last updated: 2025-09-04

Update policy: We refresh this analysis every 30–45 days, or sooner if OpenAI publishes major changes to GPTs, Actions, or the GPT Store.

Executive summary

OpenAI’s 2023 “plugins” era has effectively given way to custom GPTs, the GPT Store, and Actions (function/tool calling) as the primary extensibility model. For marketers and SEO leaders, this reframes ChatGPT from a curiosity into both a distribution surface and an integration layer. The practical mandate for 2025: optimize your brand for inclusion and citation inside AI answers, decide whether a custom GPT is a viable channel, and implement cross-assistant measurement (ChatGPT, Perplexity, Google AI Overviews) with governance.

Verification notes:

- OpenAI has not published a single definitive post declaring an official “plugin sunset” date. The shift is clear from first-party materials, but timelines are implicit. See Introducing GPTs (OpenAI, 2023), the DevDay post, which states that Actions build on “insights from our plugins beta.”

- The GPT Store launched publicly in early 2024 for Plus/Team/Enterprise users; builder monetization was announced for Q1 2024, but specific ongoing revenue program details remain limited in official posts as of September 2025, per Introducing the GPT Store (OpenAI, 2024).

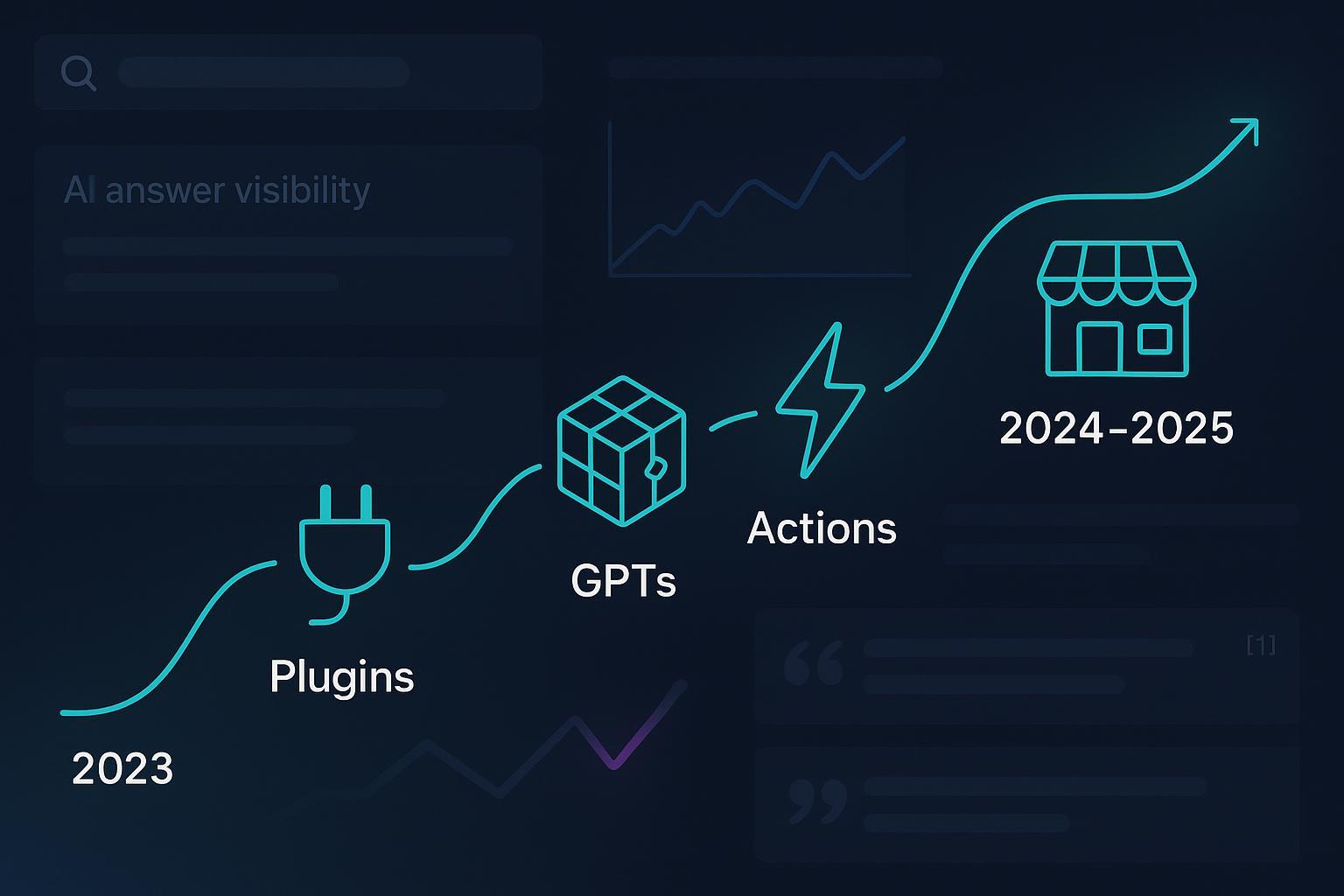

What changed (2023–2025): Plugins → GPTs, Actions, and the GPT Store

- Nov 2023: OpenAI introduced custom GPTs and previewed the Store, positioning Actions as a way to connect GPTs to external APIs “like plugins,” per Introducing GPTs (OpenAI, 2023).

- Nov 2023: The company also announced the Assistants API and a formal tool/function calling model, making agent-like behaviors (retrieval, code, tools) standard, per New models and developer products announced at DevDay (OpenAI, 2023).

- Jan 2024 onward: The GPT Store rolled out publicly to Plus/Team/Enterprise with verification, review, and discovery mechanics, per Introducing the GPT Store (OpenAI, 2024).

The practical takeaway: “Plugins” as a framing is outdated. GPTs plus Actions are the go-forward path for brand integrations and distribution inside the ChatGPT ecosystem.

How ChatGPT chooses and cites information today

Two things matter for visibility inside ChatGPT answers: how the model can fetch information, and what it prefers to cite.

- Web browsing/search is an opt-in tool that lets models fetch current information and surface links within responses, per Tools — Web search (OpenAI Platform Docs, 2025). When enabled in a GPT, this becomes a direct path to source inclusion and citations.

- GPTs can call external APIs via Actions defined with OpenAPI schemas, enabling transactional or data-enrichment flows. See Function calling in the OpenAI API (OpenAI Help, 2025) and the Agents SDK guide (OpenAI, 2025) on tool invocation and structured outputs.
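To make the Actions path more concrete, here is a minimal sketch of what an OpenAPI description for a single read-only endpoint could look like, written as a Python dict so it can be checked and serialized to JSON. The server URL, path, `operationId`, and `sku` parameter are hypothetical placeholders, not taken from OpenAI's documentation or any real API. The field names follow the public OpenAPI 3.1 specification, and the small checker is only an illustrative pre-publication lint, not an official validator.

```python
import json

# Hypothetical OpenAPI 3.1 description for one read-only endpoint.
# The URL, path, operationId, and parameter name are illustrative assumptions.
ACTION_SPEC = {
    "openapi": "3.1.0",
    "info": {"title": "Example Pricing API", "version": "1.0.0"},
    "servers": [{"url": "https://api.example.com"}],
    "paths": {
        "/v1/pricing": {
            "get": {
                "operationId": "getPricing",
                "summary": "Return the current price for a product SKU.",
                "parameters": [
                    {
                        "name": "sku",
                        "in": "query",
                        "required": True,
                        "schema": {"type": "string"},
                    }
                ],
                "responses": {
                    "200": {"description": "Current price for the SKU."}
                },
            }
        }
    },
}

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options"}


def missing_operation_fields(spec: dict) -> list[str]:
    """List operations that lack an operationId or a summary."""
    problems = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            for field_name in ("operationId", "summary"):
                if not operation.get(field_name):
                    problems.append(
                        f"{method.upper()} {path}: missing {field_name}"
                    )
    return problems


# Serialize for pasting into a schema editor, then lint it.
spec_json = json.dumps(ACTION_SPEC, indent=2)
print(missing_operation_fields(ACTION_SPEC))
```

Clear operation names and summaries matter because the model has to pick the right operation from the description alone; a lint like this is one cheap way to catch gaps before publishing.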

Across AI engines, citation patterns are not uniform. In 2024–2025 tracking, Profound reported that ChatGPT disproportionately cited Wikipedia (47.9% share) while Perplexity favored Reddit (46.7%) and Google AI Overviews also leaned on Reddit (21%), with different mixes for other sources, per AI platform citation patterns (Profound, 2024–2025). Independent testing of AI search products found inconsistent citations and broken links, with Perplexity generally more transparent, per Tow Center’s analysis of eight AI search engines (Columbia Journalism Review, 2025). Overlap between AI citations and Google’s top results also varies by platform, per the AI mode comparison study (SEMrush, 2025).

Implication: You can’t assume Google-style ranking factors translate directly into AI answer inclusion. Optimize for entity clarity, authoritative sources that these systems already favor, and machine-readable access to your data.

The AI Answer Visibility Playbook (2025 edition)

- Entity and evidence readiness

  - Canonicalize your entity data (brand, products, leaders, locations) and ensure consistent facts across high-authority profiles (e.g., Wikipedia/Wikidata if eligible, industry directories, official docs). The higher the authority of your corroborating sources, the better the odds of reliable inclusion.

  - Publish clear, verifiable claims and cite primary evidence. Given citation heterogeneity, aim for multiple credible corroborators.

- Machine-readable access

  - Provide structured data (Schema.org) for key assets and maintain crawlable documentation. When GPTs browse, parsable metadata matters.

  - Expose public APIs and documentation where appropriate, with clean OpenAPI specs to support Actions design. See Function calling in the OpenAI API (OpenAI Help, 2025) for schema rigor and argument validation.

- Content designed for direct answers

  - Lead with concise, factual summaries and expandable detail beneath. Practitioners emphasize answer-first formatting to increase inclusion chances in AI summaries; see guidance like Dan Shure’s advice quoted in Tripledart’s ChatGPT SEO guide (2025).

- Source credibility and maintenance

  - Build and maintain authoritative artifacts (whitepapers, data portals, FAQs) and keep them current. Broken links and outdated claims reduce trust and likely lower your chances of being cited. AI engines already cite and link inconsistently, as CJR’s 2025 evaluation noted, so avoid adding failure points of your own.

- Technical alignment with GPTs and Actions

  - If you support transactional or data queries, consider providing an Action for your GPT with robust authentication, timeouts, and domain verification per enterprise guidance in GPT actions — Domain settings (OpenAI Help, 2025). The Agents SDK (OpenAI, 2025) can help manage tool loops and structured outputs.
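As one illustration of the structured-data item in the playbook above, the sketch below generates a Schema.org `Organization` JSON-LD tag from canonical entity data. The brand name and URLs are hypothetical placeholders, and `organization_jsonld` is a helper name invented for this example. `@context`, `@type`, `name`, `url`, and `sameAs` are standard Schema.org vocabulary.

```python
import json


def organization_jsonld(name: str, url: str, same_as: list[str]) -> str:
    """Build a Schema.org Organization JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        # Profiles that corroborate the same entity on other sites.
        "sameAs": same_as,
    }
    # Escape "</" so a stray "</script>" in the data cannot close the tag;
    # "\/" is a valid JSON escape for "/".
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical brand data, for illustration only.
tag = organization_jsonld(
    name="Example Co",
    url="https://www.example.com",
    same_as=[
        "https://www.wikidata.org/wiki/Q0000000",
        "https://www.linkedin.com/company/example-co",
    ],
)
print(tag)
```

Generating the tag from a single canonical record, rather than hand-editing it per page, is one way to keep entity facts consistent across properties.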

Should you build a custom GPT? Distribution mechanics and ROI questions

Treat a custom GPT as a channel, not a trophy. Distribution hinges on three controllables: configuration quality, compliance, and ongoing engagement.

- Configuration: Store-ready GPTs require instructions, prompt starters, capabilities (e.g., Web Search), and optional knowledge files and Actions. See step-by-step details in Creating a GPT (OpenAI Help, 2025).

- Publishing and discovery: To be listed, set visibility to Everyone, complete builder verification, and pass policy review. Refer to Building and publishing a GPT (OpenAI Help, 2025) and the Store overview in Introducing the GPT Store (OpenAI, 2024).

- Compliance and safety: Review OpenAI’s restrictions—noncompliant GPTs can be delisted. See Usage policies (OpenAI, current).

Decision rubric for marketers and PMs

- Build if: Your users already search for your brand’s workflows in ChatGPT, you can expose unique data or actions via APIs, and you can maintain updates and support.

- Defer if: You lack a clear use case, have no distinct data or action advantage, or cannot resource the upkeep of instructions, knowledge, and Actions.

- Measure ROI: Track engagement inside ChatGPT (sessions, completions), off-platform conversions, and your Share of Answers for target queries before/after launch.

Measurement and governance: from anecdotes to accountable KPIs

Because AI answer engines differ in citation behavior, you need cross-assistant measurement to validate strategy and budget. A practical KPI set:

- Share of Answers (SoA): Percent of a query set where your brand is cited or appears in AI answers (by engine: ChatGPT with browsing, Perplexity, Google AI Overviews).

- Citation quality: Authority of domains citing you; presence and prominence of live, clickable links.

- Sentiment: Polarity/subjectivity of brand mentions within answers over time.

- Entity consistency: Alignment of key attributes (products, pricing ranges, leadership, locations) across answers.
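Share of Answers, as defined above, reduces to a per-engine ratio. The sketch below assumes each audit result is stored as a dict with an `engine` label and a `brand_cited` flag. Those field names and the sample records are assumptions made for this example, not a standard schema.

```python
from collections import defaultdict


def share_of_answers(records: list[dict]) -> dict[str, float]:
    """Return, per engine, the fraction of audited queries citing the brand."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for record in records:
        totals[record["engine"]] += 1
        if record["brand_cited"]:
            hits[record["engine"]] += 1
    return {engine: hits[engine] / totals[engine] for engine in totals}


# Hypothetical audit results, for illustration only.
sample = [
    {"engine": "chatgpt", "query": "best crm for startups", "brand_cited": True},
    {"engine": "chatgpt", "query": "crm pricing comparison", "brand_cited": False},
    {"engine": "perplexity", "query": "best crm for startups", "brand_cited": True},
]
print(share_of_answers(sample))  # {'chatgpt': 0.5, 'perplexity': 1.0}
```

Reporting SoA separately per engine, rather than as one blended number, keeps the platform differences described in this section visible.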

External benchmarks highlight why this matters: Profound’s 2024–2025 tracking shows platform biases toward certain sources (e.g., Wikipedia and Reddit), Columbia Journalism Review (2025) documents inconsistent linking, and SEMrush’s 2025 study finds that overlap with Google’s top results differs by platform.

Operationalizing the dashboard

- Data collection: Run scheduled audits for your query set across ChatGPT (with browsing enabled on every run so results are comparable), Perplexity, and Google AI Overviews. Log sources, link presence, and sentiment snapshots.

- Tooling: Platforms such as Geneo focus on multi-assistant brand monitoring—tracking brand exposure, cited links, mentions, historical query trails, and sentiment—useful for before/after validation and board reporting. Teams often complement this with internal logs of API usage from their own Actions to correlate with engagement.

- Governance: Establish thresholds (e.g., drop in SoA > 15% week-over-week triggers investigation), define remediation playbooks (update docs, reinforce entity data, publish clarifications), and set a monthly review with cross-functional stakeholders.
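The governance threshold above can be expressed as a simple check. This sketch assumes the 15% figure means a relative week-over-week decline (for example, 0.40 to 0.30 is a 25% drop) rather than a change in percentage points. The text does not specify which, so adjust the formula if your team defines it differently. The function name is hypothetical.

```python
def soa_drop_alert(previous: float, current: float, threshold: float = 0.15) -> bool:
    """Return True when SoA fell by more than `threshold`, relative to last week."""
    if previous <= 0:
        return False  # no baseline to compare against
    relative_drop = (previous - current) / previous
    return relative_drop > threshold


# Hypothetical weekly SoA values, for illustration only.
print(soa_drop_alert(previous=0.40, current=0.30))  # True: a 25% relative drop
print(soa_drop_alert(previous=0.40, current=0.36))  # False: a 10% relative drop
```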

Example improvement loop (anonymized)

- Week 0: Baseline audit shows low inclusion for product comparisons; citations skew to community forums.

- Weeks 1–2: Publish a clean, evidence-backed comparison page with structured data; update developer docs and OpenAPI schema for your pricing/availability endpoint.

- Weeks 3–4: Re-audit shows higher SoA and more citations to your docs; sentiment shifts from neutral to positive as answers reference clearer facts. Dashboards (e.g., in Geneo) capture uplift and source quality changes for executive reporting.

Methodology (how to run trustworthy audits)

- Fixed query set per segment (n ≥ 50 per segment), weekly cadence.

- For ChatGPT, use a consistent GPT with Web Search toggled on; capture links returned.

- Record date/time, engine version when available, full answer text, and all cited URLs.

- Annotate sentiment with a standard rubric; spot-check with human review.
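The capture step in this methodology could be sketched as a small record type that stores the fields listed above and pulls cited URLs out of the answer text. The class name, field names, and the URL regular expression are assumptions made for this example. The regex is deliberately simple and will truncate URLs that contain parentheses, so treat it as a starting point rather than a complete parser.

```python
import json
import re
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional
from urllib.parse import urlparse

# Simplified pattern: stops at whitespace, quotes, and closing brackets.
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


def extract_urls(text: str) -> list[str]:
    """Return unique URLs in order of appearance, without trailing punctuation."""
    seen: list[str] = []
    for match in URL_PATTERN.findall(text):
        url = match.rstrip(".,;:")
        if url not in seen:
            seen.append(url)
    return seen


@dataclass
class AuditRecord:
    engine: str
    query: str
    answer_text: str
    engine_version: Optional[str] = None  # not every engine exposes one
    captured_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    cited_urls: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Fall back to parsing the answer text when no URLs were supplied.
        if not self.cited_urls:
            self.cited_urls = extract_urls(self.answer_text)

    def cited_domains(self) -> list[str]:
        """Return the sorted, de-duplicated domains of the cited URLs."""
        return sorted({urlparse(u).netloc.lower() for u in self.cited_urls})

    def to_jsonl(self) -> str:
        """Serialize the record as one JSON line for an append-only log."""
        return json.dumps(asdict(self), ensure_ascii=False)


# Hypothetical answer text, for illustration only.
record = AuditRecord(
    engine="chatgpt",
    query="what is example co",
    answer_text=(
        "See https://example.com/docs, and "
        "(https://en.wikipedia.org/wiki/Example)."
    ),
)
print(record.cited_domains())
print(record.to_jsonl())
```

Writing one JSON line per query and run keeps the log append-only, which makes week-over-week comparisons straightforward.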

Risks, compliance, and maintenance

- Policy and review risk: Noncompliant GPTs won’t be discoverable. Follow Usage policies (OpenAI) and include a privacy policy URL when using Actions, as outlined in Building and publishing a GPT (OpenAI Help, 2025).

- Enterprise constraints: Some organizations restrict Actions domains and enforce timeouts; plan for these controls per GPT actions — Domain settings (OpenAI Help, 2025).

- Model drift and content decay: AI answers can change as models and corpora update. Institute quarterly content reviews, and monitor SoA and sentiment continuously.

- Over-reliance on one surface: Discovery in the GPT Store does not work the same way as discovery in a mobile app store, so don’t plan around app-store assumptions. Balance the Store with owned channels and ensure your web properties remain the canonical source.

Practitioner checklist (condensed)

- Confirm entity consistency across authoritative sources; add/refresh structured data.

- Publish evidence-backed, answer-first pages for your core topics.

- If relevant, expose stable public APIs and document them with OpenAPI to enable Actions.

- Build a custom GPT only with a clear use case; configure Web Search, prompt starters, and compliant Actions.

- Implement a cross-assistant audit: SoA, citation quality, sentiment, entity consistency.

- Set governance thresholds and remediation playbooks; review monthly.

What to watch next

- Official updates on GPT Store discovery signals or monetization mechanics, starting from OpenAI’s Store announcement (2024).

- Changes to browsing/web-search tools and connectors that alter how citations are displayed, per the evolving Tools — Web search docs (OpenAI).

- Shifts in citation patterns across engines, tracked by longitudinal studies like Profound’s 2024–2025 analysis and comparisons such as SEMrush’s 2025 study.

If you’re ready to baseline and improve your AI answer visibility, consider running a monitored audit with a cross-assistant tool like Geneo. Start here: Geneo (AI search visibility monitoring).