How Autonomous AI Agents Are Redefining Content Marketing Automation in 2025

In 2025, the leap from task-level “copilots” to autonomous, goal-seeking AI agents is reshaping content marketing. What’s different isn’t just speed; it’s closed-loop decisioning. Agentic systems can plan multi-step work, execute across channels, and learn from performance telemetry without constant human prompting. For CMOs and content leaders heading into Q4 planning, the opportunity—and the responsibility—is to turn this capability into measurable, governed outcomes.

What’s new in 2025—and why it matters

Adoption has accelerated. In the United States, the PwC AI Agent Survey (May 2025) reports that 79% of companies have adopted AI agents in some capacity, 88% plan to increase AI budgets over the next year, and 66% of adopters see measurable productivity value. Executive urgency is high, with many leaders concerned about falling behind competitors.

Analysts have also elevated the theme. Gartner has flagged “agentic AI” as a top 2025 strategic trend, with enterprises moving from pilots toward production, as summarized in the Productive Edge overview of Gartner’s 2025 tech trends. Treat this as qualitative framing, since the detailed Gartner report requires subscription access.

Meanwhile, operational maturity remains a gap. McKinsey’s 2025 workplace AI analysis emphasizes that while investment is near-universal, few organizations consider themselves at true maturity; progress requires orchestration, governance, and measurement discipline, as outlined in the McKinsey “AI in the workplace” 2025 report.

Bottom line: 2025 is the year to translate interest into accountable, agentic workflows that are observable, auditable, and tied to revenue outcomes.

From assistants to autonomous workflows: What actually changes

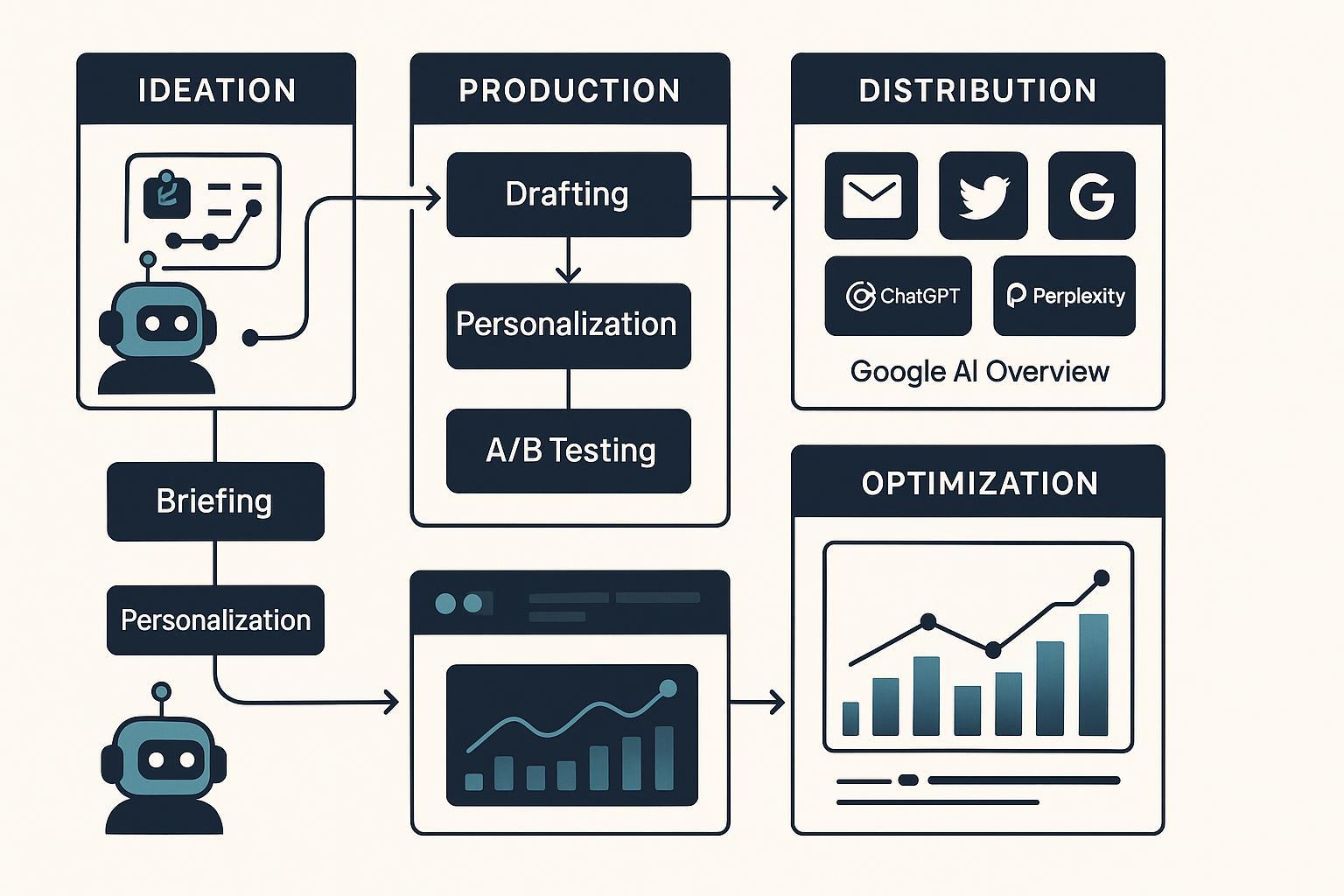

Autonomous agents don’t replace your marketing stack—they orchestrate it. The practical shift is from single-step assistive tools to multi-agent systems that plan, execute, and refine end-to-end content operations.

- Ideation and audience insight: Research agents synthesize trend signals, segment insights, and competitive gaps to prioritize topics. In practice, enterprise platforms are enabling agent-driven orchestration and experimentation; for example, Braze’s 2025 updates emphasize agent consoles and real-time decisioning for multichannel personalization, as described in the Braze 2025 announcements.

- Production and QA: Briefing agents generate SEO- and brand-aligned briefs, while editor agents enforce voice and factual fidelity via retrieval-augmented generation (RAG) and citation checks. Content operations suites are introducing dedicated agents for planning, metadata enrichment, and brand compliance; see Aprimo’s platform overview of AI agents for content ops in the Aprimo AI Agents platform page (2025).

- Distribution and personalization: Decisioning agents tailor messages by microsegment, select channels, and optimize send-times. Continuous experimentation (variant generation and bandit-style allocation) becomes the default.

- Optimization: Optimization agents watch telemetry (engagement, conversions, anomalies), trigger refreshes, and reallocate spend within guardrails. Crucially, they also ingest how your brand appears in answer engines, feeding new hypotheses back into the plan.

This last point is the differentiator. In 2025, the visibility that matters increasingly includes AI-driven answers (ChatGPT, Perplexity, Google AI Overview), not only traditional search and social. That’s where closed-loop learning gains power.
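To make the "bandit-style allocation" mentioned under distribution and personalization concrete, here is a minimal sketch using Thompson sampling, one common bandit technique. It is an illustration under stated assumptions, not how any particular platform implements decisioning: the class name, the variant labels, and the simulated click rates are all hypothetical.

```python
import random


class ThompsonSampler:
    """Beta-Bernoulli Thompson sampling over content variants (illustrative only)."""

    def __init__(self, variants, seed=None):
        self.rng = random.Random(seed)
        # One [alpha, beta] pair per variant, starting from a uniform Beta(1, 1) prior.
        self.stats = {v: [1, 1] for v in variants}

    def choose(self):
        # Draw a plausible conversion rate for each variant and serve the highest draw.
        draws = {v: self.rng.betavariate(a, b) for v, (a, b) in self.stats.items()}
        return max(draws, key=draws.get)

    def record(self, variant, converted):
        # A conversion increments alpha; a non-conversion increments beta.
        self.stats[variant][0 if converted else 1] += 1


def simulate(true_rates, rounds=5000, seed=7):
    """Simulate traffic against hypothetical true rates; return how often each variant was served."""
    sampler = ThompsonSampler(true_rates, seed=seed)
    world = random.Random(seed + 1)  # separate RNG standing in for real audience behavior
    served = {v: 0 for v in true_rates}
    for _ in range(rounds):
        variant = sampler.choose()
        served[variant] += 1
        sampler.record(variant, world.random() < true_rates[variant])
    return served
```

Calling `simulate({"A": 0.02, "B": 0.10})` should show most traffic drifting toward the stronger variant as evidence accumulates, which is the practical difference from a fixed 50/50 A/B split.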

The measurement loop: Make AI agents accountable

To harness autonomy responsibly, define a KPI tree and instrument each stage. A pragmatic structure:

- Content velocity: assets created and shipped; cycle time per asset.

- Entity/topic coverage: how thoroughly target entities and topics are addressed (ties to SEO and to answer-engine coverage).

- AI search visibility share: your brand’s presence and sentiment in AI-generated answers across key platforms.

- Engagement: CTR, dwell time, unsubscribe rate by channel.

- Conversions and ROMI: lead-to-opportunity, CAC, and return on marketing investment.

- Quality and governance: factual fidelity pass rates, brand voice alignment scores, approval lead times, rollback incidents.

As you formalize this loop, it helps to adopt the language and methods of Generative Engine Optimization: see Generative Engine Optimization (GEO) for a concise overview of optimizing for answer engines.

To operationalize AI visibility measurement, teams often add purpose-built monitoring alongside their analytics stack. One option is Geneo, which monitors AI search visibility across ChatGPT, Perplexity, and Google AI Overview, surfaces brand mentions, sentiment, and linkbacks, and maintains historical query tracking for trend analysis. Disclosure: Geneo is our product.

For a concrete sense of metrics like Total Mentions, Platform Presence, and Sentiment as they appear in practice, review this example AI visibility query report.

How this closes the loop:

- Agents plan and produce based on intent and entity coverage targets.

- Distribution agents tailor variants and allocate traffic.

- Measurement captures engagement, conversions, and AI answer visibility.

- Optimization agents trigger content refreshes and channel tweaks based on gaps (e.g., entity missing in Perplexity answers) and shift spend within bounded autonomy.
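The gap-driven step in the loop above (for example, an entity missing from Perplexity answers) can be sketched as follows. This is a simplified illustration: the function names and sample inputs are hypothetical, and plain substring matching stands in for whatever entity detection a real monitoring tool would use.

```python
def find_visibility_gaps(target_entities, answers_by_platform):
    """Return {platform: sorted target entities never mentioned in that platform's answers}."""
    gaps = {}
    for platform, answers in answers_by_platform.items():
        text = " ".join(answers).lower()
        # Naive case-insensitive substring check; an assumption made for brevity.
        missing = sorted(e for e in target_entities if e.lower() not in text)
        if missing:
            gaps[platform] = missing
    return gaps


def plan_refreshes(gaps):
    """Order entities for refresh, putting those missing on the most platforms first."""
    counts = {}
    for missing in gaps.values():
        for entity in missing:
            counts[entity] = counts.get(entity, 0) + 1
    return sorted(counts, key=lambda e: (-counts[e], e))
```

The output of `plan_refreshes` is only a prioritized list; whether a refresh ships automatically or waits for review is a governance decision, not a measurement one.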

Governance and risk controls for autonomous execution

Autonomy without guardrails is a liability. Leading frameworks emphasize observability, approvals, and data governance:

- Human-in-the-loop tiers: Require approvals for sensitive topics or high-risk actions; allow low-risk changes (e.g., minor copy edits) to flow autonomously within thresholds.

- Telemetry and audit trails: Log prompts, plans, diffs, decisions, and outcomes. Ensure “who approved what” is discoverable.

- Evaluation sandboxes and update windows: Test new models/agents in a controlled environment; schedule agent/model updates and run regression checks before deployment.

- Data governance: Isolate PII and apply role-based access. Ensure vendor DPAs are in place and observable.

- Budget guardrails: Set autonomy bands (e.g., ±10% reallocation authority per channel per week) with escalation triggers.

These practices align with enterprise guidance on orchestrating AI in the workplace (maturity, risk, and controls) in the McKinsey “AI in the workplace” 2025 report, and with the governance considerations for sales and marketing in Deloitte’s 2025 perspective on AI in investment management sales and marketing.
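As a rough sketch of how approval tiers, audit trails, and the ±10% budget band could fit together in code, consider the following. Everything here is hypothetical: the topic list, the action kinds, and the decision labels are placeholders, and the check covers a single proposed shift rather than tracking cumulative weekly changes per channel.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

SENSITIVE_TOPICS = {"pricing", "legal", "health"}  # hypothetical; define your own list
MAX_SHIFT = 0.10  # the ±10% reallocation band used as an example in this article


@dataclass
class Action:
    kind: str                      # e.g. "copy_edit", "publish", "budget_shift"
    topic: str
    channel: str = ""
    current_budget: float = 0.0
    proposed_budget: float = 0.0


@dataclass
class Governor:
    audit_log: list = field(default_factory=list)

    def review(self, action: Action) -> str:
        """Decide on an action and record the decision so it is discoverable later."""
        decision = self._decide(action)
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "decision": decision,
        })
        return decision

    def _decide(self, action: Action) -> str:
        if action.topic in SENSITIVE_TOPICS:
            return "needs_approval"          # sensitive topics always go to a human
        if action.kind == "budget_shift":
            if action.current_budget <= 0:
                return "needs_approval"      # no baseline to measure the band against
            shift = abs(action.proposed_budget - action.current_budget) / action.current_budget
            return "auto_approved" if shift <= MAX_SHIFT else "escalate"
        if action.kind == "copy_edit":
            return "auto_approved"           # low-risk change flows within thresholds
        return "needs_approval"              # default to human review for anything else
```

The design choice worth noting is the final fallthrough: any action the policy does not explicitly recognize goes to a human rather than being auto-approved.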

A 30–60 day pilot playbook

Start small, but make it real. A focused pilot proves the value and surfaces gaps before you scale.

Week 0–1: Define scope and exit criteria

- Pick one workflow (e.g., content refresh program for top 50 evergreen assets).

- KPIs: cycle time, quality (factual pass rate, brand voice score), engagement delta, AI visibility share shift.

- Guardrails: autonomy thresholds, approval tiers, rollback plan.

Week 1–2: Instrumentation and integration

- Set up observability (logs, diffs, decision trails).

- Integrate with your CMS/DAM, MAP, CDP, and analytics. Configure feature flags for agent autonomy by environment (dev/stage/prod).
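The per-environment feature flags above might look something like this minimal sketch. The capability names and the specific on/off choices are assumptions for illustration; a real setup would likely live in your existing feature-flag service rather than a hard-coded dictionary.

```python
# Hypothetical autonomy flags: which actions agents may take without a human, per environment.
AUTONOMY_FLAGS = {
    "dev":   {"auto_copy_edit": True, "auto_publish": True,  "auto_budget_shift": True},
    "stage": {"auto_copy_edit": True, "auto_publish": True,  "auto_budget_shift": False},
    "prod":  {"auto_copy_edit": True, "auto_publish": False, "auto_budget_shift": False},
}


def is_allowed(env, capability):
    """Fail closed: unknown environments or capabilities are treated as disabled."""
    return AUTONOMY_FLAGS.get(env, {}).get(capability, False)
```

Failing closed means a typo in an environment name or a newly added capability results in a human review, not an unintended autonomous action.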

Week 2–4: Supervised execution

- Research and briefing agents propose refresh plans; editor agents deliver RAG-checked drafts; distribution agents deploy A/B variants.

- Human reviewers approve high-risk items; low-risk updates flow within bounds.

Week 4–6: Analyze and iterate

- Hold weekly agent performance stand-ups. Retire underperforming prompts/policies and promote winning patterns.

- Update autonomy bands based on observed stability; schedule model/agent updates in defined windows with regression tests.

Exit criteria

- Quantifiable improvements vs. baseline (e.g., −30% cycle time, +15% engagement on refreshed assets, measurable uptick in answer-engine presence).

- No critical governance incidents; acceptable rollback frequency; telemetry completeness above target.
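One way to keep the exit decision objective is to encode the criteria as explicit checks against the baseline. The sketch below assumes hypothetical metric names and thresholds; substitute whatever your pilot actually defined in Week 0–1.

```python
def pct_change(baseline, observed):
    """Relative change versus baseline, e.g. -0.30 means a 30% reduction."""
    return (observed - baseline) / baseline


def evaluate_pilot(baseline, observed, targets):
    """Return (passed, per-check results) for the pilot's exit criteria."""
    checks = {
        "cycle_time": pct_change(baseline["cycle_time_days"],
                                 observed["cycle_time_days"]) <= targets["cycle_time_change"],
        "engagement": pct_change(baseline["engagement_rate"],
                                 observed["engagement_rate"]) >= targets["engagement_change"],
        "ai_visibility": observed["visibility_share"] > baseline["visibility_share"],
        "no_critical_incidents": observed["critical_incidents"] == 0,
        "rollback_rate": observed["rollback_rate"] <= targets["max_rollback_rate"],
        "telemetry": observed["telemetry_completeness"] >= targets["min_telemetry_completeness"],
    }
    return all(checks.values()), checks
```

With the example thresholds from this section, `targets` would carry `cycle_time_change=-0.30` and `engagement_change=0.15`; the rollback and telemetry thresholds are left for each team to set.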

KPIs and simple formulas to keep everyone aligned

- Content velocity: Assets shipped per week; cycle time = elapsed time from “Ready for brief” to “Published.”

- Entity/topic coverage: Coverage score = Covered target entities / Total target entities within taxonomy.

- AI search visibility share: Visibility share = Answer sets that mention your brand / Total answer sets analyzed across target platforms.

- ROMI: ROMI = (Attributed revenue − Marketing spend) / Marketing spend.

- Brand voice alignment: Alignment score from rubric-based review or model-assisted classifier calibrated on your style guide.

- Governance health: Approval lead time; Rollback rate; Factual fidelity pass rate (RAG/citation checks).
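The ratio-style formulas above translate directly into small helper functions. This is a minimal sketch: the function names and sample figures are illustrative, and the brand-mention check uses simple case-insensitive substring matching as a stand-in for real mention detection.

```python
from datetime import date


def cycle_time_days(ready_for_brief, published):
    """Days elapsed between the 'Ready for brief' and 'Published' dates."""
    return (published - ready_for_brief).days


def coverage_score(covered_entities, target_entities):
    """Share of target entities that existing content covers."""
    targets = set(target_entities)
    return len(set(covered_entities) & targets) / len(targets)


def visibility_share(answer_sets, brand):
    """Share of analyzed answer sets that mention the brand."""
    mentioned = sum(1 for answer in answer_sets if brand.lower() in answer.lower())
    return mentioned / len(answer_sets)


def romi(attributed_revenue, marketing_spend):
    """Return on marketing investment: (revenue - spend) / spend."""
    return (attributed_revenue - marketing_spend) / marketing_spend


# Example with made-up figures: 150,000 attributed revenue on 50,000 spend.
print(romi(150_000, 50_000))  # 2.0
print(cycle_time_days(date(2025, 9, 1), date(2025, 9, 12)))  # 11
```

Agreeing on these definitions in code, even informally, helps avoid the common situation where two dashboards report different values for the same KPI.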

For teams formalizing answer-engine strategy, revisit the foundations of Generative Engine Optimization (GEO) to ensure your entity coverage and prompt structures map to conversational discovery.

Looking ahead: Building your 2026 roadmap

- Expand from one workflow to a multi-agent mesh across ideation, production, distribution, and optimization—connected via shared telemetry and a unified policy library.

- Standardize evaluation: monthly model/agent reviews; quarterly budget re-baselining; annual refresh of your entity taxonomy and content pillars.

- Tie incentives to measurement: give teams shared goals for AI visibility share, conversion lift, and ROMI to reinforce the closed loop.

- Strengthen governance: maintain auditability, provenance marks for AI-generated assets, and well-defined decision rights.

If you’re ready to formalize AI answer visibility in your loop, consider adding Geneo to your stack to benchmark, monitor, and trend your presence across key answer engines as you scale agents.

—

Updated on 2025-10-01

Planned change-log practice: We’ll update this article monthly through Q1 2026. Example entries: “2025-11-05: Added PwC 2025 adoption stat; refined KPI definitions; noted new Braze agent capabilities.”