Writesonic Review 2025: Does It Really Boost AI Visibility & SEO?

Hands-on Writesonic review (2025): Assessing AI visibility, citation gap fixes, and SEO impact for SMBs, agencies, and in-house SEO teams.

If you’ve watched your organic traffic dip as AI answers siphon clicks, you’re not alone. SMBs, in-house SEO teams, and agencies are scrambling to measure their brand’s presence inside ChatGPT, Perplexity, Google’s AI Overviews, and other AI answer engines, and to win back visibility with content that earns citations.

Writesonic positions itself as a combined AI visibility tracker and content engine. We evaluated its toolkit and workflows through a skeptical SEO practitioner’s lens. Disclosure: We tested independently; no sponsorship or affiliate links are used in this review.

What Writesonic Actually Promises

In its current product messaging, Writesonic emphasizes three pillars:

AI visibility tracking across major platforms with share-of-voice, sentiment, and citation insights.

Actionable workflows to close citation and content gaps.

An integrated content engine, plus technical SEO assistance, for producing and refreshing pages that are more likely to be cited by AI systems and to rank in Google.

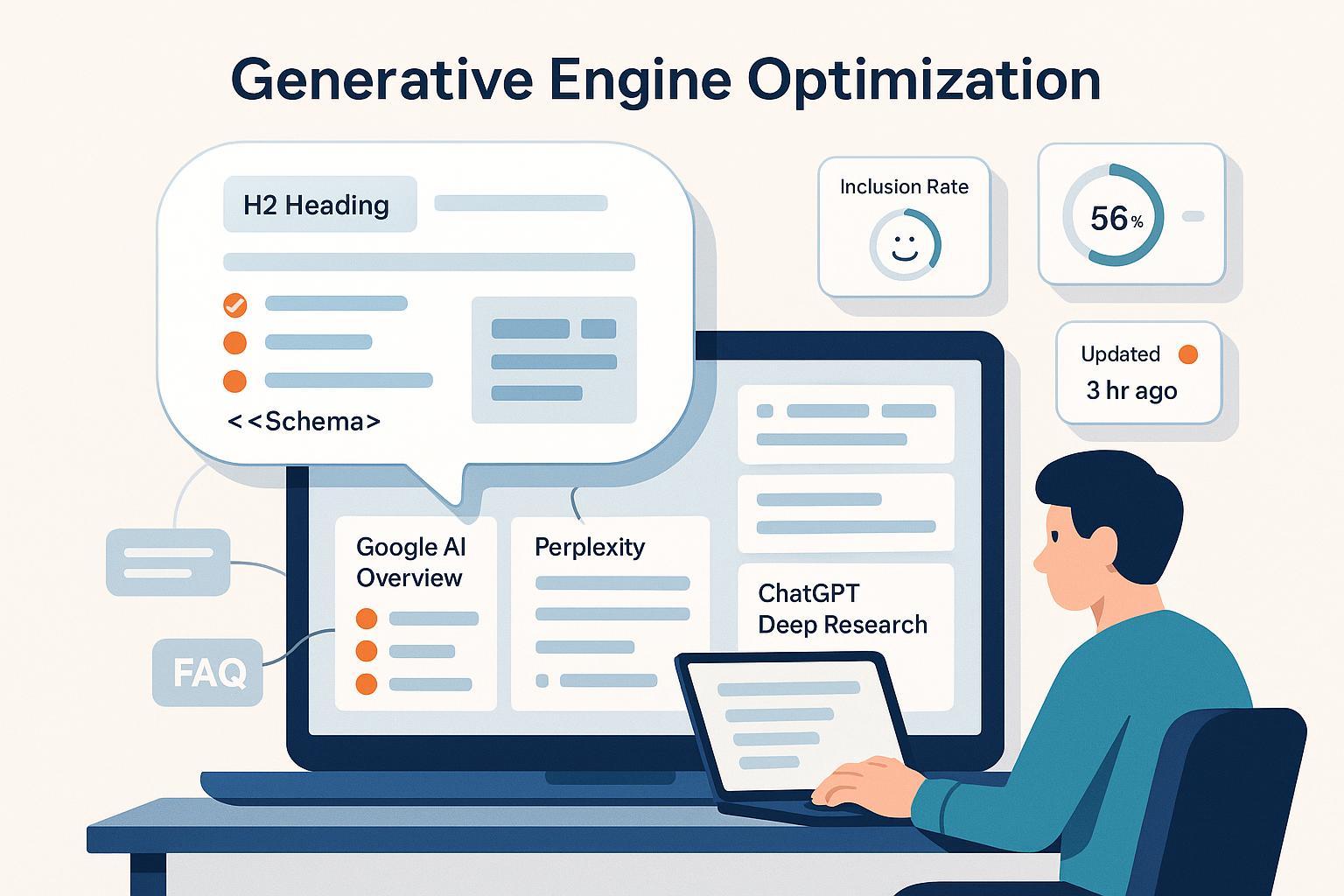

According to the GEO platform overview (Writesonic, 2025), the software tracks and benchmarks your brand’s presence across ChatGPT, Google AI Overviews, Claude, Perplexity, and other platforms, surfacing competitor comparisons and optimization suggestions. The AI Search Analytics explainer (Writesonic, 2025) describes visibility scoring, sentiment, and crawler analytics, including identifying visits from AI bots. For demand-side research, Writesonic offers prompt-level trend data through Prompt Explorer and an “AI search volume” metric (Writesonic, 2025).

If you’re new to Generative Engine Optimization (GEO), here’s a quick primer: GEO sits alongside traditional SEO and focuses on optimizing content so that AI-generated answers surface and cite it. For background, see the Generative Engine Optimization beginner guide and the complementary Answer Engine Optimization (AEO) overview.

Our Field-Test Methodology Template (Replicable)

We strongly recommend readers run a structured, dated test rather than relying on generalized claims. Here’s a reproducible plan you can adapt:

Define your priority topic and prompts

Choose 3–5 high-intent prompts users would ask in ChatGPT/Perplexity and 2–3 target keywords for Google. Document the exact phrasing.

Establish baselines

Record your brand’s AI visibility, citations, and sentiment per platform. Note which competitors AI answers cite. Capture a Google ranking baseline for your top target pages. For a detailed audit flow, see the guide on how to perform an AI visibility audit.
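If you record baselines by hand (or from an export), a small, consistent data structure keeps later comparisons honest. The sketch below is a minimal Python example built on our own assumptions: the class names, the fields, and the “citation rate” metric (the share of tracked prompts on a platform where your brand is cited) are hypothetical illustrations, not Writesonic’s data model or export format.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


# Hypothetical record types for a manual baseline; not a Writesonic API or export.
@dataclass
class PromptObservation:
    """One manual check of one prompt on one AI platform."""
    prompt: str                 # exact prompt wording, as documented when defining prompts
    platform: str               # e.g. "ChatGPT", "Perplexity"
    brand_cited: bool           # was your domain cited or mentioned in the answer?
    competitors_cited: List[str] = field(default_factory=list)
    sentiment: str = "neutral"  # your own label: "positive", "neutral", "negative"


@dataclass
class Baseline:
    taken_on: date
    observations: List[PromptObservation]

    def citation_rate(self, platform: str) -> float:
        """Share of tracked prompts on `platform` where the brand was cited."""
        rows = [o for o in self.observations if o.platform == platform]
        if not rows:
            return 0.0
        return sum(o.brand_cited for o in rows) / len(rows)

    def competitors_seen(self) -> List[str]:
        """Every competitor cited at least once, sorted for stable reports."""
        return sorted({c for o in self.observations for c in o.competitors_cited})


# Example values only, not measurements.
baseline = Baseline(
    taken_on=date(2025, 1, 6),
    observations=[
        PromptObservation("best crm for small business", "Perplexity", False, ["rival.com"]),
        PromptObservation("crm pricing comparison", "Perplexity", True),
    ],
)
print(baseline.citation_rate("Perplexity"))  # 0.5
```

Keeping the exact prompt text in each record makes it easy to re-run the same checks week after week.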

Plan your interventions in Writesonic

Use citation gap insights to prioritize outreach (to authoritative sources and UGC/Reddit threads), refresh existing content with the content engine, add/update schema, and tighten internal linking.

Execute content and technical fixes

Publish refreshed content and new supporting assets; implement schema and internal links; run outreach to close citation gaps.
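For the schema step, one common option is schema.org `FAQPage` markup in JSON-LD. The helper names below (`faq_jsonld`, `script_tag`) are hypothetical; the `@type` values follow schema.org’s FAQPage, Question, and Answer types. This is a minimal sketch. Neither this review nor the vendor material establishes that any particular schema type causes AI citations, so validate the output with a structured-data validator before publishing.

```python
import json


# Hypothetical helpers; the structure follows schema.org FAQPage / Question / Answer.
def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def script_tag(data):
    """Serialize to a JSON-LD <script> tag. Escaping "</" as "<\\/" (a valid JSON
    escape) keeps an answer containing "</script>" from closing the tag early."""
    body = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


print(script_tag(faq_jsonld([
    ("What is GEO?", "Optimizing content so AI answer engines can find and cite it."),
])))
```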

Track outcomes weekly for 4–8 weeks

Monitor AI visibility score trend lines, citation changes, and sentiment. Check Google rankings and impressions. Keep screenshots and change logs.
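To turn weekly readings into trend lines, two numbers are usually enough: the week-over-week change and an overall slope. This sketch assumes you already have one reading per week for a metric (for example, a visibility score or a citation rate you record yourself). The function names are hypothetical, and the slope is an ordinary least-squares fit against week index, computed by hand so it runs on any Python 3 version.

```python
# Hypothetical helpers for your own weekly readings; not a Writesonic feature.
def week_over_week(series):
    """Change between each pair of consecutive weekly readings (rounded for display)."""
    return [round(b - a, 4) for a, b in zip(series, series[1:])]


def trend_slope(series):
    """Ordinary least-squares slope of the readings against week index (units per week)."""
    n = len(series)
    if n < 2:
        raise ValueError("need at least two weekly readings")
    x_mean = (n - 1) / 2            # mean of week indices 0, 1, ..., n-1
    y_mean = sum(series) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(series))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


weekly_citation_rate = [0.20, 0.25, 0.25, 0.40]  # illustrative values, not results
print(week_over_week(weekly_citation_rate))  # [0.05, 0.0, 0.15]
print(trend_slope(weekly_citation_rate))
```

With only four to eight weekly points, treat the slope as a direction indicator rather than a statistically robust estimate.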

Verify and attribute

Attribute lifts to specific actions (e.g., a new citation, a schema fix). Document what didn’t move and plan iterations.
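Attribution is easier when every action in your change log is dated and paired with the metric movement that followed it. The sketch below is a hypothetical helper (not a Writesonic feature) that, for each logged action, reports the change from the last weekly reading on or before the action date to the reading a fixed number of weeks later. It shows co-occurrence only; overlapping actions and external factors still need human judgment.

```python
from datetime import date, timedelta


# Hypothetical attribution helpers; they report co-occurrence, not causation.
def change_after(readings, action_day, window_weeks=2):
    """Metric change from the last weekly reading on or before `action_day`
    to the reading `window_weeks` later. `readings` maps week-start date -> value.
    Returns None when either reading is missing."""
    before = [d for d in readings if d <= action_day]
    if not before:
        return None
    start = max(before)
    end = start + timedelta(weeks=window_weeks)
    if end not in readings:
        return None
    return readings[end] - readings[start]


def attribution_report(readings, change_log, window_weeks=2):
    """Pair each (date, action) in the change log with the movement that followed it."""
    return [
        (day.isoformat(), action, change_after(readings, day, window_weeks))
        for day, action in sorted(change_log)
    ]


readings = {  # illustrative weekly values, not measurements
    date(2025, 1, 6): 0.20,
    date(2025, 1, 13): 0.20,
    date(2025, 1, 20): 0.30,
    date(2025, 1, 27): 0.35,
}
change_log = [
    (date(2025, 1, 8), "Added FAQ schema to cornerstone article"),
    (date(2025, 1, 24), "Earned citation on industry roundup"),
]
for row in attribution_report(readings, change_log):
    print(row)
```

A `None` in the report means the follow-up reading does not exist yet, which is itself a reminder not to claim a lift early.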

Important: Avoid attributing improvements solely to content generation. In most cases, results hinge on a mix of editorial quality, authority signals, technical hygiene, and external mentions.

Feature Deep Dive: How the Toolkit Maps to Real Workflows

AI Visibility Score, Citations, and Sentiment

Writesonic’s visibility tracking aims to answer: “Where is my brand present or missing across AI answers, and why?” The AI Search Analytics overview (Writesonic, 2025) outlines:

Share-of-voice and sentiment across platforms.

Where competitors are being cited and you’re absent (citation gaps).

Indicators tied to AI crawler behavior.

Practical impact: Use this to prioritize pages and outreach targets. For example, if Perplexity consistently cites a competitor’s explainer, draft a better resource with transparent sources, then reach out to editors or participate credibly in the UGC threads those answers draw citations from.
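Citation gaps and share of voice are simple to compute once you have recorded which domains each AI answer cites. The sketch below uses one common definition of share of voice (each brand’s share of all citations among the brands you track) and flags prompts where a competitor is cited and you are not, ranked by how many competitors appear. Writesonic’s own scoring may be defined differently; the input format and function names here are assumptions.

```python
from collections import Counter


# Hypothetical helpers over your own recorded answers; not Writesonic's scoring.
def share_of_voice(answers_by_prompt, brands):
    """Each tracked brand's share of all citations that go to tracked brands.
    `answers_by_prompt` maps prompt -> set of domains cited in that AI answer."""
    counts = Counter(d for cited in answers_by_prompt.values() for d in cited if d in brands)
    total = sum(counts.values())
    return {b: (counts[b] / total if total else 0.0) for b in sorted(brands)}


def citation_gaps(answers_by_prompt, you, competitors):
    """Prompts where a competitor is cited and you are not, most-contested first."""
    gaps = []
    for prompt, cited in answers_by_prompt.items():
        rivals = cited & competitors
        if rivals and you not in cited:
            gaps.append((prompt, sorted(rivals)))
    # sorted() is stable, so ties keep the order in which prompts were recorded
    return sorted(gaps, key=lambda gap: len(gap[1]), reverse=True)


answers = {  # illustrative observations from one platform
    "best crm for small business": {"rival.com", "other.com"},
    "crm pricing comparison": {"you.com", "rival.com"},
    "crm for agencies": {"rival.com"},
}
print(share_of_voice(answers, {"you.com", "rival.com", "other.com"}))
print(citation_gaps(answers, "you.com", {"rival.com", "other.com"}))
```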

Prompt Explorer and “AI Search Volume”

The Prompt Explorer feature (Writesonic, 2025) is useful for discovering prompt-level demand and regional trends. Treat it as early-stage intent research: cluster prompts into themes, then map those themes to content and FAQs that mirror how users phrase their questions, not just keyword-centric phrasing.
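Clustering can start simple. As a stand-in for embedding-based clustering, the sketch below groups prompts by word overlap (Jaccard similarity on lowercased tokens, minus a small stopword list) in one greedy pass. The stopword list, the 0.3 threshold, and the assumption that you have copied or exported prompts as plain strings are all illustrative choices, not Prompt Explorer behavior.

```python
import re

# Small illustrative stopword list (hypothetical); extend it for your own prompts.
STOPWORDS = {"a", "an", "the", "for", "to", "of", "in", "is", "what", "how", "best", "do", "i", "my"}


def tokens(prompt):
    """Lowercased word set with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", prompt.lower()) if w not in STOPWORDS}


def jaccard(a, b):
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_prompts(prompts, threshold=0.3):
    """Greedy single pass: a prompt joins the first cluster whose seed prompt is
    similar enough, otherwise it seeds a new cluster."""
    clusters = []  # list of (seed_tokens, member_prompts)
    for prompt in prompts:
        toks = tokens(prompt)
        for seed, members in clusters:
            if jaccard(toks, seed) >= threshold:
                members.append(prompt)
                break
        else:
            clusters.append((toks, [prompt]))
    return [members for _, members in clusters]


prompts = [
    "best crm for small business",
    "which crm is good for a small business",
    "how to migrate crm data",
    "crm data migration checklist",
]
for group in cluster_prompts(prompts):
    print(group)
```

Word overlap misses synonyms (“migrate” vs. “migration” only match here because “crm” and “data” are shared), so review the groups by hand before mapping them to content.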

Content Engine and Technical SEO Assists

Writesonic’s content tools aim to help you produce or refresh pages with better topical coverage and EEAT signals. The company’s materials describe an SEO checker/optimizer and agent-driven helpers. See the SEO Checker/Optimizer walkthrough (Writesonic, 2025) for the types of on-page recommendations the tool provides.

Practical impact: Use the optimizer to catch gaps in headings, entities, and internal links. Keep human editorial control for factual accuracy, tone, and claims that require citations.
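Some structural gaps are easy to check independently of any tool. As an illustration of the kind of heading checks an optimizer might run (not Writesonic’s actual rules), the sketch below uses Python’s standard-library `html.parser` to flag pages with zero or multiple `<h1>` elements and heading levels that skip a step (for example, `h2` straight to `h4`). The class and function names are hypothetical.

```python
from html.parser import HTMLParser


# Hypothetical heading check; not Writesonic's optimizer logic.
class HeadingCollector(HTMLParser):
    """Records heading levels (h1-h6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes tag names lowercased; "hr" is excluded by the digit check.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html):
    """Return human-readable heading-structure problems for one page."""
    parser = HeadingCollector()
    parser.feed(html)
    parser.close()
    issues = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        issues.append(f"expected exactly one <h1>, found {h1_count}")
    for prev, cur in zip(parser.levels, parser.levels[1:]):
        if cur > prev + 1:
            issues.append(f"heading level jumps from h{prev} to h{cur}")
    return issues


print(heading_issues("<h1>Guide</h1><h2>Setup</h2><h4>Details</h4><hr>"))
# ['heading level jumps from h2 to h4']
```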

Action Center and UGC/Reddit Targeting

Writesonic describes an action-oriented workflow for fixing technical issues, earning external mentions, and improving content quality. The product literature also mentions targeting Reddit and other UGC platforms within optimization tasks. These can be effective channels for earning credible mentions, provided your contributions are genuinely helpful and non-promotional.

Moment of Conviction vs. Doubt

What convinced us:

Citation-gap views that line up your absences against competitor mentions make prioritization much clearer, especially for agencies managing multiple clients.

Prompt-level insights help align content with how users actually ask questions in AI tools.

Having optimization tasks in one place reduces context switching for in-house teams.

What still raises doubts:

Breadth vs. depth: With “many tools” under one roof, some modules may feel shallow on complex sites, especially for technical SEO edge cases.

Editorial reality: Long-form or niche content still needs human editing and source verification to meet EEAT expectations.

Evidence transparency: Writesonic’s marketing pages say little about how the “AI Visibility Score” is calculated, so we recommend validating any lifts with dated, reproducible tests.

A Practical Before/After Playbook (Use This As Your Template)

Below is a framework you can follow; fill in your own numbers as you test.

Baseline (Week 0): Document AI visibility, citations, and sentiment per platform; record Google rankings and impressions for 2–3 target queries; list competitors frequently cited by AI.

Interventions (Weeks 1–2): Refresh one cornerstone article; add schema and internal links; produce a data-backed companion piece; conduct targeted outreach to authoritative sources and helpful UGC threads.

Tracking (Weeks 3–6): Monitor visibility trends, citation changes, and sentiment shifts; capture ranking movement and impressions; annotate changes.

Verification (Week 6+): Attribute gains to specific actions; plan follow-up iterations (e.g., expand FAQs, add expert quotes, secure additional citations).

Note: Do not publish headline numbers unless you have the screenshots and change logs to back them up.
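One way to enforce that rule is a pre-publication check that every reported lift points to a screenshot and to a change-log entry that actually exists. The `ReportedLift` record and `unsupported` function below are hypothetical names for a minimal sketch; store the evidence wherever your team already keeps it.

```python
from dataclasses import dataclass
from typing import List, Set


# Hypothetical evidence record for a reported lift; not a Writesonic object.
@dataclass
class ReportedLift:
    metric: str                 # e.g. "Perplexity citation rate"
    change: float               # reported movement, in the metric's own units
    screenshots: List[str]      # paths or URLs of dated screenshots
    change_log_ids: List[str]   # change-log entries the lift is attributed to


def unsupported(lifts: List[ReportedLift], known_log_ids: Set[str]) -> List[ReportedLift]:
    """Lifts with no screenshot, no linked change, or a link to a change that isn't logged."""
    flagged = []
    for lift in lifts:
        if not lift.screenshots or not lift.change_log_ids:
            flagged.append(lift)
        elif any(cid not in known_log_ids for cid in lift.change_log_ids):
            flagged.append(lift)
    return flagged


log_ids = {"C1", "C2"}  # illustrative change-log IDs
lifts = [
    ReportedLift("Perplexity citation rate", 0.10, ["2025-02-10-perplexity.png"], ["C1"]),
    ReportedLift("AI visibility score", 5.0, [], ["C2"]),
    ReportedLift("GSC impressions", 120.0, ["gsc-week6.png"], ["C9"]),
]
for lift in unsupported(lifts, log_ids):
    print(lift.metric)
```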

Workflow Comparisons (Scenario-Based)

Writesonic vs. Jasper/Copy.ai for Writing

Model flexibility and factuality: Writesonic and Jasper both offer flexible prompting; factuality depends on grounding outputs in verifiable sources and on editorial oversight. Copy.ai is fast for ideation but often needs heavier editing for technical posts.

Editing load: For niche or data-heavy topics, all three require human review; Writesonic’s optimizer can catch structural gaps, but it won’t replace expert fact-checking.

Where Writesonic adds value: AI visibility and citation-gap workflows, plus prompt-level demand research, bundled with content tools.

On-Page Optimization: Writesonic vs. Surfer/Clearscope

Topic coverage: Surfer and Clearscope are mature for semantic coverage and competitor benchmarking; Writesonic’s optimizer is helpful but may be less granular for complex sites.

Internal linking and EEAT: Writesonic’s workflows encourage internal links and source-backed content, while Surfer and Clearscope offer deeper content scoring. Many teams use them in combination.

Research/SEO Stack Fit: Writesonic with Ahrefs/SEMrush

Complementary roles: Ahrefs/SEMrush still dominate link/index coverage, technical audits, and traditional keyword research. Writesonic contributes AI-specific insights: visibility across generative platforms, citation gaps, and prompt demand.

Reporting: For agencies, combine Writesonic’s AI visibility trend lines with GSC and Ahrefs metrics for client-ready narratives.
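Combining sources mostly comes down to aligning dates. The sketch below assumes a daily Google Search Console performance export saved as CSV with `Date` (YYYY-MM-DD) and `Impressions` columns, plus AI visibility readings you have already keyed by ISO (year, week). The function names and that keying are assumptions, so adjust the column names to match your actual exports.

```python
import csv
import io
from collections import defaultdict
from datetime import date


# Hypothetical report helpers; column names are assumptions about your exports.
def weekly_impressions(gsc_csv_text):
    """Sum daily impressions into ISO (year, week) buckets."""
    totals = defaultdict(int)
    for row in csv.DictReader(io.StringIO(gsc_csv_text)):
        year, week, _ = date.fromisoformat(row["Date"]).isocalendar()
        totals[(year, week)] += int(row["Impressions"])
    return dict(totals)


def merge_report(visibility_by_week, impressions_by_week):
    """One row per ISO week present in both sources, oldest first."""
    weeks = sorted(visibility_by_week.keys() & impressions_by_week.keys())
    return [
        {
            "week": f"{y}-W{w:02d}",
            "ai_visibility": visibility_by_week[(y, w)],
            "gsc_impressions": impressions_by_week[(y, w)],
        }
        for y, w in weeks
    ]


gsc_csv = "Date,Clicks,Impressions\n2025-01-06,3,100\n2025-01-07,2,50\n2025-01-13,4,120\n"
visibility = {(2025, 2): 0.20, (2025, 3): 0.25, (2025, 4): 0.30}  # illustrative only
for row in merge_report(visibility, weekly_impressions(gsc_csv)):
    print(row)
```

Weeks that appear in only one source are dropped rather than zero-filled, so gaps in either export stay visible instead of reading as real declines.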

Pricing and Plan Fit

Writesonic’s pricing changes over time, and what’s included differs by tier. Rather than list details that may go out of date, we suggest checking the live pricing page (Writesonic, 2025). SMBs should focus on whether a plan includes enough audits, content credits, and reporting. Agencies and in-house teams will care more about multi-seat controls, project/workspace management, and export options.

Value lens:

SMBs: Use prompt research and citation-gap workflows to move the needle on one or two core topics before expanding.

In-house teams: Prioritize modules that integrate with your CMS and analytics stack; evaluate whether task assignments and statuses fit your governance model.

Agencies: Look for multi-client dashboards, repeatable playbooks, and clean exports for client reports.

Security, Privacy, Compliance

Public documentation for formal certifications (e.g., SOC 2 Type II, HIPAA) was not readily found on Writesonic’s main site during our review. Do not assume compliance without vendor confirmation. If your industry is regulated, request official documentation and security details directly from Writesonic before purchase.

Alternatives and Toolbox

Geneo: A Generative/Answer Engine Optimization platform focused on multi-platform AI monitoring, sentiment analysis, and an actionable content roadmap. It’s a fit when teams want prioritized GEO/AEO guidance and visibility tracking across ChatGPT, Perplexity, and Google’s AI experiences without adopting a full writing suite.

Profound: Concentrates on AI visibility measurement and citation tracking across leading platforms; useful for teams that want dedicated monitoring and briefs.

BrightEdge: Enterprise SEO platform with AI features and broad site audit capabilities; typically chosen by larger organizations for integrated SEO automation and reporting.

Keep evaluation criteria equal: coverage of AI platforms, actionability of playbooks, content quality controls, technical SEO assistance, integrations, pricing tiers, and transparent security disclosures.
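A weighted scoring sheet helps keep those criteria equal across vendors. The criterion keys mirror the list above, but the weights are placeholders rather than recommendations, and the 1–5 ratings are yours to assign after hands-on trials. The hypothetical `weighted_score` function refuses to score a tool that is missing any criterion, so every candidate is judged on the same list.

```python
# Placeholder weights (hypothetical): set them to reflect your own priorities.
# They need not sum to 1; the score is normalized by the total weight.
CRITERIA_WEIGHTS = {
    "ai_platform_coverage": 0.20,
    "playbook_actionability": 0.20,
    "content_quality_controls": 0.15,
    "technical_seo_assistance": 0.15,
    "integrations": 0.10,
    "pricing_fit": 0.10,
    "security_disclosures": 0.10,
}


def weighted_score(ratings, weights=CRITERIA_WEIGHTS):
    """Weighted average of your 1-5 ratings. Raises if any criterion is unrated,
    so every tool is judged on the same list."""
    missing = weights.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[c] * ratings[c] for c in weights) / total_weight


# Hypothetical ratings for an unnamed candidate, for illustration only.
candidate = {c: 3 for c in CRITERIA_WEIGHTS}
candidate["ai_platform_coverage"] = 5
print(round(weighted_score(candidate), 2))  # 3.4
```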

Who Should Buy Writesonic (and Who Should Wait)

Buy if:

You need AI visibility tracking and practical workflows to close citation gaps, and you value having content optimization in the same environment.

Your team has the capacity to edit and verify long-form outputs and to follow through on outreach.

Wait or pilot first if:

You require rigorous, publicly documented calculation methods and APIs before committing.

Your site relies on complex technical SEO where specialized audits and granular controls are non-negotiable.

Governance tips:

Set editorial standards for sourcing and claims; build a citation checklist.

Track changes with dated logs; require screenshot evidence for reported lifts.

Combine AI visibility metrics with GSC/Ahrefs data to avoid over-attribution.

Verdict (2025)

Writesonic is evolving into a credible GEO/AEO companion for teams that want to monitor AI presence and act on citation gaps without leaving their writing environment. Its strengths are in prioritization and prompt-aligned workflows; its limits are the usual suspects in AI writing (factuality and editorial polish) and the need for clearer public methodology around visibility scoring.

If you run a measured pilot (baseline, interventions, tracking, and verification), Writesonic can earn a seat alongside your existing SEO stack. Just keep your process disciplined: document everything, edit rigorously, and validate improvements with hard evidence.