Short vs Long-Form Content in Generative Search (2025)

Short-form vs long-form content for generative search in 2025: platform evidence, citation trends, and playbooks for Google AI Overviews, Perplexity, and ChatGPT. A comparison and decision guide.

Generative search has reopened the “how long should my content be?” debate—but the real question for 2025 is different: which formats actually get pulled into AI answers, cited as sources, and still drive clicks?

This comparison takes a platform-first view (Google AI Overviews, Perplexity, ChatGPT/Copilot) and a task-first view (appear in AI boxes vs earn qualified traffic). The short version: structure and clarity increasingly matter more than sheer word count. The practical play in 2025 is a hybrid: fast, structured short answers plus deep, authoritative hubs.

How each platform assembles answers (and why length matters differently)


Google AI Overviews (AO)

- In a March 2025 panel study of 900 U.S. adults, the Pew Research Center found that users who saw an AI Overview clicked a traditional search result in 8% of visits, versus 15% when no AO appeared; only about 1% of visits included a click on a link inside the AO. Pew also noted that summaries were “typically 67 words,” with 88% including more than three sources (published July 2025). See the Pew Research short read (2025) and corroborating Search Engine Land coverage (2025).

- Measurements vary by methodology and month. An 8,000-keyword study by Advanced Web Ranking (AWR) reported an average AO length of 169 words in 2024. SE Ranking tracked AO character counts fluctuating widely in 2024, peaking at about 6,142 characters before settling at roughly 5,337 by November 2024; see SE Ranking’s 2024 recap and its sources and length analyses. Semrush also shows prevalence shifting by vertical in 2024–2025; see its AI Overviews hub.


Perplexity

- Perplexity consistently displays linked citations and uses real-time retrieval. Multiple explainers describe this behavior, including the University of Florida Business Library FAQ (accessed 2025) and third-party feature guides such as TechPoint Africa’s (2024–2025). No primary, quantitative study was found that establishes a preferred source word count, so optimize for clarity, structure, and citable claims rather than chasing a number.


ChatGPT/GPT-4o and Microsoft Copilot (browsing/web grounding)

- OpenAI’s model page lists GPT-4o with a 128,000-token context window and up to 16,384 output tokens, enough to ingest long sources in full; well-structured content still makes accurate summarization and extraction easier. See the OpenAI GPT-4o documentation (2025).

- Microsoft documents that Copilot Chat shows a “linked citation” section with the actual web queries it ran and the sources it used (visible in Copilot Chat and retained for 24 hours in the thread). See Microsoft Docs: manage public web access (2025) and Copilot privacy and protections.
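To put the 128,000-token figure in word terms, the sketch below uses the common rule of thumb that one English token is roughly 0.75 words (about four characters). That ratio is an approximation, not OpenAI’s tokenizer, so treat the results as ballpark numbers; the constant and function names are hypothetical.

```python
# Rough token-budget check for long-form pages.
# Assumption: ~0.75 English words per token (a rule of thumb, not a real tokenizer).
GPT4O_CONTEXT_TOKENS = 128_000  # context window listed on OpenAI's GPT-4o model page
GPT4O_MAX_OUTPUT = 16_384       # max output tokens listed on the same page
WORDS_PER_TOKEN = 0.75          # heuristic; the real ratio varies by text


def estimate_tokens(word_count: int) -> int:
    """Estimate a token count from a word count using the heuristic ratio."""
    return round(word_count / WORDS_PER_TOKEN)


def fits_in_context(word_count: int, reserved_tokens: int = GPT4O_MAX_OUTPUT) -> bool:
    """True if the page fits while leaving `reserved_tokens` free for the model's answer."""
    return estimate_tokens(word_count) + reserved_tokens <= GPT4O_CONTEXT_TOKENS


if __name__ == "__main__":
    for words in (800, 2_500, 90_000):
        print(words, estimate_tokens(words), fits_in_context(words))
```

Reserving the full 16,384 output tokens is a conservative choice rather than a requirement. Even so, a 2,500-word hub comes to only a few thousand estimated tokens, so length alone is rarely the constraint; structure matters more.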

Why this matters: Google’s AO rewards succinct, extractable claims (especially for narrow intents), Perplexity rewards clarity plus authority with transparent citations, and ChatGPT/Copilot can process long documents but still extract best from cleanly sectioned content.

What actually gets surfaced: evidence highlights


Google AI Overviews

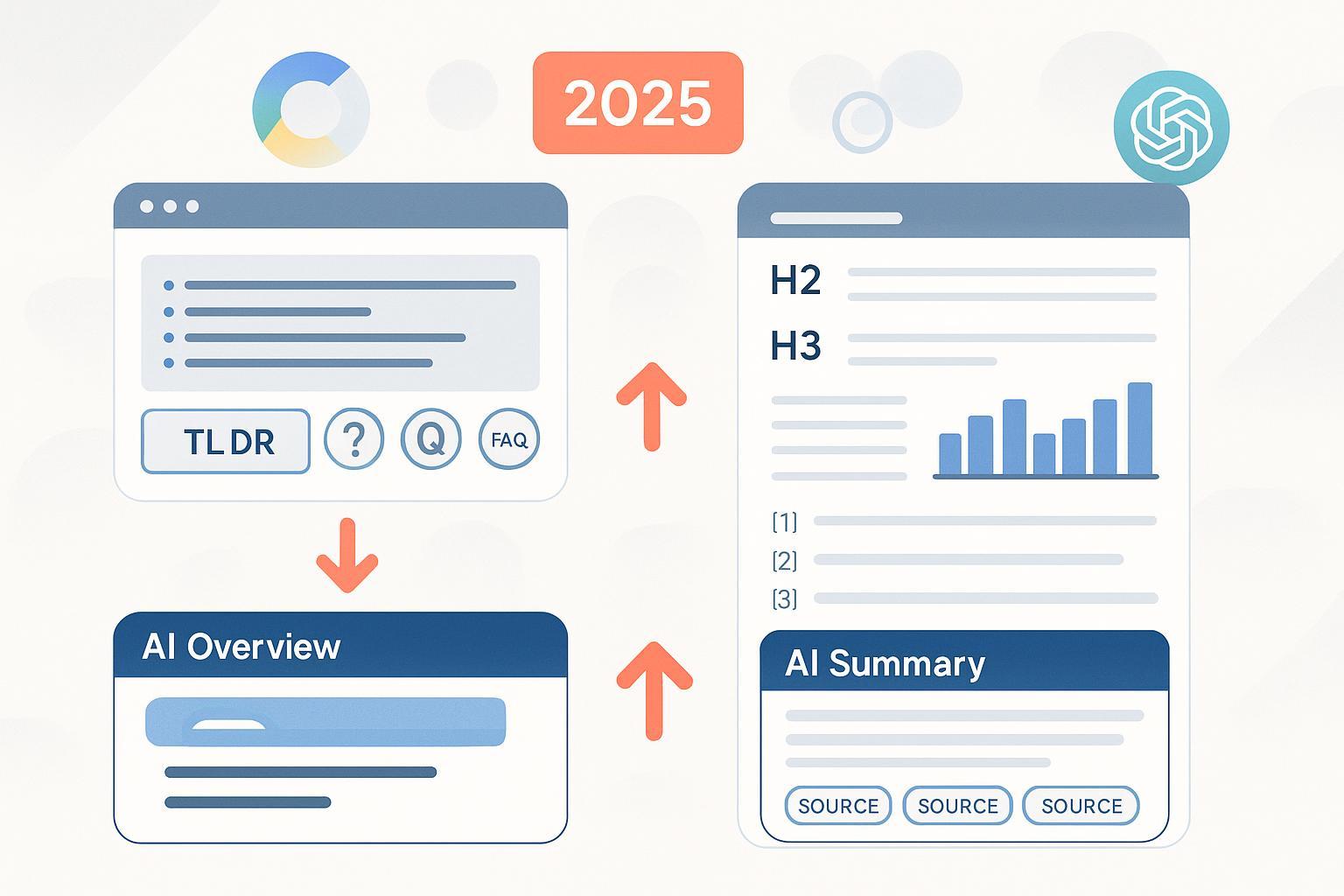

- Typical visible AO snippets were ~67 words in Pew’s March 2025 observation window, but other datasets measured much longer total AO text in 2024 (e.g., AWR’s 169-word average; SE Ranking’s thousands of characters). Plan for both: a crisp TL;DR and deeper, well-structured sections that can feed expanded summaries.

- When AOs appear, clicks on traditional organic results drop materially: in Pew’s dataset, they fell from 15% to 8% of visits. See Pew Research (2025).
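Pew’s 15% and 8% figures are absolute shares of visits, so the seven-point gap understates the relative effect. Treating the per-visit click rate as a CTR (a simplification, since Pew counted clicks per visit rather than per impression):

```latex
\Delta_{\mathrm{rel}}
  = \frac{\mathrm{CTR}_{\mathrm{AO}} - \mathrm{CTR}_{\mathrm{no\,AO}}}{\mathrm{CTR}_{\mathrm{no\,AO}}}
  = \frac{0.08 - 0.15}{0.15}
  \approx -0.467
```

In other words, visits with an AI Overview produced clicks on traditional results at a little over half the rate of visits without one, a relative drop of about 47%.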


Perplexity

- Consistent citation behavior and avoidance of low-trust sources are widely observed in explainers and tutorials, but there’s no solid numeric “ideal word count.” Focus on scannable sections, explicit claims, and reputable sourcing. See UF Business Library FAQ and TrilogyAI’s deep-research comparison (2025).


ChatGPT/Copilot

- Large context windows mean long-form content is usable, but clearly headed chunks and summaries make extraction more reliable. See the OpenAI GPT-4o docs (context and output limits) and Microsoft Copilot docs (web grounding and citations).
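The chunking idea can be sketched as a function that splits a Markdown-style page at its H2/H3 headings and keeps each heading attached to its body, so every chunk still makes sense when a retrieval system lifts it out of context. The `##`/`###` heading syntax, the "Summary" label for intro text, and the function names are assumptions for illustration. This is not how any of these platforms actually chunks pages.

```python
import re
from dataclasses import dataclass

# Matches Markdown H2/H3 headings such as "## Pricing" or "### Setup" (assumed page format).
HEADING_RE = re.compile(r"^#{2,3}\s+(.*\S)\s*$")


@dataclass
class Chunk:
    heading: str
    body: str

    def word_count(self) -> int:
        return len(self.body.split())


def split_by_headings(page: str, intro_heading: str = "Summary") -> list[Chunk]:
    """Split a page into heading-labeled chunks at H2/H3 boundaries.

    Text before the first heading (where a TL;DR usually sits) is kept under
    `intro_heading`, a hypothetical default label. Empty sections are dropped.
    """
    chunks: list[Chunk] = []
    heading, lines = intro_heading, []

    def flush() -> None:
        # Reads the current `heading` and `lines` from the enclosing scope.
        body = "\n".join(lines).strip()
        if body:
            chunks.append(Chunk(heading, body))

    for line in page.splitlines():
        match = HEADING_RE.match(line)
        if match:
            flush()
            heading, lines = match.group(1), []
        else:
            lines.append(line)
    flush()
    return chunks


if __name__ == "__main__":
    page = """TL;DR: Short answer cards for narrow questions, long hubs for depth.

## Short-form
Best for FAQs and checklists.

## Long-form
Best for complex or YMYL topics.
"""
    for c in split_by_headings(page):
        print(f"[{c.heading}] ({c.word_count()} words) {c.body}")
```

Keeping the heading with each chunk is the design choice that matters: a chunk that reads "Best for FAQs and checklists." only makes sense if "Short-form" travels with it.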

Short vs long-form: strengths, risks, and best-fit scenarios

| Dimension | Short-form (≤800 words) | Long-form (≥1,800–2,500+ words) |
|---|---|---|
| Inclusion in AI answers | High for narrow, question-level intents (FAQs, checklists). Crisp TL;DRs, bullets, and schema help extraction. | Strong for complex/YMYL topics when sectioned with summaries and citations; serves as a canonical source to be cited. |
| Likely CTR outcome | Often lower incremental CTR (answer satisfied in-box), but ensures brand presence in AI modules. | Better for capturing qualified clicks when users need depth, comparisons, pricing, or proof. |
| E-E-A-T considerations | Risk of looking “thin” if not well-cited and authored; add bios, dates, references. | Opportunity to demonstrate expertise and first-party evidence; must avoid fluff and keep current. |
| Maintainability | Fast to produce and update; ideal for fast-changing facts. | Requires governance and modular updates; add TL;DR and dated sections to manage decay. |
| Best platforms/intents | Google AO for narrow intents; Perplexity for fact cards; Copilot quick references. | Perplexity Deep Research synthesis; ChatGPT browsing of comprehensive guides; Google AO expanded sections and YMYL. |
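When auditing an existing content library against this table, a simple first pass is to bucket pages by word count. The thresholds below mirror the table (≤800 words short, ≥1,800 long) and the FAQ’s warning that pages under roughly 200–300 words risk looking thin. The "mid-length" label and the function name are hypothetical, and word count alone says nothing about structure or quality.

```python
def length_bucket(word_count: int) -> str:
    """Bucket a page by word count using this article's rough thresholds."""
    if word_count < 0:
        raise ValueError("word_count must be non-negative")
    if word_count < 300:
        return "thin-risk"    # fine only for definitions or snippets inside a hub
    if word_count <= 800:
        return "short-form"
    if word_count < 1_800:
        return "mid-length"   # hypothetical label: the table leaves this range undefined
    return "long-form"


if __name__ == "__main__":
    for wc in (150, 650, 1_200, 2_400):
        print(wc, length_bucket(wc))
```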

Playbooks you can ship this quarter

- For quick, narrow queries (brand presence inside AI boxes)
  - Create single-intent “answer cards” ≤800 words:
    - Title mirrors the question, with a 1–2 sentence TL;DR up top.
    - Bulleted steps, definitions, or pros/cons; include a succinct conclusion.
    - Add FAQPage or HowTo structured data where applicable (per Google Search Central guidance).
    - Show last updated date, author credentials, and 2–4 authoritative references.

- For complex or YMYL topics (credible citations and durable authority)
  - Ship comprehensive hubs ≥1,800–2,500 words:
    - Start with an executive summary and “Key takeaways” list.
    - Use clear H2/H3s, jump links, tables, and diagrams; cite primary sources.
    - Include expert review, disclaimers, and methods sections for YMYL per the Google Search Quality Rater Guidelines (Jan 2025).
    - Modularize updates (versioned stats blocks) and time-stamp changes.

- For product/transactional journeys (MOFU/BOFU)
  - Keep long-form comparison and buying guides with feature matrices, implementation notes, and ROI examples; generative boxes appear less often, but depth converts.
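For the answer-card playbook, FAQPage markup is usually emitted as JSON-LD inside a script tag. The sketch builds it from question/answer pairs using the schema.org `FAQPage`, `Question`, and `Answer` types; the helper names and sample content are hypothetical. Validate the output (for example with Google’s Rich Results Test) and check it against Google’s structured data policies before shipping.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD document from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


def as_script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in a script tag, escaping "</" so answer text cannot close the tag early."""
    safe = jsonld.replace("</", "<\\/")  # "\/" is a valid JSON escape for "/"
    return f'<script type="application/ld+json">\n{safe}\n</script>'


if __name__ == "__main__":
    print(as_script_tag(faq_jsonld([
        ("How short is too short?",
         "Under roughly 200-300 words risks looking thin unless it is a definition."),
    ])))
```

Generating the markup from the same question/answer source that renders the visible page helps keep the two in sync, which Google’s policies expect.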

Architecture for 2025: TL;DR + Depth (hub-and-spoke)

- Design dual layers:
  - Layer A: Short answer pages (“spokes”) mapped one-to-one to specific questions; interlink to the canonical hub.
  - Layer B: Long-form canonical hub with executive summary, FAQs, and citations; link out to each spoke and back.

- Governance checklist
  - Freshness: quarterly review cadence for hubs; monthly for spokes in fast-moving areas.
  - E-E-A-T: add author bios, references, and review notes; mark last updated.
  - Technical: FAQPage/HowTo schema where relevant; clean headings; descriptive alt text; accessible tables.
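The two-way linking and the freshness cadence are easy to check automatically. The sketch assumes you already have each page’s type (hub or spoke), its outgoing internal links, and its last-updated date, for example from a crawl export. The data shape, the URLs, and the reading of "quarterly" and "monthly" as 90 and 30 days are all assumptions, and every spoke is treated as fast-moving.

```python
from dataclasses import dataclass, field
from datetime import date

# Assumed reading of the governance checklist: quarterly ~ 90 days, monthly ~ 30 days.
MAX_AGE_DAYS = {"hub": 90, "spoke": 30}


@dataclass
class Page:
    url: str
    kind: str                                      # "hub" or "spoke"
    last_updated: date
    links: set[str] = field(default_factory=set)  # outgoing internal links


def audit(hub: Page, spokes: list[Page], today: date) -> list[str]:
    """Return readable issues: missing hub<->spoke links and pages past their review window."""
    issues = []
    for spoke in spokes:
        if spoke.url not in hub.links:
            issues.append(f"hub does not link to spoke {spoke.url}")
        if hub.url not in spoke.links:
            issues.append(f"spoke {spoke.url} does not link back to hub")
    for page in [hub, *spokes]:
        age = (today - page.last_updated).days
        limit = MAX_AGE_DAYS[page.kind]
        if age > limit:
            issues.append(f"{page.url} is {age} days old (limit {limit})")
    return issues


if __name__ == "__main__":
    hub = Page("/guide", "hub", date(2025, 1, 10), {"/guide/faq-length"})
    spokes = [
        Page("/guide/faq-length", "spoke", date(2025, 3, 20), {"/guide"}),
        Page("/guide/schema", "spoke", date(2025, 2, 1), set()),
    ]
    for issue in audit(hub, spokes, today=date(2025, 4, 1)):
        print(issue)
```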

KPIs to track (and how to benchmark)

- Inclusion rate in AI answers by platform (percent of tracked queries where your domain is cited or linked).

- Citation share within an AI module (how often your domain appears among sources).

- CTR from AO-triggering SERPs vs non-AO SERPs, using cohort tracking (expect lower CTR on AO SERPs per Pew 2025).

- Update velocity (median days to update short vs long pages) and content decay rate.

- E-E-A-T audit pass rate (presence of bios, references, dates, schema).
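Most of these KPIs reduce to simple ratios over a query-level log. The sketch assumes a hypothetical record per tracked query and platform: whether an AI module appeared, which domains it cited, and your organic impressions and clicks. How you collect that data (manual checks, a rank tracker, or a monitoring tool) is out of scope, and the field names, platform labels, and sample values are illustrative.

```python
from dataclasses import dataclass, field
from statistics import median


@dataclass
class QueryObservation:
    """One tracked query on one platform (hypothetical record shape)."""
    query: str
    platform: str                 # e.g. "google", "perplexity", "chatgpt" (illustrative labels)
    ai_module_shown: bool         # did an AI answer or overview appear for this query?
    cited_domains: list[str] = field(default_factory=list)  # domains cited in that AI answer
    impressions: int = 0          # organic impressions, if your data source provides them
    clicks: int = 0               # organic clicks, if your data source provides them


def inclusion_rate(obs: list[QueryObservation], domain: str, platform: str) -> float:
    """Share of tracked queries on `platform` where `domain` is cited or linked."""
    rows = [o for o in obs if o.platform == platform]
    return sum(domain in o.cited_domains for o in rows) / len(rows) if rows else 0.0


def citation_share(obs: list[QueryObservation], domain: str, platform: str) -> float:
    """`domain`'s citations as a share of all citations in AI answers on `platform`."""
    cited = [d for o in obs if o.platform == platform and o.ai_module_shown for d in o.cited_domains]
    return cited.count(domain) / len(cited) if cited else 0.0


def ctr_by_cohort(obs: list[QueryObservation], platform: str) -> dict[str, float]:
    """Aggregate CTR (clicks / impressions) for queries with vs. without an AI module."""
    result: dict[str, float] = {}
    for label, shown in (("ai_module", True), ("no_ai_module", False)):
        rows = [o for o in obs if o.platform == platform and o.ai_module_shown == shown]
        impressions = sum(o.impressions for o in rows)
        result[label] = sum(o.clicks for o in rows) / impressions if impressions else 0.0
    return result


def median_update_days(days_between_updates: list[int]) -> float:
    """Median days between updates; run it separately for short and long pages."""
    return float(median(days_between_updates)) if days_between_updates else 0.0


def eeat_pass_rate(audits: list[dict[str, bool]]) -> float:
    """Share of pages passing every audit check (e.g. bio, references, date, schema)."""
    return sum(all(a.values()) for a in audits) / len(audits) if audits else 0.0


if __name__ == "__main__":
    log = [
        QueryObservation("how long should a blog post be", "google", True,
                         ["example.com", "other.org"], impressions=1000, clicks=40),
        QueryObservation("faq schema example", "google", True,
                         ["other.org"], impressions=500, clicks=30),
        QueryObservation("content decay definition", "google", False,
                         [], impressions=800, clicks=120),
    ]
    print(inclusion_rate(log, "example.com", "google"))  # cited on 1 of 3 tracked queries
    print(citation_share(log, "example.com", "google"))  # 1 of 3 citations in AI answers
    print(ctr_by_cohort(log, "google"))
```

Comparing the two CTR cohorts over time is the cohort tracking described above; the AI-module cohort should generally run lower, in line with Pew’s findings.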

Also consider: monitoring your visibility across AI search

- Consider using Geneo to monitor brand mentions, citations, and sentiment across AI Overviews, Perplexity, and ChatGPT, with historical tracking to see which short vs long assets get referenced over time. Disclosure: Geneo is our product.

FAQ


How short is too short?

- For most informational queries, anything under ~200–300 words risks looking “thin” unless it’s a definition or a snippet inside a broader hub. Aim for ≤800 words with structure and references.


Do tables and lists help?

- Yes—LLMs extract well from lists, tables, and bolded key facts. They also improve scannability for humans.


Does schema increase inclusion in AI Overviews?

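- There’s no AO-specific schema, but FAQPage/HowTo structured data aligns well with narrow intents and may help extraction. Note that in 2023 Google limited FAQ rich results to well-known, authoritative government and health sites and deprecated HowTo rich results, so the markup’s main value here is machine-readable structure rather than a SERP feature. Treat schema as a helper, not a guarantee, and follow Google’s structured data policies.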


Should I convert all long-form into short cards?

- No. Maintain canonical hubs for authority and conversions, then add spoke pages for the most common specific questions.

Sources and further reading

- Pew Research Center (2025) – Users click fewer links when AI summaries appear; typical AO snippet length and citation counts

- Search Engine Land (2025) – Coverage of Pew’s AO behavior findings

- Advanced Web Ranking (2024) – AI Overview length study across 8,000 keywords

- SE Ranking (2024) – AI Overviews 2024 recap, plus prevalence, sources, and length analyses

- Semrush (2024–2025) – AI Overviews hub and Sensor-based studies

- OpenAI (2025) – GPT-4o context window and output tokens

- Microsoft Docs (2025) – Copilot public web access, citation transparency, and privacy and protections

- Google (Jan 2025) – Search Quality Rater Guidelines (YMYL, E-E-A-T)