How 2025’s Latest Search & AI Algorithm Updates Impact Visibility

Explore 2025's Google core/spam updates, AI Overviews, Bing Copilot & more. See how ranking, CTR & citations are changing—act now to future-proof your content!

In 2025, the ground under organic visibility shifted again. Google shipped a March core update and an August spam update that reshuffled rankings and tightened spam detection. At the same time, AI-generated answers expanded across Google’s AI Overviews and AI Mode, Bing/Copilot experimented with new answer layouts, and ChatGPT/Perplexity improved research and citation behavior. The net effect: fewer clicks on traditional blue links, more competition to be cited inside AI answers, and a new measurement paradigm where “rank” is only one signal in a broader visibility mix.

Below, I’ll translate what changed this year into practical moves you can deploy in Q4 2025 to protect and expand your findability across engines.

What actually changed in 2025

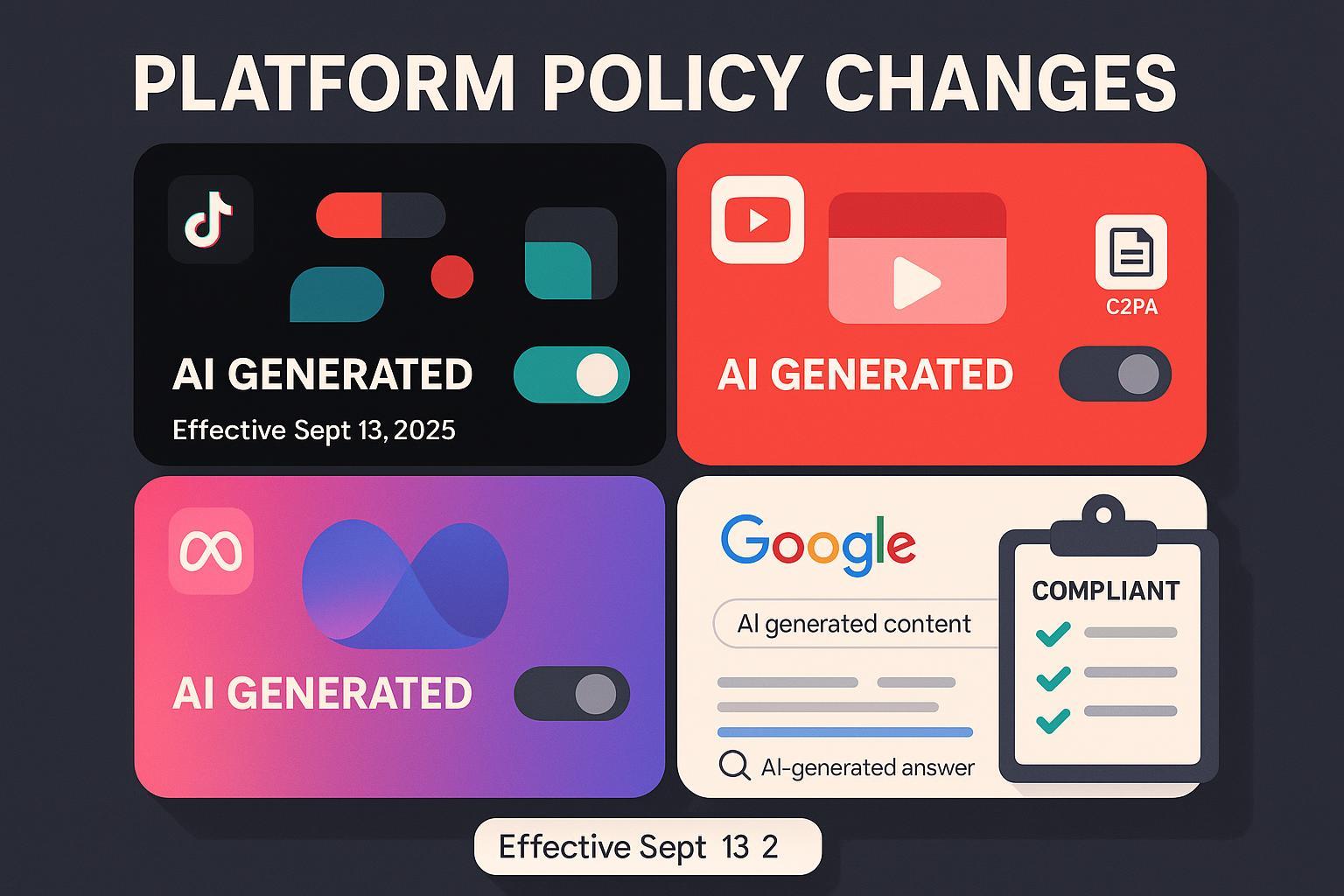

- On its Search Status Dashboard, Google confirmed a March 2025 core update (rolled out March 13–27, 2025) and an August 2025 spam update (rolled out August 26–September 22, 2025). See Google’s official entries for the March 2025 core update window and the August 2025 spam update timeline.

- Google’s AI Overviews and its separate AI Mode experience both scaled through mid‑2025, with Google emphasizing broader availability and engagement lift in its AI Mode product update (May 2025).

- Third-party measurement shows that AI Overviews expanded materially in early 2025: Semrush/Datos reported them triggering on about 13% of searches by March 2025, roughly double the January figure of about 6.5% (US desktop; methodology detailed in their study).

- Bing/Copilot tested answer UX variations that can tuck sources behind a “read more” toggle or shift layouts into two-column styles—changes that may suppress immediate publisher CTR. Industry observers documented these experiments with screenshots in SERoundtable’s August–October 2025 coverage.

- In early October, SEOs reported fresh ranking volatility following the late-summer updates. While Google didn’t post a new confirmed update for those specific days, industry trackers noted turbulence; see SERoundtable’s October webmaster recaps for context on the fluctuations.

Why this matters for visibility and reporting

- When AI summaries appear, users click fewer classic links. In a July 22, 2025, analysis of March browsing data, Pew found AI summaries on 18% of Google searches. Users who saw a summary clicked a traditional result in 8% of those visits, versus 15% when no summary appeared, and clicked a link inside the summary itself in only about 1% of visits. Read the details in the Pew Research Center’s 2025 analysis of AI summaries and clicks.

- Zero‑/low‑click dynamics are now structural. Bain & Company’s February 2025 brief estimates roughly 60% of searches end without a click and many companies see 15–25% organic declines as AI answers rise; see Bain’s 2025 “Goodbye Clicks, Hello AI” report.

- Combine those with the Google core/spam updates and you have a visibility model with three moving parts: (1) classic ranking, (2) inclusion and citation within AI answers, and (3) resulting CTR. Winning in 2025 means optimizing for all three.
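
To make the Pew figures concrete for planning, here is a rough back-of-the-envelope sketch. It assumes, as a simplification, that Pew’s per-visit click rates apply uniformly to every search, and it counts only clicks on traditional links; the 1,000-search batch is an illustrative input, not Pew data.

```python
# Rough estimate of traditional-link clicks using Pew's 2025 rates.
# Simplifying assumption: per-visit click rates apply uniformly to every search.

SUMMARY_SHARE = 0.18      # share of Google searches with an AI summary (Pew, March 2025)
CTR_WITH_SUMMARY = 0.08   # traditional-link click rate when a summary appeared
CTR_NO_SUMMARY = 0.15     # traditional-link click rate when no summary appeared


def expected_link_clicks(searches: int, summary_share: float = SUMMARY_SHARE) -> float:
    """Expected clicks on traditional links across a batch of searches."""
    with_summary = searches * summary_share * CTR_WITH_SUMMARY
    without_summary = searches * (1 - summary_share) * CTR_NO_SUMMARY
    return with_summary + without_summary


baseline = expected_link_clicks(1_000, summary_share=0.0)  # hypothetical world with no summaries
observed = expected_link_clicks(1_000)                      # Pew's 18% summary share
print(f"{baseline:.1f} -> {observed:.1f} clicks ({observed / baseline - 1:+.1%})")
```

With these inputs the estimate drops from 150 to about 137 traditional-link clicks per 1,000 searches, roughly 8% fewer. Swap in your own summary share to see how a rising trigger rate could affect a given query set.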

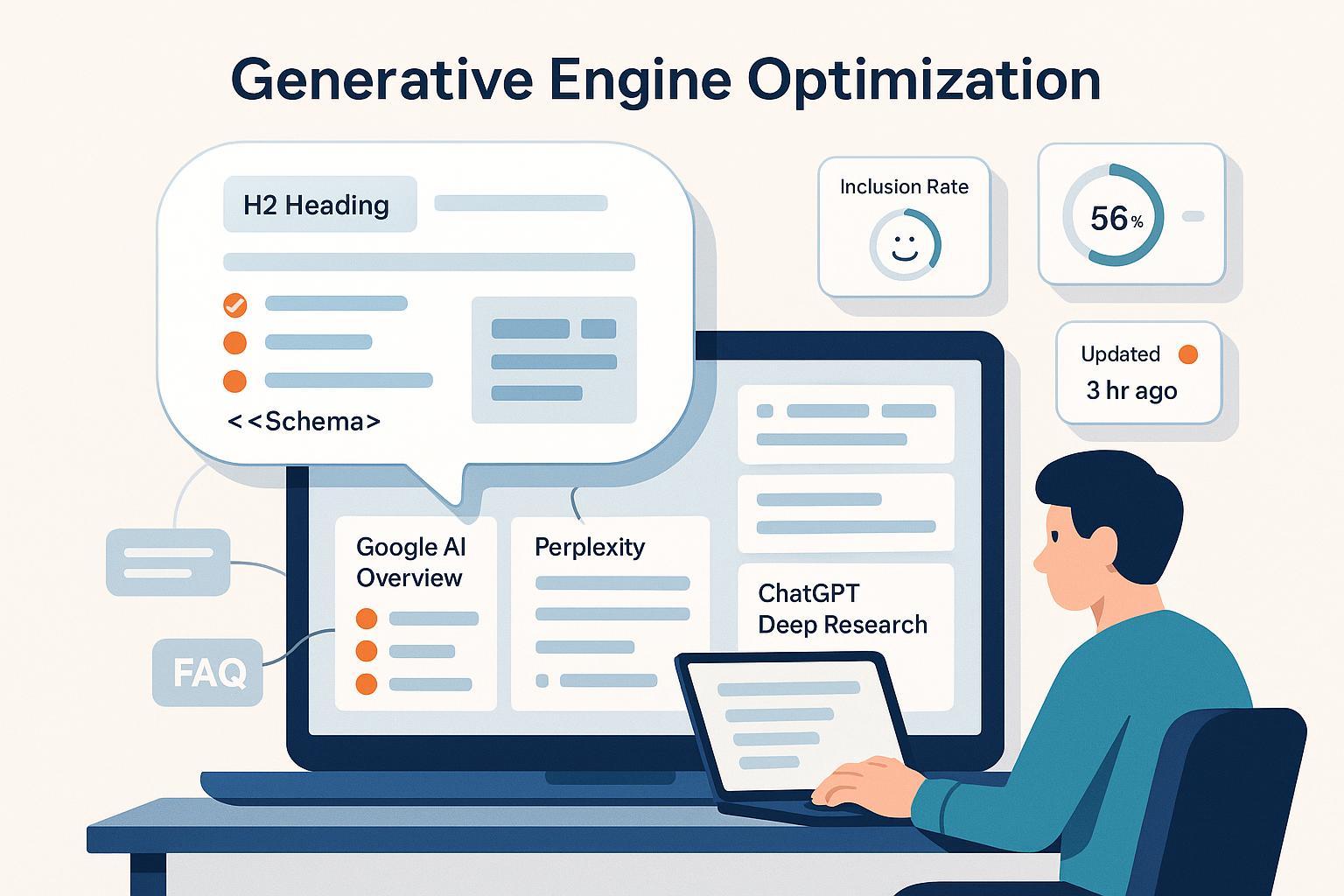

The new visibility model: ranking, citability, and evidence density

Think of AI answers as a parallel distribution channel with its own quality signals. Treat your content like an “answer supply chain”:

- Inputs: clean entities and facts (Organization/Person schema, consistent brand data, first‑party stats), quotable claims with dates and methods, expert bylines, and content formats (FAQs, How‑Tos, Pros/Cons) that map to common answer intents.

- Transformation: search engines and answer models summarize, rerank, and sometimes merge sources; layout experiments (like Bing’s) influence how and whether sources surface.

- Outputs: your brand can appear as a citation, a snippet, or a referenced entity—with sentiment attached. That’s measurable and influenceable.

Practical implications:

- Elevate “evidence density.” Make key pages easy to quote: concise claim boxes, explicit dates and sample sizes, and links to primary sources. Add appropriate schema (Article/WebPage, Organization, FAQ/HowTo, and Product, where pros and cons are expressed through review markup rather than a standalone type). For YMYL‑adjacent topics, show author credentials and, where appropriate, medical/legal review.

- Optimize for citability, not just rank. Headlines and H2s that match definitional, comparative, and procedural intents increase your chances of being pulled into multi-source answers.

- Track entity health. Ensure Knowledge Graph, Wikipedia/Wikidata, and directory/NAP facts are aligned; inconsistencies can degrade how confidently engines attribute facts to your brand.
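
Entity alignment is easier to maintain if you check it mechanically. The sketch below compares name, phone, and address (NAP) facts gathered from several profiles after light normalization. The profile data, source labels, and normalization rules (dropping legal suffixes, abbreviating street types, keeping the last 10 phone digits) are hypothetical assumptions for illustration, not a description of how any engine reconciles entities.

```python
import re

# Hypothetical brand facts collected from different profiles (illustrative only).
profiles = {
    "website_schema": {"name": "Acme Analytics, Inc.", "phone": "+1 (555) 010-2000",
                       "address": "12 Main St., Springfield"},
    "wikidata": {"name": "Acme Analytics Inc", "phone": "+1 555 010 2000",
                 "address": "12 Main Street, Springfield"},
    "directory": {"name": "Acme Analytics", "phone": "555-010-2000",
                  "address": "14 Main St, Springfield"},
}

LEGAL_SUFFIXES = {"inc", "llc", "ltd", "corp", "co"}
STREET_ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste"}


def _tokens(text: str) -> list[str]:
    """Lowercase and split on any run of characters that is not a letter or digit."""
    return [t for t in re.split(r"[^a-z0-9]+", text.lower()) if t]


def normalize(field: str, value: str) -> str:
    """Reduce cosmetic differences so only substantive mismatches remain."""
    if field == "phone":
        # Assumption: 10-digit North American numbers; keep the last 10 digits.
        return re.sub(r"\D", "", value)[-10:]
    tokens = _tokens(value)
    if field == "name":
        tokens = [t for t in tokens if t not in LEGAL_SUFFIXES]
    elif field == "address":
        tokens = [STREET_ABBREVIATIONS.get(t, t) for t in tokens]
    return " ".join(tokens)


def find_conflicts(sources: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """Map each conflicting field to the raw values reported by each source."""
    fields = sorted({field for facts in sources.values() for field in facts})
    conflicts = {}
    for field in fields:
        present = {src: facts[field] for src, facts in sources.items() if field in facts}
        if len({normalize(field, v) for v in present.values()}) > 1:
            conflicts[field] = present
    return conflicts


for field, values in find_conflicts(profiles).items():
    print(f"Mismatch in {field}: {values}")
```

In this made-up data the name and phone agree once normalized, and only the directory’s street number conflicts, so the check reports a single address mismatch to fix at the source.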

Engine-specific playbooks you can run this quarter

Google AI Overviews / AI Mode

- Target intents that commonly trigger summaries: definitions, step‑by‑step guides, pros/cons, and comparisons. Cover “who/what/how/compare” variants in subheads and FAQs.

- Package concise, quotable facts with inline citations to primary sources. Keep claims crisp (3–12 words) and near the top of the page.

- Tighten structured data and authorship. Include Organization, Article, and appropriate supporting types (FAQ/HowTo/Product). Make author expertise and first‑party data obvious.
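
As a concrete starting point for the structured-data bullet, this sketch emits Article markup in JSON-LD with an explicit Person author and Organization publisher. The property names are standard schema.org vocabulary; the helper function, headline, names, and example.com URLs are hypothetical placeholders, and real output should be validated (for example with Google’s Rich Results Test) before shipping.

```python
import json
from datetime import date


def article_jsonld(headline: str, author_name: str, author_title: str,
                   author_profiles: list[str], org_name: str, org_url: str,
                   published: date, modified: date) -> str:
    """Return a <script> tag containing Article JSON-LD with author and publisher entities."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),  # keep in sync with any visible "Updated on" line
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": author_title,
            "sameAs": author_profiles,  # profiles that corroborate the author's identity
        },
        "publisher": {"@type": "Organization", "name": org_name, "url": org_url},
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


snippet = article_jsonld(
    headline="How AI Overviews choose sources",  # hypothetical
    author_name="Jane Doe",                      # hypothetical
    author_title="Head of Search Research",
    author_profiles=["https://www.example.com/team/jane-doe"],
    org_name="Example Co",
    org_url="https://www.example.com",
    published=date(2025, 7, 1),
    modified=date(2025, 10, 12),
)
print(snippet)
```

Generating the markup from the same data that renders the visible byline and dates helps keep the two consistent, which is the point of the authorship advice above.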

Bing / Copilot answers

- Lead with a one‑sentence definition or takeaway; Copilot often pulls the first 2–3 sentences.

- Keep source titles descriptive and unambiguous—tests show source visibility may collapse behind “read more” in some layouts, so clarity matters if only the title is shown. See examples discussed in SERoundtable’s 2025 write‑up of Copilot UX tests.
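
One way to audit the “lead with a takeaway” advice is to look only at a page’s opening sentences, since those are what the article suggests Copilot often draws on. This sketch uses a naive regex sentence splitter (real extractors are more sophisticated), and the 25-word ceiling for the first sentence is an arbitrary illustrative threshold, not a documented Copilot limit.

```python
import re


def lead_sentences(text: str, n: int = 3) -> list[str]:
    """Naive split on ., !, or ? followed by whitespace; returns the first n sentences."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return sentences[:n]


def audit_intro(text: str, max_words: int = 25) -> dict:
    """Report the opening sentences and whether the first one is short enough to quote."""
    lead = lead_sentences(text)
    first_words = len(lead[0].split()) if lead else 0
    return {
        "lead": lead,
        "first_sentence_words": first_words,
        "first_sentence_ok": 0 < first_words <= max_words,
    }


intro = (
    "Evidence density is how easily a page's key claims can be quoted with their dates and sources. "
    "It raises the odds of being cited. "
    "This guide covers schema, claim boxes, and monitoring. "
    "We also compare engines."
)
report = audit_intro(intro)
print(report["first_sentence_words"], report["first_sentence_ok"])
```

Running this across templates or a crawl export gives a quick list of pages whose opening sentence buries the takeaway.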

ChatGPT (browsing, Deep Research) and OpenAI ecosystem

- Provide “evidence‑dense” pages that browsing agents can cite cleanly. Clear headings that map to sub‑questions improve retrieval.

- Expect citation breadth and formatting to keep evolving as OpenAI updates Deep Research and the Responses API; track notable behavior changes via the OpenAI Responses/API updates overview.
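
To check whether your headings map to sub-questions, you can pull the H2/H3 outline from a page. This sketch uses Python’s standard-library `HTMLParser`; treating a heading as “question-shaped” when it ends with “?” or starts with a common question word is a crude heuristic assumed for illustration, not how any browsing agent scores headings.

```python
from html.parser import HTMLParser
from typing import Optional

QUESTION_STARTERS = {"who", "what", "when", "where", "why", "how", "which",
                     "can", "does", "do", "is", "are", "should"}


class HeadingOutline(HTMLParser):
    """Collect (tag, text) pairs for every <h2> and <h3> in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: list[tuple[str, str]] = []
        self._open_tag: Optional[str] = None
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self._open_tag, self._buffer = tag, []

    def handle_endtag(self, tag):
        if tag == self._open_tag:
            text = " ".join("".join(self._buffer).split())  # collapse whitespace
            self.headings.append((tag, text))
            self._open_tag = None

    def handle_data(self, data):
        if self._open_tag:  # also captures text inside nested tags like <em>
            self._buffer.append(data)


def is_question_shaped(heading: str) -> bool:
    """Crude heuristic: ends with '?' or starts with a common question word."""
    words = heading.lower().split()
    return heading.endswith("?") or (bool(words) and words[0] in QUESTION_STARTERS)


page = """
<h1>AI search in 2025</h1>
<h2>What changed in Google AI Overviews?</h2>
<h3>How often do <em>summaries</em> appear</h3>
<h2>Our methodology</h2>
"""
parser = HeadingOutline()
parser.feed(page)
for tag, text in parser.headings:
    print(tag, "question" if is_question_shaped(text) else "statement", "|", text)
```

Headings flagged as statements are not necessarily wrong (a methodology section rarely needs a question), but the outline makes it easy to spot sections that could be renamed to match a sub-question.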

Perplexity

- Prioritize high‑signal sources (original research, primary data, authoritative explainers). Perplexity’s research modes highlight citation trails, so make your methodology and attributions explicit to give the engine clear grounds to include and cite your pages.

- Where appropriate, publish short “findings summaries” above the fold to increase snippet‑level quotability.

A practical monitoring workflow (cross‑engine)

- Baseline weekly: measure classic rankings, AI Overviews presence for your priority queries, and the count/position of your citations inside answer boxes across Google, Bing/Copilot, ChatGPT, and Perplexity.

- Track entity integrity: reconcile your brand’s structured profiles (schema, Wikidata, knowledge panels) each month.

- Investigate losses: when CTR drops, separate “rank loss” from “answer displacement” (you still rank, but an AI summary absorbs clicks).
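
The split between rank loss and answer displacement can be written down as an explicit rule so it is applied the same way every week. This sketch assumes you already collect, per query, average position and CTR (for example from a Search Console export) plus your own record of whether an AI answer appeared and cited you; the record shape and both thresholds (a one-position slide, a 20% relative CTR drop) are hypothetical choices to tune.

```python
from dataclasses import dataclass


@dataclass
class WeeklySnapshot:
    query: str
    avg_position: float    # classic rank; lower is better
    ctr: float             # clicks / impressions for the week
    ai_answer_shown: bool  # from your own SERP or answer tracking
    cited_in_answer: bool  # whether your domain was among the answer's sources


def diagnose(prev: WeeklySnapshot, curr: WeeklySnapshot,
             position_tolerance: float = 1.0, ctr_drop: float = 0.20) -> str:
    """Label one query's week-over-week change (thresholds are illustrative)."""
    if prev.ctr == 0 or curr.ctr >= prev.ctr * (1 - ctr_drop):
        return "stable"
    if curr.avg_position - prev.avg_position > position_tolerance:
        return "rank loss"
    if curr.ai_answer_shown and not prev.ai_answer_shown:
        cited = "cited" if curr.cited_in_answer else "not cited"
        return f"answer displacement ({cited})"
    return "unexplained CTR drop"


prev = WeeklySnapshot("crm pricing", avg_position=3.2, ctr=0.12,
                      ai_answer_shown=False, cited_in_answer=False)
curr = WeeklySnapshot("crm pricing", avg_position=3.4, ctr=0.07,
                      ai_answer_shown=True, cited_in_answer=False)
print(diagnose(prev, curr))
```

Here the query held its position but lost over 40% of its CTR in the week a summary first appeared without citing the site, so it is labeled answer displacement rather than rank loss.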

Tooling example

- If you need an at‑a‑glance, cross‑engine view of AI answer citations and sentiment, Geneo can monitor mentions across Google AI Overviews, ChatGPT, Perplexity, and more, with historical tracking and basic sentiment labeling. Disclosure: Geneo is our product.

Your Q4 2025 operating cadence (and risk controls)

- Adopt a change‑log habit. Add a small “Updated on {date}” line to high‑intent pages when you refresh data or clarify definitions. It helps users and signals freshness.

- Refresh critical pages every 60–90 days this quarter. Update stats, methods, and examples; surface your most recent figures in summary boxes.

- Segment reporting by channel mechanics: classic organic, AI answer presence, and answer‑box citations. Do not rely solely on rank and sessions.

- Guard against measurement noise. During update windows, third‑party volatility trackers can swing; lean on Search Console and first‑party analytics for directional truth. For color commentary on current swings, see our take in this October 2025 algorithm volatility explainer.

- Evidence and schema audits. Quarterly, verify that your top 50 URLs expose quotable facts with sources and carry correct schema/author info.

- Distribution and digital PR. Seed quotable stats (license and attribute clearly) and engage high‑trust communities. Practical guidance on earning coverage that answer engines notice is outlined in our post on Reddit-driven AI search citations.
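
The 60–90 day refresh window and the “Updated on {date}” line are easy to automate from a content inventory. In this sketch the URLs and last-refreshed dates are hypothetical, “due” means a page has entered the window and “overdue” means it is past 90 days, and the line format mirrors the dated note at the end of this article.

```python
from datetime import date

REFRESH_MIN_DAYS = 60  # start of the refresh window suggested above
REFRESH_MAX_DAYS = 90  # end of the window


def refresh_status(last_refreshed: date, today: date) -> str:
    """Classify a page by how long ago it was last refreshed."""
    age_days = (today - last_refreshed).days
    if age_days > REFRESH_MAX_DAYS:
        return "overdue"
    if age_days >= REFRESH_MIN_DAYS:
        return "due"
    return "ok"


def updated_line(refreshed: date, note: str) -> str:
    """Render a visible change-log line for a refreshed page."""
    return f"Updated on {refreshed.isoformat()}: {note}"


inventory = {  # hypothetical URLs and last-refreshed dates
    "/guides/ai-overviews": date(2025, 6, 20),
    "/guides/schema-basics": date(2025, 8, 1),
    "/research/2025-benchmarks": date(2025, 9, 15),
}
today = date(2025, 10, 12)
for url, last in inventory.items():
    print(f"{url}: {refresh_status(last, today)}")
print(updated_line(today, "Refreshed Q3 figures and methodology notes."))
```

With the example date of 2025-10-12, the three pages come out overdue (114 days), due (72 days), and ok (27 days).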

Mini checklist by role

- Marketing leadership: Reforecast traffic with a zero‑/low‑click assumption; reallocate toward evidence development (original research) and syndication that grows citability. Reference macro context in Bain’s 2025 analysis when aligning exec expectations.

- SEO managers: Stand up AI‑answer tracking, implement entity and schema fixes, and prioritize “evidence‑dense” rewrites on pages that map to high‑summary intents.

- Content editors: Standardize claim boxes with dates/methods, expand FAQ/HowTo sections, and ensure expert bylines are present and consistent.

Benchmarks to watch and how to interpret them

- Frequency of AI Overviews: Track the percent of your priority queries that trigger summaries. Semrush/Datos’ US‑desktop snapshot placed triggering at about 13% in March 2025; your vertical may differ.

- Click behavior when summaries appear: The Pew 2025 study on AI summaries and link clicks is a conservative baseline—use it to calibrate executive expectations.

- Macro zero‑click pressure: Bain’s 2025 “Goodbye Clicks, Hello AI” highlights the structural nature of the shift; plan channel diversification accordingly (email, social, partner syndication).
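
To put your own numbers next to the Semrush/Datos figure, compute the trigger rate over your priority query set. The queries and observations below are made up; the 13% reference is the US-desktop March 2025 snapshot cited earlier and may not match your vertical or device mix.

```python
SEMRUSH_MARCH_2025_RATE = 0.13  # US desktop snapshot; your vertical may differ

# Hypothetical weekly observations: query -> did an AI Overview appear?
observations = {
    "what is evidence density": True,
    "best crm for startups": True,
    "crm pricing comparison": False,
    "acme analytics login": False,
    "how to add faq schema": True,
}


def trigger_rate(obs: dict[str, bool]) -> float:
    """Share of tracked queries that showed an AI Overview (0.0 for an empty set)."""
    return sum(obs.values()) / len(obs) if obs else 0.0


rate = trigger_rate(observations)
print(f"AI Overview trigger rate: {rate:.0%} (benchmark {SEMRUSH_MARCH_2025_RATE:.0%})")
```

A priority set weighted toward definitional and how-to queries can sit far above the broad benchmark, which is exactly why the comparison should be made per vertical rather than against a single headline number.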

The bottom line

Classic rankings still matter, but in 2025 the bigger lever is your citability inside AI answers—and the evidence density, entity hygiene, and packaging that earn it. Treat AI answers as a distribution channel, not a black box. Refresh high‑intent pages this quarter, harden your schema and authorship signals, and add a weekly cadence to measure answer presence and citations across engines.

If you want a consolidated view of your brand’s AI‑answer presence while you operationalize these changes, you can centralize monitoring with Geneo and keep your team focused on evidence‑driven content improvements.

Updated on 2025-10-12: Added references to early‑October ranking volatility observations and Bing Copilot UX testing.