Top SaaS Customer Questions to AI Assistants — Agency Optimization Guide

Get the answers agencies need: Top SaaS questions buyers ask AI assistants, how citations work, and steps to optimize your FAQ for top visibility.

SaaS buyers now ask AI assistants for quick, confident answers before they ever talk to sales—or even click a blue link. This FAQ maps the questions customers ask across the journey, how engines choose sources, and what agencies can do to get reliable citations, build authority, and measure AI visibility. If assistants increasingly decide which brands show up, how do you make sure they quote you accurately?

What questions do SaaS buyers ask AI assistants during discovery and evaluation?

Across thousands of category searches, the same intents keep showing up: price and ROI, security and compliance, integrations and implementation, usability, vendor credibility, and side‑by‑side comparisons. Research highlights transparency on pricing and fast time‑to‑value as urgent expectations in B2B purchase decisions, per the G2 2024 Buyer Behavior Report (PDF) and HubSpot’s overview of the new buyer’s journey (2025). Security certifications, incident history, and implementation feasibility also surface as frequent deal gates in vendor guidance and buyer studies.

Two quick examples:

- Pricing/ROI: “How much does [Product] cost? What’s in each plan? What’s the payback period?” G2’s buyer research and its explainer on software pricing transparency (2025) show demand for clear pricing and quick value realization.

- Security/Compliance: “Is [Product] SOC 2 or ISO 27001 certified? How is data encrypted? Is SSO/SAML supported?” These themes appear consistently in review‑platform vendor guidance and evaluation checklists.

To help teams plan by stage, use this high‑level map:

| Journey stage | Example AI question intents |
|---|---|
| Discovery | “What category fits my use case?” “Top [category] tools for [industry].” “Budget ranges for [category].” |
| Evaluation | “X vs Y for mid‑market teams.” “Does [Product] integrate with Salesforce/Slack?” “SOC 2? ISO 27001? SSO/SAML/SCIM?” “Implementation timeline and resources.” |
| Onboarding | “How do I set up SSO?” “How to import users/data?” “What are API rate limits?” “How to configure alerts?” |
| Support | “Billing changes and refunds.” “Integration failed: how to fix?” “Exporting data on cancellation.” |

When you write the answers, keep one intent per question and make the top 2–5 sentences complete on their own. Think of that block as an answer capsule that an assistant can quote without trimming context.
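To make the capsule rule checkable during editing, here is a minimal Python sketch. It assumes a naive sentence splitter (a `.`, `?`, or `!` followed by whitespace) and counts markdown‑style links as a rough proxy for link density. The names `check_capsule` and `CapsuleReport`, the `max_links` threshold, and the “Acme Analytics” sample text are hypothetical illustrations, not an established standard.

```python
import re
from dataclasses import dataclass

# Naive sentence boundary (assumption): ., ?, or ! followed by whitespace.
# Abbreviations such as "e.g." will be over-counted.
SENTENCE_END = re.compile(r"(?<=[.?!])\s+")
# Markdown-style links: [anchor](url)
MD_LINK = re.compile(r"\[[^\]]+\]\([^)]+\)")


@dataclass
class CapsuleReport:
    sentences: int
    links: int
    ok: bool


def check_capsule(text: str, min_sentences: int = 2, max_sentences: int = 5,
                  max_links: int = 1) -> CapsuleReport:
    """Check an answer capsule against the 2-5 sentence guideline.

    max_links is a hypothetical threshold standing in for "low link density".
    """
    sentences = [s for s in SENTENCE_END.split(text.strip()) if s]
    links = len(MD_LINK.findall(text))
    ok = min_sentences <= len(sentences) <= max_sentences and links <= max_links
    return CapsuleReport(len(sentences), links, ok)


# Hypothetical capsule for a made-up product.
capsule = (
    "Acme Analytics starts at $49 per seat per month. "
    "Every plan includes SSO and a 14-day trial. "
    "See the [pricing page](https://example.com/pricing) for annual discounts."
)
print(check_capsule(capsule))  # CapsuleReport(sentences=3, links=1, ok=True)
```

A regex splitter is crude, but it is enough to flag capsules that run long or carry several links before a human review.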

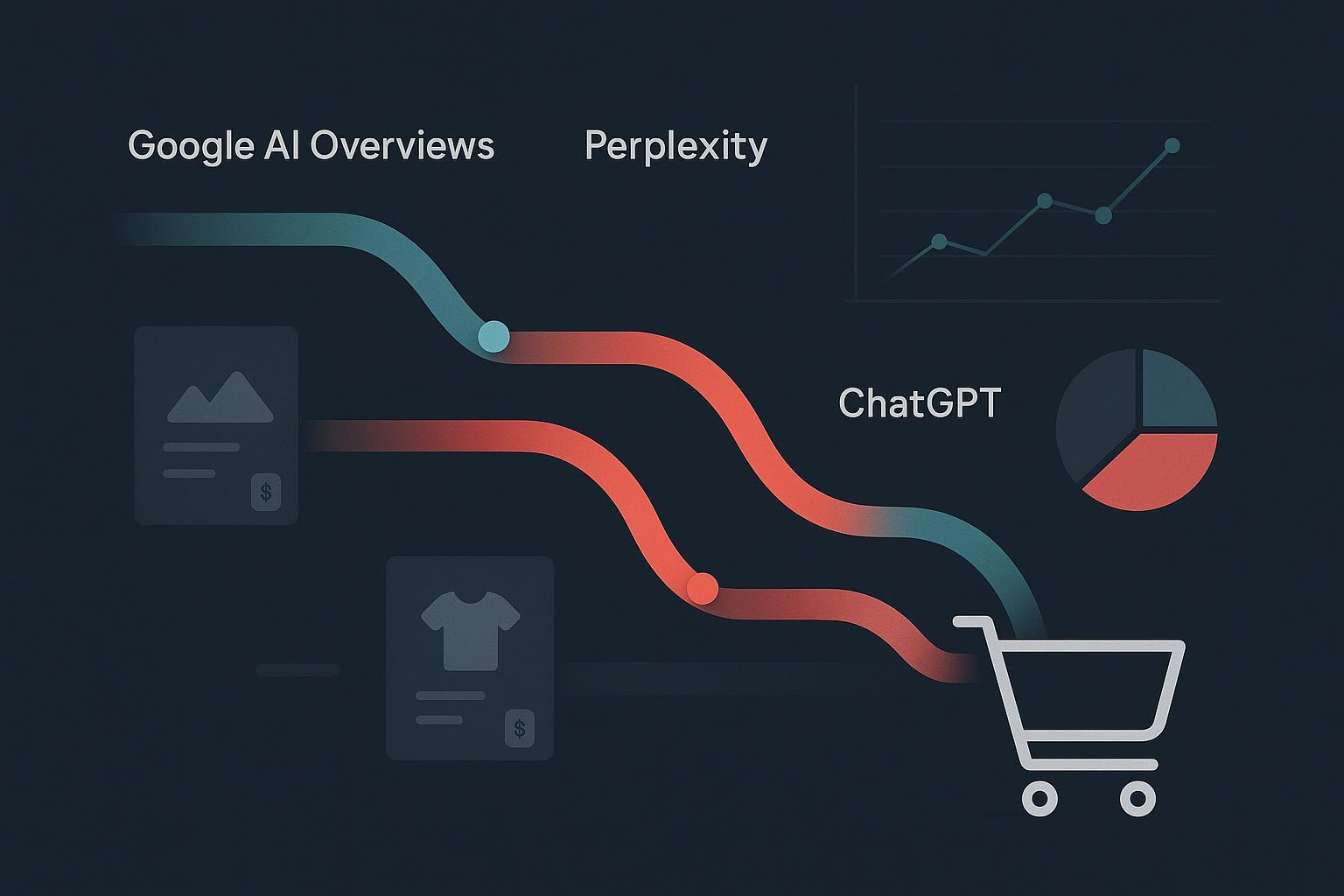

How do AI assistants pick sources and citations?

- Google AI Overviews (AIO): Google says AI Overviews synthesize subtopics and show a diverse set of supporting links, and that standard controls (noindex, nosnippet, and max‑snippet) govern how your content appears in them. There are no special technical requirements beyond the usual quality and eligibility standards, so optimize for helpful, people‑first content, clean indexing, and topical authority, per Google’s AI features guidance (2025). Independent studies through 2025 show mixed traffic impact: several report lower CTR on informational queries that show an AI Overview than on classic results, while Google says these modules can send higher‑quality clicks. Set client expectations accordingly, and aim to be one of the cited sources by providing extractable answers and depth. See Search Engine Land’s reporting on CTR shifts (2025) for one such analysis.

- Perplexity: Perplexity is built around citations: answers include numbered references that link to their sources, per the Perplexity Help Center (2025). Analyses from 2024–2025 suggest it often cites more sources than peer assistants and favors up‑to‑date, authoritative pages for factual claims.

- ChatGPT (with search/browsing): When browsing or advanced research is enabled, ChatGPT cites its source material; see OpenAI’s capabilities overview (2025). Per Search Engine Land’s late‑2025 analysis of ChatGPT citations, content traits correlated with being cited include clear answer capsules, owned or original data, and low link density inside the capsule.

What content patterns make your answers extractable for AI assistants?

- Build answer‑first sections: Put a short, self‑contained answer at the top of each question or subtopic, then expand below it. Use specific, unambiguous language and cite authoritative sources for your claims. Keep link density inside the capsule low so the quoting engine can attribute it cleanly.

- Use supported structured data (and avoid deprecated types):

  - FAQPage: Still useful for structuring Q&A pairs, even though Google has limited FAQ rich results to well‑known government and health sites since 2023; see the FAQPage structured data documentation. Keep one FAQPage object per page, make sure every Question and its Answer are visible on the page, and validate with the Rich Results Test.

  - Product/ProductGroup: For product or pricing‑plan pages, include the required properties and add return or merchant policies where relevant; see Google’s Product variants guidance and merchant return policy schema docs (2024–2025). Google may ignore incomplete markup (noted in 2024 office hours).

  - HowTo: Google stopped showing HowTo rich results in 2023 (see its announcement on changes to HowTo and FAQ rich results), so do not rely on them for visibility.

  - Article: Article markup can still help Google understand a page’s title, images, and dates, but generic Article enhancements are de‑emphasized, so lean on fundamentals. See the SEO Starter Guide (updated 2026) for current best practices.

- Entity clarity and internal linking: Reinforce core entities (your product, category, integrations) with consistent naming and internal links from related pages. This helps assistants resolve ambiguity and improves your chance of being included in comparisons.

- Validation workflow: After shipping, validate the JSON‑LD, check the live page with Search Console’s URL Inspection tool, resolve errors and warnings, and keep a change log. Monitor whether assistants paraphrase your capsule accurately, and adjust the phrasing where they don’t.
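The FAQPage guidance and the validation workflow above can be sketched in Python: build a single schema.org FAQPage JSON‑LD object from Q&A pairs, then confirm that each question and answer actually appears in the page’s visible text. This is a minimal, standard‑library sketch; the helper names and the “Acme” Q&A content are hypothetical, and the visibility check is deliberately naive (it skips `<script>` and `<style>` content, including the JSON‑LD itself, but does not evaluate CSS). It complements the Rich Results Test rather than replacing it.

```python
import json
from html.parser import HTMLParser


def build_faq_jsonld(pairs):
    """Return one schema.org FAQPage object for a list of (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


class VisibleText(HTMLParser):
    """Collect text outside <script>/<style>; CSS-hidden elements are NOT detected."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def missing_from_page(faq, html):
    """List questions whose question or answer text is absent from the visible text."""
    parser = VisibleText()
    parser.feed(html)
    visible = " ".join(" ".join(parser.chunks).split())  # collapse whitespace
    missing = []
    for item in faq["mainEntity"]:
        q = " ".join(item["name"].split())
        a = " ".join(item["acceptedAnswer"]["text"].split())
        if q not in visible or a not in visible:
            missing.append(item["name"])
    return missing


# Hypothetical Q&A for a made-up product; the second pair is marked up but not shown.
faq = build_faq_jsonld([
    ("Is Acme SOC 2 certified?", "Yes. Acme completed a SOC 2 Type II audit."),
    ("Does Acme support SSO?", "Yes, via SAML 2.0."),
])
html = (
    "<html><body>\n"
    "<h3>Is Acme SOC 2 certified?</h3><p>Yes. Acme completed a SOC 2 Type II audit.</p>\n"
    '<script type="application/ld+json">' + json.dumps(faq) + "</script>\n"
    "</body></html>"
)
print(json.dumps(faq, indent=2))
print(missing_from_page(faq, html))  # ['Does Acme support SSO?']
```

Flagging the second question is the point: its answer exists only in the markup, which is exactly the parity gap the FAQPage guidance warns against.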

How should we localize FAQs for different countries and languages?

- Start with intent research per locale: Question stems shift by market; payment methods, data residency, or local integrations may matter more in some regions. Build locale‑specific Q&A where it adds value.

- Implement hreflang and maintain parity: Use correct hreflang annotations for each language/region pair, avoid IP‑based redirects, and keep content parity so assistants don’t see conflicting answers across locales. For a practical overview, see Ahrefs’ localization SEO guide (2025).

- Translate for clarity, not just words: Align terminology with local norms (e.g., “SSO” vs. “enterprise login”), and update screenshots and workflows. Keep a single source of truth for facts (pricing logic, security claims) and manage local exceptions explicitly.

- Operational cadence: Localize high‑intent pages first (pricing, integrations, security FAQ), then expand. Review quarterly to keep fast‑changing details current.
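For the hreflang step, generating the annotations from one mapping helps keep them reciprocal: every localized version should list all versions, including itself, and an optional `x-default` can point to a fallback page. This Python sketch assumes one absolute URL per locale; the `hreflang_links` helper and the example.com URLs are hypothetical placeholders.

```python
from html import escape


def hreflang_links(versions, x_default):
    """Build <link rel="alternate"> tags for one cluster of localized pages.

    versions:  mapping of hreflang code (e.g. "en-gb") to absolute URL.
    x_default: fallback URL for users whose language/region matches no version.
    Emit the SAME tag list on every URL in the cluster so each page
    references itself and all of its alternates (reciprocal annotations).
    """
    tags = [
        f'<link rel="alternate" hreflang="{code}" href="{escape(url)}" />'
        for code, url in sorted(versions.items())
    ]
    tags.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />'
    )
    return "\n".join(tags)


# Hypothetical pricing page localized for three markets.
versions = {
    "en-us": "https://example.com/en-us/pricing",
    "en-gb": "https://example.com/en-gb/pricing",
    "de-de": "https://example.com/de-de/pricing",
}
print(hreflang_links(versions, "https://example.com/pricing"))
```

Generating the block from a single mapping, rather than hand‑editing each page, is one way to avoid the missing or one‑way annotations that break locale parity.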

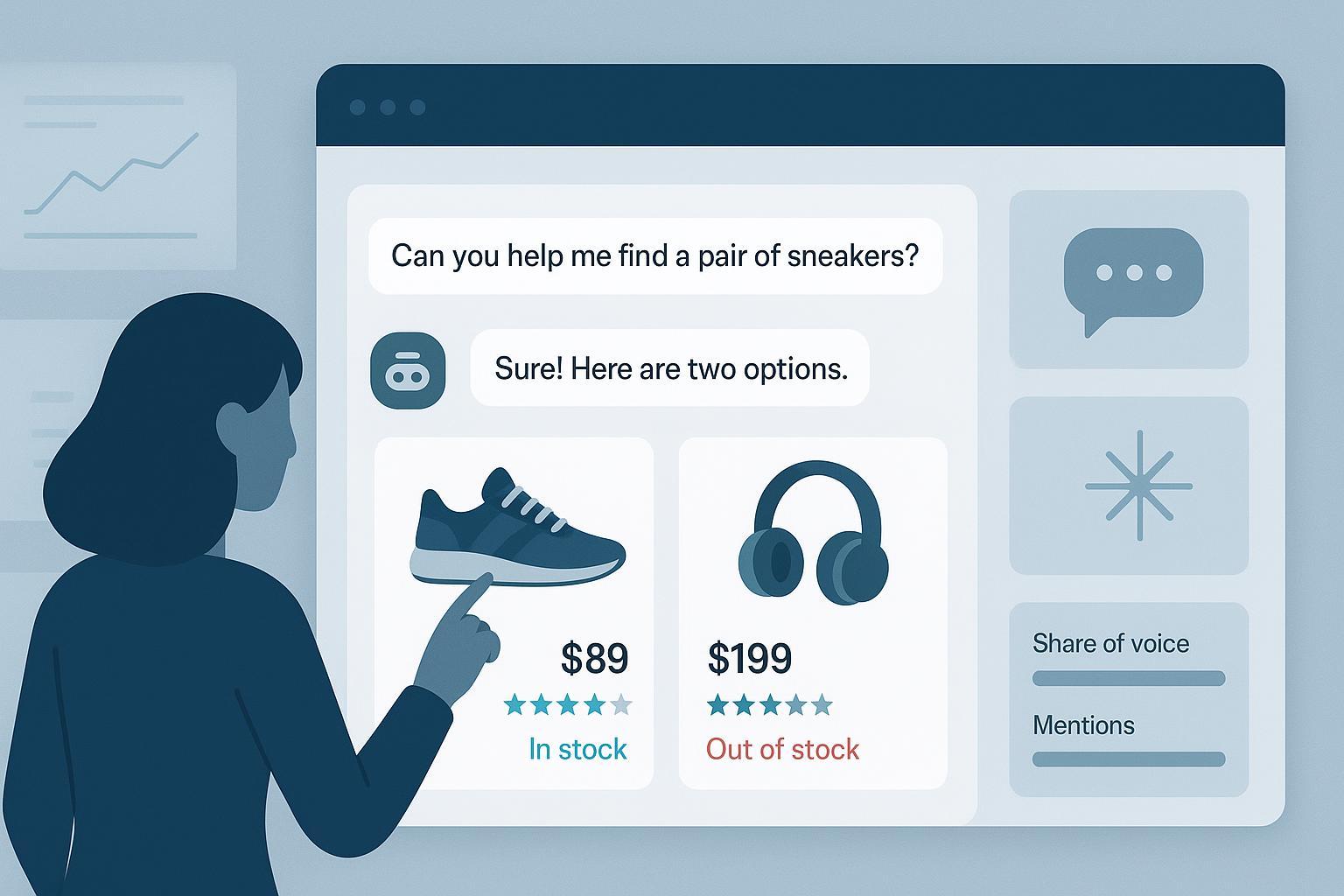

How can agencies measure and iterate AI visibility across engines?

Define KPIs that reflect multi‑engine reality:

- Inclusion and citation rate by engine (AIO, Perplexity, ChatGPT‑browsing)

- Share of Voice across engines and query sets

- Co‑citations with authoritative entities and directories

- Journey‑stage coverage (what percent of key questions cite or mention your brand?)

- Qualitative accuracy of AI paraphrases

- Changes in inclusion post‑update (content refreshes, schema tweaks, PR wins)
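To make the first two KPIs concrete, here is a minimal Python sketch that computes citation rate per engine and a simple Share of Voice from a log of prompt runs. It assumes a hypothetical log format (one record per engine and prompt, listing the brands cited in that answer) and defines Share of Voice as your brand’s share of all brand citations on that engine; teams and tools define SoV differently, so treat this formula as one option. The engine labels and brand names are placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Run:
    engine: str          # hypothetical labels, e.g. "aio", "perplexity", "chatgpt"
    prompt: str
    cited_brands: list   # brands cited or linked in the answer (repeats allowed)


def visibility_kpis(runs, brand):
    """Return {engine: {"citation_rate": ..., "share_of_voice": ...}}.

    citation_rate  = prompts where `brand` is cited / prompts run on that engine
    share_of_voice = citations of `brand` / all brand citations on that engine
    """
    prompts = defaultdict(int)
    cited = defaultdict(int)
    ours = defaultdict(int)
    total = defaultdict(int)
    for run in runs:
        prompts[run.engine] += 1
        if brand in run.cited_brands:
            cited[run.engine] += 1
        ours[run.engine] += run.cited_brands.count(brand)
        total[run.engine] += len(run.cited_brands)
    return {
        engine: {
            "citation_rate": cited[engine] / prompts[engine],
            "share_of_voice": ours[engine] / total[engine] if total[engine] else 0.0,
        }
        for engine in prompts
    }


# Hypothetical log entries from a fixed prompt set.
runs = [
    Run("perplexity", "best SOC 2 ready CRM", ["Acme", "Globex"]),
    Run("perplexity", "Acme vs Globex pricing", ["Globex"]),
    Run("aio", "best SOC 2 ready CRM", ["Acme", "Initech", "Globex", "Acme"]),
]
print(visibility_kpis(runs, "Acme"))
```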

Set a steady cadence:

- Track weekly across a fixed prompt set; annotate changes in a log.

- Run structured updates every 3–6 months and compare pre/post visibility.

- A/B test answer capsules and schema presence/placement on representative pages.
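For the pre/post comparison, a simple starting point is the change in citation rate on the same fixed prompt set before and after an update, plus the specific prompts gained or lost. This sketch assumes a hypothetical input of one boolean per prompt (cited or not) for each period; with small prompt sets, a swing of a few prompts may be noise rather than evidence that the update worked.

```python
def citation_rate(results):
    """Share of prompts (prompt -> cited?) where the brand was cited."""
    return sum(results.values()) / len(results) if results else 0.0


def pre_post_delta(before, after):
    """Compare two runs of the same fixed prompt set.

    before/after: {prompt: cited?}. Only prompts present in both runs are
    compared, so adding or dropping prompts does not skew the delta.
    """
    shared = before.keys() & after.keys()
    pre = citation_rate({p: before[p] for p in shared})
    post = citation_rate({p: after[p] for p in shared})
    gained = sorted(p for p in shared if after[p] and not before[p])
    lost = sorted(p for p in shared if before[p] and not after[p])
    return {"pre": pre, "post": post, "delta": post - pre,
            "gained": gained, "lost": lost}


# Hypothetical results before and after a content refresh.
before = {"acme pricing": False, "acme sso": True,
          "acme vs globex": False, "acme api limits": False}
after = {"acme pricing": True, "acme sso": True,
         "acme vs globex": False, "acme api limits": True}
print(pre_post_delta(before, after))
```

Listing gained and lost prompts alongside the rate makes it easier to tie a change back to the specific pages or capsules you edited, which is what the change log is for.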

Disclosure: Geneo (Agency) is our product. For cross‑engine monitoring and client‑ready reporting, agencies often centralize AI mentions and Share of Voice in one place. A tool like Geneo (Agency) supports tracking brand mentions and citations across ChatGPT, Perplexity, and Google AI Overviews, with white‑label dashboards for reporting. For planning the audit itself, see this guide to an AI visibility audit and a practical ChatGPT vs. Perplexity vs. Gemini vs. Bing monitoring comparison.

Quick troubleshooting: Why aren’t we getting cited?

- Your answers aren’t quote‑ready: The page buries the lead or mixes multiple intents. Create a crisp capsule (2–5 sentences) with unambiguous language up top.

- Outdated or uncited claims: Assistants prefer current, attributable facts. Refresh data, add dates, and link to original reports using descriptive anchors.

- Weak entity signals: Your product and category aren’t reinforced across the site. Tighten internal linking, clarify naming conventions, and earn co‑citations from credible sources.

- Deprecated or misapplied schema: Remove broken HowTo markup, fix FAQ parity issues (marked‑up answers that aren’t visible on the page), and complete Product markup. Validate after every release.

- Locale mismatch: Hreflang is missing or IP redirects are interfering. Ensure parity and stable URLs.

- Competitive set moved: Rivals shipped new comparisons or original data. Update your content, add owned data, and pursue digital PR to strengthen authority.

Ready to move? Make a short list of the five highest‑intent questions per stage, create answer‑first capsules with supporting evidence, deploy structured data you can validate, and set a weekly monitoring rhythm. Then iterate. That’s how you build durable AI visibility without chasing every algorithmic twitch.