The Ultimate Guide to Optimizing Content for AI Search Results (2025)

Master 2025 tactics for earning citations in AI-powered search across Google AI Overviews, Bing Copilot, ChatGPT, and Perplexity, with advanced schema, robots.txt governance, and measurement. Get the playbook!

AI answers now sit above or alongside classic results. In 2025, winning visibility means earning citations inside AI Overviews, Copilot answers, ChatGPT/Search, and Perplexity, without abandoning traditional SEO. This guide gives you a practitioner-grade playbook: what changes, what to implement, and how to measure it across engines.

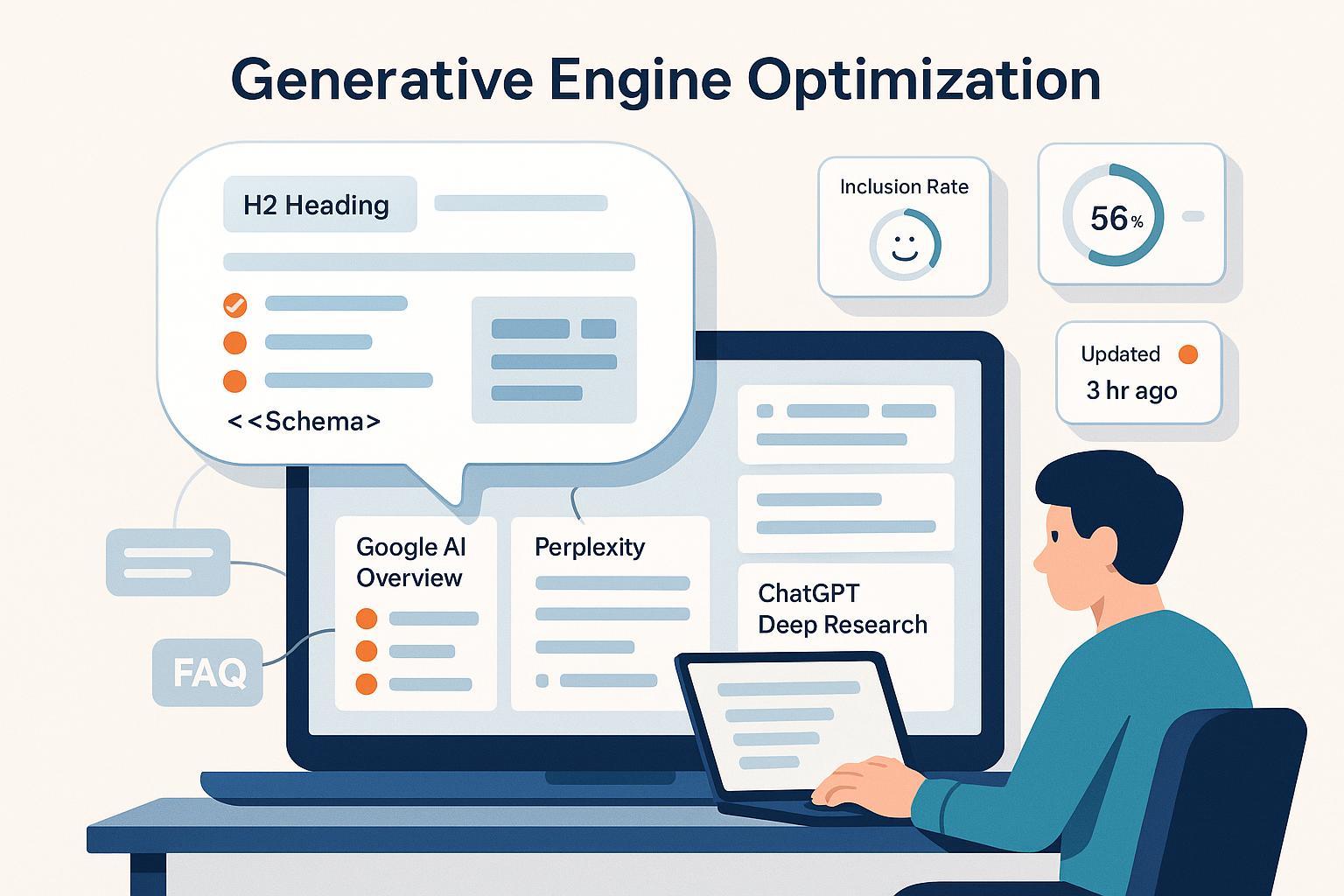

How AI answers are assembled (and why it matters for your content)

Google’s AI features documentation explains that AI Overviews and AI Mode may use a “query fan-out” technique, issuing multiple related searches across subtopics to gather supporting sources and display a wider set of links. Your content can therefore be pulled in through an adjacent subtopic, not only the exact query. See AI features and your website (Google Search Central, 2025).

Two implications for optimization:

- Be the best short answer for the core question, and the best explainer for adjacent sub-questions.

- Make it effortless for models to cite you: clearly scoped sections, crisp definitions, and obvious evidence.

Google also stated in 2024 that AI Overviews are designed to help users “get to the gist” and then explore links, and claimed that they send clicks to a more diverse set of supporting sites than earlier designs; see the announcement in Generative AI in Search (Google, 2024). Treat AI panels as a distribution channel that rewards scannable, verifiable nuggets backed by credible sources.

What wins across engines in 2025: patterns and page design

- Answer-first structure: Lead with a one-paragraph definition or list that could be quoted verbatim.

- Skimmable nuggets: Bulleted steps, short tables, and tight subheadings aligned to how AI summaries are formatted.

- Clear evidence: Attribute key facts to primary sources, using short, descriptive anchor text and the source’s publication year.

- Entity clarity: Real author bios, Organization/Person schema, and consistent “sameAs” links.

- Freshness signals: Visible “last updated,” dated examples, and change logs for living docs.

- Multimedia: Support key points with images (with descriptive alt text) and short videos (with timestamps).

Engine-by-engine playbooks

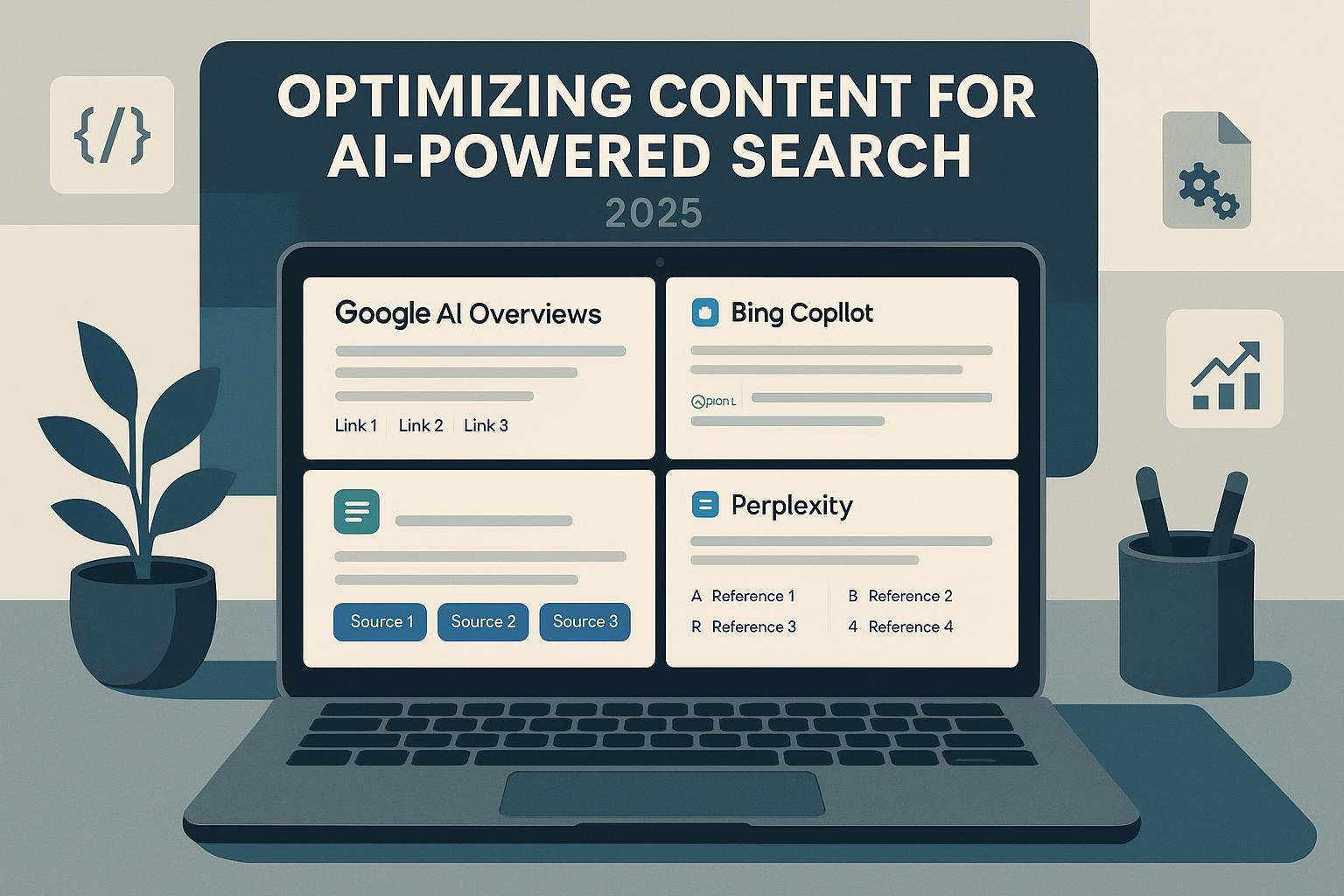

Google AI Overviews and AI Mode

What we know from Google’s public docs (2025):

- AI Overviews/AI Mode can expand queries and surface a broader set of links; see AI features and your website (Google Search Central, 2025).

- AI Overviews appear only when Google’s systems judge them additive to classic Search results, so they won’t trigger on every query.

- There is no special “AIO opt-out.” Standard controls (robots.txt, noindex, and snippet controls such as nosnippet and max-snippet) still apply to AI features, but none of them is AIO-specific in Google’s documentation.

Winning patterns:

- Create definitional blocks: 40–90 words, precise phrasing, and a single-sentence summary that could be reused.

- Cover sub-questions your audience asks (pricing, steps, pros/cons, risks). Because query fan-out retrieves sources for related sub-queries, comprehensive topical hubs have more chances to be cited.

- Use JSON-LD structured data on the page (Article, Organization/Person) and keep authorship and dates explicit. Google reiterates the approach in Intro to structured data (Search Central, 2025).
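To audit pages against these patterns, a small script can flag opening definitions outside the 40–90 word range and list sub-topics that no heading covers. This is a minimal sketch, not a Google-documented check: the function name `audit_answer_first`, the keyword-matching rule, and the sample sub-topics are assumptions for illustration, and real headings would come from your own HTML parser or CMS export.

```python
import re

# Word range taken from this guide's definitional-block advice (40–90 words).
MIN_WORDS, MAX_WORDS = 40, 90


def audit_answer_first(first_paragraph, headings, sub_topics):
    """Check the opening definition's length and which sub-topics lack a heading.

    A sub-topic counts as covered when every one of its keywords appears
    (case-insensitively, as a substring) in at least one heading. This is a
    deliberately naive rule; swap in your own matching logic as needed.
    """
    words = len(first_paragraph.split())
    lowered = [h.lower() for h in headings]
    missing = []
    for topic in sub_topics:
        keywords = re.findall(r"[a-z0-9]+", topic.lower())
        if not any(all(k in h for k in keywords) for h in lowered):
            missing.append(topic)
    return {
        "definition_words": words,
        "definition_ok": MIN_WORDS <= words <= MAX_WORDS,
        "missing_sub_topics": missing,
    }


if __name__ == "__main__":
    # Hypothetical page data for illustration.
    intro = " ".join(["word"] * 55)
    headings = ["What is an AI Overview?", "Pricing and plans", "Pros and cons"]
    print(audit_answer_first(intro, headings, ["pricing", "pros cons", "risks"]))
```

Substring matching is crude (for example, "cons" also matches "consider"), so treat the output as a prompt for editorial review rather than a verdict.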

Caveats:

- FAQ/HowTo visibility changed: In August 2023, Google limited FAQ rich results to well-known, authoritative government and health sites and stopped showing HowTo rich results on mobile (HowTo rich results were later deprecated entirely); see Changes to HowTo and FAQ rich results (Google Search Central, 2023). Use these types when they match on-page content and user needs, not just to chase snippets.

Bing Copilot Search

Microsoft’s 2025 announcement emphasizes transparent citation: Copilot shows every link used to craft the answer and inlines citations within sentences. See Introducing Copilot Search in Bing (Microsoft Bing Blog, 2025).

Optimization moves:

- Provide compact, verifiable facts and definitions. Copilot often highlights phrase-level citations.

- Ensure your sources are fresh and well-attributed; stale or weakly sourced statements are less likely to be trusted.

- Use schema and authorship; align your wording to the question intent and adjacent sub-intents.

OpenAI ChatGPT/Search (and OAI-SearchBot)

Controls and behavior to know:

- OpenAI documents multiple crawlers. GPTBot is primarily for model training; you can allow or block it via robots. See GPTBot (OpenAI, 2025).

- OAI-SearchBot is used for ChatGPT Search indexing and citations; you can allow it even if you block training. See Overview of OpenAI crawlers (OpenAI, 2025).

Optimization moves:

- Publish concise, cite-ready explainers and comparisons your audience searches for inside ChatGPT.

- Add source-backed stats with dates; align headings to common prompt structures (“pros and cons,” “steps,” “compare X vs Y”).

Perplexity

Perplexity’s product emphasizes live retrieval with inline citations to sources. The company states that it honors robots.txt, while distinguishing user-driven agents from automated crawlers; see Agents or bots: making sense of AI on the open web (Perplexity, 2024/2025). At the same time, some 2024–2025 reports allege stealth crawling through undeclared or obfuscated user agents. For example, Cloudflare’s report describes such evasion techniques; see Cloudflare’s analysis of undeclared crawlers (Cloudflare, 2025).

Practical approach:

- Use robots.txt to state your policy, and monitor server logs to see which agents actually request your pages. Where enforcement matters, add CDN/WAF rules and rate limits.

- Publish scannable answers with strong citations. Perplexity often rewards clean, high-precision summaries.
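Monitoring logs can start with counting requests per declared AI user agent. The sketch below assumes Combined Log Format lines (user agent as the last quoted field) and matches on agent names from this guide; the helper `count_ai_hits` and the sample lines are hypothetical. User-agent strings can be spoofed, and undeclared crawlers will not show up here at all, so treat the counts as a lower bound and verify important agents against the operators’ published IP ranges where available.

```python
import re
from collections import Counter

# Declared user-agent names from this guide. Google-Extended is only a
# robots.txt token (Google crawls with its existing user agents), so it
# never appears in access logs.
AI_AGENTS = ["GPTBot", "OAI-SearchBot", "ClaudeBot", "PerplexityBot", "CCBot", "bingbot"]

# Combined Log Format: the request is the first quoted field and the
# user agent is the last quoted field on the line.
REQUEST_RE = re.compile(r'"[A-Z]+ (\S+)[^"]*"')
UA_RE = re.compile(r'"([^"]*)"\s*$')


def count_ai_hits(lines):
    """Count hits per declared AI agent and per (agent, path) pair."""
    per_agent, per_path = Counter(), Counter()
    for line in lines:
        ua_match = UA_RE.search(line)
        if not ua_match:
            continue
        user_agent = ua_match.group(1).lower()
        for agent in AI_AGENTS:
            if agent.lower() in user_agent:
                per_agent[agent] += 1
                request = REQUEST_RE.search(line)
                if request:
                    per_path[(agent, request.group(1))] += 1
                break  # attribute each line to the first matching agent only
    return per_agent, per_path
```

In practice you would pass an open log file, for example `count_ai_hits(open("access.log"))`, and compare the per-path counts against the paths your robots.txt disallows for each agent.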

Structured data and entity signaling that actually help

Google recommends JSON-LD for ease and consistency. See the guidance in Intro to structured data (Search Central, 2025). Prioritize:

- Organization and Person entities with “sameAs” to authoritative profiles.

- Article/BlogPosting with author, datePublished, dateModified.

- FAQPage/HowTo when genuinely present on the page (remember 2023 visibility changes noted above).

About 2025 deprecations: Multiple industry outlets reported that Google dropped or simplified several rich result types in June 2025. Treat these reports as tentative until you confirm the change in Google’s Search Central documentation. See coverage in Google deprecates seven structured data types (PPC.Land, 2025) and Google drops reporting on several structured data types (Search Engine Land, 2025).

Example JSON-LD snippets

Organization

{
  "@context": "https://schema.org",
  "@type": "Organization",
  "name": "ExampleCo",
  "url": "https://www.example.com/",
  "logo": "https://www.example.com/logo.png",
  "sameAs": [
    "https://en.wikipedia.org/wiki/ExampleCo",
    "https://www.wikidata.org/wiki/Q42",
    "https://www.linkedin.com/company/exampleco/"
  ]
}

Person (author)

{
  "@context": "https://schema.org",
  "@type": "Person",
  "name": "Jane Smith",
  "url": "https://www.example.com/authors/jane-smith",
  "jobTitle": "SEO Director",
  "sameAs": [
    "https://www.linkedin.com/in/janesmith/",
    "https://scholar.google.com/citations?user=XXXX"
  ]
}

Article/BlogPosting

{
  "@context": "https://schema.org",
  "@type": "BlogPosting",
  "headline": "How to Optimize for AI Overviews in 2025",
  "datePublished": "2025-10-12",
  "dateModified": "2025-10-12",
  "author": {
    "@type": "Person",
    "name": "Jane Smith",
    "url": "https://www.example.com/authors/jane-smith"
  },
  "publisher": {"@type": "Organization", "name": "ExampleCo"}
}

FAQPage

{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What is an AI Overview?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "AI Overviews are AI-generated summaries in Google Search that provide links to supporting sources."
      }
    }
  ]
}
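On a web page, each JSON-LD snippet above typically goes inside a `<script type="application/ld+json">` element. The following sketch serializes a Python dict into that element and checks for a handful of Article properties. The `REQUIRED_ARTICLE_KEYS` tuple and the helper names are assumptions reflecting this guide’s emphasis on authorship and dates, not Google’s complete requirements, so still validate the output with Google’s own tools.

```python
import json

# Properties this sketch checks on Article/BlogPosting markup. This mirrors the
# guide's focus on authorship and dates; it is not Google's full property list.
REQUIRED_ARTICLE_KEYS = ("headline", "datePublished", "dateModified", "author", "publisher")


def missing_article_keys(data):
    """Return the checked properties that are absent from a JSON-LD dict."""
    return [key for key in REQUIRED_ARTICLE_KEYS if key not in data]


def to_script_tag(data):
    """Serialize a JSON-LD dict into a <script type="application/ld+json"> element."""
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a string such as "</script>" cannot close the element early;
    # "<\/" is still valid JSON and decodes back to "</".
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    post = {
        "@context": "https://schema.org",
        "@type": "BlogPosting",
        "headline": "How to Optimize for AI Overviews in 2025",
        "datePublished": "2025-10-12",
        "dateModified": "2025-10-12",
        "author": {"@type": "Person", "name": "Jane Smith"},
        "publisher": {"@type": "Organization", "name": "ExampleCo"},
    }
    print(missing_article_keys(post))
    print(to_script_tag(post))
```

Generating markup from the same data that renders the visible byline and dates helps keep the two in sync, which is the main point of the “match visible content” advice.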

E-E-A-T and helpful content, applied

Google’s guidance continues to reward content that demonstrates experience, expertise, authoritativeness, and trustworthiness. Revisit the 2023–2025 advice in Creating helpful, reliable, people-first content (Google Search Central, 2025) and the policy note in Google Search and AI content (Google, 2023). In practice:

- Make the author and editorial process visible. Link author bios and disclose automation if used.

- Cite original data and include dates and sample sizes when relevant.

- Add “last updated” and a short “what changed” note for living articles.

- Build topic hubs with internal links that guide beginners to deeper pages.
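“Last updated” notes are easier to maintain when stale pages surface automatically. This sketch assumes you can export each URL’s `dateModified` value (for example, from your JSON-LD or CMS) and flags pages older than a threshold; the 180-day default and the `stale_pages` name are arbitrary choices for illustration.

```python
from datetime import date


def stale_pages(modified_by_url, today, max_age_days=180):
    """Return (url, age_in_days) for pages last modified more than max_age_days ago.

    Values are ISO 8601 dates or datetimes; only the YYYY-MM-DD part is used.
    Results are sorted with the oldest page first.
    """
    stale = []
    for url, modified in modified_by_url.items():
        age = (today - date.fromisoformat(modified[:10])).days
        if age > max_age_days:
            stale.append((url, age))
    return sorted(stale, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical export of dateModified values.
    pages = {
        "/guides/ai-overviews": "2025-10-12",
        "/guides/robots-policy": "2025-01-01",
        "/blog/schema-basics": "2024-06-01T09:00:00+00:00",
    }
    for url, age in stale_pages(pages, today=date(2025, 10, 12)):
        print(f"{url}: last updated {age} days ago")
```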

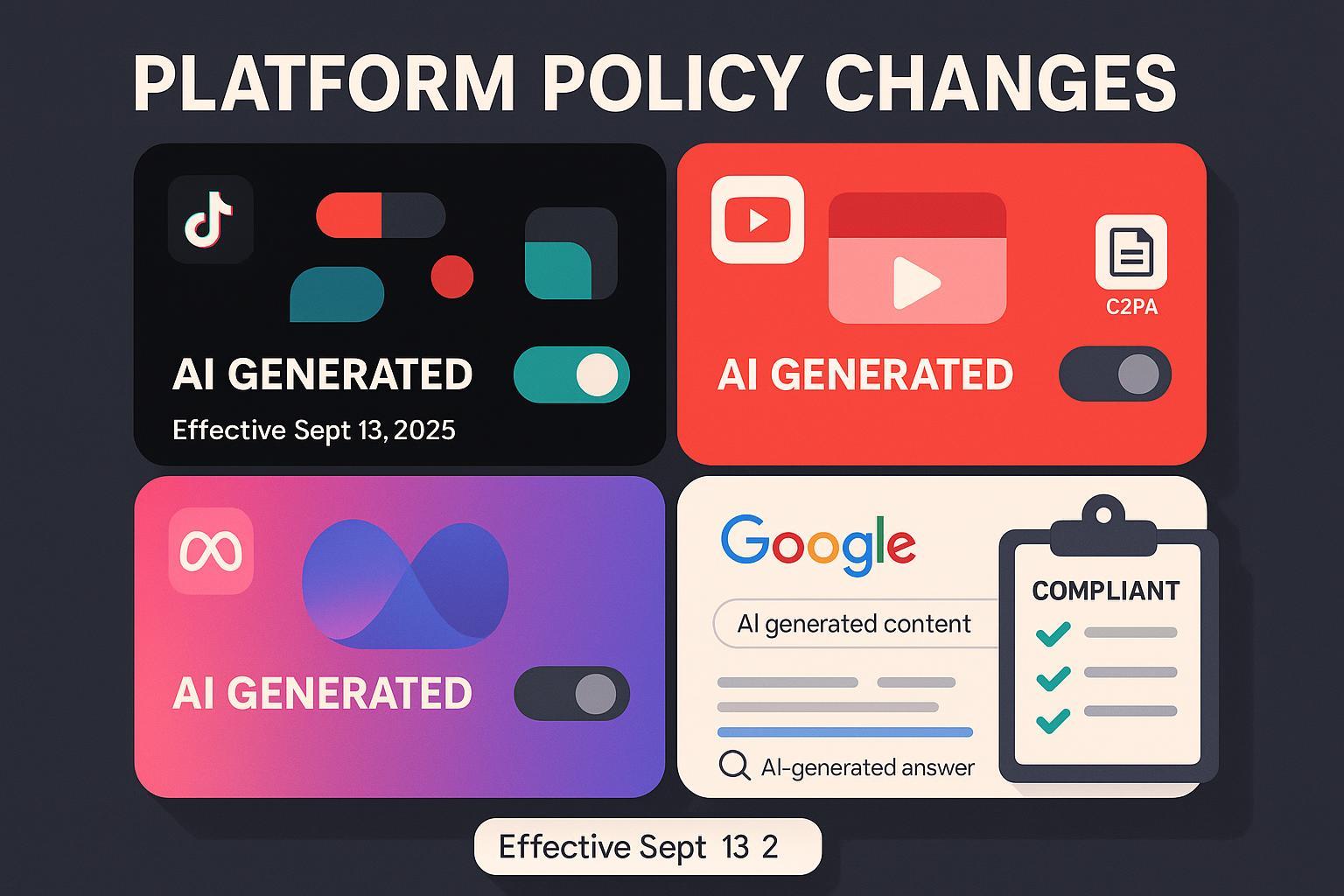

Robots, crawler governance, and llms.txt

Your goal is to create a policy that allows citation-focused crawlers while limiting training access (if that’s your position), and to enforce it when necessary.

Key tokens and bots:

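- Google-Extended is a robots.txt product token, not a separate crawler; it governs whether content Google crawls may be used for Gemini training and grounding. Blocking it does not affect inclusion or ranking in Google Search. See Google common crawlers (Google-Extended) (Google, 2025).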

- OpenAI crawlers include GPTBot (training) and OAI-SearchBot (search/citation). See Overview of OpenAI crawlers (OpenAI, 2025) and GPTBot (OpenAI, 2025).

- ClaudeBot (Anthropic) respects robots.txt per Does Anthropic crawl the web and how to block (Anthropic Support, 2025).

- CCBot (Common Crawl) supports opt-outs; see opt-out protocols (Common Crawl, 2024/2025).

Example robots.txt patterns (tailor to policy)

Baseline: allow search/citation, block training

User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: Bingbot
Allow: /

Strict: block selected AI crawlers

User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: PerplexityBot
Disallow: /

User-agent: CCBot
Disallow: /

Notes:

- robots.txt compliance is voluntary. For non-compliant agents, enforce your policy via CDN/WAF rules, bot management, and rate limits. If you’re concerned about Perplexity compliance, combine robots.txt with IP/ASN-based rules.

- Keep a change log and test regularly; misconfigured blocks can suppress desired citations.
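One way to test a policy before deploying it is Python’s standard `urllib.robotparser`, sketched below with the baseline policy from this section. The expectation dictionary and the `check_policy` helper are hypothetical. Note that this parser ends a group at a blank line, so a stray blank line between `User-agent` and `Disallow` silently drops the rule in its interpretation; other parsers may treat blank lines, wildcards, and rule precedence differently.

```python
from urllib.robotparser import RobotFileParser

# The baseline policy from this guide: block training, allow search/citation.
BASELINE_ROBOTS = """\
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: Bingbot
Allow: /
"""


def check_policy(robots_txt, expectations, url="https://www.example.com/guides/ai-overviews"):
    """Map each agent to True if urllib.robotparser's verdict matches the expectation."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) == allowed
            for agent, allowed in expectations.items()}


if __name__ == "__main__":
    expected = {"GPTBot": False, "Google-Extended": False, "OAI-SearchBot": True, "Bingbot": True}
    print(check_policy(BASELINE_ROBOTS, expected))
    # A blank line between User-agent and Disallow ends the group for this
    # parser, so the rule is dropped and GPTBot appears allowed.
    print(check_policy("User-agent: GPTBot\n\nDisallow: /\n", {"GPTBot": False}))
```

Running a check like this in CI whenever robots.txt changes gives the change log a concrete pass/fail record.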

Emerging files and protocols

- llms.txt is a community proposal for providing LLM-friendly indexes of your content. It is not a formal standard, but it may help discovery. See the repo at llms.txt (2024–2025).

- The Model Context Protocol (MCP) is a developing open standard for securely connecting AI apps to external data and tools. It is relevant if you expose data through controlled plugins or APIs. See the MCP specification (revision 2025-03-26; Anthropic-led, 2025).
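If you pilot llms.txt, the community proposal describes a Markdown file with an H1 title, a blockquote summary, and H2 sections containing link lists. The generator below follows that outline as a sketch; the section names, URLs, and `build_llms_txt` helper are placeholders, so check the current proposal before publishing.

```python
def build_llms_txt(title, summary, sections):
    """Render llms.txt text: H1 title, blockquote summary, then H2 link sections.

    `sections` maps a section heading to a list of (link_title, url, note)
    tuples; an empty note omits the ": note" suffix.
    """
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for link_title, url, note in links:
            entry = f"- [{link_title}]({url})"
            if note:
                entry += f": {note}"
            lines.append(entry)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    # Hypothetical site structure; replace with your most authoritative pages.
    print(build_llms_txt(
        "ExampleCo",
        "Practitioner guides on AI search optimization.",
        {
            "Guides": [
                ("AI Overviews playbook", "https://www.example.com/guides/ai-overviews", "Answer-first patterns"),
                ("Crawler policy", "https://www.example.com/guides/robots-policy", ""),
            ],
        },
    ))
```

The output would be served as plain text at /llms.txt.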

Content patterns AI engines reward

- Definitions: 1–2 sentences in plain language, then a short elaboration.

- Step-by-step: 5–9 steps with verbs up front (“Audit… Map… Implement… Validate…”).

- Comparisons: Short tables with criteria and a one-line verdict per row.

- Evidence: Inline, descriptive anchors to primary sources. Avoid generic “here” links.

- Visuals: Annotated screenshots and diagrams; alt text summarizing the insight.

Measurement: tracking inclusion, citations, and sentiment

What to log per engine/query:

- Inclusion: Is your page cited/included? If yes, where and how often?

- Prominence: Panel position, inline vs. source list, and whether the snippet quotes your phrasing.

- Sentiment/stance: Positive, neutral, or negative framing around your brand or product.

- Freshness: Date seen; delta vs. last audit.

- Impact signals: Correlated traffic changes or branded query volume shifts.
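These fields map naturally onto one record per engine and query. The sketch below aggregates such records into an inclusion rate, a prominence score, and a negative-sentiment share per engine. The `Observation` fields, the engine labels, and the prominence weights are assumptions to adapt, not an industry standard.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical weights: inline citations count more than a source-list mention.
PROMINENCE_WEIGHTS = {"inline": 1.0, "source_list": 0.5, "none": 0.0}


@dataclass
class Observation:
    engine: str                  # e.g. "google_aio", "copilot", "chatgpt", "perplexity"
    query: str
    date_seen: str               # ISO date of the check
    cited: bool                  # was our page cited or included?
    placement: str = "none"      # "inline", "source_list", or "none"
    quotes_our_phrasing: bool = False
    sentiment: str = "neutral"   # "positive", "neutral", or "negative"


def summarize(observations):
    """Per engine: query count, inclusion rate, mean prominence, negative share."""
    by_engine = defaultdict(list)
    for obs in observations:
        by_engine[obs.engine].append(obs)
    summary = {}
    for engine, items in by_engine.items():
        n = len(items)
        summary[engine] = {
            "queries": n,
            "inclusion_rate": sum(o.cited for o in items) / n,
            "prominence": sum(PROMINENCE_WEIGHTS[o.placement] for o in items) / n,
            "negative_share": sum(o.sentiment == "negative" for o in items) / n,
        }
    return summary
```

Keeping raw observations and computing summaries on demand lets you change the weights later without re-running audits.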

Manual baseline workflow:

- Build a query set covering core topics and intents.

- On a cadence (weekly/biweekly), run checks in Google (AIO), Bing Copilot, ChatGPT/Search, and Perplexity.

- Capture screenshots, copy response text, and record sources cited. Annotate any sentiment shifts.

- Store in a shared sheet or a lightweight database; add GA4 annotations for notable changes.
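For the “lightweight database” option, Python’s built-in `sqlite3` module is enough to keep each audit run queryable. This sketch uses a hypothetical single-table schema and an in-memory database; the function names are illustrative, and passing a file path to `open_db` would persist results between runs.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS audits (
    audit_date TEXT NOT NULL,     -- ISO date of the audit run
    engine     TEXT NOT NULL,     -- e.g. 'google_aio', 'copilot'
    query      TEXT NOT NULL,
    cited      INTEGER NOT NULL,  -- 1 if our page was cited, else 0
    sources    TEXT,              -- cited URLs, newline-separated
    notes      TEXT               -- sentiment shifts, screenshot paths, etc.
)
"""


def open_db(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def record(conn, audit_date, engine, query, cited, sources=(), notes=""):
    conn.execute(
        "INSERT INTO audits VALUES (?, ?, ?, ?, ?, ?)",
        (audit_date, engine, query, int(cited), "\n".join(sources), notes),
    )
    conn.commit()


def inclusion_by_run(conn, engine):
    """Return [(audit_date, inclusion_rate), ...] for one engine, oldest first."""
    return conn.execute(
        "SELECT audit_date, AVG(cited) FROM audits "
        "WHERE engine = ? GROUP BY audit_date ORDER BY audit_date",
        (engine,),
    ).fetchall()


def latest_delta(conn, engine):
    """Change in inclusion rate between the two most recent runs (None if < 2 runs)."""
    runs = inclusion_by_run(conn, engine)
    if len(runs) < 2:
        return None
    return runs[-1][1] - runs[-2][1]
```

The per-run deltas give you a natural trigger for the GA4 annotations mentioned above.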

Tooling options (neutral overview):

- Multi-engine trackers that monitor AI panels and sentiment. Some teams also leverage vendor suites or custom scrapers. Validate features and data policies independently.

- Context-attribution research such as MIT’s ContextCite (2024), which traces which parts of a source context a model relied on for a generated statement, can inform how you validate AI citations; see MIT News on ContextCite (MIT, 2024).

Practical example (neutral, reproducible)

- Set up a dashboard to monitor multi-engine visibility and tone. You can log citation frequency per engine and label sentiment at the answer level.

- Using Geneo for this workflow, you might configure a brand and a competitor, track queries weekly across AI Overviews, Copilot, ChatGPT/Search, and Perplexity, and export a CSV of citations with sentiment labels to augment your reporting. Disclosure: Geneo is our product.

- Balance this with alternatives and manual methods: for example, vendor-neutral tools, Looker Studio dashboards pulling from a sheet, or custom scrapers, depending on your governance needs.

Extended internal reading on real-world setups:

- See cross-industry examples of “multi-engine visibility and sentiment tracking” in this roundup: case studies on AI search strategy.

- For a neutral landscape review of “AI brand monitoring tools,” compare approaches in this practical write-up: tooling comparison.

If you’re defining program goals for stakeholders, describe outcomes as: “increase inclusion rate for priority topics,” “shift sentiment to neutral/positive,” and “reduce time-to-detect misinformation.”

Operations: cadence, governance, and roles

- Cadence: Quarterly technical reviews (robots, sitemaps, structured data); monthly content updates on tier-1 pages; weekly AI panel audits for priority topics.

- Roles:

  - SEO/Content: owns page patterns, schema, interlinking, and freshness.

  - PR/Comms: addresses misinformation and sentiment remediation.

  - Engineering: owns robots/CDN/WAF enforcement and data exports.

  - Analytics: owns dashboards and causal analysis.

- Playbooks: Define “misinformation response” SOPs with timelines, source outreach, and factual corrections.

Staying resilient through algorithm changes

- Maintain a change log and A/B test copy length, lists vs. paragraphs, and schema variants.

- Track industry news and your own results; for context on Google changes, see this commentary on recent algorithm updates.
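When an A/B test compares how often two page variants are cited across a query set, a two-proportion z-test gives a rough sense of whether the gap exceeds noise. This is a sketch under strong assumptions: it treats each query check as an independent trial, which nondeterministic and changing AI answers may violate, so read the p-value as a screening signal rather than proof. The function name and sample counts are hypothetical.

```python
from math import erf, sqrt


def two_proportion_z(cited_a, n_a, cited_b, n_b):
    """Two-sided two-proportion z-test comparing citation rates of variants A and B.

    Returns (z, p_value). Assumes independent trials and reasonably large samples
    (a common rule of thumb is at least ~10 cited and ~10 uncited checks per arm).
    """
    p_a, p_b = cited_a / n_a, cited_b / n_b
    pooled = (cited_a + cited_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # both arms all cited or all uncited: no evidence of a difference
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2))).
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: variant A cited in 12 of 60 checks, variant B in 24 of 60.
    z, p = two_proportion_z(12, 60, 24, 60)
    print(f"z = {z:.2f}, p = {p:.3f}")
```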

Advanced and future-proofing

- Prompt testing: Periodically test how engines answer your queries. Where do they get facts wrong? What phrasing do they quote? Incorporate the findings into your on-page summaries.

- llms.txt: Consider adding a /llms.txt that indexes your most authoritative resources to help compliant agents discover the right pages; see the community spec at llms.txt (2024–2025).

- Model Context Protocol: If you expose data or tools to AI apps, MCP can standardize secure connections and permissions; see the MCP specification (revision 2025-03-26).

30/60/90-day AI search optimization plan

30 days

- Audit top 50 URLs for answer-first sections, authorship, dates, and outbound citations.

- Implement Organization/Person and Article schema; validate with Google’s Rich Results Test, and use the Schema Markup Validator for types that have no rich result.

- Configure robots.txt to reflect your policy on training vs. search/citation bots; deploy WAF rules if needed.

- Establish a 50–100 query monitoring list and run the first baseline audit.

60 days

- Build or enhance 6–10 topic hubs with internal links to prerequisites and deep dives.

- Add FAQ/HowTo sections where genuinely useful; ensure on-page content mirrors the markup.

- Launch an AI panel visibility dashboard with weekly snapshots and sentiment fields.

- Update or consolidate stale pages; add “last updated” and change logs.

90 days

- Expand measurement to include prominence scoring and answer-level sentiment trend lines.

- Run prompt tests quarterly and publish learnings internally.

- Pilot llms.txt and evaluate utility.

- Present a leadership report tying inclusion/sentiment shifts to content releases and policy changes.

FAQs and common pitfalls

- Is there an “opt-out” of Google AI Overviews? Not specifically per Google’s docs. You can use standard indexing/snippet controls, but there’s no AIO-specific switch documented in AI features and your website (2025).

- Should I mark up everything with schema? No. Use types that match visible content and user intent. Google may ignore markup that doesn’t match the page, and misleading markup can lead to a structured data manual action. Remember the 2023 FAQ/HowTo visibility change (Google, 2023).

- Can I block training while allowing citations? Often, yes: for OpenAI you can block GPTBot and allow OAI-SearchBot; see OpenAI crawlers overview (2025). Google-Extended can be disallowed without affecting ranking; see Google common crawlers (2025).

- What about Perplexity compliance? Use robots.txt plus CDN/WAF enforcement as needed; Cloudflare’s 2025 post documents concerns (see Cloudflare’s analysis (2025)).

- How do I judge success? Track inclusion rate, prominence, and sentiment over time per engine. Tie movements to content releases and policy changes, not just to seasonality.

Closing

If you apply answer-first formatting, clean entity/schema signals, thoughtful crawler governance, and consistent measurement, you’ll improve your odds of earning citations across AI Overviews, Copilot, ChatGPT/Search, and Perplexity, while strengthening classic SEO. If you want a turnkey way to operationalize this playbook, Geneo can help you monitor multi-engine AI visibility and sentiment alongside your manual audits.