How to Train Your Team on GEO Principles: A Practical Guide

Learn actionable steps to train your team on GEO principles for AI visibility, citations, and content performance. Complete training plan inside.

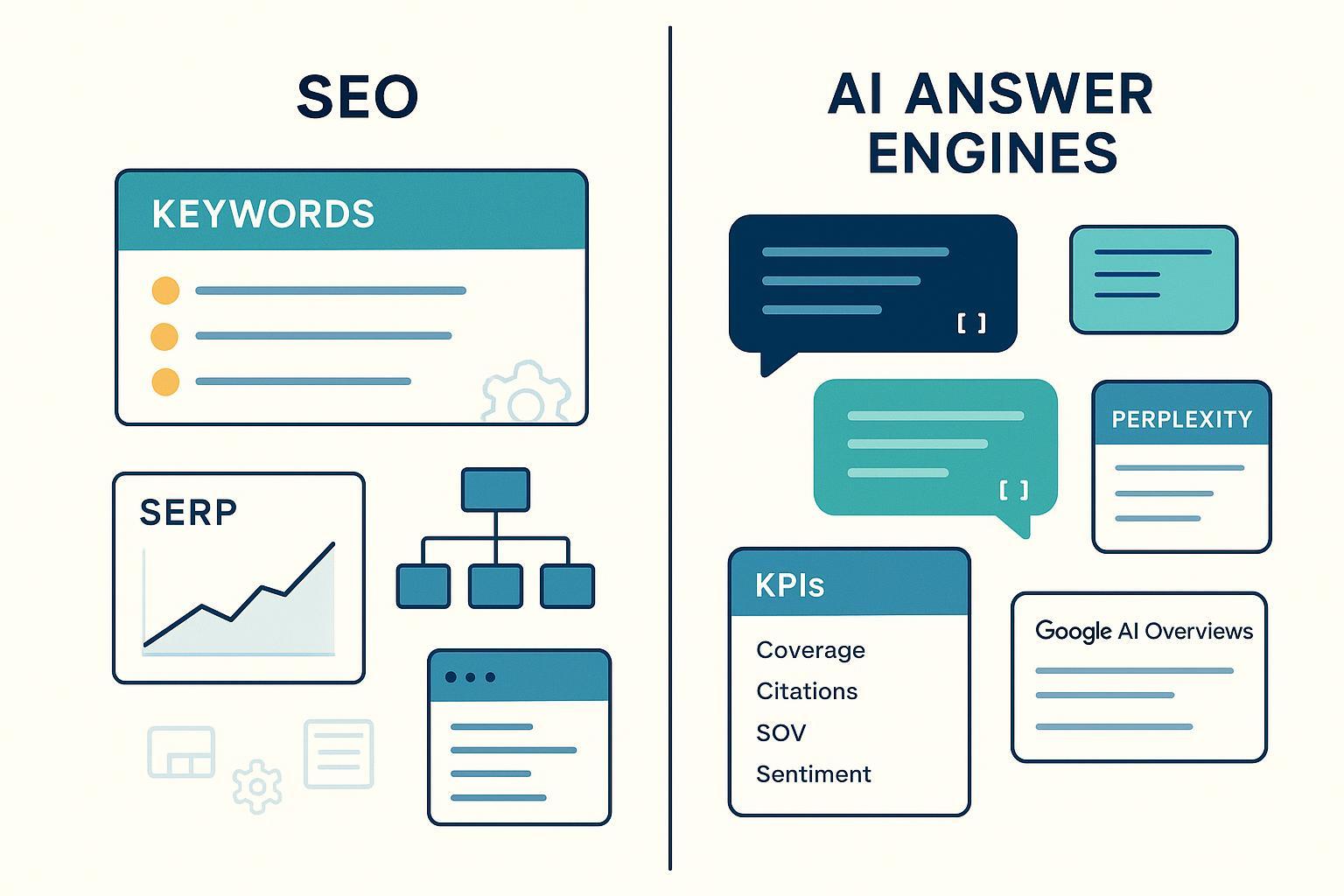

If your content earns links in classic SERPs but rarely appears inside AI answers, it’s time to train your team for GEO (Generative Engine Optimization). GEO focuses on getting your pages understood, cited, and surfaced within AI-generated responses across ChatGPT, Perplexity, and Google’s AI features. As Search Engine Land’s 2024 explainer puts it, GEO is about “boosting visibility in AI-driven search engines.” It is not local or geographic SEO, and it changes how teams plan content and measure its performance.

GEO in one page: what it is and why it matters

GEO is the practice of structuring and substantiating content so that AI systems can easily extract, attribute, and link to it. It matters because AI interfaces increasingly present synthesized answers with citations rather than just ten blue links. OpenAI’s 2024 announcement of ChatGPT Search shows this direction clearly: the product provides timely answers with a dedicated Sources view and inline links. Perplexity’s interface similarly foregrounds numbered citations to source pages, as described in Perplexity’s Help Center (2025). And Google documents how sites can support its AI features with helpful content and structured data; see Google Search Central’s AI features guidance (2025).

If you’re new to GEO, start with the strategic concept of AI visibility (your share of presence inside AI answers) and why it drives brand discovery. For a deeper primer, read our guide on AI visibility and brand exposure in AI search.

The core GEO principles your team must master

At a glance, GEO turns abstract “helpfulness” into concrete editorial and technical behaviors. Train your team on these five pillars.

| GEO principle | What good looks like in practice |
|---|---|
| Clarity & structure | Direct-answer blocks, scannable H2/H3s, concise lists and tables, on-page FAQ that mirrors real questions. |
| Authority & E-E-A-T | Expert bylines, primary/official sources, quotes with context, visible update logs and dates. |
| Semantic completeness | Cover entities and related sub-intents; define terms; include synonyms users actually say; add internal links to cornerstone pieces. |
| Citation-worthiness | Verifiable facts, stats, and definitions written in crisp, quotable sentences; canonical datasets or methodologies when possible. |
| Technical hygiene | JSON-LD for Article/FAQ/HowTo/Product where appropriate; fast pages, mobile-first UX, clean canonicalization and sitemaps. |

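These practices align with industry guidance that emphasizes structured formatting, trustworthy sourcing, and schema. For example, Google’s structured data documentation explains how to implement Article and FAQPage markup and validate it in the Rich Results Test. Note that since 2023 Google shows FAQ rich results only for a limited set of authoritative government and health sites and no longer shows HowTo rich results, so treat that markup as a way to make page structure explicit rather than as a route to rich results.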
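
To make the schema work concrete, here is a minimal Python sketch that builds Article and FAQPage JSON-LD as plain dictionaries and wraps each one in the `<script type="application/ld+json">` tag that goes in the page HTML. The helper names, the author name, and the dates are hypothetical placeholders; validate whatever you generate with Google’s tools rather than trusting the sketch.

```python
import json


def article_jsonld(headline: str, author: str, published: str, modified: str) -> dict:
    """Minimal schema.org Article object (hypothetical helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,  # ISO 8601 date
        "dateModified": modified,    # keep in sync with the visible updated-on note
    }


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """schema.org FAQPage built from (question, answer) pairs that also appear on the page."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def script_tag(data: dict) -> str:
    """Serialize a JSON-LD object inside the script tag that goes in the page HTML."""
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"


# Placeholder values for illustration only.
article = article_jsonld("How to Train Your Team on GEO Principles", "Jane Doe", "2025-01-15", "2025-03-01")
faq = faq_jsonld([
    ("What is GEO?", "Generative Engine Optimization: structuring content so AI systems can extract and cite it."),
])
print(script_tag(article))
print(script_tag(faq))
```

Building the markup from the same source as the visible FAQ keeps the questions and answers in the JSON-LD identical to what readers see on the page.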

Role-based learning objectives (so everyone knows what “good” means)

Give each function a clear outcome:

- SEO lead: Defines prompt sets, maps conversational intents and entities, specifies schema in briefs, and owns the GEO QA gates.

- Content strategist or writer: Crafts direct answers, adds 2–3 primary sources per key claim, keeps a citation log, and writes crisp, quotable lines.

- PR/Comms: Packages expert quotes and proprietary stats on media-friendly pages.

- Web/technical: Implements and validates JSON-LD (Article, FAQPage, HowTo, Product) and maintains performance, mobile UX, sitemaps, and canonicalization.

- Legal/compliance: Establishes claim review, disclosure rules, and AI transparency notes for regulated topics.

The 30–60–90 day training plan

Here’s a practical cadence you can run without vendor lock-in. Think of it like strength training for content operations: short, repeatable drills that compound.

Days 1–30 (Foundations)

- GEO 101 and KPI orientation: Contrast GEO with traditional SEO and local SEO. Define AI visibility, citation rate, attribution, and “chunk” extraction (the specific lines an AI system lifts from a page). Lab: Reformat a target page with a one-paragraph direct answer, clean H2/H3s, and a 4–6 question FAQ.

- Sourcing & E-E-A-T: Add primary sources (official docs, original research) to your top three pages; include author bios and an updated-on note. Lab: Create a shared citation log for each page.

- Schema basics: Implement JSON-LD for Article and FAQPage; validate with the Rich Results Test. Lab: Fix any errors, then request re-indexing through the URL Inspection tool in Search Console.

- Prompt-alignment test I: Run five real questions per page in ChatGPT Search and Perplexity; note which of your sentences each platform quotes or paraphrases, and log the sources it selects.
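
For the prompt-alignment lab, one rough way to flag which of your sentences an AI answer quoted or closely paraphrased is a fuzzy string comparison. This sketch assumes you have pasted the page copy and the AI answer as plain text; it uses Python’s standard `difflib.SequenceMatcher` with a naive regex sentence splitter, and the 0.8 similarity threshold is an arbitrary starting point, not a standard. Loose paraphrases will slip past it, so treat the output as a first pass for human review.

```python
import re
from difflib import SequenceMatcher


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def extracted_sentences(page_text: str, ai_answer: str, threshold: float = 0.8) -> list[tuple[str, float]]:
    """Return page sentences whose best match in the AI answer meets the similarity threshold."""
    answer_sentences = [s.lower() for s in split_sentences(ai_answer)]
    matches = []
    for sentence in split_sentences(page_text):
        # ratio() is 1.0 for identical strings and falls toward 0.0 as they diverge.
        best = max(
            (SequenceMatcher(None, sentence.lower(), other).ratio() for other in answer_sentences),
            default=0.0,
        )
        if best >= threshold:
            matches.append((sentence, round(best, 2)))
    return matches


# Placeholder texts; paste your real page copy and the AI answer here.
page = (
    "GEO is the practice of structuring content so AI systems can extract and cite it. "
    "Our team publishes new guides every week."
)
answer = (
    "According to one guide, GEO is the practice of structuring content so AI systems can extract and cite it. "
    "Results vary by platform."
)
for sentence, score in extracted_sentences(page, answer):
    print(f"{score:.2f}  {sentence}")
```

Comparing sentence by sentence keeps the report readable: each hit is a line you can paste straight into the citation log.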

Days 31–60 (Application)

- Semantic completeness: Expand briefs to cover related sub-intents and synonyms; add internal links to cornerstone explainers. Lab: Write a new section and a small table that clarifies definitions/data.

- Platform nuance (Google): Review eligibility requirements and how links appear in Google’s AI features. Lab: Compare two content variants and track which one appears in AI Overviews after Google re-crawls the page. See our analysis of Google AI Overview tracking methods.

- Technical hygiene: Address Core Web Vitals on the template you edited; confirm canonical tags and sitemap inclusion. Lab: Document changes and re-measure.

- PR/Comms integration: Publish a data-backed explainer or mini-study that includes a clear methodology and a downloadable table. Lab: Draft 2–3 quotable lines with stats.
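
Parts of the technical hygiene lab can be scripted. This sketch assumes you already have the page HTML and the sitemap XML as strings (fetching them is left out). It uses only Python’s standard library to confirm that the page declares exactly one canonical URL pointing at itself and that the URL is listed in a standard sitemaps.org `urlset`. The example URLs are placeholders, and sitemap index files are not handled.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "link" and (attr_map.get("rel") or "").lower() == "canonical":
            self.canonicals.append(attr_map.get("href") or "")


def check_page(url: str, html: str, sitemap_xml: str) -> list[str]:
    """Return hygiene problems for one URL; an empty list means both checks passed."""
    problems = []
    finder = CanonicalFinder()
    finder.feed(html)
    if len(finder.canonicals) != 1:
        problems.append(f"expected 1 canonical tag, found {len(finder.canonicals)}")
    elif finder.canonicals[0] != url:
        problems.append(f"canonical points to {finder.canonicals[0]}")

    # A standard sitemap is a <urlset> of <url><loc>...</loc></url> entries.
    root = ET.fromstring(sitemap_xml)
    listed = {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text}
    if url not in listed:
        problems.append("URL missing from sitemap")
    return problems


# Placeholder inputs; in practice, fetch the live HTML and sitemap.
page_url = "https://example.com/geo-guide"
html = '<html><head><link rel="canonical" href="https://example.com/geo-guide"></head><body></body></html>'
sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/geo-guide</loc></url>"
    "</urlset>"
)
print(check_page(page_url, html, sitemap) or "canonical and sitemap OK")
```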

Days 61–90 (Scaling & governance)

- GEO QA and troubleshooting: Audit 10 pages against the pre-publish checklist below; fix missing schema, thin sources, or vague claims. Lab: Capture before/after snapshots.

- Governance & ethics: Set a quarterly fact-check and SME review cadence; document AI content disclosures where applicable. Lab: Simulate an editorial council decision.

- Measurement & optimization: Build a dashboard that shows AI visibility and citation frequency from your prompt set. Lab: Write a monthly memo with findings and next steps.

Want broader context for your team? Share our primer on traditional SEO vs. GEO so stakeholders understand why workflows and KPIs evolve.

Platform-specific labs: what to test and what to log

ChatGPT Search (successor to Browse with Bing)

- What to test: Five to ten prompts a buyer or journalist would ask. Include entity variations and synonyms.

- What to log: Which of your pages are cited in the Sources view, which sentences were extracted or paraphrased, and whether your images appear with source links. OpenAI confirms sources and inline links in its 2024 product announcement.
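
To cover entity variations and synonyms systematically, you can cross a few question templates with the names people use for your topic. The templates, variants, and the cap of ten prompts below are hypothetical examples; swap in questions your buyers and journalists actually ask.

```python
from itertools import product

# Hypothetical templates and entity variants for illustration.
TEMPLATES = [
    "What is {entity}?",
    "How does {entity} differ from traditional SEO?",
    "How do I measure {entity} results?",
]
ENTITY_VARIANTS = ["GEO", "generative engine optimization", "AI search optimization"]


def build_prompt_set(templates: list[str], variants: list[str], limit: int = 10) -> list[str]:
    """Cross every template with every entity variant, drop case-insensitive duplicates, cap the size."""
    prompts: list[str] = []
    seen: set[str] = set()
    for template, entity in product(templates, variants):
        prompt = template.format(entity=entity)
        if prompt.lower() not in seen:
            seen.add(prompt.lower())
            prompts.append(prompt)
    return prompts[:limit]


for prompt in build_prompt_set(TEMPLATES, ENTITY_VARIANTS):
    print(prompt)
```

The cap is applied in template order, so later templates drop out first when the cross product exceeds the limit; reorder the templates by priority if that matters.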

Perplexity

- What to test: Queries that require synthesis (definitions, comparisons, step-by-step how-tos).

- What to log: Citation numbers and order; whether Perplexity names your brand or domain; and which statements it lifts. Perplexity’s Help Center (2025) describes how answers synthesize multiple sources and link to them with numbered citations.

Google AI features (including Overviews)

- What to test: Evergreen informational prompts tied to your cornerstone pages; watch for entity clarity, schema presence, and on-page helpfulness.

- What to log: If and how your page appears (prominent links, inline attribution), competing sources, and changes after content updates. See Google’s AI features guidance (2025) for site-owner best practices.
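
The three platforms surface different details, so a single record shape helps keep the prompt log comparable across them. The field names, platform labels, and citation-type values below are assumptions modeled on the items listed above (Sources view, numbered citations, inline links); the sample rows are placeholders, not real observations. The sketch serializes runs to CSV so the log can live in a shared spreadsheet.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from enum import Enum
from typing import Optional


class CitationType(Enum):
    NONE = "none"
    INLINE_LINK = "inline_link"
    FOOTNOTE = "footnote"            # numbered citations, e.g., Perplexity-style
    SOURCES_PANEL = "sources_panel"  # e.g., the ChatGPT Search Sources view


@dataclass
class PromptRun:
    run_date: str                    # ISO date of the test run
    platform: str                    # hypothetical labels: "chatgpt", "perplexity", "google_ai"
    prompt: str
    cited_url: str = ""              # your page, if the answer cited it
    citation_type: CitationType = CitationType.NONE
    citation_position: Optional[int] = None  # order among the answer's citations
    brand_named: bool = False        # brand/domain mentioned, linked or not
    extracted_text: str = ""         # sentence lifted or closely paraphrased
    competing_sources: str = ""      # semicolon-separated competing domains


def to_csv(runs: list[PromptRun]) -> str:
    """Serialize runs to CSV text for a shared spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(PromptRun)])
    writer.writeheader()
    for run in runs:
        row = asdict(run)
        row["citation_type"] = run.citation_type.value  # write "footnote", not the enum repr
        writer.writerow(row)
    return buffer.getvalue()


# Placeholder rows for illustration, not real observations.
runs = [
    PromptRun("2025-01-06", "perplexity", "What is GEO?", "https://example.com/geo-guide",
              CitationType.FOOTNOTE, 2, True, "GEO is the practice of structuring content..."),
    PromptRun("2025-01-06", "chatgpt", "What is GEO?"),
]
print(to_csv(runs))
```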

KPIs and dashboards (what to measure and how)

Measure the share and quality of your presence inside AI answers. Treat these as frameworks; naming and formulas vary by team.

- AI visibility rate: The percentage of tracked prompts where your brand/content appears in AI answers (by platform). Instrument by running your prompt set weekly and recording presence.

- AI citation rate: The share of AI answers that explicitly cite or link to your pages. Classify per platform (inline link, footnote, Sources panel).

- Attribution rate: The share of answers that reference your brand or domain, including answers that paraphrase your content rather than quote it.

- Chunk extraction frequency: How often specific sentences or data from your pages show up verbatim or near-verbatim.

- Engagement from AI answers: When trackable, clicks and downstream behavior from AI citations; otherwise triangulate with branded query trends and lead tagging.
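
Once each tracked prompt has been run and logged, the first four rates are simple ratios per platform. This sketch assumes one observation per prompt, per platform, per run; it uses all tracked prompts as the denominator for every rate and reports chunk extraction as average extracted sentences per answer. Those formula choices are one option among several, and the sample observations are placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AnswerObservation:
    platform: str
    prompt: str
    appears: bool              # brand or content present in the answer at all
    cited: bool                # answer links to or cites one of your pages
    brand_named: bool          # answer names your brand or domain, even when paraphrasing
    chunks_extracted: int = 0  # sentences lifted verbatim or near-verbatim


def kpis_by_platform(observations: list[AnswerObservation]) -> dict[str, dict[str, float]]:
    """Per-platform rates, using every tracked prompt as the denominator."""
    grouped: dict[str, list[AnswerObservation]] = defaultdict(list)
    for obs in observations:
        grouped[obs.platform].append(obs)

    report = {}
    for platform, items in grouped.items():
        n = len(items)
        report[platform] = {
            "prompts": n,
            "visibility_rate": sum(o.appears for o in items) / n,
            "citation_rate": sum(o.cited for o in items) / n,
            "attribution_rate": sum(o.brand_named for o in items) / n,
            "chunks_per_answer": sum(o.chunks_extracted for o in items) / n,
        }
    return report


# Placeholder observations for illustration only.
observations = [
    AnswerObservation("perplexity", "What is GEO?", True, True, True, 2),
    AnswerObservation("perplexity", "GEO vs. traditional SEO", True, False, True),
    AnswerObservation("perplexity", "GEO measurement tools", False, False, False),
    AnswerObservation("chatgpt", "What is GEO?", True, True, False, 1),
]
for platform, metrics in kpis_by_platform(observations).items():
    print(platform, {name: round(value, 2) for name, value in metrics.items()})
```

Running the same prompt set each week and storing each week’s report gives the trend lines a dashboard needs; engagement from AI answers still has to come from analytics or lead tagging.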

To help your analysts, we outline measurement frameworks and trade-offs in our guide to LLMO-style metrics for AI answer quality. Multi-brand teams may also want a central workspace; see our agency/white-label overview.

GEO QA and troubleshooting

Use this lightweight pre-publish checklist on every strategic page:

- Direct answer: Is there a one-paragraph answer near the top that addresses the main question in plain language?

- Sources: Are 2–3 primary, high-authority citations present for key claims, with descriptive anchors?

- Schema: Is valid JSON-LD present (Article plus FAQPage/HowTo if relevant) and tested?

- Entities: Are terms defined, synonyms included, and internal links added to cornerstone pages?

- Trust: Are bylines, bios, and updated-on notes visible? Are claims current?
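
Two of these checks, schema presence and a short direct answer near the top, can be partly automated before human review. The sketch below uses Python’s standard `html.parser` and `json` modules; it treats the first `<p>` element as the direct answer and flags it above 80 words, both of which are hypothetical conventions to adjust for your templates. It does not validate markup against Google’s requirements or handle `@graph` containers, so keep the Rich Results Test in the loop.

```python
import json
from html.parser import HTMLParser
from typing import Optional


class PageAudit(HTMLParser):
    """Collects raw JSON-LD blocks and the text of the first <p> element."""

    def __init__(self) -> None:
        super().__init__()
        self.jsonld_blocks: list[str] = []
        self.first_paragraph: Optional[str] = None
        self._capturing: Optional[str] = None  # "jsonld", "p", or None
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if self._capturing:
            return  # nested tags (e.g., <a> inside <p>) keep feeding the same buffer
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._capturing, self._buffer = "jsonld", []
        elif tag == "p" and self.first_paragraph is None:
            self._capturing, self._buffer = "p", []

    def handle_data(self, data):
        if self._capturing:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._capturing == "jsonld":
            self.jsonld_blocks.append("".join(self._buffer))
            self._capturing = None
        elif tag == "p" and self._capturing == "p":
            self.first_paragraph = " ".join("".join(self._buffer).split())  # collapse whitespace
            self._capturing = None


def audit(html: str, required_types=("Article",), max_answer_words: int = 80) -> list[str]:
    """Return checklist problems; an empty list means these automated checks passed."""
    parser = PageAudit()
    parser.feed(html)
    problems: list[str] = []

    found_types: set[str] = set()
    for raw in parser.jsonld_blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            problems.append("invalid JSON-LD block")
            continue
        for item in data if isinstance(data, list) else [data]:
            schema_type = item.get("@type") if isinstance(item, dict) else None
            if isinstance(schema_type, str):
                found_types.add(schema_type)
            elif isinstance(schema_type, list):
                found_types.update(t for t in schema_type if isinstance(t, str))
    for required in required_types:
        if required not in found_types:
            problems.append(f"missing {required} JSON-LD")

    if not parser.first_paragraph:
        problems.append("no direct-answer paragraph found")
    elif len(parser.first_paragraph.split()) > max_answer_words:
        problems.append(f"first paragraph exceeds {max_answer_words} words")
    return problems


# Placeholder page for illustration.
sample = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Article", "headline": "GEO guide"}'
    "</script></head><body>"
    "<p>GEO is the practice of structuring content so AI systems can extract and cite it.</p>"
    "</body></html>"
)
print(audit(sample, required_types=("Article", "FAQPage")))
```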

If a page still fails to appear or be cited, try this remediation flow: replace weak sources with primary ones; add a data table or methodology section; tighten quotable lines; add or fix schema; re-run platform tests; and log changes and outcomes. Google’s structured data guidance explains correct implementation and validation. Note that Google’s AI features guidance lists no special markup or optimization requirement for AI Overviews beyond a page being indexed and eligible to show a snippet in Search, so treat schema as a clarity aid rather than a guarantee of citation.

Practical tooling example (objective, optional in your program)

Disclosure: Geneo is our product. Geneo can be used to monitor brand mentions, citations, and sentiment in AI-generated answers across ChatGPT, Perplexity, and Google AI features; log historical prompt/answer snapshots for training reviews; and surface content opportunities based on what AIs are citing. Teams often create a monthly “GEO review” where they compare their prompt set results, note which lines were extracted, and align the next sprint’s briefs accordingly.

Next steps

Pilot the 30–60–90 plan with 10–15 pages. Keep a shared prompt and citation log, and run weekly platform tests. Stand up a simple dashboard for AI visibility and citation rate by platform, then set monthly targets and a quarterly retraining cadence. If you need centralized monitoring and an auditable history of AI citations, try Geneo. For background context to brief your stakeholders, share our guide on traditional SEO vs. GEO.