Google SpamBrain AI Update 2025: Best Practices for SEO Compliance

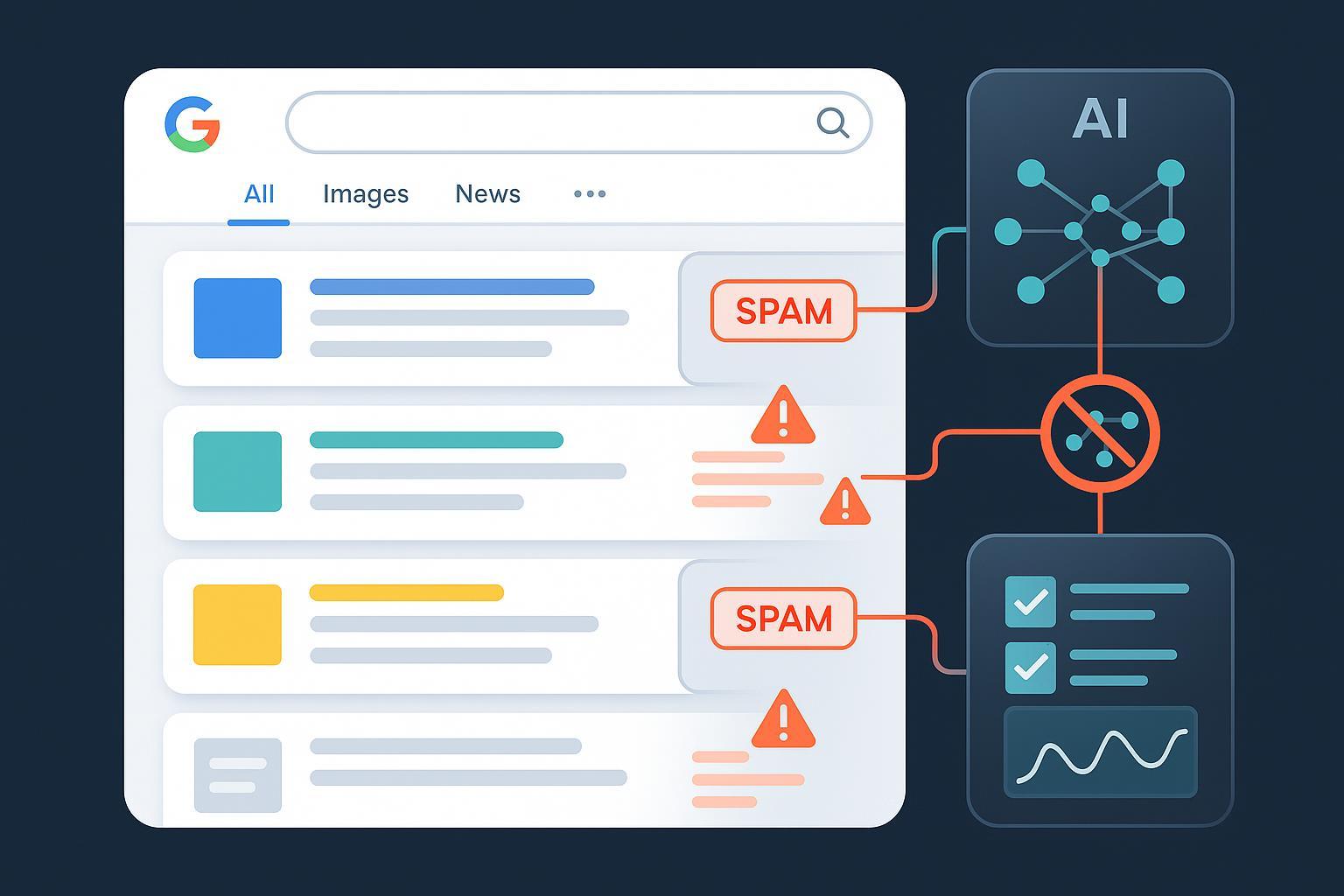

Discover proven best practices from SEO experts to stay compliant after Google’s SpamBrain AI update 2025. Actionable workflow for scaled content, links, technical, and UGC spam vectors. Monitoring and recovery strategies for global brands.

The August 2025 spam update began on August 26 and finished rolling out globally on September 22, 2025. Google describes this class of update as routine enforcement of its spam policies across all languages, with completion confirmed on the Search Status Dashboard. For timing details, see Google’s incident page cited in the section below.

In practice, this update strengthened automated detection against familiar spam vectors rather than inventing brand-new rules. If your site experienced demotions or lost visibility during the rollout, treat it as an enforcement event: audit for scaled low‑value content, manipulative links, cloaking/sneaky redirects, site reputation abuse (often called “parasite SEO”), expired domain misuse, and UGC/hacked content issues—and fix them systematically.

What actually changed in 2025

- Rollout and completion: Google’s Search Status Dashboard recorded both the start and the completion of the August 2025 spam update, and the rollout took about 27 days to finish. See the incident entry in the Google Search Status Dashboard (August 2025 spam update). Industry outlets also confirmed completion on Sept 22, 2025, including Search Engine Land’s completion report (Sept 22, 2025).

- Enforcement focus areas: Google’s standing spam policies continued to apply. In practice, teams reported impact in these areas:

  - Scaled/AI content abuse (mass‑produced pages with little added value). Google’s 2025 guidance states that using generative AI tools to produce many pages without adding value for users may violate the scaled content abuse policy; see Google’s generative AI content guidance (2025).

  - Link schemes, cloaking, sneaky redirects, hidden text, and other classic violations, all codified on the Spam Policies for Google Web Search page.

  - Site reputation abuse and expired domain misuse. Both are widely discussed by practitioners; Google referenced updating its site reputation abuse policy earlier in 2025 (see Google Search Central’s “Robots Refresher: page-level granularity”, March 2025).

  - UGC/hacked content and community spam. For practical controls, Google’s Prevent user-generated spam doc remains the canonical playbook.

Two operational realities to keep in mind:

- Most visibility losses in spam updates are algorithmic demotions, not manual actions. If you did receive a manual action, Google explains the reconsideration process in its Reviewed results/manual actions guidance.

- Recovery is a function of root-cause remediation plus time. Once issues are fixed, systems need to reassess the site; expect weeks to months for durable recovery depending on scope and severity.

A 7-step compliance workflow that actually works

These steps reflect what our teams have used successfully during and after the 2025 rollout. Adapt the specifics to your site’s scale and risk profile.

1) Discovery: identify likely vectors and affected areas

- Pull data from Google Search Console (Performance, Pages, Queries), analytics, and server logs to pinpoint where visibility dropped and which sections are implicated.

- Cross-check content clusters that saw aggressive publishing velocity, thin pages, or templated AI output. Note doorway-like structures or hub/spoke patterns that don’t deliver unique user value.

- Flag technical anomalies: soft‑404s, infinite facets/pagination, duplicate parameterized pages, or unexpected canonical/redirect behavior.
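A quick way to localize a drop is to aggregate page-level clicks by URL section and compare a pre-update window with a post-update window. The Python sketch below assumes you have already exported per-URL clicks for both windows (for example from the Search Console Performance report); the row shape and the helper names `section_of` and `section_deltas` are hypothetical, and "section" here simply means the first path segment.

```python
from collections import defaultdict
from urllib.parse import urlparse


def section_of(url: str) -> str:
    """Return the first path segment as the section, e.g. /blog/x -> /blog/."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    return f"/{parts[0]}/" if parts else "/"


def section_deltas(rows):
    """Aggregate (url, clicks_before, clicks_after) rows by section.

    Returns (section, before, after, pct_change) tuples with the largest
    relative drops first. pct_change is None when the section had no
    baseline clicks; those sections sort last.
    """
    totals = defaultdict(lambda: [0, 0])
    for url, before, after in rows:
        t = totals[section_of(url)]
        t[0] += before
        t[1] += after
    result = []
    for section, (before, after) in totals.items():
        pct = (after - before) / before * 100 if before else None
        result.append((section, before, after, pct))
    result.sort(key=lambda r: (r[3] is None, r[3] if r[3] is not None else 0))
    return result


if __name__ == "__main__":
    sample = [  # hypothetical export rows
        ("https://example.com/explainers/a", 1200, 300),
        ("https://example.com/explainers/b", 800, 250),
        ("https://example.com/reviews/x", 500, 480),
        ("https://example.com/", 2000, 1900),
    ]
    for section, before, after, pct in section_deltas(sample):
        print(f"{section:<14} {before:>6} -> {after:>6}  {pct:+.1f}%")
```

Sections that lost far more than the site average are the first places to look for the scaled-content, doorway, and technical patterns listed above.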

2) Triage: decide algorithmic vs manual action and prioritize fixes

- Manual action present? You’ll see it in Search Console’s “Manual actions.” If so, focus remediation on the cited issues and document evidence for reconsideration.

- No manual action? Treat it as algorithmic demotion. Prioritize fixes by business impact and severity: scaled low‑value content first, then link schemes, then cloaking/redirects, then UGC/security.
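The triage order can be encoded so that every auditor ranks fixes the same way. This is a hypothetical scoring sketch, not anything Google defines: the `Issue` fields, the vector keys, and the click-loss estimates are assumptions. Vectors cited in a manual action move to the front; everything else follows the order above, then the largest estimated impact.

```python
from dataclasses import dataclass

# Vector order from the triage step: lower rank means fix first.
VECTOR_RANK = {
    "scaled_content": 0,
    "link_schemes": 1,
    "cloaking_redirects": 2,
    "ugc_security": 3,
}


@dataclass
class Issue:
    vector: str           # one of the VECTOR_RANK keys
    description: str
    est_clicks_lost: int  # hypothetical business-impact estimate


def prioritize(issues, manual_action_vectors=()):
    """Return issues in remediation order.

    Issues whose vector was cited in a manual action come first; within each
    group, issues follow VECTOR_RANK and then the largest estimated impact.
    """
    cited = set(manual_action_vectors)
    return sorted(
        issues,
        key=lambda i: (
            i.vector not in cited,  # False (cited) sorts before True
            VECTOR_RANK[i.vector],
            -i.est_clicks_lost,
        ),
    )
```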

3) Content remediation: raise editorial quality and reduce scale where quality can’t be assured

- Remove, noindex, or consolidate thin, duplicate, and mass‑generated pages that add little user value. Reduce publishing cadence until human editorial review can keep up.

- For AI-assisted workflows, enforce human-in-the-loop: fact-checking, originality checks, source attribution, and an editor sign-off before publishing, consistent with Google’s generative AI content guidance (2025).

- Add real experience signals: author bios, credentials, first-hand insights, and dated updates. Align titles and on-page intents to actual searcher needs to avoid doorway characteristics, per the Spam Policies index.
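To find consolidation candidates, a common technique is near-duplicate detection with word shingles and Jaccard similarity. The sketch below compares every pair of pages, which is workable for a few thousand URLs; larger sites would typically switch to MinHash/LSH. The 5-word shingle size, the 0.8 threshold, and the function names are assumptions to tune, not Google thresholds.

```python
import itertools
import re


def shingles(text: str, k: int = 5) -> set:
    """Return the set of k-word shingles from lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict, threshold: float = 0.8, k: int = 5):
    """Yield (url_a, url_b, similarity) for page pairs at or above threshold.

    `pages` maps URL -> extracted main-content text (boilerplate removed).
    """
    sigs = {url: shingles(text, k) for url, text in pages.items()}
    for (u1, s1), (u2, s2) in itertools.combinations(sigs.items(), 2):
        sim = jaccard(s1, s2)
        if sim >= threshold:
            yield u1, u2, sim
```

Pairs that score high are candidates for merging into one stronger page with a redirect, or for noindexing the weaker copy; the final call still belongs to an editor.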

4) Technical and markup audit: eliminate deceptive UX and schema mistakes

- Validate structured data; remove misleading or irrelevant schema (e.g., review markup on pages without reviews). Keep markup consistent with content and avoid manipulative rich-result tactics.

- Improve crawlability and indexability: fix soft‑404s, canonicalization errors, hreflang conflicts, and JS rendering issues. Check that every redirect is honest and necessary: no sneaky redirects, and no cloaking presented as split testing.

- Confirm HTTPS, performance, mobile UX, and accessibility best practices. Intrusive interstitials or deceptive UI can be interpreted as low-quality or manipulative.
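Redirect hygiene can be checked offline from a crawl export. This sketch assumes a mapping of each redirecting URL to its target (most crawlers can export one) and flags loops and multi-hop chains so they can be collapsed to a single direct redirect. It only covers the mechanical side; whether a redirect is sneaky depends on intent and still needs human review. `trace_redirects`, `audit`, and the `max_hops` cutoff are illustrative names and values.

```python
def trace_redirects(redirects: dict, start: str, max_hops: int = 10):
    """Follow redirects from `start`.

    Returns (final_url, hops, status) where status is "ok", "chain",
    "loop", or "too_long". `redirects` maps source URL -> target URL;
    URLs absent from it are treated as final (non-redirecting) responses.
    """
    seen = [start]
    url = start
    while url in redirects:
        url = redirects[url]
        if url in seen:
            return url, len(seen), "loop"
        seen.append(url)
        if len(seen) - 1 > max_hops:
            return url, len(seen) - 1, "too_long"
    hops = len(seen) - 1
    return url, hops, "chain" if hops > 1 else "ok"


def audit(redirects: dict):
    """Report every redirecting URL whose path is not a single clean hop."""
    problems = {}
    for src in redirects:
        final, hops, status = trace_redirects(redirects, src)
        if status != "ok":
            problems[src] = (final, hops, status)
    return problems
```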

5) Links and reputation: clean acquisition and outbound policies

- Audit inbound links for paid placements, excessive link exchanges, PBNs, or hidden links. Where removal is infeasible, use the disavow tool judiciously; it is a last resort, not a routine cleanup step.

- Tighten outbound link policies: add rel="nofollow" or rel="sponsored" where appropriate; avoid linking to low-quality or irrelevant destinations en masse.

- Guard against site reputation abuse: do not host third-party pages that bypass your editorial standards; set explicit placement rules and minimum quality requirements.
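If outreach fails and you do decide to disavow, the file Google accepts is plain text with one full URL or one `domain:` entry per line, and lines starting with `#` are comments. The sketch below builds such a file from a reviewed list; the input lists, the note, and the function name `build_disavow` are hypothetical, and every entry should come from a human review rather than an automated score.

```python
from datetime import date
from urllib.parse import urlparse


def build_disavow(domains, urls, reviewed_on: date, note: str = "") -> str:
    """Return the text of a disavow file.

    Domain entries are lowercased and de-duplicated. URLs on a host that is
    already disavowed at domain level are omitted as redundant, and repeated
    URLs are written once.
    """
    hosts = sorted({d.strip().lower() for d in domains if d.strip()})
    host_set = set(hosts)
    lines = [f"# Reviewed {reviewed_on.isoformat()}"]
    if note:
        lines.append(f"# {note}")
    lines += [f"domain:{h}" for h in hosts]
    seen_urls = set()
    for u in urls:
        u = u.strip()
        host = (urlparse(u).hostname or "").lower()
        if not u or host in host_set or u in seen_urls:
            continue
        seen_urls.add(u)
        lines.append(u)
    return "\n".join(lines) + "\n"
```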

6) Security and UGC hardening: stop spam at the source

- Patch vulnerabilities and scan regularly for hacked content and cloaking artifacts.

- Implement UGC controls: rate limits, moderation queues, spam filters, and link attributes (rel="ugc"/rel="nofollow"). Google’s UGC abuse prevention guidance provides concrete patterns to follow.
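As an illustration of two of those controls, the sketch below holds link-heavy comments for moderation and renders links in published comments with `rel="ugc nofollow"` after HTML-escaping the user text. The link-count threshold and the function names are hypothetical, and the URL regex is deliberately simple (it will, for example, keep a trailing period as part of a URL); a production system would pair this with rate limits and a real spam classifier.

```python
import html
import re

URL_RE = re.compile(r"https?://[^\s<>\"']+", re.IGNORECASE)

MAX_LINKS_BEFORE_REVIEW = 2  # hypothetical threshold; tune to your community


def route_comment(text: str) -> str:
    """Return 'publish' or 'moderate' using a simple link-count heuristic."""
    if len(URL_RE.findall(text)) > MAX_LINKS_BEFORE_REVIEW:
        return "moderate"
    return "publish"


def render_comment(text: str) -> str:
    """Escape user text and turn bare http(s) URLs into rel="ugc nofollow" links."""
    out, last = [], 0
    for m in URL_RE.finditer(text):
        out.append(html.escape(text[last:m.start()]))
        safe = html.escape(m.group(0), quote=True)
        out.append(f'<a href="{safe}" rel="ugc nofollow">{safe}</a>')
        last = m.end()
    out.append(html.escape(text[last:]))
    return "".join(out)
```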

7) Monitoring and reassessment: measure progress and stay compliant

- In Google Search Console, track Performance and Indexing weekly for 8–12 weeks post-fix. Request reindexing of key improved pages, and annotate changes to correlate recovery with remediation.

- Set up a cadence for spot-checking AI-generated answers and brand visibility across new AI surfaces and SERPs.

- For ongoing monitoring of brand mentions and AI-generated answer sentiment across ChatGPT, Perplexity, and Google AI Overview, a neutral option is Geneo. Disclosure: Geneo is mentioned as an example monitoring tool; no direct ranking impact is implied.

- For complementary context on how communities influence AI citations, see this practitioner guide on Reddit community AI search citations best practices.
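Annotating is easiest when the weekly numbers and the change log live in one place. This sketch assumes weekly click totals exported from Search Console and a hand-maintained list of dated remediation notes; it expresses each week as a percentage of a pre-drop baseline (a number you choose, for example the average of the four weeks before the update) and attaches the notes logged during that week. The function name is hypothetical.

```python
from datetime import date, timedelta


def weekly_recovery(baseline_clicks: float, weekly, annotations):
    """Compare each week's clicks with a baseline and attach that week's notes.

    weekly: list of (week_start: date, clicks).
    annotations: list of (day: date, note: str) describing remediation work.
    Returns rows of (week_start, clicks, pct_of_baseline, notes_that_week).
    """
    if baseline_clicks <= 0:
        raise ValueError("baseline_clicks must be positive")
    rows = []
    for start, clicks in weekly:
        end = start + timedelta(days=7)
        notes = [note for day, note in annotations if start <= day < end]
        rows.append((start, clicks, round(clicks / baseline_clicks * 100, 1), notes))
    return rows


if __name__ == "__main__":
    weeks = [(date(2025, 9, 29), 4000), (date(2025, 10, 6), 4500), (date(2025, 10, 13), 6000)]
    log = [(date(2025, 10, 7), "removed misapplied review schema")]
    for start, clicks, pct, notes in weekly_recovery(8000, weeks, log):
        print(start, clicks, f"{pct}%", "; ".join(notes))
```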

Advanced playbook for 2025

These are the areas where compliant sites still trip up.

Internationalization and hreflang

- Avoid doorway-like international pages. Each language/region variant should be meaningfully localized, not just translated boilerplate.

- Verify hreflang mappings and canonical clusters to prevent self-competition and mis-indexing, especially on large catalogs.
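Two hreflang rules cause most cluster errors: each page should list itself among its alternates, and every alternate must link back (otherwise the annotations can be ignored). This sketch checks both from crawled data; it assumes you have already extracted, per page, a map of language code to alternate URL, and the function name is hypothetical.

```python
def hreflang_errors(annotations: dict):
    """Check hreflang clusters for missing self-references and return links.

    annotations maps page URL -> {lang_code: alternate_url} as declared on
    that page. Returns a sorted list of human-readable problems.
    """
    problems = []
    for page, alts in annotations.items():
        if page not in alts.values():
            problems.append(f"{page}: no self-referencing hreflang entry")
        for lang, alt in alts.items():
            if alt == page:
                continue
            back = annotations.get(alt)
            if back is None:
                problems.append(f"{page}: alternate {alt} ({lang}) was not crawled or declares no hreflang")
            elif page not in back.values():
                problems.append(f"{page}: {alt} ({lang}) does not link back")
    return sorted(problems)
```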

Commerce and affiliate transparency

- Disclose sponsored relationships and affiliate programs; annotate links appropriately (rel="sponsored").

- Ensure product review and round-up pages add first-hand testing insights, original photos/data, and unique comparisons—not just rewritten manufacturer specs.
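To audit affiliate links at scale, the sketch below parses a page with Python's standard `html.parser` and reports links to known affiliate hosts whose `rel` attribute lacks `sponsored`. The host list is a hypothetical placeholder, and matching is exact, so subdomains such as `www.` variants would need to be added explicitly.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical list: replace with the affiliate/sponsor hosts you actually use.
AFFILIATE_HOSTS = {"affiliate.example", "partner-shop.example"}


class SponsoredLinkAudit(HTMLParser):
    """Collect links to affiliate hosts whose rel attribute lacks 'sponsored'."""

    def __init__(self, affiliate_hosts=AFFILIATE_HOSTS):
        super().__init__()
        self.affiliate_hosts = {h.lower() for h in affiliate_hosts}
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href") or ""
        host = (urlparse(href).hostname or "").lower()
        rel_values = (attr.get("rel") or "").lower().split()
        if host in self.affiliate_hosts and "sponsored" not in rel_values:
            self.missing.append(href)


def audit_sponsored(html_text: str, affiliate_hosts=AFFILIATE_HOSTS):
    """Return hrefs of affiliate links missing rel="sponsored"."""
    parser = SponsoredLinkAudit(affiliate_hosts)
    parser.feed(html_text)
    parser.close()
    return parser.missing
```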

Publisher guardrails and “parasite SEO” risk

- Do not host third-party content that you wouldn’t publish under your own editorial standards. If you provide hosting/subdomains to partners, enforce quality criteria and maintain editorial oversight.

- Audit syndicated content and native advertising placements to avoid low-value page factories on your domain.

Structured data discipline

- Validate schema at publish time; remove types that don’t match the actual content. Misleading markup is both a spam risk and a trust killer.
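A publish-time gate can enforce this by extracting every JSON-LD block and comparing the schema.org types it declares with an allowlist for the page's template. In the sketch below the template names and allowlists are assumptions; with them, the gate would reject `Review` markup on an informational article. Invalid JSON raises an exception, which is arguably the right outcome for a publish check. This checks type-to-template fit only and is not a substitute for Google's Rich Results Test.

```python
import json
from html.parser import HTMLParser

# Hypothetical allowlist: which schema.org types each page template may emit.
ALLOWED_TYPES = {
    "article": {"Article", "NewsArticle", "BreadcrumbList", "Person", "Organization", "ImageObject"},
    "product_review": {"Product", "Review", "AggregateRating", "Rating", "Person", "Organization", "BreadcrumbList"},
}


class JsonLdExtractor(HTMLParser):
    """Collect the text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def collect_types(node, found):
    """Recursively gather every @type value, including inside @graph arrays."""
    if isinstance(node, dict):
        t = node.get("@type")
        if isinstance(t, str):
            found.add(t)
        elif isinstance(t, list):
            found.update(x for x in t if isinstance(x, str))
        for value in node.values():
            collect_types(value, found)
    elif isinstance(node, list):
        for item in node:
            collect_types(item, found)
    return found


def disallowed_types(html_text: str, template: str):
    """Return sorted schema types on the page that the template may not use."""
    parser = JsonLdExtractor()
    parser.feed(html_text)
    parser.close()
    found = set()
    for block in parser.blocks:
        collect_types(json.loads(block), found)
    return sorted(found - ALLOWED_TYPES[template])
```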

How recovery looks in the real world (an anonymized micro‑case)

- Situation: A mid-size publisher saw sharp declines concentrated in sections with templated AI-assisted explainers and aggressive internal hub/spoke expansion. Schema was broadly applied, including review markup on informational pages.

- Actions: They cut ~30% of low-value explainers, consolidated duplicative hubs, removed misapplied review schema, and instituted editor sign-off for all AI-assisted drafts. UGC controls were tightened with moderation queues and rel="ugc" on comment links.

- Outcome: Visibility stabilized within a few weeks and gradually improved as key sections were reassessed. The clearest leading indicators were fewer soft‑404s, improved indexing coverage, and regained impressions for the cleaned-up hubs. Manual action was not involved, so no reconsideration request was needed—recovery simply tracked the site’s improved quality and compliance.

Trade-offs to note:

- Reducing content velocity may temporarily depress long-tail coverage, but it raises overall editorial standards and avoids scaled abuse.

- Removing misapplied schema can lower short-term rich result footprint, yet protects against future spam enforcement.

Manual actions: if you have one, do this

- Confirm the manual action in Search Console and read the cited examples carefully.

- Fix the root cause holistically, not just the examples. Create a remediation log: URLs affected, fixes applied, dates, and screenshots.

- Submit a reconsideration request that explains root cause, specific fixes, and process changes to prevent recurrence. Google details this process in Reviewed results/manual actions guidance.
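A remediation log is easier to summarize in a reconsideration request when it has a fixed shape. This sketch uses a hypothetical `RemediationEntry` record with the fields listed above and writes it to CSV with the standard library; the field names and the evidence convention (a screenshot path) are assumptions.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date


@dataclass
class RemediationEntry:
    url: str
    issue: str        # e.g. the manual action type cited in Search Console
    fix_applied: str
    fixed_on: date
    evidence: str     # path or link to a screenshot or before/after capture


def to_csv(entries) -> str:
    """Serialize remediation entries to CSV, oldest fix first."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(RemediationEntry)])
    writer.writeheader()
    for entry in sorted(entries, key=lambda e: e.fixed_on):
        row = asdict(entry)
        row["fixed_on"] = entry.fixed_on.isoformat()
        writer.writerow(row)
    return buf.getvalue()


def summarize(entries) -> dict:
    """Count fixes per issue type for the request's summary paragraph."""
    counts = {}
    for entry in entries:
        counts[entry.issue] = counts.get(entry.issue, 0) + 1
    return counts
```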

Expectations: Reconsideration can take time. Be patient and keep improving site quality while you wait.

Monitoring and future-proofing: your ongoing regimen

- Weekly checks (8–12 weeks): Search Console Performance/Indexing; annotate changes; request recrawls for priority fixes.

- Monthly technical passes: structured data validation, redirects/canonicals, crawl traps, Core Web Vitals, and security scans.

- Quarterly editorial reviews: sample AI-assisted content for originality, fact integrity, and user value; recalibrate publishing cadence to match your editorial bandwidth.

- Community and AI surface checks: Spot-check how your brand is represented in AI-generated answers. For additional context on monitoring AI surfaces, this Profound review (2025) with alternative recommendations explains why teams track citations across ChatGPT, Perplexity, and Google AI Overview.
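For the quarterly editorial reviews, a reproducible stratified sample keeps small sections from being drowned out by large ones. The sketch assumes you can list AI-assisted URLs per section (for example from CMS metadata); the per-section sample size, the seed, and the function name are assumptions to match your editorial bandwidth.

```python
import random


def stratified_sample(urls_by_section: dict, per_section: int, seed: int = 0):
    """Pick up to `per_section` URLs at random from each section.

    A fixed seed makes the sample reproducible, so reviewers and auditors
    work from the same list.
    """
    rng = random.Random(seed)
    sample = {}
    for section, urls in sorted(urls_by_section.items()):
        pool = sorted(urls)  # sort first so the result doesn't depend on input order
        sample[section] = rng.sample(pool, min(per_section, len(pool)))
    return sample
```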

Future updates: Spam enforcement evolves. Keep an eye on the Spam Policies for Google Web Search and the Google Search Status Dashboard (August 2025 spam update) for official signals. Industry watchers like Search Engine Land and Search Engine Roundtable regularly document volatility and completion timings; for this update, see Search Engine Land’s completion report (Sept 22, 2025).

The closing checklist

Use this as a quick run-through before and after any spam update:

- Content
  - Remove/consolidate low-value, duplicate, and mass-generated pages
  - Enforce human editorial review for AI-assisted content
  - Add author bios, first-hand experience, citations, and updated dates

- Technical & Markup
  - Validate and prune misleading schema
  - Fix soft‑404s, canonical/hreflang conflicts, crawl traps
  - Confirm clean redirects and honest UX; improve performance and mobile

- Links & Reputation
  - Remediate manipulative inbound links; tighten outbound link policies
  - Avoid hosting third-party pages that bypass your editorial bar

- Security & UGC
  - Patch vulnerabilities; scan for hacked content/cloaking
  - Implement UGC moderation and appropriate link attributes

- Monitoring & Recovery
  - Track Search Console weekly for 8–12 weeks; annotate and request recrawls
  - If a manual action exists, fix thoroughly and submit reconsideration with evidence

By treating the 2025 spam update as a catalyst for rigorous editorial and technical governance—rather than a one-time scare—you’ll build resilience against future enforcement cycles and keep your content compliant.