Google AI Mode Visual Search Update: Latest Impact & 2025 Strategy

Explore Google’s 2025 AI Mode visual search update: its strategic impact, how visual discovery is changing, and how brands can monitor results today.

Updated on Oct 2, 2025

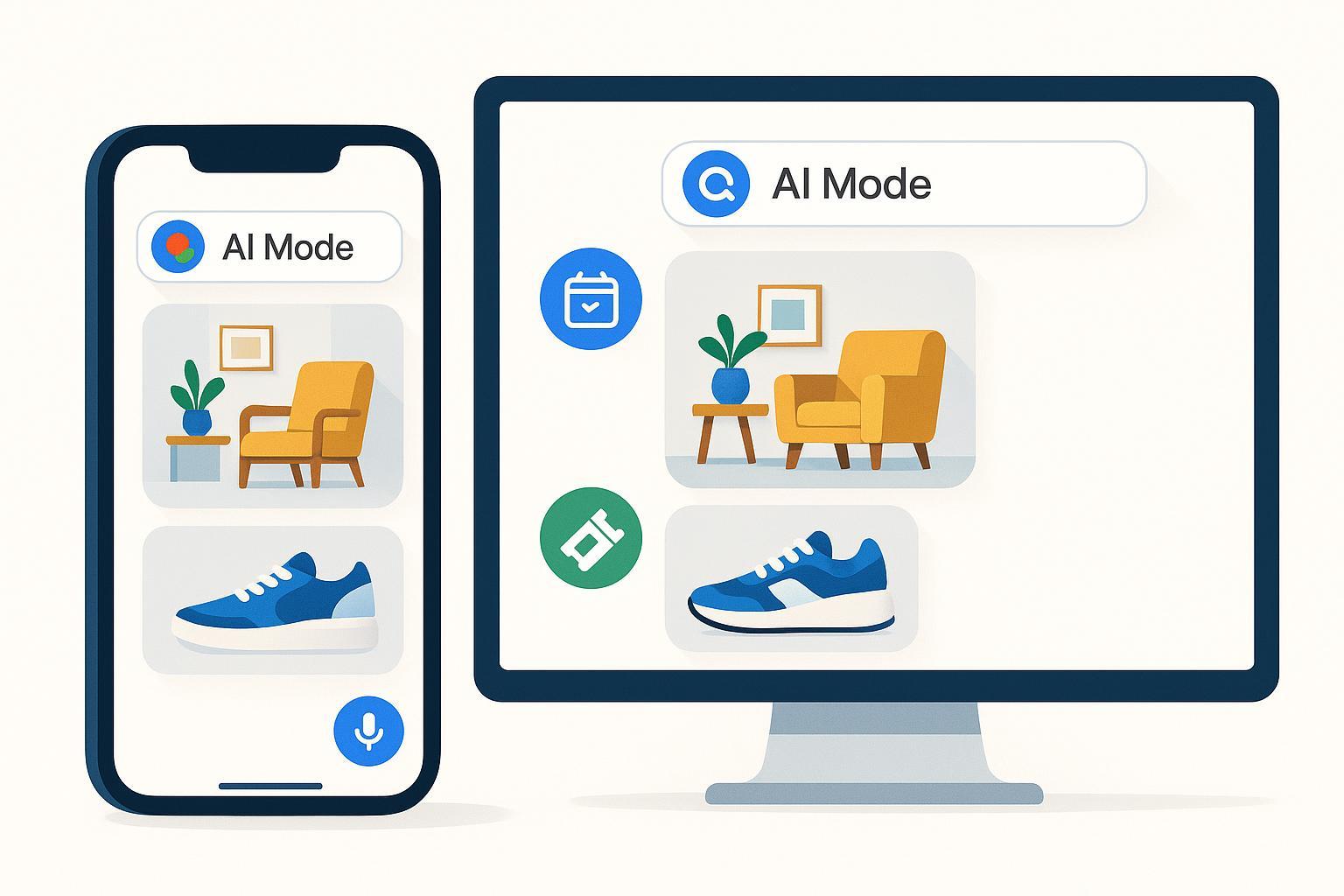

Google’s latest AI Mode updates push Search further toward a multimodal, conversational, visual-first experience, distinct from classic SERPs and even from AI Overviews. In late September 2025, Google introduced more image-led exploration and shopping responses within AI Mode. During August and September, it also expanded agentic capabilities that help users complete tasks such as restaurant reservations. Google’s own posts say AI features are driving “more queries and higher-quality clicks” (Google Search Blog, Aug 6, 2025). The Sept 30 post describes how users can “ask a question conversationally” and get a range of visual results in AI Mode, with the update rolling out in English in the U.S. that week.

- According to Google’s Aug 6, 2025 Search blog post on AI engagement, AI Mode and AI Overviews encourage more complex queries and higher-quality clicks.

- Google’s Aug 21, 2025 announcement on agentic features and expansion explains the rollout of task-completion capabilities (starting with restaurant reservations) and broader availability.

- The Sept 30, 2025 post on visual exploration outlines image-led searches, continuous conversational refinement, and links on images to learn more or shop.

What’s actually different now: interaction, discovery, and completion

AI Mode is a dedicated, conversational experience within Search. Instead of ranked blue links, users see synthesized answers enhanced with images, product cards, and modules that can connect to real-world tasks. Multimodal inputs (text, voice, even photos) make it easier to start from visual inspiration and refine iteratively.

- Fewer traditional links: Citations and partner modules dominate, shifting discovery toward sources referenced within AI answers rather than classic organic lists. Industry explainers like Conductor’s AI Mode overview (2025) and Semrush’s optimization guide (2025) note the distinction between AI Mode and AI Overviews, with AI Mode functioning as a separate tabbed experience.

- Visual exploration: The September update emphasizes image-led queries—think “maximalist bedroom inspiration” refined with modifiers like “dark tones and bold prints.” Google’s post says each image includes a link to learn more or shop (Sept 30, 2025).

- Agentic tasks: From the August update, AI Mode begins to help with reservations and is “expanding soon” to local services and event tickets (Aug 21, 2025). Media reports list partners across dining and ticketing; treat these as evolving integrations.

Why this matters to marketers and SEOs

Discovery in AI Mode rewards entity clarity, authoritative evidence, and structured, multimodal content—more than it rewards traditional rank positions.

- Visibility strategy shifts from “position” to “presence”: The goal becomes being cited in AI answers, visually represented with compelling assets, and featured in modules that handle tasks.

- Measurement moves beyond CTR: With click paths compressed by agentic flows and image-led browsing, you’ll need to track answer presence, citation share, link placement within the AI panel, and sentiment of brand mentions.

- Feed-level readiness: Expose inventory, availability, pricing, and policy data via schema.org and product/local/event feeds so AI Mode can surface and act on reliable information.

How to optimize for visual, multimodal AI Mode (actionable guide)


Clarify your entities and authority

- Consolidate canonical information about your brand, products, and topics. Align with Organization, Product, Person, and LocalBusiness schema.

- Establish authoritative evidence: studies, primary data, and clear author bylines. Semrush’s 2025 AI Mode optimization guide stresses entity clarity and comprehensive schema.
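As a minimal sketch of the Organization markup mentioned above, the Python below builds a schema.org JSON-LD object and wraps it in the `<script type="application/ld+json">` element pages use to embed structured data. The brand name, URLs, and helper names (`organization_jsonld`, `script_tag`) are hypothetical placeholders. The properties shown (`name`, `url`, `logo`, `sameAs`) are standard schema.org Organization properties, but the sources cited here do not say which ones AI Mode actually weighs.

```python
import json


def organization_jsonld(name: str, url: str, logo_url: str, same_as: list[str]) -> str:
    """Return a schema.org Organization object serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo_url,
        # sameAs lists other authoritative profiles of the same entity,
        # helping tie scattered mentions back to one consistent brand.
        "sameAs": same_as,
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


def script_tag(jsonld: str) -> str:
    """Wrap a JSON-LD string in the script element used to embed it in HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    # Hypothetical brand details for illustration only.
    org = organization_jsonld(
        name="Example Brand",
        url="https://www.example.com",
        logo_url="https://www.example.com/logo.png",
        same_as=["https://www.linkedin.com/company/example-brand"],
    )
    print(script_tag(org))
```

The same pattern extends to Product, Person, and LocalBusiness by changing `@type` and the properties.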


Prepare rich visual assets matched to intent

- Map search intents to image sets: inspiration, comparison, how-to, and shopping. Use descriptive filenames, alt text, and ImageObject metadata.

- Ensure images are navigable: Google indicates each visual result links to learn more or shop (Sept 30, 2025). Provide landing pages that answer follow-up questions naturally.
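The intent-to-image mapping and metadata advice above can be sketched in Python as follows. It assumes a hypothetical `VisualAsset` record and image host. One human-written description drives the descriptive filename, the HTML `alt` text, and the `caption` of a schema.org `ImageObject`, which keeps all three consistent. `contentUrl` and `caption` are real ImageObject properties; the field names and grouping are illustrative.

```python
import html
import json
import re
from dataclasses import dataclass


@dataclass
class VisualAsset:
    intent: str       # e.g. "inspiration", "comparison", "how-to", "shopping"
    description: str  # one description reused for filename, alt text, and caption
    base_url: str     # hypothetical image host, ending in "/"


def descriptive_filename(description: str, ext: str = "jpg") -> str:
    """Lowercase, hyphen-separated filename derived from the description."""
    slug = re.sub(r"[^a-z0-9]+", "-", description.lower()).strip("-")
    return f"{slug}.{ext}"


def img_tag(asset: VisualAsset) -> str:
    """HTML img element with a descriptive src and HTML-escaped alt text."""
    src = asset.base_url + descriptive_filename(asset.description)
    return f'<img src="{src}" alt="{html.escape(asset.description, quote=True)}">'


def image_object(asset: VisualAsset) -> dict:
    """schema.org ImageObject describing the same asset."""
    return {
        "@context": "https://schema.org",
        "@type": "ImageObject",
        "contentUrl": asset.base_url + descriptive_filename(asset.description),
        "caption": asset.description,
    }


def group_by_intent(assets: list[VisualAsset]) -> dict[str, list[VisualAsset]]:
    """Group assets into image sets keyed by search intent."""
    groups: dict[str, list[VisualAsset]] = {}
    for asset in assets:
        groups.setdefault(asset.intent, []).append(asset)
    return groups


if __name__ == "__main__":
    assets = [
        VisualAsset("inspiration", "Maximalist bedroom with dark tones and bold prints",
                    "https://www.example.com/images/"),
        VisualAsset("shopping", "Emerald velvet sofa, front view",
                    "https://www.example.com/images/"),
    ]
    for intent, items in group_by_intent(assets).items():
        print(intent)
        for asset in items:
            print(" ", img_tag(asset))
            print(" ", json.dumps(image_object(asset)))
```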


Strengthen structured data and feeds

- Implement Product, Offer, AggregateRating, HowTo, FAQPage, and Event schema where relevant.

- Keep feeds fresh: Merchant Center product feeds, local inventory availability, and event ticketing data. Industry coverage, including Search Engine Land’s Sept 30, 2025 analysis of visual responses, links the visual update to the scale and refresh cadence of Google’s Shopping Graph.
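Below is a minimal sketch of Product markup with a nested Offer and AggregateRating, plus a freshness check for feed records. The product details, the `stale_skus` helper, and the one-day threshold in the example are assumptions for illustration. Merchant Center has its own feed specification and refresh rules, which this code does not model.

```python
import json
from datetime import datetime, timedelta, timezone


def product_jsonld(name: str, sku: str, image_url: str, page_url: str,
                   price: float, currency: str, in_stock: bool,
                   rating_value: float, review_count: int) -> dict:
    """Build a schema.org Product with a nested Offer and AggregateRating."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "image": image_url,
        "offers": {
            "@type": "Offer",
            "url": page_url,
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            # Availability uses schema.org enumeration URLs.
            "availability": ("https://schema.org/InStock" if in_stock
                             else "https://schema.org/OutOfStock"),
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }


def stale_skus(last_updated: dict[str, datetime], now: datetime,
               max_age: timedelta) -> list[str]:
    """Return SKUs whose feed record was last refreshed more than max_age ago."""
    return sorted(sku for sku, ts in last_updated.items() if now - ts > max_age)


if __name__ == "__main__":
    product = product_jsonld(
        name="Emerald Velvet Sofa", sku="SOFA-001",
        image_url="https://www.example.com/images/emerald-velvet-sofa.jpg",
        page_url="https://www.example.com/sofas/emerald-velvet",
        price=1299, currency="USD", in_stock=True,
        rating_value=4.6, review_count=212,
    )
    print(json.dumps(product, indent=2))

    # Hypothetical last-refresh timestamps per SKU.
    now = datetime.now(timezone.utc)
    feed = {"SOFA-001": now - timedelta(hours=3), "LAMP-007": now - timedelta(days=4)}
    print(stale_skus(feed, now, max_age=timedelta(days=1)))
```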


Align content to conversational refinement

- Design pages for natural follow-ups (filters, modifiers, comparisons). Support voice and image-first entry points.

- Use internal linking and on-page UX to guide iterative exploration.


Establish editorial governance and accuracy

- Distinguish facts from predictions; add update notes when regions/languages or partner integrations change.

- Maintain an internal change-log tied to public claims and schema adjustments.
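One way to keep that change-log honest is to store each public claim with its kind (fact or prediction), its source, and the date it was last verified, then flag entries that are due for a re-check. The sketch below uses hypothetical names (`ClaimRecord`, `needs_reverification`, `log_update`) and an arbitrary 30-day window; adjust both to your own editorial process.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum


class ClaimKind(Enum):
    FACT = "fact"              # confirmed by a cited, dated source
    PREDICTION = "prediction"  # editorial expectation, not yet confirmed


@dataclass
class ClaimRecord:
    claim: str
    kind: ClaimKind
    source: str                 # e.g. "Google Search Blog, Sept 30, 2025"
    verified_on: date
    notes: list[str] = field(default_factory=list)


def needs_reverification(records: list[ClaimRecord], today: date,
                         max_age_days: int = 30) -> list[ClaimRecord]:
    """Return claims whose last verification is older than max_age_days."""
    return [r for r in records if (today - r.verified_on).days > max_age_days]


def log_update(record: ClaimRecord, today: date, note: str) -> None:
    """Append a dated update note and mark the claim as re-verified today."""
    record.notes.append(f"{today.isoformat()}: {note}")
    record.verified_on = today


if __name__ == "__main__":
    claims = [
        ClaimRecord("Visual update rolling out in English in the U.S.",
                    ClaimKind.FACT, "Google Search Blog, Sept 30, 2025", date(2025, 9, 30)),
        ClaimRecord("Broader language support expected",
                    ClaimKind.PREDICTION, "Editorial", date(2025, 8, 25)),
    ]
    for stale in needs_reverification(claims, today=date(2025, 10, 2)):
        print("Re-check:", stale.claim)
```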

Extended reading: For deeper methods on Generative Engine Optimization and AI-driven visibility across platforms, explore the Geneo blog.

Measurement beyond clicks: a practical framework

With AI Mode compressing the path to completion, clicks alone underrepresent brand influence.

- Answer presence and citation share: Track how often your brand is referenced in AI Mode answers and your share among cited sources.

- Link placement and interaction cues: Note whether images/cards link to your pages, and where they appear within the panel.

- Sentiment of mentions: Monitor tone in AI answers and across platforms (positive, neutral, negative). For cross-platform benchmarks of how cited sources vary, see examples such as Geneo’s GDPR fines 2025 query report.

- Assisted conversions via agentic flows: Attribute reservations or ticket purchases supported by AI Mode modules when possible; use UTMs and server-side tagging to capture indirect influence.

- Coverage by region/language: Log where AI Mode is active for your audience. Several outlets reported September language expansions beyond English; treat specifics as evolving and verify before claiming.
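The first three metrics can be computed from AI Mode panels you capture manually or with tooling. The sketch below assumes a hypothetical `PanelCapture` record that holds the normalized domains cited in each answer and a sentiment label assigned during review. It defines answer presence as the share of captured panels that cite your domain, and citation share as your citations divided by all citations across those panels. These are working definitions for illustration, not metrics Google reports.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class PanelCapture:
    query: str
    cited_domains: list[str]        # normalized domains cited in the AI answer
    brand_sentiment: Optional[str]  # "positive" | "neutral" | "negative", None if not mentioned


def presence_rate(captures: list[PanelCapture], domain: str) -> float:
    """Fraction of captured panels that cite the domain at least once."""
    if not captures:
        return 0.0
    hits = sum(1 for c in captures if domain in c.cited_domains)
    return hits / len(captures)


def citation_share(captures: list[PanelCapture], domain: str) -> float:
    """The domain's citations as a fraction of all citations across the panels."""
    total = sum(len(c.cited_domains) for c in captures)
    if total == 0:
        return 0.0
    ours = sum(c.cited_domains.count(domain) for c in captures)
    return ours / total


def sentiment_mix(captures: list[PanelCapture]) -> Counter:
    """Count sentiment labels for panels that mention the brand."""
    return Counter(c.brand_sentiment for c in captures if c.brand_sentiment is not None)


if __name__ == "__main__":
    # Hypothetical captures for illustration.
    captures = [
        PanelCapture("maximalist bedroom ideas", ["example.com", "other.org"], "positive"),
        PanelCapture("velvet sofa under $1500", ["other.org"], None),
        PanelCapture("best emerald sofa", ["example.com", "example.com", "x.net"], "neutral"),
    ]
    print(presence_rate(captures, "example.com"))
    print(citation_share(captures, "example.com"))
    print(sentiment_mix(captures))
```

With these three captures, `example.com` appears in two of three panels (presence 2/3) and accounts for 3 of 6 citations (share 0.5).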

Example workflow: monitoring AI Mode citations and sentiment

You can set up a neutral monitoring loop across AI Mode, AI Overviews, and other generative engines:

- Identify priority queries (inspiration, shopping, local services).

- Capture AI Mode panels and cited sources weekly.

- Track sentiment and citation share over time.

- Correlate with feed updates and schema changes.
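For the last step, a simple before/after comparison around a feed or schema change is often enough to spot a shift worth investigating. The sketch below assumes you already have weekly citation-share values keyed by week-start date, and it uses a hypothetical `before_after` helper. It compares means over equal windows on either side of the change. It is not a causal or statistical significance test, and other changes in the same weeks can confound it.

```python
from datetime import date
from statistics import mean
from typing import Optional


def before_after(weekly_share: dict[date, float], change_date: date,
                 window_weeks: int = 4) -> Optional[tuple[float, float, float]]:
    """Compare mean weekly citation share before and after a change date.

    Returns (mean_before, mean_after, difference), or None if either window is empty.
    """
    ordered = sorted(weekly_share.items())
    before = [v for d, v in ordered if d < change_date][-window_weeks:]
    after = [v for d, v in ordered if d >= change_date][:window_weeks]
    if not before or not after:
        return None
    m_before, m_after = mean(before), mean(after)
    return m_before, m_after, m_after - m_before


if __name__ == "__main__":
    # Hypothetical weekly citation-share observations.
    weekly = {
        date(2025, 9, 1): 0.10,
        date(2025, 9, 8): 0.12,
        date(2025, 9, 15): 0.20,  # week a product feed update shipped
        date(2025, 9, 22): 0.22,
    }
    print(before_after(weekly, change_date=date(2025, 9, 15), window_weeks=2))
```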

In practice, a platform like Geneo can be used to audit brand visibility across Google’s AI experiences and other engines, including citation tracking and sentiment analysis. Disclosure: Geneo is our product.

Governance for evolving facts and comparative context

- Regions and languages: Google’s posts describe an ongoing expansion; the Sept 30 visual update was rolling out in English in the U.S. that week. Reputable media reported additional languages in September 2025; cite them with dates and treat them as subject to change.

- Partners and subscriptions: Google’s Aug 21 post confirms reservations and upcoming local services and event tickets. Media coverage lists partners (e.g., OpenTable and ticketing platforms) and notes potential premium tie-ins; verify before operationalizing.

- Access and UI: Describe AI Mode as a dedicated tab/experience within Search; availability can vary by account and region.

For interface distinctions and optimization advice, see Conductor’s 2025 explainer on AI Mode vs. AI Overviews and 9to5Google’s Sept 30, 2025 visual update coverage.

What’s next: predictions and immediate actions

Near-term, expect broader language/region support, more agentic integrations (local services and tickets), and richer visual understanding spanning photos and short videos. Measurement telemetry in Google’s tools may evolve, but plan for third-party tracking of answer presence and sentiment.

Immediate actions:

- Run an entity and schema audit for priority categories.

- Map visual intents and refresh images with metadata.

- Ensure product/local/event feeds are current and accessible.

- Set up weekly monitoring and a change-log.
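For the entity and schema audit, a quick first pass is to list which schema.org types a page already declares in JSON-LD and compare them with the types you expect for that page template. The sketch below uses only Python's standard-library `html.parser` and `json`. The function names and `expected` sets are assumptions. It does not validate properties or read microdata/RDFa markup, so treat it as a complement to Google's Rich Results Test rather than a replacement.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw contents of <script type="application/ld+json"> elements."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and ("type", "application/ld+json") in attrs:
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def _collect_types(node, found: set[str]) -> None:
    """Recursively gather every @type, including nested ones (e.g. Offer) and @graph items."""
    if isinstance(node, list):
        for child in node:
            _collect_types(child, found)
    elif isinstance(node, dict):
        t = node.get("@type")
        if isinstance(t, str):
            found.add(t)
        elif isinstance(t, list):
            found.update(x for x in t if isinstance(x, str))
        for value in node.values():
            _collect_types(value, found)


def schema_types(html_text: str) -> set[str]:
    """Return all schema.org types declared in a page's JSON-LD blocks."""
    parser = JsonLdExtractor()
    parser.feed(html_text)
    parser.close()
    found: set[str] = set()
    for block in parser.blocks:
        try:
            _collect_types(json.loads(block), found)
        except json.JSONDecodeError:
            continue  # malformed block; a fuller audit should report it
    return found


def missing_types(html_text: str, expected: set[str]) -> set[str]:
    """Types expected for this page template but not found in its JSON-LD."""
    return expected - schema_types(html_text)
```

For example, `missing_types(product_page_html, {"Product", "Offer", "AggregateRating"})` returns whichever of those types the page does not declare.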

If you need a consolidated view of AI citations and sentiment across Google AI Mode and other engines, consider integrating a brand intelligence workflow that includes Geneo.

Source references and change-log discipline

- Official context: Google Search Blog (Aug 6, 2025; Aug 21, 2025; Sept 30, 2025).

- Industry analysis: Conductor Academy (2025), Semrush Blog (2025), Search Engine Land (Sept 30, 2025), 9to5Google (Sept 30, 2025).

Change-log

- Oct 2, 2025: Initial publication reflecting Sept 30 visual update and Aug agentic features; added governance notes on languages/partners and measurement framework.