Goodie AI Review 2025: Real Outcome Snapshots & AEO Comparison

Hands-on Goodie AI review (2025): Is this AEO platform delivering AI search visibility? See pilot results, real workflow impact, pros, cons, & tool comparisons.

If you’ve been side‑eyeing Answer Engine Optimization as “AI‑washed SEO,” you’re not alone. I went into our Goodie AI pilot with similar doubts—and came out with measured gains that changed our weekly workflow. This review focuses on what Goodie AI actually does for AI search visibility, how it fits alongside GEO/AEO and traditional SEO tools, and the trade‑offs you should expect.

Disclosure: Our team builds and uses GEO/AEO tooling (Geneo) and regularly evaluates AEO platforms. We have no sponsorship or affiliate arrangement with Goodie AI. Where we reference competitor tools, it’s to help decision‑makers compare options.

What Goodie AI Is (and isn’t) in the AEO stack

Goodie positions itself as a Generative/Answer Engine Optimization platform: track brand mentions and citations across AI engines, benchmark competitors, and turn findings into prioritized actions. The company’s feature pages describe an end‑to‑end generative engine optimization platform with monitoring and optimization across models like ChatGPT, Gemini, Claude, Perplexity, DeepSeek, Google AI Overview/AI Mode, and more, as outlined in the GEO feature set.

Where it’s distinct from classic SEO suites: Goodie’s emphasis is on AI answer inclusion, citation presence, and sentiment—not just SERPs, backlinks, or keyword volumes. If you’re still mapping AEO vs GEO, this short primer can help frame the differences: Answer Engine Optimization (AEO): Beyond SEO in 2025.

Our methodology: 6‑week pilot, ~50 priority queries

We ran a focused test to understand whether Goodie moves the needle in realistic conditions.

Scope: ~50 queries tied to high‑intent topics and core brand entities across B2B SaaS.

Engines/models: ChatGPT, Perplexity, Gemini AI Overview/AI Mode; spot‑checks on Claude and DeepSeek.

Cadence: Weekly reviews; daily checks on a subset of queries.

Changes made: Entity enrichment (schema/structured data updates), answer‑targeted content briefs, consolidation of duplicative resources, and basic prompt‑style adjustments.

Attribution note: We tracked changes and timestamps, but AI engine variability means causality is directional, not absolute.
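As a rough illustration of the entity enrichment listed under "Changes made," here is a minimal sketch of generating Organization markup as JSON-LD. Every value (company name, URLs, description) is a placeholder rather than data from our pilot, and the helper name `to_jsonld_script` is hypothetical.

```python
import json

# Placeholder entity data; replace with your own brand facts.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example SaaS Co",
    "url": "https://www.example.com",
    "sameAs": [
        "https://www.linkedin.com/company/example-saas-co",
        "https://github.com/example-saas-co",
    ],
    "description": "Example SaaS Co builds workflow software for finance teams.",
}


def to_jsonld_script(entity: dict) -> str:
    """Wrap an entity dict in the script tag used to embed JSON-LD in HTML."""
    body = json.dumps(entity, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


snippet = to_jsonld_script(organization)
print(snippet)
```

Keeping the entity in one dictionary makes it easier to reuse the same name, URL, and profile links on every page, which is the consistency the pilot depended on.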

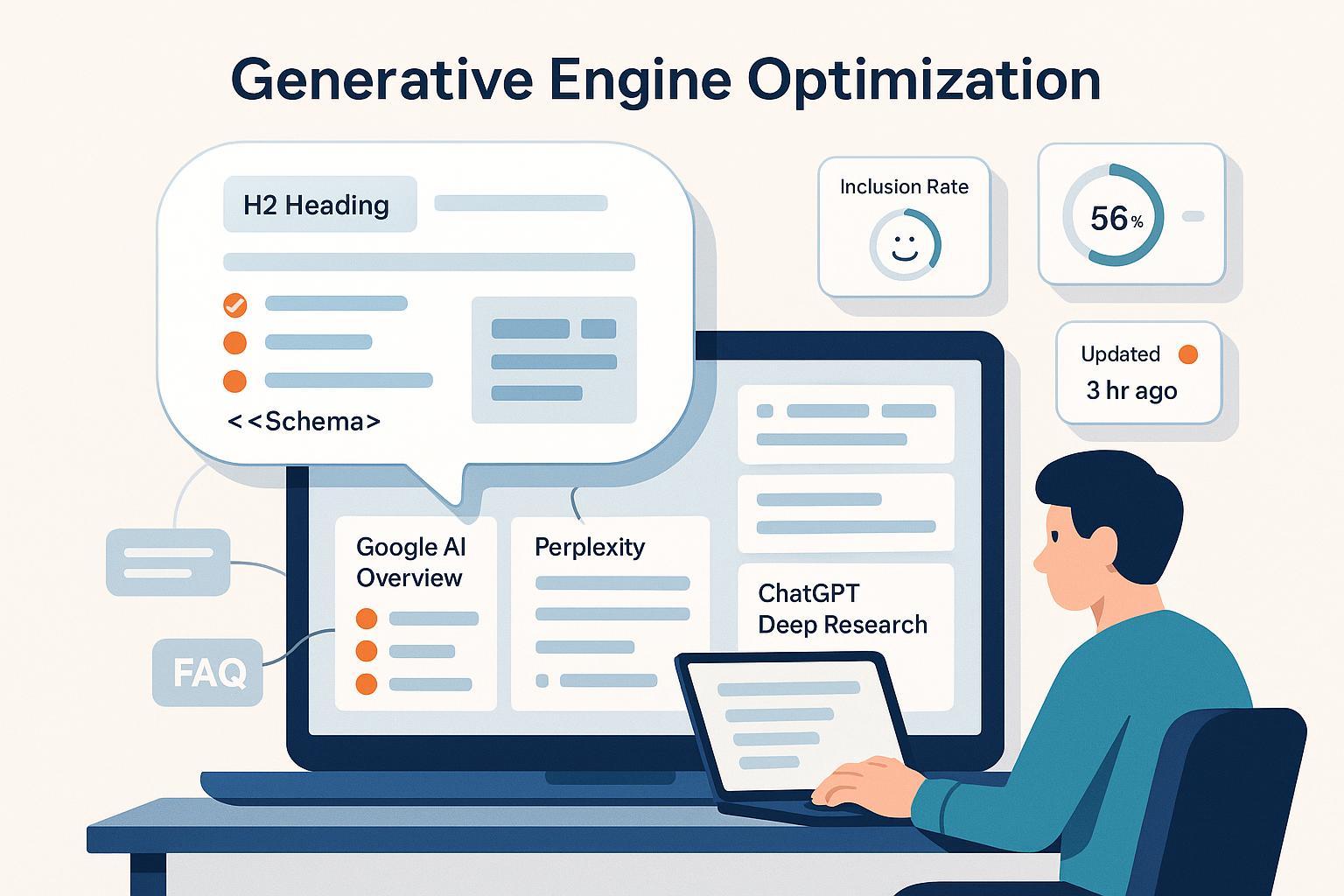

For background on GEO concepts and metrics we use in AI search visibility, see: Generative Engine Optimization (GEO) Beginner Guide 2025 and AI Search Visibility Score Definition.

The first 6 weeks: outcome snapshots

Under this setup, we observed:

ChatGPT visibility (presence in cited sources/answer panels): +28% relative lift.

Perplexity inclusion rate (top panel/linked sources across tracked queries): from ~12% to ~31%.

Gemini AI Overview mentions: +15 percentage points in the share of tracked queries where the brand was named/cited.

Sentiment in AI answers: improved by ~0.3 on our internal −1 to +1 scale, moving from mixed/neutral to net‑positive.

These numbers are not guarantees; they reflect our stack, content quality, and the specific queries we tracked. Still, they were enough to shift us from skeptics to pragmatic adopters.
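The snapshots above mix two kinds of change: relative lift (the ChatGPT figure) and percentage-point movement (the Gemini figure). The short sketch below shows the difference using the Perplexity inclusion rates we reported (~12% to ~31%). The function names are ours, chosen for illustration.

```python
def percentage_point_change(before: float, after: float) -> float:
    """Absolute change between two rates, expressed in percentage points."""
    return (after - before) * 100


def relative_lift(before: float, after: float) -> float:
    """Change relative to the baseline rate, expressed as a percentage."""
    if before == 0:
        raise ValueError("relative lift is undefined when the baseline is zero")
    return (after - before) / before * 100


# Perplexity inclusion rate from the pilot: ~12% before, ~31% after.
before, after = 0.12, 0.31
pp = percentage_point_change(before, after)
rel = relative_lift(before, after)
print(f"{pp:.0f} percentage points, {rel:.0f}% relative lift")
# prints "19 percentage points, 158% relative lift"
```

The same movement reads as +19 points or roughly +158%, so it is worth stating which measure a figure uses before comparing engines.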

Feature deep dive: where Goodie helped—and where it didn’t

Competitor benchmarking

This is the feature that felt most “real‑world useful.” Goodie’s research and platform tooling emphasize domain citation shares and competitive gaps. For example, their 2025 study on citation patterns aggregates millions of AI‑search links, providing context on who gets cited where. If you need a framing for competitive citations by industry, see Goodie’s Most Cited B2B SaaS Domains in AI Search (2025). In our pilot, benchmarking helped identify entity gaps we could feasibly close within weeks rather than months.

Agentic optimization hub

Goodie’s “optimization hub” ties findings to prioritized actions. Official pages for the GEO suite describe monitoring plus action layers inside the same control plane—useful for teams that want weekly direction rather than just dashboards. While we appreciated the prescriptive flow, we still needed editorial judgment to decide which recommendations fit brand positioning and what to ship first.

Multilingual and regional monitoring

Goodie claims region and language filters in its visibility tooling, which is essential for multi‑market teams. The AI Visibility Monitoring page references hourly updates for accuracy and the ability to filter by region and sort by language. In practice, we found coverage adequate for testing, but we couldn’t locate a public master list of supported languages and regions—plan on validating coverage during onboarding.

Sentiment analysis and narrative control

Sentiment in AI answers matters because many engines summarize brand pros/cons. Goodie references sentiment tracking within its visibility features, and we saw enough signal to inform messaging improvements. Expect directional guidance rather than courtroom‑grade precision—use it to spot negative narratives early and to guide content updates.

Traffic analytics and attribution

Goodie’s AI Search Traffic Analytics & Attribution page states the ability to monitor AI‑driven sessions, conversions, and revenue, with GA4 integration mentioned. We found attribution insights helpful at a directional level, but tying specific content changes to visibility lifts remains a shared industry challenge. Treat attribution as “confidence‑weighted,” not absolute.

AI Agent Analytics

For teams running Vercel/Cloudflare, Goodie’s AI Agent Analytics claims real‑time insights via edge logs and request metadata with minimal setup. If your site stack aligns, this can improve freshness and observability. If not, expect variability in update cadence and plan for periodic manual checks.
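If your stack does not match, one way to run the periodic manual checks is to scan your own request logs for AI agent user-agent strings. The sketch below is not Goodie's method. The token list covers a few agent names that vendors have publicly documented, and you should verify it against each vendor's current documentation. The log row shape (`user_agent`, `path`) is an assumption.

```python
from collections import Counter
from typing import Iterable, Optional

# Assumed token list; confirm against each vendor's published crawler docs.
AI_AGENT_TOKENS = (
    "GPTBot",
    "ChatGPT-User",
    "OAI-SearchBot",
    "PerplexityBot",
    "ClaudeBot",
)


def classify_user_agent(user_agent: str) -> Optional[str]:
    """Return the first known AI agent token found in the user agent, if any."""
    lowered = user_agent.lower()
    for token in AI_AGENT_TOKENS:
        if token.lower() in lowered:
            return token
    return None


def count_ai_hits(log_rows: Iterable[dict]) -> Counter:
    """Count requests per (agent, path) for rows that match a known token."""
    counts: Counter = Counter()
    for row in log_rows:
        agent = classify_user_agent(row.get("user_agent", ""))
        if agent is not None:
            counts[(agent, row["path"])] += 1
    return counts


# Hypothetical log rows for illustration.
rows = [
    {"user_agent": "Mozilla/5.0 (compatible; GPTBot/1.0)", "path": "/pricing"},
    {"user_agent": "Mozilla/5.0 (compatible; PerplexityBot/1.0)", "path": "/pricing"},
    {"user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)", "path": "/pricing"},
    {"user_agent": "Mozilla/5.0 (compatible; GPTBot/1.0)", "path": "/pricing"},
]
counts = count_ai_hits(rows)
print(counts)
```

User-agent strings can be spoofed, so treat these counts as a directional signal rather than verified agent traffic.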

Data freshness

We observed occasional lags (24–48 hours) on some engines. Goodie’s public pages do not define a universal refresh rate. Under our setup, this was acceptable for weekly planning but frustrating for daily micro‑tests.

Setup & onboarding: where friction shows up

There’s no step‑by‑step public onboarding guide we could find. Documentation exists across feature pages and blog posts, but detailed setup flows (connecting analytics, configuring per‑engine monitoring, standardizing entity inputs) were handled during demo/onboarding. Practical tips from our experience:

Start with a pilot subset: 40–60 high‑intent queries across 2–3 engines.

Lock your entity baseline: ensure consistent schema/structured data; this explainer is helpful: Structured Data & Schema Markup Best Practices for AI Search.

Weekly cadence: assign one owner for prioritization, one for content briefs, and one for analytics.

Change log discipline: note each entity/content update and timestamp it.
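For the change log tip, a spreadsheet works, but a small structured record makes it easier to line up changes against later visibility readings. Here is a minimal sketch. The field names and the `changes_before` helper are hypothetical, and the sample entries are invented.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


@dataclass
class ChangeLogEntry:
    url: str
    change_type: str      # e.g. "schema", "content_brief", "consolidation"
    summary: str
    queries: List[str]    # tracked queries this change is expected to affect
    timestamp: datetime   # when the change went live (UTC)


def changes_before(entries: List[ChangeLogEntry], observed_at: datetime) -> List[ChangeLogEntry]:
    """Return changes shipped at or before an observation, oldest first."""
    shipped = [e for e in entries if e.timestamp <= observed_at]
    return sorted(shipped, key=lambda e: e.timestamp)


# Invented entries for illustration.
log = [
    ChangeLogEntry(
        url="https://www.example.com/pricing",
        change_type="schema",
        summary="Added Organization and Product markup",
        queries=["example saas pricing"],
        timestamp=datetime(2025, 3, 10, 9, 0, tzinfo=timezone.utc),
    ),
    ChangeLogEntry(
        url="https://www.example.com/guide",
        change_type="consolidation",
        summary="Merged two overlapping guides",
        queries=["example saas setup"],
        timestamp=datetime(2025, 3, 3, 14, 30, tzinfo=timezone.utc),
    ),
]

reading_time = datetime(2025, 3, 5, 0, 0, tzinfo=timezone.utc)
candidates = changes_before(log, reading_time)
print([c.summary for c in candidates])
```

Filtering by timestamp only narrows down which changes could have influenced a reading; it does not establish that any of them did.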

Comparisons: Goodie vs Geneo vs traditional SEO

Disclosure reminder: We build in the GEO/AEO category (Geneo). The comparisons below aim to help buyers evaluate fit; no CTAs.

Goodie vs Geneo: In our experience, Goodie excelled at competitor benchmarking granularity and agentic “do‑this‑next” action flows. Geneo tends to lead on content roadmapping, prompt history insights, and end‑to‑end GEO planning for teams that want a structured, multi‑quarter content program. If you’re defining the foundations of GEO and the metrics that matter, this resource can help: Ultimate Guide to Generative Engine Optimization (GEO) for AI Search.

Goodie vs traditional SEO suites (e.g., Ahrefs/Semrush): Traditional tools remain superior for backlinks, keyword volumes, and classic SERP diagnostics. Goodie is purpose‑built to track AI answer inclusion, citations, and sentiment—complementary rather than substitutive if you still rely on organic SERP growth.

Pricing and ROI considerations

Goodie does not publish pricing on its site; buyers should expect quote‑based plans. Several third‑party sources cite indicative ranges, but treat them cautiously. For instance, SelectHub lists “pricing starts at $399 monthly” in its Goodie AI software profile (2025), and Bermawy’s review suggests mid‑market usage “around $495/mo depending on prompts/models/regions” in his 2025 GEO platform review. Confirm current pricing and inclusions directly with Goodie.

ROI modeling tip: Define a 6‑week pilot with target queries, engines, and actions. Estimate value from the visibility lift you measure and any AI‑search sessions you can attribute, discounted by how confident you are in that attribution. Goodie’s case studies can provide directional inspiration. For example, the SteelSeries narrative claims category‑leading AI search visibility and a “3.2x conversions” lift attributed to AI search in their case study (2025). Treat case‑study outcomes as directional inputs for your own modeling, not guarantees.
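One way to make the modeling tip concrete is a confidence-weighted estimate. In this minimal sketch every input is a made-up placeholder: the session counts, conversion rate, value per conversion, pilot cost, and confidence factor are not pilot results or Goodie pricing. The `pilot_roi` function is our own illustration, not a vendor formula.

```python
def pilot_roi(
    ai_sessions_before: int,
    ai_sessions_after: int,
    conversion_rate: float,
    value_per_conversion: float,
    pilot_cost: float,
    confidence: float = 0.5,
) -> float:
    """Return ROI as a ratio: (discounted incremental value - cost) / cost."""
    if pilot_cost <= 0:
        raise ValueError("pilot_cost must be positive")
    if not 0 <= confidence <= 1:
        raise ValueError("confidence must be between 0 and 1")
    # Ignore declines; this sketch only values incremental sessions.
    incremental_sessions = max(ai_sessions_after - ai_sessions_before, 0)
    incremental_value = (
        incremental_sessions * conversion_rate * value_per_conversion * confidence
    )
    return (incremental_value - pilot_cost) / pilot_cost


# Placeholder inputs chosen so the pilot roughly breaks even.
roi = pilot_roi(
    ai_sessions_before=400,
    ai_sessions_after=520,
    conversion_rate=0.02,
    value_per_conversion=500.0,
    pilot_cost=600.0,
    confidence=0.5,
)
print(f"ROI ratio: {roi:.2f}")
```

The confidence factor is where directional attribution shows up: lowering it from 0.5 to 0.25 halves the value credited to the pilot, which is a useful sensitivity check before committing to a longer contract.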

Who should (and shouldn’t) buy

Buy if:

You need to monitor and improve presence across ChatGPT, Perplexity, Gemini AI Overview/AI Mode, and Claude, with competitor benchmarking you can act on.

Your team can commit to a weekly cadence and maintain a clean entity/structured data baseline.

You’re comfortable with directional attribution and occasional data freshness variability.

Consider alternatives, or hold off for now, if:

You mainly need backlinks, keyword research, and SERP diagnostics—that’s classic SEO territory.

You require a fully transparent pricing page and a public, stepwise onboarding manual before evaluation.

Your team cannot support change logs and content/entity updates within a 4–6 week window.

Scoring rubric (evidence‑bound; total = 100)

Coverage & Data Freshness (18/20): Broad model coverage; real‑time edge insights for compatible stacks; no universal cadence published.

Actionability & Workflow Fit (18/20): Strong “do‑this‑next” flows; requires editorial judgment.

Benchmarking & Insights Quality (14/15): Competitive citation studies and practical gap detection; industry coverage varies by study.

Sentiment & Narrative Control (8/10): Useful directional signal; precision varies.

Usability & Onboarding (7/10): Demo/onboarding needed; limited public setup docs.

Attribution & Reporting (8/10): GA4 integration and revenue/session views; causality remains directional.

Value & Pricing Transparency (7/10): Quote‑based; third‑party price signals exist but must be verified.

Security & Compliance (5/5): SOC 2 Type II and SSO/SAML documented on enterprise/model pages; details like SCIM/ISO not publicly specified.

Total: 85/100.
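For readers who want to check the arithmetic or reweight the rubric for their own priorities, the scores above can be tallied in a few lines. The dictionary simply restates the category scores and maximums listed in this section.

```python
# (score, maximum) per category, copied from the rubric above.
rubric = {
    "Coverage & Data Freshness": (18, 20),
    "Actionability & Workflow Fit": (18, 20),
    "Benchmarking & Insights Quality": (14, 15),
    "Sentiment & Narrative Control": (8, 10),
    "Usability & Onboarding": (7, 10),
    "Attribution & Reporting": (8, 10),
    "Value & Pricing Transparency": (7, 10),
    "Security & Compliance": (5, 5),
}

total = sum(score for score, _ in rubric.values())
maximum = sum(cap for _, cap in rubric.values())
print(f"Total: {total}/{maximum}")
# prints "Total: 85/100"
```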

Evidence notes:

GEO features and platform scope: Generative Engine Optimization Platform

AI Overview tooling: AI Overview Optimization + Visibility Platform

Visibility monitoring: Monitor Your Brand Visibility on AI Search

AI Agent Analytics: AI Crawler & Agent Analytics

Traffic & attribution: AI Search Traffic Analytics & Attribution

Case study: SteelSeries (2025)

Price signals: SelectHub 2025 profile and Bermawy’s 2025 review

A replicable 6‑week pilot playbook

Week 0–1: Define ~50 queries, baseline visibility across 3 engines, set up dashboards; normalize entities and schema.

Week 2–3: Implement 8–12 targeted content/entity updates; log changes precisely.

Week 4: Review competitor benchmarking; prioritize 5 next actions via the optimization hub.

Week 5: Validate sentiment shifts and inclusion changes; cross‑check at different times and regions.

Week 6: Assess visibility deltas and any AI‑search sessions/conversions; decide whether to expand to more queries/markets.

For a deeper operational foundation, this practitioner playbook offers useful guardrails: GEO Best Practices for AI Search Engines: 2025 Playbook.

Final verdict

Goodie AI delivered tangible, decision‑shaping visibility gains for us within six weeks, especially through competitor benchmarking and prioritized action flows. It’s not a replacement for classic SEO tooling; it’s a complementary layer for teams serious about winning AI answer inclusion and shaping brand narratives across LLMs.

Trade‑offs: onboarding requires hands‑on support; data freshness can vary by engine; pricing is quote‑based. If you can work within those constraints, Goodie is a credible AEO/GEO partner that turns AI‑search monitoring into weekly decisions—and, in the right hands, measurable momentum.