Why Generative Engine Optimization (GEO) Is the New SEO in 2025

GEO is overtaking SEO as AI answers dominate in 2025. See key stats, expert advice, and a practical playbook to protect your brand’s visibility.

Updated on 2025-10-12

The ground has shifted from ranking blue links to earning references inside AI-generated answers. As Google’s AI Overviews (AIO) and answer engines like Perplexity, Copilot, and ChatGPT Search absorb more queries, brands win visibility when models choose to summarize—and cite—their content. That discipline has a name: Generative Engine Optimization (GEO). Below is what’s driving the shift in 2025, how GEO differs from classic SEO, and a playbook to adapt without losing rigor.

What GEO is—and how it extends SEO

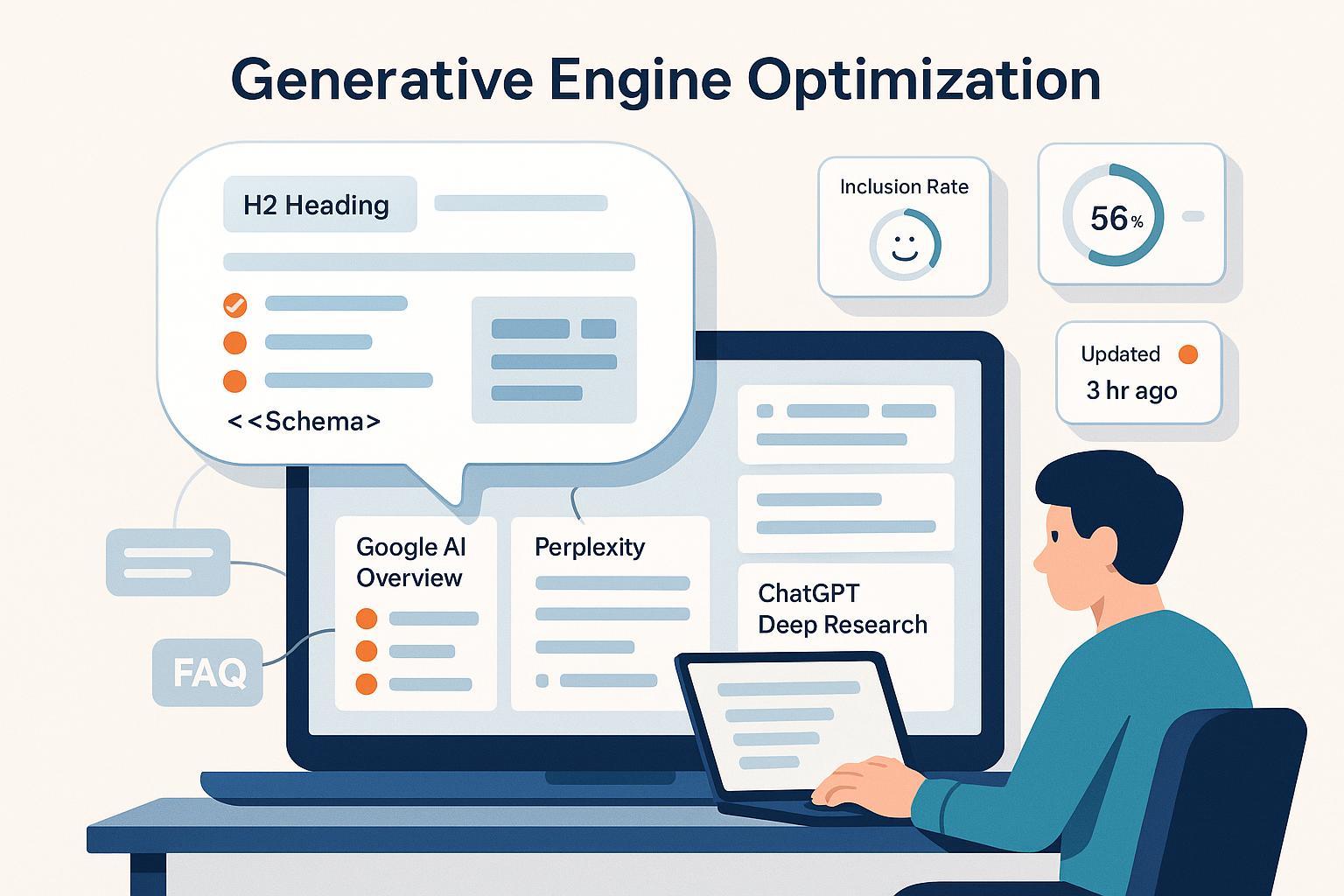

GEO is the practice of structuring content and entities so they are discoverable, trustworthy, and citable inside AI answers across engines. As a starting definition, see Search Engine Land's industry primer, What is generative engine optimization (GEO)? (2024), which frames GEO as optimizing for visibility in ChatGPT, Perplexity, Gemini, Copilot, and Google AI Overviews.

Key idea: GEO doesn’t replace SEO; it shifts the optimization target from “rank position on a SERP” to “reference-worthiness inside model-generated summaries.” Your technical hygiene, crawlability, and E‑E‑A‑T still matter—but the content must be meaning-dense, evidential, and easy for models to parse and quote.

Why 2025 is the tipping point

Two forces converged this year: the growing prevalence of AIO-style answers and measurable changes in click behavior. Google's own guidance, the third item below, shapes how to respond.

- Prevalence: In a U.S. desktop snapshot, Semrush reported AI Overviews appearing on 13.14% of queries in March 2025, up from 6.49% in January, roughly doubling in two months, with the rise concentrated in informational intent. See the methodology in the Semrush AI Overviews study (2025).

- Click behavior: Multiple 2025 datasets show organic CTR erosion where AIO appears. For example, Search Engine Land’s coverage of Seer and BrightEdge datasets reported new lows in organic CTR as AIO grew, with some segments seeing double‑digit declines year over year. See the synopsis in Google organic and paid CTRs hit new lows: Report — Search Engine Land (2025).

- Guidance from Google: Google’s official 2025 advice is to double down on people‑first content and Search Essentials—there’s no special “AIO markup” or formula. See Google Developers: Succeeding in AI search (2025).

The implication: If answers often satisfy the query before a click, your brand’s presence inside those answers becomes a first‑order distribution channel. GEO is how you earn that presence.

Engines don’t cite the same way—optimize accordingly

Generative engines vary in how they select, attribute, and display sources. Those differences change which tactics pay off.

- Source diversity varies. In April 2025 comparison research, SE Ranking found different duplicate‑domain rates across engines—indicating how widely each engine pulls from unique sources (lower duplicate rate = more diverse sourcing). See the cross‑engine snapshot in ChatGPT vs. Perplexity vs. Google vs. Bing: comparison research — SE Ranking (2025).

- Overlap with classic top 10 results differs. ToTheWeb's AI Search Optimization Guide (updated through Sept 2025) describes how Perplexity often overlaps with Google's top 10, while Google's Search Generative Experience (SGE), the predecessor to AI Overviews, historically pulled a large share of its sources from outside the top 10, broadening the pool of eligible sources. See AI Search Optimization Guide (ToTheWeb).

- Citation UX is heterogeneous. Perplexity and ChatGPT Search tend to show clickable citations inline or in a sources panel; AIO may show fewer links, often below the fold; Copilot blends citations with Bing results. In practice, formatting, evidence placement, and entity clarity can affect whether and how your brand is referenced.

Takeaway: Treat engines as distinct distribution surfaces. Validate what they quote for your priority topics and adapt formatting to increase citability where it matters most.
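To validate what engines quote for your own topics, a source-diversity check can be approximated in a few lines. This is a minimal sketch under a stated assumption: it defines the duplicate-domain rate as the share of citations whose domain already appeared in the same list, which may not match how the SE Ranking study computes its figure. The function names and sample URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Return the lowercase host of a URL, without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def duplicate_domain_rate(cited_urls: list[str]) -> float:
    """Share of citations whose domain already appeared in the list.

    Assumed definition for illustration only; lower means more diverse sourcing.
    """
    if not cited_urls:
        return 0.0
    counts = Counter(domain_of(u) for u in cited_urls)
    repeats = sum(n - 1 for n in counts.values())
    return repeats / len(cited_urls)


# Hypothetical citations collected by hand for one engine and one topic.
sample = [
    "https://www.example.com/guide",
    "https://example.com/pricing",
    "https://docs.example.org/faq",
    "https://blog.example.net/post",
]
print(f"duplicate-domain rate: {duplicate_domain_rate(sample):.0%}")  # 25%
```

Running the same check per engine on the same query set gives a rough, like-for-like view of how widely each one sources.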

A practical GEO playbook for 2025

- Anchor every claim to verifiable evidence

  - Use short “claim blocks” that bind a statement to a named source and date, ideally within the same paragraph.

  - Prefer first‑party data and primary sources; for external references, cite the canonical page and surface the year in text.

- Write for entities, not just keywords

  - Introduce the core entity early (organization/product/topic) and connect it to authoritative graphs where appropriate (e.g., Wikipedia/Wikidata entries, official profiles).

  - Disambiguate synonyms and acronyms in prose to help models map concepts correctly.

- Add rich, clean structure that models can parse

  - Use schema.org markup consistently (Organization, Product, HowTo, FAQPage, Article) and keep it truthful, minimal, and valid.

  - Co‑locate key facts near their schema counterparts to avoid contradictions.

Example Article schema stub for a GEO explainer page:

```json
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "Generative Engine Optimization (GEO): 2025 Guide",
  "datePublished": "2025-10-12",
  "author": {
    "@type": "Organization",
    "name": "Editorial Team"
  },
  "mainEntity": {
    "@type": "Thing",
    "name": "Generative Engine Optimization",
    "sameAs": [
      "https://searchengineland.com/what-is-generative-engine-optimization-geo-444418"
    ]
  }
}
```

- Engineer for citations, not just completeness

  - Position concise, quotable definitions and bulletproof stats near the top of sections.

  - Use explicit attributions in text (“In 2025, Semrush found…”) so engines can lift a self‑contained, source‑backed sentence.

- Build third‑party corroboration and community signals

  - Encourage reputable third‑party coverage and references; align facts across your site, social bios, and high‑authority profiles.

  - Participate in user‑generated arenas that engines surface (e.g., niche forums and high‑signal threads). For tactics that help brands engage constructively in relevant Reddit communities, see this field guide: Reddit communities and AI search citations: best practices.

- Refresh cadence and volatility control

  - Expect answer engines to evolve weekly. Establish a monthly content refresh for high‑impact topics and hotfix when engines change citation UX or guidance updates land.

  - Maintain an update log on volatile pages with date‑stamped stat revisions.
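One way to keep claim blocks consistent is to store each claim as structured data and render the attributed sentence from it. The sketch below is illustrative only: the `Claim` class and its fields are hypothetical names, and the sentence template simply mirrors the “In 2025, Semrush found…” pattern from the playbook.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    """A statement bound to a named source and year (hypothetical structure)."""
    statement: str  # the finding, phrased to follow "found that"
    source: str     # named source
    year: int       # year surfaced in the text

    def render(self) -> str:
        """Return one self-contained, attributed sentence."""
        return f"In {self.year}, {self.source} found that {self.statement}."


claim = Claim(
    statement="AI Overviews appeared on 13.14% of queries in a March U.S. desktop snapshot",
    source="Semrush",
    year=2025,
)
print(claim.render())
```

Keeping claims in this form also makes date-stamped revisions easier to log, because a changed figure shows up as a changed record rather than an edit buried in prose.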

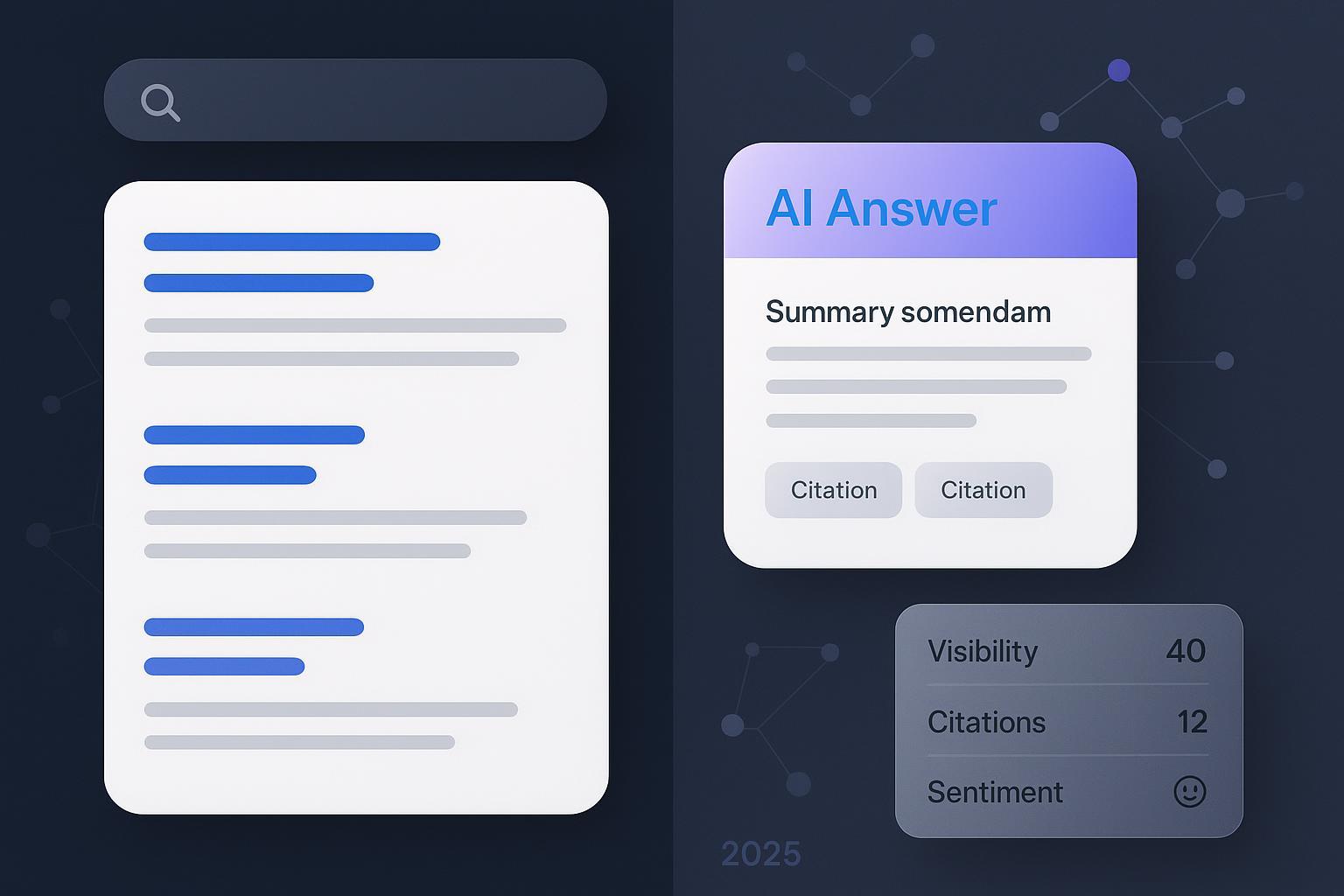

Measurement and governance for GEO

Your leadership won’t fund what you can’t measure. Establish a GEO dashboard aligned to how engines actually behave.

Core KPIs

- AI answer visibility rate: Percentage of tracked queries where your brand/page is cited in the answer.

- Citation count and quality: Number of distinct citations per engine and whether the reference is clickable.

- Sentiment of AI mentions: Positive/neutral/negative tone in the generated answer.

- Downstream CTR: Where citations are linked (e.g., Perplexity, Copilot), measure clicks and engagement.

- Conversion proxies: Demo signups, trials, or newsletter signups from pages most frequently cited.
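The first two KPIs reduce to simple counting once answer checks are logged. This is a minimal sketch, assuming each check records the engine, the query, whether the brand or page was cited, and whether the citation was clickable. The `AnswerCheck` record and the sample rows are hypothetical, not the output of any particular monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class AnswerCheck:
    """One check of an engine's answer to a tracked query (hypothetical record)."""
    engine: str
    query: str
    cited: bool      # was our brand or page cited in the answer?
    clickable: bool  # was that citation a clickable link?


def visibility_rate(checks: list[AnswerCheck]) -> float:
    """Percentage of tracked checks in which the brand/page was cited."""
    if not checks:
        return 0.0
    return 100.0 * sum(c.cited for c in checks) / len(checks)


def citations_by_engine(checks: list[AnswerCheck]) -> dict[str, tuple[int, int]]:
    """Per engine: (total citations, clickable citations)."""
    totals: dict[str, tuple[int, int]] = {}
    for c in checks:
        cited, clickable = totals.get(c.engine, (0, 0))
        if c.cited:
            cited += 1
            clickable += int(c.clickable)
        totals[c.engine] = (cited, clickable)
    return totals


# Hypothetical weekly log for two engines and two queries.
checks = [
    AnswerCheck("perplexity", "what is geo", cited=True, clickable=True),
    AnswerCheck("perplexity", "geo vs seo", cited=False, clickable=False),
    AnswerCheck("aio", "what is geo", cited=True, clickable=False),
    AnswerCheck("aio", "geo vs seo", cited=True, clickable=True),
]
print(f"visibility rate: {visibility_rate(checks):.1f}%")  # 75.0%
print(citations_by_engine(checks))
```

Sentiment and downstream CTR need other inputs (a tone label per answer, analytics data per cited page), so they are left out of this sketch.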

Monitoring cadence

- Weekly: Track answer visibility, citation share, and sentiment shifts by topic cluster.

- Monthly: Roll up trends to exec‑level reporting and prioritize fixes for topics with high visibility but negative or inaccurate summaries.

- Event‑driven: When Google or engine providers update documentation or UX, run immediate spot checks on critical topics.

Tooling and workflow example

- Teams commonly pair GA4 and GSC with specialized AI‑answer monitoring. For a deeper walkthrough of setting up AI answer‑engine visibility and sentiment monitoring alongside your search stack, see this explainer: AI answer-engine visibility and sentiment monitoring.

- Many teams also use monitoring platforms that aggregate citations across ChatGPT, Perplexity, Copilot, and AI Overviews. One such platform is Geneo, which supports cross‑engine brand monitoring, citation tracking, and sentiment analysis. Disclosure: Geneo is our product.

Governance checklist

- Source of truth: Maintain a single, reviewed repository of claims, figures, and citations for your editors to reuse.

- QA before refresh: Validate that updated sections retain consistent facts across schema, copy, and media.

- Intake for hallucinations: Triage incorrect or harmful AI summaries; coordinate corrections by improving on‑site clarity, adding corroboration, and escalating feedback to engine providers if needed.
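The "QA before refresh" step can be partly automated. The following sketch assumes the page's JSON-LD and its visible copy have already been extracted as strings, and it flags schema fields whose values do not appear verbatim in the copy. The function name and the default field list are assumptions, and exact substring matching is deliberately crude: it will miss reformatted dates or paraphrased headlines.

```python
import json


def schema_copy_mismatches(
    json_ld: str,
    page_text: str,
    fields: tuple[str, ...] = ("headline", "datePublished"),
) -> list[str]:
    """Return the JSON-LD fields whose string values are missing from the copy."""
    data = json.loads(json_ld)
    text = page_text.casefold()
    missing = []
    for field in fields:
        value = data.get(field)
        # Only plain string values are compared; nested objects are skipped.
        if isinstance(value, str) and value.casefold() not in text:
            missing.append(field)
    return missing


# Hypothetical page where the visible date drifted from the schema date.
json_ld = json.dumps({
    "@type": "Article",
    "headline": "Generative Engine Optimization (GEO): 2025 Guide",
    "datePublished": "2025-10-12",
})
page_text = "Generative Engine Optimization (GEO): 2025 Guide\nUpdated on 2025-10-11"
print(schema_copy_mismatches(json_ld, page_text))  # ['datePublished']
```

A non-empty result is a prompt for an editor to reconcile the two, not proof of an error.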

How engines differ in practice—and how to adapt

- Google AI Overviews: Often compress multiple sources into a short summary. Place clear, concise claims with named sources in your intros; ensure entity clarity and up‑to‑date facts so models can lift with confidence. Avoid bloating pages; favor clarity and evidence density.

- Perplexity: Typically shows inline citations and a sources panel. Create scannable sections with quotable sentences tied to primary sources; offer concise answers with expandable depth.

- ChatGPT Search: Similar emphasis on concise, well‑sourced explanations. Ensure your most quotable definitions and stats are early and unambiguous.

- Copilot: Blended with Bing search. Maintain strong technical hygiene and authoritative references; align with commercial intent patterns when applicable.

For a research‑based, high‑level comparison of how these systems source and cite, see the 2025 cross‑engine analysis by SE Ranking and the long‑running overview in ToTheWeb’s AI search guide (linked above). Use those patterns to prioritize where entity work and citation engineering will deliver the biggest lift.

The next 90 days: a prioritized GEO rollout plan

Weeks 1–2: Assessment and baselining

- Identify top topic clusters by revenue/strategic value; collect current answer‑engine outputs for representative queries.

- Audit E‑E‑A‑T, entity clarity, and claim‑evidence binding on your 20 most important pages.

- Stand up monitoring for answer visibility, citation counts, and sentiment.

Weeks 3–6: Content and entity upgrades

- Implement schema and structural fixes; move definitions and statistics higher in sections.

- Add primary‑source attributions to anchor key claims; reconcile facts across pages and profiles.

- Engage in high‑signal communities with credible contributions; document a response plan for hallucinations and inaccuracies.

Weeks 7–12: Experimentation and reporting

- Run section‑level A/B tests (position of stats, summary blocks, FAQ additions) and measure citation frequency/placement.

- Roll up KPIs for leadership; tie to downstream behaviors (newsletter signups, demo requests).

- Expand coverage to adjacent clusters; refresh high‑impact pages monthly.
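A minimal sketch of how the citation-frequency comparison in the section-level tests could be scored, assuming each variant was checked against a comparable set of queries and each check records only "cited" or "not cited". The function name and sample counts are hypothetical. A pooled two-proportion z-test is one common choice here, not something the sources above prescribe, and because engine answers vary between runs the p-value is best read as a rough guide.

```python
import math


def two_proportion_z(
    cited_a: int, n_a: int, cited_b: int, n_b: int
) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in citation rates B - A."""
    p_a, p_b = cited_a / n_a, cited_b / n_b
    pooled = (cited_a + cited_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both variants were cited always or never; no detectable difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: variant A (stats low in the section) vs. B (stats moved up).
z, p = two_proportion_z(cited_a=12, n_a=100, cited_b=24, n_b=100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With small query sets the test has little power, so it is worth fixing the number of checks per variant before looking at results.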

If you need a lightweight, centralized way to track multi‑engine citations and sentiment while you execute this plan, you can use platforms that monitor AI answers across engines. Tools in this category (including Geneo) help teams spot shifts quickly and coordinate updates without relying on manual checks.

Why this won’t become a gimmick

Some worry GEO will devolve into a bag of tricks. Three reasons it won’t:

- The engines explicitly reward clarity, originality, and provenance. Google’s 2025 guidance reiterates that there’s no AIO‑specific markup—quality wins over hacks.

- The distribution layer has changed; your content is still the product. GEO simply optimizes how models can confidently reuse and attribute it.

- The metrics are operational: visibility rate, citation share, sentiment, and downstream CTR are measurable and tie to outcomes.

What to watch next

- AIO incidence by intent and vertical: Expect variability; keep ranges in your reporting rather than single‑number expectations.

- Shifts in citation UX: Minor changes to link placement can materially affect CTR.

- Provenance signals: More engines may nudge toward explicit source transparency, rewarding claim blocks and canonical references.
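Reporting AIO incidence as a range rather than a single number can be as simple as grouping observations by segment and taking the lowest and highest rates. This sketch assumes each observation is a (segment, AI Overview shown) pair. The segment labels and sample data are hypothetical and do not come from any of the studies cited here.

```python
from collections import defaultdict


def incidence_by_segment(observations) -> dict[str, float]:
    """Map each segment to the share of its queries that showed an AI Overview.

    `observations` is an iterable of (segment, has_aio) pairs.
    """
    shown: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for segment, has_aio in observations:
        total[segment] += 1
        shown[segment] += int(has_aio)
    return {s: shown[s] / total[s] for s in total}


def incidence_range(rates: dict[str, float]) -> tuple[float, float]:
    """Return (lowest, highest) incidence across segments."""
    values = list(rates.values())
    return min(values), max(values)


# Hypothetical spot checks, grouped by query intent.
observations = [
    ("informational", True), ("informational", True),
    ("informational", False), ("informational", False),
    ("transactional", False), ("transactional", False),
    ("transactional", False), ("transactional", True),
]
rates = incidence_by_segment(observations)
low, high = incidence_range(rates)
print(f"AIO incidence: {low:.0%} to {high:.0%}")  # 25% to 50%
```

The same grouping works for verticals; swapping the segment label is the only change needed.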

Sources and further reading (selected)

- Definition and scope of GEO: What is generative engine optimization (GEO)? — Search Engine Land

- AIO prevalence snapshot: Semrush AI Overviews study (2025)

- CTR behavior shifts: Google organic and paid CTRs hit new lows: Report — Search Engine Land (2025)

- Official guidance: Succeeding in AI search — Google Developers (2025)

- Engine differences: ChatGPT vs. Perplexity vs. Google vs. Bing — SE Ranking (2025)

- Sourcing patterns and overlap: AI Search Optimization Guide — ToTheWeb

Mini change-log

- 2025-10-12: Initial publication with Semrush March 2025 AIO share and Google Developers 2025 guidance; added cross‑engine sourcing insights (SE Ranking; ToTheWeb).