Ultimate Guide: How Digital Marketing Agencies Can Master GEO for AI Search

Comprehensive guide for agencies on Generative Engine Optimization (GEO)—service lines, monitoring, cross-engine tactics, and multi-brand reporting. Book a walkthrough!

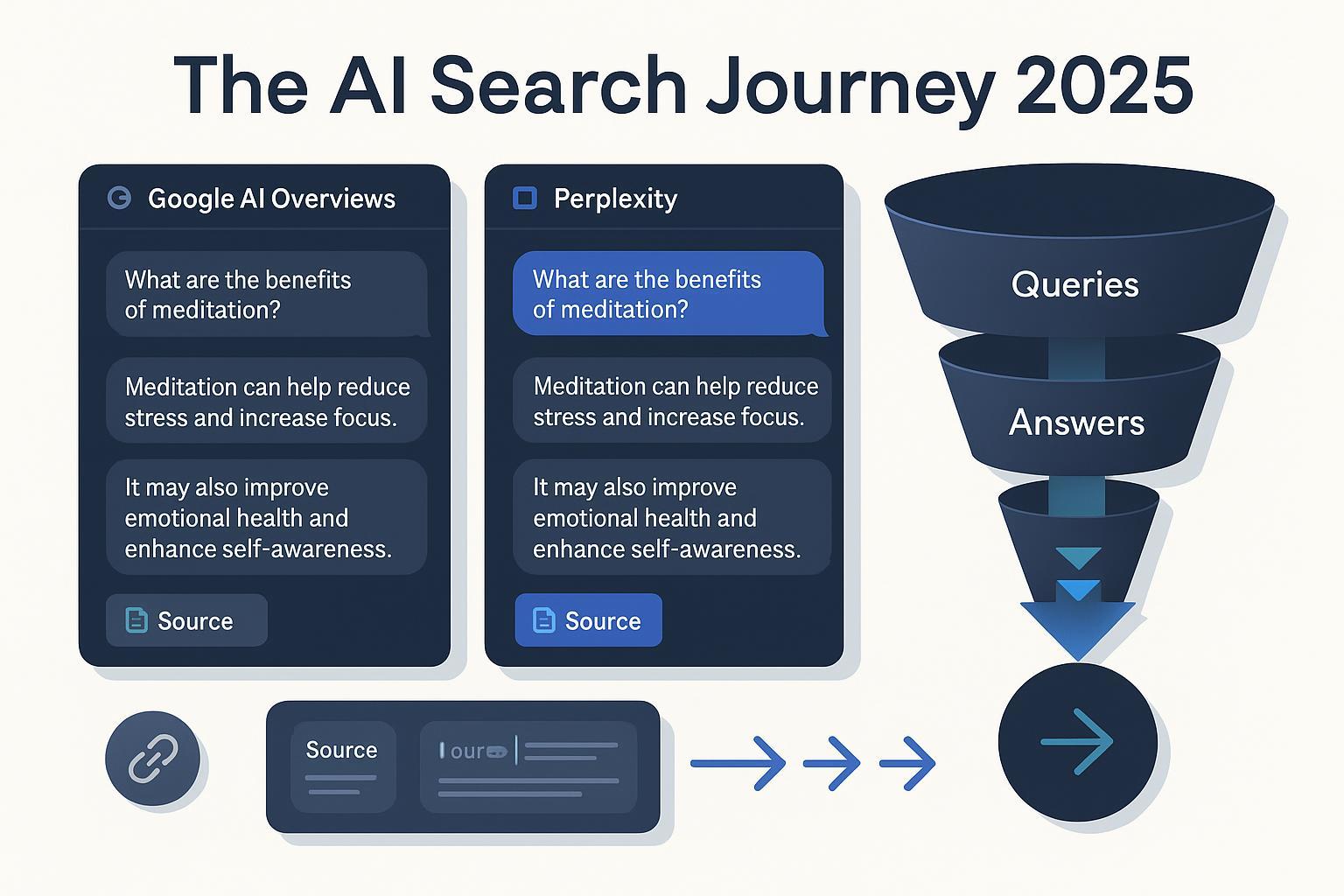

The search landscape is shifting from ten blue links to synthesized answers. AI assistants and AI-enhanced search now draft responses, cite sources, and shape brand perception in a single screen. For agencies, this creates both risk (brand omission, outdated facts, unfavorable framing) and opportunity (category leadership, authoritative citations, consistent multi-market messaging). GEO—Generative Engine Optimization—is the discipline of making your clients’ brands findable, citable, and favorably represented across AI surfaces like Google AI Overviews/SGE, Perplexity, ChatGPT/GPT‑Search, Claude, Gemini, and Bing Copilot.

In this guide, I’ll take the agency operator’s lens: why GEO belongs in your service mix, exactly what to offer, how to measure outcomes, how to package delivery, and where specialized monitoring platforms fit in.

Why Agencies Need to Provide GEO (Now)

You can treat GEO as the natural extension of SEO, content, and digital PR—focused on how AI systems select and cite sources. Agencies that move early build defensible advantages for their clients in:

Brand protection: Ensure accurate facts and inclusion for branded and navigational queries.

Category capture: Win favorable mentions for non-branded, high-intent category queries.

Narrative management: Reduce inconsistencies and correct outdated or misleading claims in AI answers.

Launch impact: Seed fresh, authoritative materials so new products appear in AI answers quickly.

Multi-market governance: Keep entities, facts, and positioning aligned across languages and regions.

The mechanics of how answers are assembled continue to evolve. For example, Google's documentation describes how AI features appear in Search, how sources are shown, and the role of structured markup and quality signals (see Google's 2024–2025 guidance in the Search Central AI features page). Industry studies in 2025 also examine what gets cited and why, pointing to patterns around authority, freshness, and clarity, as summarized in the Search Engine Land analysis of 8,000 AI citations (2025).

A Simple Mental Model: How Generative Engines Decide What to Cite

In client audits, we typically use this mental model:

Entity clarity: Can the engine clearly disambiguate your client’s brand, products, and people across the web? Consistent names, IDs, and cross-links matter.

Authority: Would a conservative algorithm trust this source? Credentials, editorial standards, reputable mentions, and topic alignment help.

Freshness and specificity: Are there recent, precise facts the engine can lift? Timestamps, versioning, and up-to-date stats are favored for evolving topics.

Structure and accessibility: Is the information easy to parse? Clean HTML, headings, JSON‑LD structured data, and fast, crawlable pages aid ingestion.

Evidence density: Do you maintain “answer-ready” hubs—FAQs, stats, methods, and documentation—that AI can cite verbatim?

Keep this model at the center of your GEO program; it drives both content decisions and technical implementation.

GEO Service Lines for Agencies (What to Offer)

Below is a practical, agency-ready list of service lines with examples and deliverables. Mix and match to fit client maturity and scope.

1) Entity & Knowledge Graph Optimization

Disambiguation and IDs: Map brand and product entities to authoritative identifiers (e.g., Wikidata QIDs, Crunchbase profiles) and maintain consistent naming.

sameAs harmonization: Add robust sameAs arrays across Organization, Person (authors/execs), and Product entities.

Author/Org schema: Implement Organization, Person, and Article/BlogPosting schema with bios, credentials, affiliations, and publication dates. Google’s documentation for Article structured data (last updated across 2024–2025) lays out required and recommended properties; see Google’s Article structured data guide.

Editorial profile and policy: Publish author pages, editorial standards, and methodology pages to support E‑E‑A‑T signals.

Checklist

Identify key entities and create/update Wikidata items with multilingual labels.

Create/normalize Crunchbase, LinkedIn, and other authoritative profiles.

Add sameAs arrays to org/author/product schema; validate.

Build or strengthen author bios with credentials and topic expertise.
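The sameAs step in the checklist above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the helper name `organization_jsonld`, the client "Example Corp", and every URL (including the Wikidata QID) are hypothetical placeholders.

```python
import json


def organization_jsonld(name, url, same_as):
    """Build a schema.org Organization object with a de-duplicated sameAs array."""
    # dict.fromkeys keeps the first occurrence of each URL and preserves order.
    profiles = list(dict.fromkeys(u.strip() for u in same_as if u.strip()))
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": profiles,
    }


# Hypothetical client: all identifiers below are placeholders.
org = organization_jsonld(
    "Example Corp",
    "https://www.example.com",
    [
        "https://www.wikidata.org/wiki/Q00000000",
        "https://www.linkedin.com/company/example-corp",
        "https://www.crunchbase.com/organization/example-corp",
        "https://www.linkedin.com/company/example-corp",  # duplicate, dropped
    ],
)

# Wrap the object in the script tag that would ship in the page's initial HTML.
script_tag = (
    '<script type="application/ld+json">'
    + json.dumps(org, indent=2)
    + "</script>"
)
print(script_tag)
```

Generating the markup from one source of truth per entity makes it easier to keep the same identifiers on Organization, Person, and Product pages. The output should still be checked with a structured data validator before release.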

2) Structured Data & Information Architecture

Schema.org coverage: Organization, Person, Article/BlogPosting, FAQPage, HowTo, Product, VideoObject, and Event where relevant.

Semantic HTML: Headings, lists, tables, captions, and alt text for reliably parseable content.

Answer modules: Dedicated FAQ hubs, stats/evidence pages, glossaries, and product spec sheets.

Internal architecture: Shallow, logical hierarchy; fast access to documentation and data pages.
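For the FAQ hubs mentioned above, the matching markup is the schema.org FAQPage type. The sketch below shows one way to generate it from question and answer pairs; the function name `faq_page_jsonld` and the sample content are assumptions made for illustration.

```python
import json


def faq_page_jsonld(qa_pairs):
    """Turn (question, answer) pairs into a schema.org FAQPage object."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


# Hypothetical FAQ content for a client's answer hub.
faq = faq_page_jsonld(
    [
        ("What is zero trust?",
         "A security model that verifies every request instead of trusting the network."),
        ("Who should own a zero trust rollout?",
         "Typically a joint security and IT operations team."),
    ]
)
print(json.dumps(faq, indent=2))
```

The visible page text and the markup should say the same thing, so it helps to render both from the same list of pairs.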

3) Answer‑Ready Content Engineering

Concise explainers: 300–800 word explainers that directly answer canonical questions in your category.

Evidence hubs: “Stats and methodology” pages with updatable data and clear sourcing.

Expert POVs: Credible authors with bylines, quotes, and original analysis.

Cadence: Update high-velocity topics quarterly; evergreen fundamentals annually or when facts change.

Micro‑example

For a cybersecurity client: Publish “What is zero trust? (2025 update)”, a “Zero trust statistics and definitions” hub, and a “Zero trust implementation checklist”—each with author bio, references, and schema.

4) Authority & Citation Seeding (Digital PR)

Prioritize reputable domains: Industry bodies, standards orgs, .gov/.edu, and respected media.

Co‑citation plays: Collaborate on research with credible partners; pitch bylined analyses.

Event and standards alignment: Participate in working groups; publish position papers and link them from authoritative hosts.

5) Technical Accessibility for AI Crawlers

Server‑side rendering (SSR) or hybrid rendering so essential content and JSON‑LD ship in initial HTML. Google's documentation now describes dynamic rendering as a workaround rather than a long-term solution and recommends server-side rendering, static rendering, or hydration instead; it is still a useful reference for understanding bot-accessible content. See Google’s dynamic rendering page.

Robots and sitemaps hygiene: Provide up-to-date XML sitemaps; avoid accidentally blocking key resources.

Bot controls and monitoring: Decide whether to allow or block GPTBot and PerplexityBot, document the resulting robots.txt directives, and verify actual crawl behavior in server logs.

Feeds and APIs: Where appropriate, expose machine-readable docs, FAQs, and product catalogs for ingestion.
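One quick way to spot-check the rendering item above is to look at the raw HTML a server returns, before any JavaScript runs, and see whether JSON‑LD is already present. The sketch below uses only Python's standard library; the class and function names and both sample pages are hypothetical, and in practice the HTML would come from a plain HTTP fetch of the client's page.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect parsed JSON-LD blocks found in raw (unrendered) HTML."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # malformed JSON-LD is skipped rather than raising


def jsonld_types(raw_html):
    """Return the @type of each JSON-LD object present in the initial HTML."""
    parser = JsonLdExtractor()
    parser.feed(raw_html)
    parser.close()
    types = []
    for block in parser.blocks:
        items = block if isinstance(block, list) else [block]
        types.extend(i.get("@type") for i in items if isinstance(i, dict))
    return types


# Hypothetical pages: one server-rendered, one that injects everything client-side.
ssr_html = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Organization", "name": "Example Corp"}'
    "</script></head><body><h1>Example Corp</h1></body></html>"
)
csr_html = (
    '<html><head><script src="/app.js"></script></head>'
    '<body><div id="root"></div></body></html>'
)

print(jsonld_types(ssr_html))
print(jsonld_types(csr_html))
```

An empty result for a page that is known to carry schema in the browser is a signal that the markup is being injected client-side and may not reach crawlers that do not render JavaScript.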

On Perplexity specifically, the company documents its crawler and how to control it via robots directives (see PerplexityBot docs). However, in 2025 Cloudflare alleged that Perplexity was also using stealth, undeclared crawlers to evade robots.txt rules; see the Cloudflare investigation (2025). Agencies should implement multi‑layer bot governance (robots directives, IP filtering, and log review) and monitor for anomalies.
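Before relying on a robots.txt policy, it helps to confirm that the file says what the team thinks it says. The sketch below uses Python's standard `urllib.robotparser` to evaluate a policy for specific user agents. The robots.txt content, the URLs, and the helper name `crawl_policy` are illustrative assumptions, not a recommended policy.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: block GPTBot entirely, let PerplexityBot read only /docs/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: PerplexityBot
Allow: /docs/
Disallow: /

User-agent: *
Allow: /
"""


def crawl_policy(robots_txt, user_agents, urls):
    """Map each (user agent, URL) pair to whether robots.txt permits the fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        (agent, url): parser.can_fetch(agent, url)
        for agent in user_agents
        for url in urls
    }


policy = crawl_policy(
    ROBOTS_TXT,
    ["GPTBot", "PerplexityBot", "Googlebot"],
    ["https://www.example.com/docs/faq", "https://www.example.com/pricing"],
)
for (agent, url), allowed in policy.items():
    print(f"{agent:15} {url:40} {'allow' if allowed else 'block'}")
```

This only verifies declared intent. Whether a given crawler honors the directives still has to be confirmed from server logs, which is why the log review layer matters.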

6) Reputation & Narrative Management

Profile consistency: Align facts (founding year, headcount, leadership) across owned and earned profiles.

Inaccuracy remediation: Identify and correct errors across top wikis, third-party bios, and marketplace listings.

Community Q&A: Where allowed, provide verified answers (forums, vendor marketplaces), and maintain official statements.

7) RAG/Knowledge Hub Enablement

Central data hub: Structured FAQs, documentation, and datasets that are easy to ingest and cite.

Versioning and changelogs: Aid freshness signals and traceability.

Developer docs and schemas: Machine-readable endpoints for specs and policies.

8) Multilingual/Multi‑Market Localization

Hreflang and language tags: Use consistent implementation, bidirectional links, and x‑default where appropriate. Google’s internationalization guide remains the canonical reference; see Managing multi‑regional and multilingual sites.

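Localized schema: Set properties such as inLanguage and contentLocation per market, translate human-readable values (for example name and description) while leaving property names unchanged, and align entity names and labels per market.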

Governance: Define who owns updates in each market and how releases are coordinated.
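The bidirectional-link requirement for hreflang is easy to break when markets publish independently. As a rough sketch, the check below flags pages whose alternates do not link back. The input shape (page URL mapped to its language alternates) and the function name `missing_return_links` are assumptions; a real audit would first extract these annotations from crawled pages or sitemaps.

```python
def missing_return_links(hreflang_map):
    """Find (source, target) pairs where target does not link back to source.

    hreflang_map maps each page URL to a dict of {language code: alternate URL}.
    """
    problems = []
    for page, alternates in hreflang_map.items():
        for alt_url in alternates.values():
            if alt_url == page:
                continue  # self-references and x-default pointing home are fine
            back_links = hreflang_map.get(alt_url, {})
            if page not in back_links.values():
                problems.append((page, alt_url))
    return problems


# Hypothetical site: the French page forgot to reference the English page.
EN = "https://example.com/en/"
DE = "https://example.com/de/"
FR = "https://example.com/fr/"

pages = {
    EN: {"en": EN, "de": DE, "fr": FR, "x-default": EN},
    DE: {"en": EN, "de": DE, "fr": FR},
    FR: {"fr": FR, "de": DE},
}

for source, target in missing_return_links(pages):
    print(f"{target} does not link back to {source}")
```

Running a check like this as part of each market's release process gives the governance owner a concrete gate rather than a guideline.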

9) Measurement & Experimentation

Prompt/test libraries: Maintain standardized prompt suites and query panels by theme (branded, category, competitor).

Experiment design: Test content patterns, citation seeding, and structured data variants.

Cohort tracking: Compare inclusion and framing across markets, engines, and time windows.
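A standardized query panel can be generated rather than hand-written, which keeps themes comparable across markets. The following is only a sketch: the templates, the brand and competitor names, and the function name `build_panel` are all made up for illustration, and real panels would use localized, researched queries.

```python
# Hypothetical query templates grouped by theme.
TEMPLATES = {
    "branded": ["What is {brand}?", "{brand} pricing"],
    "category": ["best {category} tools"],
    "competitor": ["{brand} vs {competitor}"],
}


def build_panel(brand, category, competitors, markets):
    """Expand the templates into one query record per market, theme, and rival."""
    panel = []
    for market in markets:
        for theme, templates in TEMPLATES.items():
            for template in templates:
                # Competitor templates expand once per rival, others only once.
                rivals = competitors if "{competitor}" in template else [None]
                for rival in rivals:
                    panel.append(
                        {
                            "market": market,
                            "theme": theme,
                            "query": template.format(
                                brand=brand, category=category, competitor=rival
                            ),
                        }
                    )
    return panel


panel = build_panel(
    brand="Example Corp",
    category="zero trust",
    competitors=["Rival One", "Rival Two"],
    markets=["US", "DE"],
)
for row in panel[:5]:
    print(row)
```

Storing the panel as data also makes it possible to version it, so inclusion trends are always compared against the same set of queries.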

Cross‑Engine Differences (At‑a‑Glance)

| AI surface | How it cites/displays sources | Practical notes for optimization | What to monitor |
|---|---|---|---|
| Google AI Overviews/SGE | Clickable source cards/links under or within the AI answer | E‑E‑A‑T, structured data, answer‑ready hubs, freshness | Inclusion rate across query panels; which pages/quotes are cited; prominence |
| Perplexity | Prominent multi‑source citations with snippets; Collections | Allow crawl if desired; ensure concise, quotable passages; technical accessibility | Citation frequency; authority mix of domains; collection saves |
| ChatGPT/GPT‑Search | AI web answers with inline citations; powered by web index | Ensure parseable content and evidence hubs; monitor evolving behavior | Source presence in answers; relative position; model/version notes |
| Claude | Inline citations in responses; evolving | Clarity, evidence, author expertise; avoid JS‑only content | Inclusion and framing tone; stability across runs |
| Gemini | Similar to Google surfaces with inline/footnote citations | Align with Google’s structured data and entity clarity | Inclusion and which entities/pages are chosen |
| Bing Copilot | Source links/snippets from Bing index | Authority and recency matter; clean technicals | Inclusion share, first‑cited vs later citations |

For deeper context on productized behaviors and documentation, see Google’s AI features docs (2024–2025) via the Search Central page, OpenAI’s 2024 announcement of ChatGPT Search, and Perplexity’s crawler guidance in the PerplexityBot docs.

How to Monitor GEO Performance (Agency Framework)

Your goal is to quantify “share of answer,” diagnose why inclusion rises or falls, and connect those deltas to business outcomes.

Surfaces to Track

Google AI Overviews/SGE

Perplexity

ChatGPT/GPT‑Search

Claude

Gemini

Bing Copilot

Core KPIs

Share of Answer (SoA): Inclusion rate across a defined query set (by theme and market).

Engine Coverage: Percentage of tracked engines that include the brand for a query set.

Citation Frequency & Quality: Total citations and distribution by domain type (e.g., .gov/.edu/industry bodies vs. general media).

Position/Prominence: First-cited vs. lower placements; whether your client appears in the lead paragraph vs. footnotes.

Sentiment/Framing: Positive/neutral/negative tone and narrative context.

Freshness/Recency: Age of the cited pages vs. your update cadence.

Business Outcomes: Demo requests, lead quality, and brand search lift mapped to themes/markets.

2025 industry coverage proposes new GEO KPIs and approaches to track inclusion and framing across generative search; see the Search Engine Land discussion of new KPIs for generative search (2025).
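To make the first two KPIs concrete, here is a small sketch of how Share of Answer and Engine Coverage could be computed from logged panel runs. The record shape and function names are assumptions, and "coverage" is interpreted here as the fraction of tracked engines that included the brand at least once in the query set; other definitions are possible.

```python
from collections import defaultdict


def share_of_answer(observations):
    """Fraction of (engine, query) observations in which the brand was included."""
    if not observations:
        return 0.0
    return sum(1 for o in observations if o["included"]) / len(observations)


def engine_coverage(observations):
    """Fraction of tracked engines that included the brand at least once."""
    seen = defaultdict(bool)
    for o in observations:
        seen[o["engine"]] = seen[o["engine"]] or o["included"]
    if not seen:
        return 0.0
    return sum(seen.values()) / len(seen)


# Hypothetical results for one market and theme: 3 engines x 2 queries.
runs = [
    {"engine": "Google AI Overviews", "query": "best zero trust tools", "included": True},
    {"engine": "Google AI Overviews", "query": "what is zero trust", "included": False},
    {"engine": "Perplexity", "query": "best zero trust tools", "included": True},
    {"engine": "Perplexity", "query": "what is zero trust", "included": True},
    {"engine": "Claude", "query": "best zero trust tools", "included": False},
    {"engine": "Claude", "query": "what is zero trust", "included": False},
]

print(f"Share of Answer: {share_of_answer(runs):.0%}")
print(f"Engine Coverage: {engine_coverage(runs):.0%}")
```

In this sample the brand appears in 3 of 6 observations (50% Share of Answer) and on 2 of 3 engines (about 67% Engine Coverage). Whatever definitions an agency settles on, they should be written down and held constant so quarter-over-quarter deltas stay comparable.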

Methods & Cadence

Automated query panels: Curate panels for branded, category, and competitor themes per market. Run weekly or biweekly.

Prompt suites: Standardize test prompts per engine and log model/version metadata.

Answer snapshotting: Store full answer text, cited URLs, and positions. Track deltas over time.

Competitive benchmarking: Track inclusion share for the top 3–5 competitors; compare citation domains and prominence.

Alerting: Notify for loss/gain of inclusion, sentiment shifts, and significant model updates.

QA loops: Investigate false negatives due to rendering issues or blocked bots.
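The snapshotting and alerting items above can be illustrated with a simple comparison of two stored answers for the same engine and query. This is a sketch under assumptions: the `Snapshot` fields, the alert labels, and the sample URLs are hypothetical, and a production system would also store model/version metadata and citation positions.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class Snapshot:
    engine: str
    query: str
    run_date: str
    answer_text: str
    cited_urls: tuple


def cites_domain(snapshot, domain):
    """True if any cited URL is on the domain or one of its subdomains."""
    for url in snapshot.cited_urls:
        host = urlparse(url).netloc.lower()
        if host == domain or host.endswith("." + domain):
            return True
    return False


def compare_snapshots(previous, current, client_domain):
    """Report gained/lost citations and flag a change in client inclusion."""
    prev_urls, curr_urls = set(previous.cited_urls), set(current.cited_urls)
    was_in = cites_domain(previous, client_domain)
    is_in = cites_domain(current, client_domain)
    if was_in and not is_in:
        alert = "inclusion_lost"
    elif is_in and not was_in:
        alert = "inclusion_gained"
    else:
        alert = None
    return {
        "gained": sorted(curr_urls - prev_urls),
        "lost": sorted(prev_urls - curr_urls),
        "alert": alert,
    }


# Hypothetical snapshots from two consecutive weekly runs.
last_week = Snapshot(
    "Perplexity", "what is zero trust", "2025-01-06", "(answer text)",
    ("https://www.example.com/zero-trust", "https://standards.example.org/zt"),
)
this_week = Snapshot(
    "Perplexity", "what is zero trust", "2025-01-13", "(answer text)",
    ("https://standards.example.org/zt", "https://competitor.example.net/guide"),
)

delta = compare_snapshots(last_week, this_week, "example.com")
print(delta)
```

An "inclusion_lost" result is a prompt for the QA loop, not a conclusion. The next step is to check whether the page is still crawlable and current before assuming the engine changed its preferences.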

Practical example of tooling in context: You can centralize multi‑brand, multi‑market monitoring—tracking inclusion rates, citations, and answer snapshots—using platforms such as Geneo. Disclosure: Geneo is our product. Use purpose‑built tools to reduce the overhead of manual panels and to maintain historical timelines, but continue to validate critical changes with spot checks across engines.

Sample Quarterly Monitoring Cycle

Define target query panels by theme and market; set baselines for SoA and citation quality.

Implement prioritized fixes (entity schema, answer hubs, PR seeding, SSR issues).

Track week‑over‑week deltas; investigate drops (crawlability, content freshness, sentiment changes).

Publish quarterly report: wins, losses, hypotheses, and next‑quarter experiments.

Geneo for Agencies: Current Capabilities and What’s in Development

Current focus

Multi‑brand monitoring: Track inclusion and citations across multiple brands and markets; maintain answer snapshots and timelines; benchmark competitors; configure alerts.

Cross‑engine coverage: Monitor major AI surfaces with aggregated visibility and market filters.

Reporting workflows: Export historical records and assemble client‑ready views with inclusion trends and narrative notes.

In development (Agency version)

White‑label portals/domains for client access.

Customizable report builders and templates.

Role‑based access/permissions for teams and clients.

Automated insights/alerts, API/export options, and enterprise‑grade security/SLA controls.

Notes

Feature availability evolves; confirm details in a live discussion. The monitoring model above is tool‑agnostic and can be executed with a combination of platform support and manual QA.

Packaging & Delivery for Agencies

A practical engagement model makes GEO predictable and repeatable across clients.

Recommended Delivery Flow

Intake and scoping

Stakeholder mapping (SEO, Content, PR, RevOps), markets/languages, priority products.

Define query themes (branded, category, competitor) and target AI surfaces.

Audit (4–8 weeks)

Baseline AI visibility and SoA; entity/knowledge graph mapping; structured data and technical audits; content inventory; PR/citation assets; international governance.

Deliverables: GEO opportunity map, prioritized backlog, implementation roadmap.

Pilot (3–6 months)

Execute high‑impact fixes and content/PR plays for selected markets and panels.

Weekly monitoring; rapid iteration; stakeholder enablement.

Retainer (ongoing)

Scale across brands/markets; establish a content refresh cadence; PR cycles; governance; quarterly optimization and reporting.

Tiering Considerations

Dimensions: number of brands, markets/languages, query sets/themes, engines tracked, reporting cadence, and collaboration requirements.

Illustrative packaging

Starter: 1 brand, 1–2 markets, 3 panels, 3 engines, monthly reporting.

Growth: 2–3 brands, 3–5 markets, 5–8 panels, 4–5 engines, biweekly reporting, PR seeding.

Enterprise: 4+ brands, 6+ markets, 10+ panels, all major engines, weekly ops, custom dashboards, governance, and enablement.

Price bands vary widely by scope and complexity; the structure above helps align expectations and avoid under‑scoping.

Collaboration Model

SEO + Content: Entity clarity, answer hubs, structured data, refresh cadence.

PR/Comms: Authority mentions, partner research, bylines, corrections.

Dev/Ops: SSR/dynamic rendering, feeds/APIs, bot governance.

Analytics/RevOps: KPI mapping to GA4/CRM, cohort analysis by market and theme.

Hypothetical Case Vignette (Illustrative)

Context

A global B2B SaaS brand (5 markets, 3 languages) is inconsistently cited in AI answers for category queries; branded answers include outdated pricing.

Intervention

Entity cleanup: New Organization and Author schema with sameAs arrays; Wikidata alignment for brand and flagship product.

Answer hubs: Published “2025 category explainer,” “statistics and definitions,” and a “how‑to implementation checklist” with named experts.

PR seeding: Partnered with an industry standards body on a joint mini‑study with unique data points.

Technical fixes: Migrated key content to SSR; exposed a machine‑readable specs endpoint.

Monitoring: Established panels across SGE, Perplexity, GPT‑Search, Claude, and Copilot; set alerts.

Outcomes (directional)

Inclusion gains: Share of Answer improved across category panels in 3 of 5 markets over two quarters.

Citation quality: Greater share of citations from standards/media domains; first‑cited position more frequent.

Narrative correction: Branded answers now reflect current pricing and feature names; sentiment normalized.

This vignette is illustrative; results vary by competition, authority, and release cadence. Emphasize trend deltas, not absolute benchmarks.

Common Pitfalls to Avoid

Relying on client‑side JS for essential content or schema; engines may not consistently render it.

Treating GEO as “just SEO”; you also need PR, governance, and monitoring muscle.

Thin, uncited content; engines prefer precise facts with provenance.

Ignoring international alignment; entity and content mismatches across markets create confusion.

Locking down crawlers too aggressively without measurement; verify bot behavior and balance access with controls.

Practitioner Checklists

Audit essentials

Entities mapped (org, products, authors) with authoritative IDs and sameAs.

Organization/Author/Product/Article schema validated; visible bios and editorial policy.

FAQ/stats/evidence hubs exist and are updated; timestamps present.

SSR or hybrid rendering for key pages; JSON‑LD in initial HTML.

Robots/sitemaps validated; GPTBot/PerplexityBot decisions documented; logs monitored.

International: hreflang implemented consistently; localized schema; governance defined.

Monitoring essentials

Query panels by theme and market; baseline SoA/coverage set.

Weekly/biweekly answer snapshotting with version/model notes.

Alerts for inclusion drops, sentiment shifts, and major engine changes.

Competitive benchmarks across engines and markets.

Quarterly review tying GEO deltas to demand gen metrics.

Execution essentials

Content refresh calendar linked to panel findings.

PR calendar with research/broadcast opportunities.

Backlog of structured data improvements and technical fixes.

Clear owners across SEO, Content, PR, Dev, and Analytics.

References for Further Reading

How AI features appear and cite sources in Google Search (2024–2025): Search Central AI features page

What gets cited in AI answers (2025 analysis): Search Engine Land on 8,000 AI citations

Google AI Overviews behavior and studies (2024–2025): Ahrefs’ AI Overviews study

ChatGPT’s AI web search product (2024): OpenAI Introducing ChatGPT Search

Managing JavaScript for crawlability: Google on dynamic rendering

International/multilingual SEO: Google’s multi‑regional and multilingual sites guide

Perplexity crawling controls and a 2025 investigation: PerplexityBot docs and Cloudflare’s 2025 crawler post

New KPIs for generative search (2025): Search Engine Land’s KPI overview

Book a GEO Discussion for Your Agency

If you manage multiple brands or markets and want to operationalize GEO, email support@geneo.app to book a discussion and walkthrough.

Discovery questionnaire (copy/paste into your email)

What markets/languages and how many brands do you manage?

Which AI search surfaces matter most to your clients (e.g., SGE, Perplexity, ChatGPT, Claude, Gemini, Bing Copilot)?

Do you have defined query themes (branded, category, competitors) we should track?

What current content/PR assets exist (research, stats hubs, FAQs) and how is your structured data coverage today?

What are your top KPIs for GEO (e.g., share of answer, citations, engine coverage, demo requests)?

Do you need white‑label reporting/portals and role‑based access? Any security/compliance requirements?

Preferred engagement model (audit, pilot, retainer) and timeline.

—

Final note: GEO is not a fad. It’s the connective tissue between your clients’ expertise and the AI systems that increasingly mediate buyer research. With a clear playbook and disciplined monitoring, agencies can protect brands, win category visibility, and demonstrate measurable impact across markets.