Generative Engine Optimization Strategy for Marketing Teams: Best Practices 2025

Discover actionable 2025 best practices for Generative Engine Optimization (GEO). Enhance AI search visibility and reporting for marketing teams with proven strategies, KPI tracking, and platform-specific playbooks.

Why GEO matters in 2025 (in one paragraph)

AI answer engines now mediate a meaningful slice of discovery. Large-scale tracking shows Google’s AI Overviews appeared in 6.49% of U.S. desktop queries in January 2025 and 13.14% by March, roughly doubling in two months, according to the Semrush AI Overviews impact study (2025). Early traffic analyses suggest AI answers send fewer but often higher-intent clicks, with shorter sessions than traditional search, based on iPullRank’s early referral data (2025). Net: your brand must be discoverable and accurately represented inside AI answers, not just in blue links.

What changes from SEO to GEO: Five working principles

These are the practices that consistently move the needle across Google AI Overviews/AI Mode, ChatGPT Search/Atlas, and Perplexity.

Optimize at the passage level

What it means: Engines often select passages rather than entire pages. Your page needs self-contained, quotable blocks that answer a specific sub-question with facts, numbers, and context.

Why it works: Technical analyses of AI Mode suggest dense retrieval and pairwise LLM prompting favor bite-sized, answerable segments; industry studies also show overlap between AIO sources and top organic pages but with passage-level differences. See iPullRank’s technical deep dive (2025) and Surfer’s AIO sources study (2025).

How to do it:

Write 80–150 word “answer blocks” per subhead.

Put the conclusion first, then 1–2 supporting stats and a named source.

Use lists and small tables where they clarify extraction.
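
The length and evidence guidance above can be checked mechanically before publishing. The following is a minimal sketch, assuming answer blocks are available as plain strings; the function names and the returned fields are illustrative and not part of any existing tool.

```python
import re

# Word range taken from the "80-150 word answer blocks" guidance above.
MIN_WORDS, MAX_WORDS = 80, 150


def count_words(text: str) -> int:
    """Count whitespace-separated tokens that contain a letter or digit."""
    return sum(1 for token in text.split() if re.search(r"\w", token))


def check_answer_block(text: str) -> dict:
    """Report the word count, whether it is in range, and whether any digit appears."""
    words = count_words(text)
    return {
        "words": words,
        "in_range": MIN_WORDS <= words <= MAX_WORDS,
        # A digit is only a rough proxy for "contains a supporting stat".
        "has_number": bool(re.search(r"\d", text)),
    }


draft = "AI Overviews appeared in 13.14% of U.S. desktop queries by March 2025."
print(check_answer_block(draft))
```

A check like this flags drafts for an editor to review; it cannot judge whether the stat is sourced or the conclusion comes first.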

Prioritize E-E-A-T and verifiability

What it means: Clear authorship, dates, credentials, methods, and citations—plus unique primary data—make your content more trustworthy and extractable.

Why it works: Google’s guidance emphasizes people-first helpful content and transparency; AI features build from indexed, quality content. See Google Search Central: AI features and your website (2025) and Google’s guidance on using generative AI content (2025).

How to do it:

Add bylines with credentials and update dates to all answer pages.

Cite original sources with inline links; summarize study year, sample size, and geography where relevant.

Publish your own benchmarks or research to become a core source others cite.

Engineer entity clarity and disambiguation

What it means: Make your brand, products, and key concepts unambiguous across your site and profiles so engines consistently identify and attribute your content.

Why it works: Retrieval and synthesis rely on entity understanding. Ambiguity causes misattribution or mixing with similarly named entities.

How to do it:

Create a glossary/FAQ hub for entities, acronyms, and product names.

Use consistent naming, canonical URLs, and organization markup.

Cross-link author profiles and key assets to reinforce identity.
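
One common way to express "organization markup" is a schema.org `Organization` object in JSON-LD. The sketch below generates such a snippet; the company name, URLs, and the helper `organization_jsonld` are hypothetical placeholders, and the properties your site needs may differ.

```python
import json
from typing import List, Optional


def organization_jsonld(
    name: str,
    url: str,
    same_as: List[str],
    alternate_names: Optional[List[str]] = None,
) -> str:
    """Build a schema.org Organization JSON-LD string for a <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,          # the one canonical brand name used everywhere
        "url": url,            # canonical homepage URL
        "sameAs": same_as,     # official profiles that refer to the same entity
    }
    if alternate_names:
        # Acronyms or former names that should resolve to the same entity.
        data["alternateName"] = alternate_names
    return json.dumps(data, indent=2)


# Hypothetical example values.
print(organization_jsonld(
    "Example Co",
    "https://example.com",
    ["https://www.linkedin.com/company/example-co"],
    ["ExCo"],
))
```

The output would typically be embedded in a `<script type="application/ld+json">` element on the homepage or about page.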

Build co-citations through credible, reusable assets

What it means: Engines reward sources that are corroborated by other reputable sites. Your data pages, methodology notes, and explainers should be the ones others quote.

Why it works: Studies of AIO sources show repeated use of a limited set of authoritative domains. See Surfer’s 2025 AIO sources analysis.

How to do it:

Publish a 1–2 page, high-signal stat summary quarterly and pitch it to journalists/newsletters.

Provide downloadable tables and clear methodology to encourage accurate referencing.

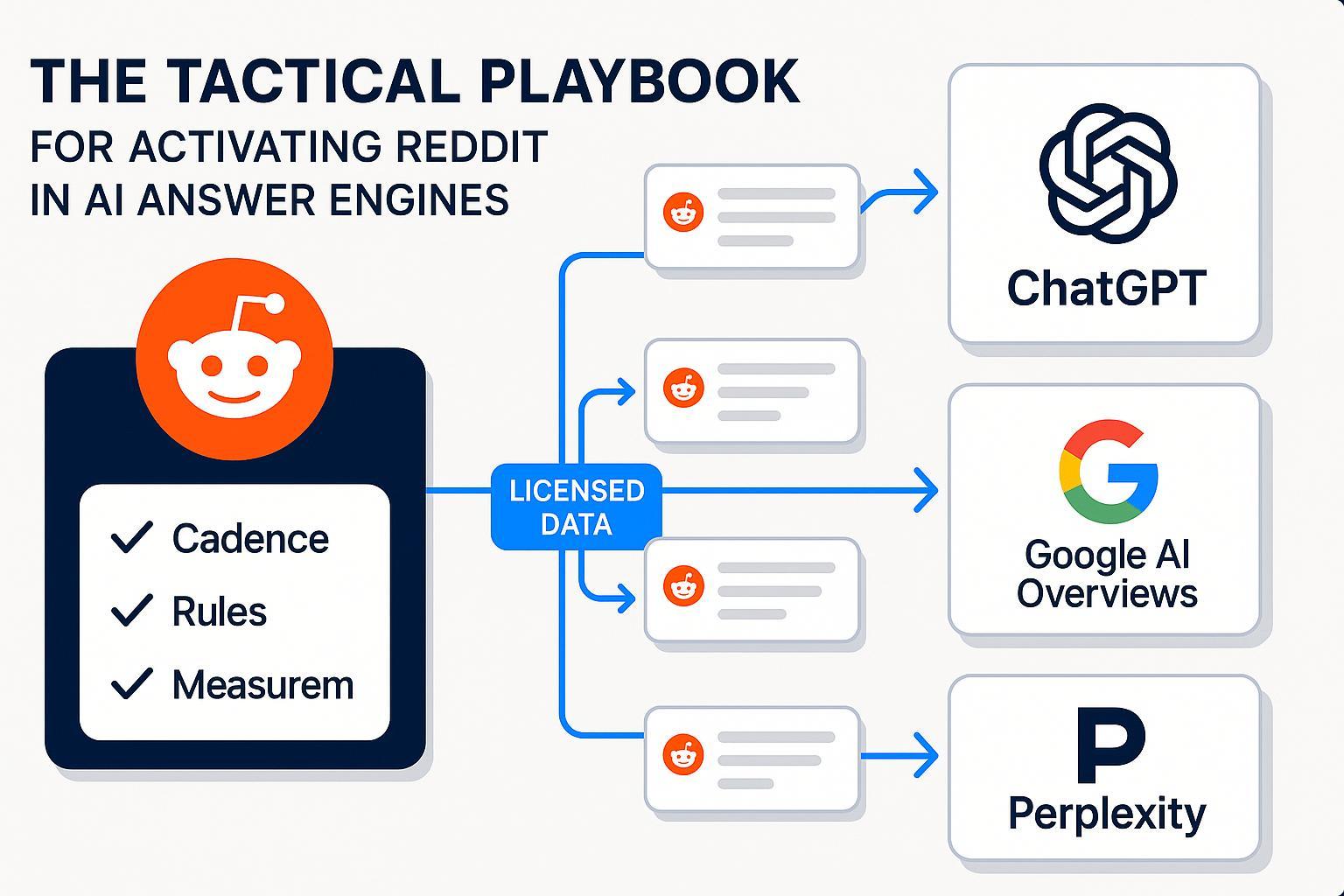

Contribute expert answers to high-signal communities (e.g., relevant subreddits, Stack Overflow) with proper citations to your research.

Design for answer engines, not just web readers

What it means: Structure content so AI can rapidly extract and verify claims—short declarative sentences, explicit definitions, and scoped sections.

Why it works: OpenAI’s Deep Research emphasizes multi-source verification; well-structured pages with clear links tend to be favored in synthesis. See OpenAI: Introducing Deep Research (2025). Perplexity also highlights multi-source synthesis and transparent citations; see Perplexity’s Deep Research post (2025).

How to do it:

Use the “one claim, one paragraph” rule.

Place source links adjacent to the claim, not buried at the bottom.

Include “In brief” summaries at the top of long pages for quick extraction.

For foundational definitions and evolving tactics, see the Geneo blog for ongoing GEO primers and platform updates.

Measurement-first setup: KPIs, instrumentation, and a 90-day plan

What gets measured gets improved. Standardize this KPI stack across engines.

Visibility KPIs

Share of Answers: % of tracked prompts/queries where your brand is cited in AIO/AI Mode, ChatGPT Search/Atlas, or Perplexity.

Citation Mix & Depth: Total citations by engine; how often you appear as a core/repeat source on a topic.

Platform Distribution: Your visibility split across engines; trend monthly.

Entity Coverage: % of priority entities correctly represented in AI answers (brand, products, categories).
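
Share of Answers, as defined above, is a simple ratio per engine. A minimal sketch, assuming each logged observation is a dict with an `engine` name and a boolean `cited` flag (both field names are assumptions for illustration):

```python
from collections import defaultdict
from typing import Dict, Iterable


def share_of_answers(records: Iterable[dict]) -> Dict[str, float]:
    """Return, per engine, the % of tracked prompts where the brand was cited."""
    totals = defaultdict(int)
    cited = defaultdict(int)
    for record in records:
        totals[record["engine"]] += 1
        if record["cited"]:
            cited[record["engine"]] += 1
    return {engine: 100.0 * cited[engine] / totals[engine] for engine in totals}


# Hypothetical weekly observations.
observations = [
    {"engine": "google_aio", "query": "what is geo", "cited": True},
    {"engine": "google_aio", "query": "geo vs seo", "cited": False},
    {"engine": "perplexity", "query": "what is geo", "cited": True},
]
print(share_of_answers(observations))
```

Comparing the per-engine values month over month also gives the Platform Distribution trend.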

Quality KPIs

Sentiment of Mentions: Positive/neutral/negative tone of AI answer mentions; tag drivers.

Passage Match Score: Heuristic similarity between your answer blocks and surfaced passages.
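
The article does not specify how Passage Match Score is computed. One simple stand-in, shown here purely as an assumption, is Jaccard similarity over lowercased word sets; embedding-based similarity would be a reasonable alternative.

```python
import re
from typing import Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def passage_match_score(answer_block: str, surfaced_passage: str) -> float:
    """Jaccard similarity (0.0 to 1.0) between two passages' word sets."""
    a, b = tokenize(answer_block), tokenize(surfaced_passage)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


ours = "GEO structures content so AI engines can extract and cite it."
theirs = "GEO structures content so that engines can cite it accurately."
print(round(passage_match_score(ours, theirs), 2))
```

Word-set overlap ignores word order and synonyms, so treat the score as a triage signal rather than a precise measure.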

Outcome KPIs

AI Referrals: Clicks from AI Overviews/AI Mode, ChatGPT Search/Atlas, Perplexity (track UTMs where possible). Early 2025 analyses suggest fewer referrals and shorter sessions than traditional search; set expectations accordingly, referencing iPullRank’s 2025 findings.

Conversion Rate from AI Referrals: Compare to organic search; expect variance by intent.

Time-to-Visibility: Days from update/publication to first observed AI citation.
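
Two of the outcome KPIs above reduce to short calculations. This sketch assumes publication and first-citation dates come from your own logs; the function names are illustrative.

```python
from datetime import date
from typing import Optional


def time_to_visibility(published: date, first_citation: Optional[date]) -> Optional[int]:
    """Days from update/publication to first observed AI citation, or None if not yet cited."""
    if first_citation is None:
        return None
    return (first_citation - published).days


def conversion_rate(conversions: int, sessions: int) -> float:
    """Conversions divided by sessions; 0.0 when there were no sessions."""
    return conversions / sessions if sessions else 0.0


# Hypothetical figures for one page and one month.
print(time_to_visibility(date(2025, 3, 1), date(2025, 3, 15)))
print(conversion_rate(5, 200), conversion_rate(40, 4000))
```

Computing the same rate for AI referrals and for organic search makes the comparison in the KPI list direct.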

Instrumentation checklist (weeks 0–2)

Define a tracked query/prompt set per product line and lifecycle stage (awareness to evaluation).

Implement UTM conventions for AI referrals; configure analytics dashboards.

Build a content inventory with “answer block” IDs mapped to URLs and subheads.

Set up logging for AI answers: date, engine, query, cited URLs, sentiment note.
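
The logging item above names five fields: date, engine, query, cited URLs, and a sentiment note. A minimal sketch of that record written to CSV follows; the class name, column names, and the " | " separator for URLs are assumptions.

```python
import csv
import io
from dataclasses import asdict, dataclass, field
from typing import Iterable, List, TextIO

FIELDS = ["date", "engine", "query", "cited_urls", "sentiment_note"]


@dataclass
class AnswerLogEntry:
    date: str                      # ISO date of the observation, e.g. "2025-03-15"
    engine: str                    # e.g. "google_aio", "chatgpt", "perplexity"
    query: str
    cited_urls: List[str] = field(default_factory=list)
    sentiment_note: str = ""

    def to_row(self) -> dict:
        """Flatten the entry into a CSV-friendly dict."""
        row = asdict(self)
        row["cited_urls"] = " | ".join(self.cited_urls)
        return row


def write_log(entries: Iterable[AnswerLogEntry], handle: TextIO) -> None:
    """Write a header and one row per entry to an open text handle."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    for entry in entries:
        writer.writerow(entry.to_row())


buffer = io.StringIO()
write_log([AnswerLogEntry("2025-03-15", "perplexity", "what is geo",
                          ["https://example.com/geo"], "neutral")], buffer)
print(buffer.getvalue())
```

Keeping one row per query, engine, and date makes the later KPI calculations simple group-bys.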

90-day milestones

Weeks 2–6: Rewrite top 10–20 pages to answer-first format; publish one data asset for co-citations; begin outreach.

Weeks 6–10: Fan out to adjacent prompts; publish entity FAQs; fix misattribution; refresh laggards.

Weeks 10–12: Review KPI deltas vs baseline; refine playbook; plan next 90 days.

For differences among platforms and how that shapes your KPI targets, see this side-by-side Perplexity vs Google AI Overviews vs ChatGPT comparison.

Platform playbooks you can implement this quarter

Google AI Overviews and AI Mode

Source selection behavior to account for

Observational studies show a strong overlap between AIO sources and top organic domains, but not perfect one-to-one alignment at the passage level. See the Semrush comparisons (2025).

Page format that works

Start pages with a 2–3 sentence “In brief.”

Use H2s for intent variants; under each, place a 100–150 word answer block plus a table or list.

Add 1–2 citations per block to primary sources.

Technical must-haves

Schema where relevant (HowTo, FAQ, Product, Event); clean canonicalization, fast load, stable DOM.

Transparent authorship and last-updated date. Align with Search Central guidance (2025).

Quick wins

Convert your top 5 evergreen guides into answer-first layouts; add stat boxes with year stamps.

Add a short clarification FAQ at the bottom of each page to boost entity disambiguation.

Pitfalls

Bloated intros; inconsistent naming; outdated stats; syndication outranking your original.

Perplexity

Source selection behavior to account for

Perplexity performs multi-search synthesis and shows transparent citations; recency and credibility matter. See Perplexity Deep Research (2025).

Page format that works

Longform hubs with tight, labeled sections; clear dates and author bios.

Embed citation-ready blocks with tables and methodologies.

Technical must-haves

Canonical tags to prevent syndication outranking; visible update history; strong internal linking to canonical explainer pages.

Quick wins

Publish quarterly data roundups; pitch academics/journalists/newsletters likely to be co-cited.

Pitfalls

Vague claims without sources; outdated data; fragmented coverage across many thin pages.

OpenAI ChatGPT Search, Deep Research, and Atlas

Source selection behavior to account for

Deep Research and Search emphasize multi-source verification and link-out for transparency. See OpenAI: Deep Research (2025).

Page format that works

Skimmable summaries, short declarative claims, and explicit definitions.

Link primary sources adjacent to the claims.

Technical must-haves

Keep core answers on authoritative, stable URLs rather than ephemeral posts; avoid duplicate versions with weak canonicalization.

Quick wins

Create 8–12 “definition + benchmark” pages for high-intent topics.

Pitfalls

Over-indexing on promotional copy; burying citations at the bottom of long pages.

Build the content production system your engines need

Editorial spec for answer-first pages

Target reading time: 6–9 minutes.

Structure: Intro (In brief: 2–3 sentences) → H2 for each intent variant → 100–150 word answer block → table or list → 1–2 inline citations.

Evidence: Each answer block must include a named stat or method, plus a year.

Metadata: Author bio, credentials, last updated, and a resource box with related internal links.

Passage engineering checklist (repeat per H2)

Lead with the answer in the first sentence.

Include one specific number and one named source.

Add a short “How we measured” or “Method” if data-driven.

Close with a next-step link or table for extractability.

Co-citation and outreach playbook

Publish one original data asset per quarter with a downloadable table.

Seed to 10–20 newsletters/journalists; summarize key stats in relevant communities (following each community’s posting policy) with canonical links.

Track co-citation growth and refresh the asset every 90 days with new datapoints.

Freshness operations

Maintain a “stat ledger” for every page; schedule 90-day reviews.

Replace or annotate stale data; keep year stamps current in the copy.
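
A "stat ledger" with 90-day reviews can be as simple as a list of stats with the date each was last verified. The sketch below assumes that structure; the field names and sample entries are hypothetical.

```python
from datetime import date, timedelta
from typing import List

# Review cadence taken from the "schedule 90-day reviews" guidance above.
REVIEW_INTERVAL = timedelta(days=90)


def stats_due_for_review(ledger: List[dict], today: date) -> List[dict]:
    """Return ledger entries last verified 90 or more days before `today`."""
    return [entry for entry in ledger if today - entry["last_verified"] >= REVIEW_INTERVAL]


ledger = [
    {"url": "https://example.com/geo-guide", "stat": "AIO share of queries",
     "last_verified": date(2025, 1, 1)},
    {"url": "https://example.com/benchmarks", "stat": "referral session length",
     "last_verified": date(2025, 5, 1)},
]
for entry in stats_due_for_review(ledger, today=date(2025, 6, 1)):
    print(entry["url"], entry["stat"])
```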

Practical workflow example: measuring and improving Share of Answers

Here’s a realistic setup we’ve used with marketing teams to instrument GEO without boiling the ocean in month one.

Step 1 — Define the scope

Pick 50–100 priority prompts/queries across lifecycle stages and 2–3 competitors.

Step 2 — Instrument tracking

Log weekly presence in AI Overviews/AI Mode, ChatGPT Search/Atlas, and Perplexity: query, engine, cited URLs, your inclusion (Y/N), and sentiment.

Tag landing pages with UTMs specific to AI engines.
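
A consistent UTM convention is easier to keep when URLs are built by a helper rather than by hand. This is a sketch using the standard library; the `utm_medium` and `utm_campaign` values are assumed conventions, not ones the article prescribes.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, medium: str = "ai_answer", campaign: str = "geo") -> str:
    """Return `url` with UTM parameters added, preserving existing query params."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query.update({
        "utm_source": source,      # e.g. "perplexity", "chatgpt", "google_aio"
        "utm_medium": medium,      # assumed convention for AI answer traffic
        "utm_campaign": campaign,  # assumed campaign label
    })
    return urlunsplit(parts._replace(query=urlencode(query)))


print(add_utm("https://example.com/guide?ref=1", "perplexity"))
```

Whatever values you choose, record them alongside the tracked prompt set so analytics dashboards can filter on them consistently.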

Step 3 — Content changes

Rewrite the top 10 pages into answer-first layouts with fresh stats and inline citations.

Publish one net-new data asset designed for co-citations.

Step 4 — Iterate based on deltas

Prioritize pages where Passage Match Score is high but you’re not cited—tighten claims, improve sources, and clarify entities.
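
The prioritization rule in Step 4 can be written as a filter over per-page results. In this sketch the 0.6 threshold and the field names `passage_match` and `cited` are assumptions to tune against your own logs.

```python
from typing import List


def refresh_candidates(pages: List[dict], threshold: float = 0.6) -> List[dict]:
    """Pages whose passages closely match surfaced answers but are not cited, best match first."""
    picked = [p for p in pages if p["passage_match"] >= threshold and not p["cited"]]
    return sorted(picked, key=lambda p: p["passage_match"], reverse=True)


# Hypothetical per-page results from one tracking cycle.
pages = [
    {"url": "/geo-guide", "passage_match": 0.72, "cited": False},
    {"url": "/pricing", "passage_match": 0.81, "cited": True},
    {"url": "/glossary", "passage_match": 0.65, "cited": False},
    {"url": "/blog/news", "passage_match": 0.20, "cited": False},
]
for page in refresh_candidates(pages):
    print(page["url"], page["passage_match"])
```

Pages in this list already say roughly what the engines surface, so the likely gap is in sourcing, claim precision, or entity clarity rather than topic coverage.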

Step 5 — Tooling layer

Use a centralized tracker to monitor Share of Answers, citation mix, and sentiment across engines, and to surface a prioritized refresh roadmap.

In practice, a platform like Geneo can consolidate multi-engine visibility, sentiment of mentions, and content recommendations into a single view while preserving your analytics workflow. Disclosure: Geneo is our product.

Scenario playbooks (pick what fits your quarter)

Product launch (days 0–45)

Goals: Establish entity clarity for the product, seed early co-citations, and secure inclusion in definition-level answers.

Actions:

Publish a definition + FAQ page and a technical spec with tables.

Create a benchmark/data mini-report tied to your category.

Run outreach to 15–20 newsletters and analysts.

Watch:

Entity coverage in AI answers; Share of Answers on “what is [product]” and “best [category] for [use case]”.

Crisis response (days 0–30)

Goals: Correct misinformation and stabilize sentiment in AI answers.

Actions:

Publish a timestamped clarification page with verifiable sources; link from homepage/footer for authority.

Update top related pages with a short, factual “In brief” note summarizing the correction.

Contact syndicators to add rel=canonical and credit original.

Watch:

Sentiment of Mentions; inclusion of your clarification page in AI answers.

Competitive incursion (days 0–45)

Goals: Regain core-source status on key queries.

Actions:

Refresh cornerstone pages with newer stats, clearer tables, and explicit counter-comparisons.

Publish a short data note debunking a common misconception with named sources.

Strengthen author bios and interlink related assets to reinforce authority.

Watch:

Citation depth vs competitor; Passage Match Score improvements; time-to-visibility after updates.

Common pitfalls to avoid

One-and-done rewrites. GEO is a refresh discipline; stale stats and undated claims lose citations over time.

Ignoring entity ambiguity. Similar product names or acronyms cause misattribution in AI answers.

Over-indexing on one engine. Spread your effort across Google, ChatGPT, and Perplexity; winning on one doesn’t guarantee the others.

Bare URLs or “sources” sections without inline anchors. Engines favor verifiable, adjacent citations.

Syndication beating the original. Enforce canonicalization and keep your original richer with methods, visuals, and context.

Reporting to stakeholders: set expectations with data

Executive-friendly scorecard

Visibility: Share of Answers by engine and trend.

Quality: Sentiment of Mentions and top drivers.

Outcomes: AI referrals and conversions vs traditional organic.

Pipeline impact: Pages/answers influencing opportunities, with time-to-visibility.

Expectation-setting anchors

Reference macro patterns: AIO presence climbed quickly in early 2025 per the Semrush study, while referrals from AI answers may be fewer, with shorter sessions, per iPullRank’s analysis. This combination means progress often shows first in Share of Answers, then in traffic, and only later in pipeline.

Risk and limitation notes

Google does not publish full selection algorithms for AIO/AI Mode; use observational studies and your own logs to navigate.

Case studies are still emerging; treat uplift magnitudes as directional and validate locally.

Publisher referrals can fluctuate post-AIO; several industry digests reported declines, e.g., this Digiday analysis (2025). Your mileage will vary by intent and category.

Next steps

If you need a single place to track Share of Answers, sentiment of mentions, and a prioritized content roadmap across Google, ChatGPT, and Perplexity, consider trying Geneo to operationalize this framework without adding headcount.