How Generative AI Changed Content Authenticity: 2025's New Rules

An overview of how expectations for authenticity and originality changed in 2024–2025 as generative AI spread: Google’s search updates, new platform labeling rules, emerging regulation, and practical compliance strategies.

Updated on 2025-10-12

If your brand’s content strategy still treats “originality” as a matter of phrasing and style, you’re operating with a 2023 playbook. Across 2024–2025, the rules of authenticity have shifted from what you write to how you produce, prove, and label it—plus how platforms surface and judge it. The upshot: originality now requires verifiable provenance, transparent disclosures, and search-safe editorial integrity.

This article translates the past 18 months of policy, platform, and legal changes into a practical playbook for marketing leaders, SEO/AEO teams, and legal or compliance partners.

What changed in 2024–2025—and why it matters

- Search integrity jumped to the forefront. In March 2024, Google rolled out a major core update and three new spam policies targeting “scaled content abuse,” “site reputation abuse,” and “expired domain abuse.” Google’s guidance emphasizes that mass-produced, unoriginal pages risk enforcement whether or not they are made with AI, while genuinely helpful content can be rewarded “however it is produced.” See Google Search Central’s March 2024 announcement of the core update and spam policies.

-

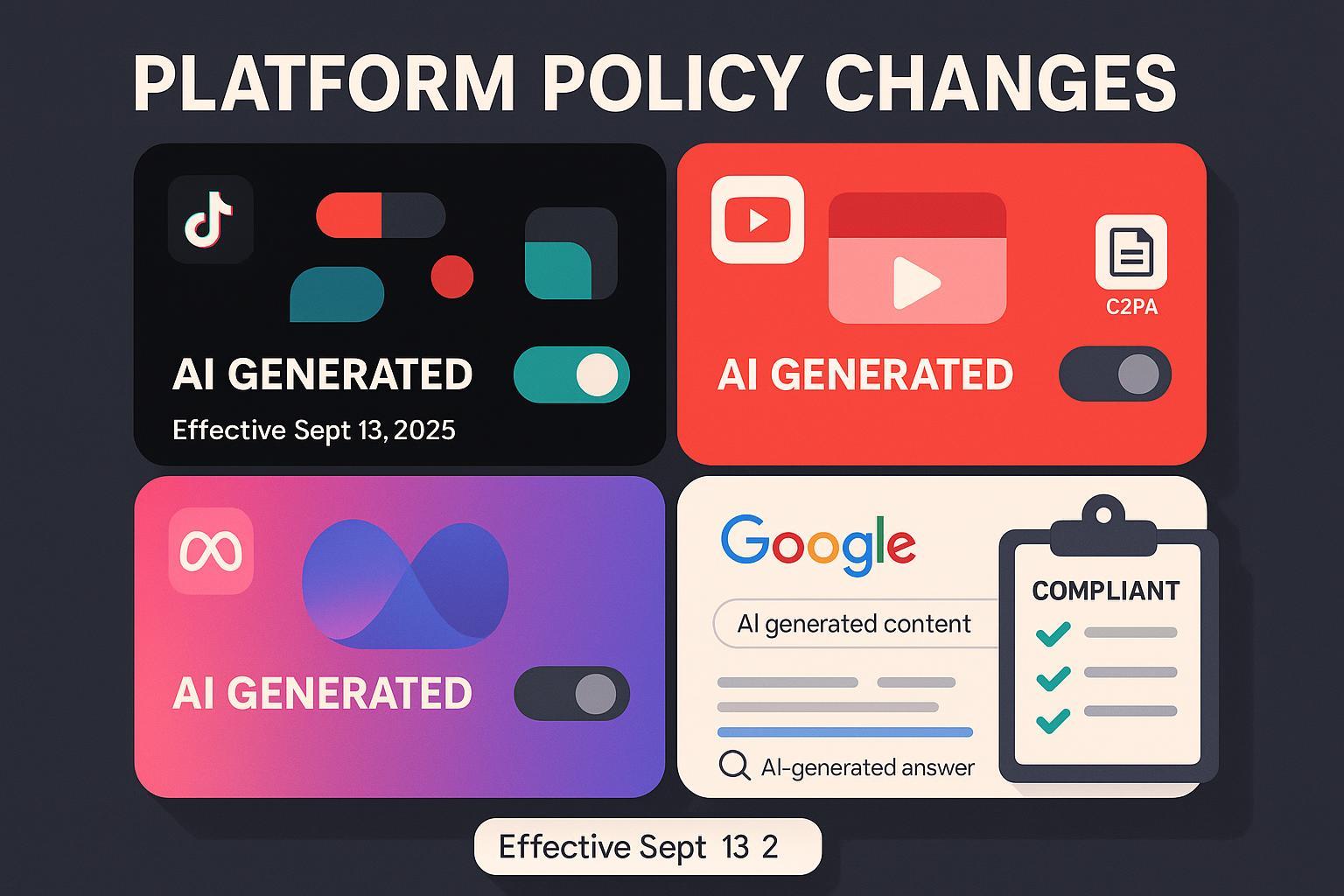

Platform labeling matured. YouTube now requires creators to disclose realistic synthetic or meaningfully altered media; labels surface in descriptions, and for sensitive topics may appear more prominently in the player, per the YouTube Help policy on altered or synthetic content (2024–2025). TikTok began automatically labeling AI-generated media that carries Content Credentials metadata and attaches credentials to its own AI outputs, according to the 2024 TikTok Newsroom announcement on C2PA-based labeling.

- Provenance tech went mainstream. The ecosystem rallied around Content Credentials (built on the C2PA standard) and watermarking such as Google DeepMind’s SynthID. A defense-sector explainer outlines how cryptographic signing and “Durable Content Credentials” can help preserve provenance even when metadata is stripped, while also noting their limitations; see the 2025 DoD/CSI Content Credentials brief.

- Regulation and guidance advanced. Article 50 of the EU AI Act sets transparency obligations, including machine-readable marking of AI-generated output by providers and disclosure of deep fakes and of AI-generated text published to inform the public on matters of public interest; these obligations apply from 2 August 2026, per the EUR-Lex 2024 AI Act text, Article 50. In the U.S., the Copyright Office reaffirmed in 2025 that copyright protection hinges on human authorship, assessed case by case according to the degree of human creative control, per the U.S. Copyright Office AI Report Part 2 (2025).

- Audiences demand transparency. In October 2025, Pew reported that among U.S. adults who encounter AI summaries in search, only a small share trust them “a lot,” while a majority express conditional trust and want clear disclosures; see the Pew Research Center short read (2025-10-01).

The authenticity triangle: provenance, labeling, and search integrity

Think of authenticity now as a triangle you have to keep in balance. Overinvest in one side and neglect the others, and you risk distribution loss, user distrust, or compliance exposure.


Provenance you can verify

- What it is: Attaching cryptographically signed Content Credentials to assets (images, video, audio—and increasingly, text manifests) to document how the piece was created and edited over time. Invisible watermarking (e.g., SynthID) can add another signal but is not a silver bullet.

- Why it matters: It provides a chain of custody and auditability that brand, platform, or legal stakeholders can review. However, credentials are only as reliable as your workflow discipline and toolchain.


Platform disclosure you can stand behind

- What it is: Honest, context-appropriate labeling of realistic synthetic or materially altered media, aligned with platform rules (e.g., YouTube, TikTok, Meta). Some platforms now add or read labels automatically via Content Credentials.

- Why it matters: Disclosures protect audience trust and reduce policy risk. Under some regimes (EU AI Act), certain disclosures will be legal obligations.


Search integrity you can defend

- What it is: People-first content that resists “scaled content abuse” patterns and demonstrates real expertise and usefulness, prioritizing editorial control and unique analysis over volume.

- Why it matters: Google’s 2024 policies formalized enforcement against mass-produced, unoriginal content (however it is produced) and low-oversight third-party hosting. Helpful, human-led content, with or without AI assistance, remains the north star.

Limitations to acknowledge

- Content Credentials can be removed or lost if workflows aren’t disciplined; “Durable” approaches aim to mitigate this, but they’re still maturing.

- Watermarks can degrade or be evaded; treat them as complementary signals, not guarantees.

- Platform labeling UX and thresholds evolve; keep a quarterly review cadence.

A practical playbook for 2025

Below is a cross-functional, defensible workflow that blends originality, compliance, and distribution. Tailor it to your risk profile and industry.

Editorial governance and originality

- Define clear tiers of AI involvement: AI-assisted (tools used for ideation or drafting under human direction) vs. AI-generated (synthetic media, especially realistic depictions of people or events that did not happen as shown). Document the tier in briefs and version history.

- Require a human-in-the-loop originality protocol: a human sets the thesis, selects sources, synthesizes insights, and edits for unique perspective; AI can draft variants or assist with structure, but final analysis must be human.

- Attach Content Credentials by default for visual and audiovisual assets; preserve originals and maintain an audit trail. Adopt Durable Content Credentials practices where feasible.

- Label realistic synthetic or materially altered media consistent with platform rules (e.g., YouTube’s thresholds for “meaningfully altered”), and align ad disclosures with organic content conventions.

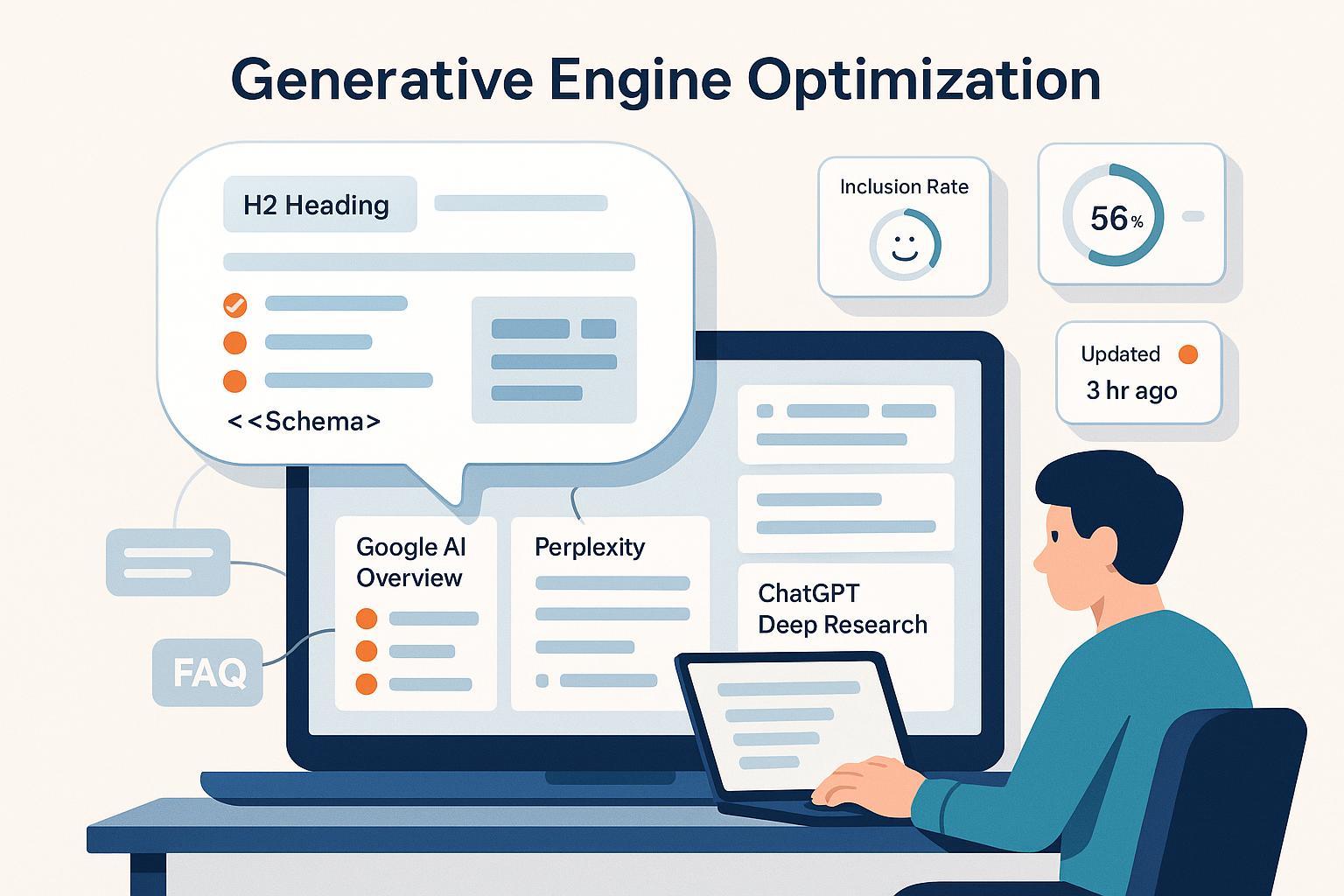

Search/AEO hygiene

- Avoid scaled content patterns: no mass programmatic pages, stitched content, or “template plus keyword” multiplication. Don’t host low-oversight third-party pages that trade on your site’s ranking signals (what Google calls “site reputation abuse”).

- Show people-first value: expert bylines and bios, descriptive anchors to primary sources, and clear problem-solution framing. Measure satisfaction (time on task, helpfulness ratings, resolved intent).

- Consolidate duplicative pages, prune thin archives, and map queries to distinct intents before drafting. Use post-publication reviews to verify that AI assistance didn’t introduce redundancy.

Provenance-by-default production

- Standardize tools that support Content Credentials across capture, edit, and export. Train creators to keep credentials intact through handoffs.

- Maintain a provenance register: asset ID, credential status, AI tools used (model family, version if available), and human approver.

- Stress-test workflows: confirm credentials survive common transforms (compression, cropping) and uploads to the platforms you rely on.

Platform disclosure matrix

- Build a one-page matrix covering YouTube, TikTok, and Meta: when to disclose; how labels are presented; what counts as “realistic” or “meaningfully altered”; penalties for non-disclosure; and how imported Content Credentials are treated.

- Re-verify quarterly as help docs and enforcement notes change.
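The provenance register and AI-involvement tiers can live in a spreadsheet, but a typed record makes gaps easy to flag automatically. The sketch below is illustrative only: the class names, field names, credential-status strings, and review rules are hypothetical, and it does not read or verify Content Credentials itself (that still requires C2PA-aware tooling).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class AIInvolvement(Enum):
    # Hypothetical tiers mirroring the playbook: no AI, AI-assisted, AI-generated.
    NONE = "none"
    ASSISTED = "ai_assisted"
    GENERATED = "ai_generated"


@dataclass
class ProvenanceEntry:
    """One row of a hypothetical provenance register."""
    asset_id: str
    credential_status: str                  # assumed values: "attached", "stripped", "not_supported"
    involvement: AIInvolvement
    ai_tools: list[str] = field(default_factory=list)  # model family, version if available
    human_approver: str | None = None
    realistic_or_altered: bool = False      # an editorial judgment, not detected automatically


def review_flags(entry: ProvenanceEntry) -> list[str]:
    """Return follow-up actions for one register row (illustrative rules only)."""
    flags = []
    if entry.credential_status != "attached":
        flags.append("re-export with Content Credentials")
    if entry.human_approver is None:
        flags.append("assign human approver")
    if entry.involvement is not AIInvolvement.NONE and not entry.ai_tools:
        flags.append("record AI tools used")
    if entry.realistic_or_altered:
        flags.append("check platform disclosure matrix")
    return flags


# Example: a realistic AI-generated image whose credentials were lost and that has no approver yet.
entry = ProvenanceEntry(
    asset_id="IMG-0042",
    credential_status="stripped",
    involvement=AIInvolvement.GENERATED,
    ai_tools=["image model v3"],
    realistic_or_altered=True,
)
print(review_flags(entry))
# → ['re-export with Content Credentials', 'assign human approver', 'check platform disclosure matrix']
```

Keeping “realistic or materially altered” as an explicit human-set field, rather than inferring it from the AI tier, reflects the playbook’s point that disclosure thresholds are platform-specific judgment calls.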

Measurement and monitoring: prove authenticity, protect distribution

Two things can be true: your process can be authentic, and platforms might still misrepresent or omit you in AI answers. That’s why monitoring matters.

- Track your presence and sentiment across AI surfaces (AI Overviews, chat answers, and short-video feeds). Capture how your brand is summarized, whether sources are cited, and how the tone shifts over time.

- Compare visibility and citation patterns before/after key policy updates (e.g., Google’s March 2024 changes; new platform disclosure tools). Adjust provenance and editorial SOPs if you see drops tied to specific content categories or asset types.

- Document when Content Credentials appear to be read by platforms (e.g., TikTok auto-labels assets carrying credentials) and note exceptions for troubleshooting.

A neutral example workflow

- In practice, brands often centralize monitoring for AI answers and sentiment across engines and keep a change log alongside provenance records. Tools like Geneo can support this by tracking brand mentions and visibility across ChatGPT, Perplexity, and Google AI Overviews, alongside sentiment trends and historical query records. Disclosure: Geneo is our product.

- For a deeper look at how teams operationalize cross‑engine monitoring and adjustments to editorial/SOPs, see these 2025 AI search strategy case studies.

What to report monthly

- AI answer coverage by topic cluster and intent tier (navigational, informational, transactional)

- Citation and link inclusion rates in AI answers

- Sentiment and framing deltas vs. your brand voice

- Issues with Content Credentials visibility or platform labels

- Remediation actions and experiments (e.g., revising disclosure copy; tightening originality checks; re-exporting assets with intact credentials)
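The coverage and citation figures in this monthly report can be computed from whatever query-level observations your monitoring produces. This minimal sketch assumes a hypothetical record with one row per tracked query per AI surface; the `AnswerObservation` shape and both rate definitions are assumptions, not a standard metric or any tool’s export format.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AnswerObservation:
    """One tracked query checked on one AI surface (hypothetical record shape)."""
    topic_cluster: str
    intent: str             # "navigational", "informational", or "transactional"
    brand_mentioned: bool   # the answer names your brand
    brand_cited: bool       # the answer cites or links one of your pages


def monthly_rates(observations: list[AnswerObservation]) -> dict[tuple[str, str], dict[str, float]]:
    """Coverage and citation rates for each (topic cluster, intent tier) group."""
    groups: dict[tuple[str, str], list[AnswerObservation]] = defaultdict(list)
    for obs in observations:
        groups[(obs.topic_cluster, obs.intent)].append(obs)

    report: dict[tuple[str, str], dict[str, float]] = {}
    for key, rows in groups.items():
        report[key] = {
            # Share of tracked answers that mention the brand at all.
            "coverage": sum(r.brand_mentioned for r in rows) / len(rows),
            # Share of tracked answers that cite or link your content.
            "citation_rate": sum(r.brand_cited for r in rows) / len(rows),
        }
    return report
```

Running the same function separately on observations before and after a policy date (for example, Google’s March 2024 update) gives the before/after comparison described under measurement and monitoring.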

What’s next: a 3–6 month outlook

- EU AI Act Article 50 guidance: Expect ongoing Commission clarifications and emerging codes of practice through 2026. Some sectors will face earlier scrutiny; prepare now by solidifying disclosure policies and provenance workflows aligned to the legal text.

- Platform UX evolves: YouTube’s disclosure UI and TikTok’s auto‑labeling scope (including audio) continue to iterate. Assign owners to watch help-center updates and test how labels present for sensitive topics.

- Search documentation updates: Google may refine examples or enforcement notes around site reputation abuse and scaled content patterns. Keep a governance log so you can quickly audit content if traffic shifts.

- Watermarking and credentials mature: SynthID and Content Credentials integrations will broaden. Treat them as layered signals; continue prioritizing human editorial control and helpfulness.

Quick compliance and originality checklist


Before production

- Define user intent and unique POV; assign a human owner for thesis and synthesis

- Confirm which assets need Content Credentials and which may require labels later

- Choose sources you can anchor with descriptive links to primary documents


During production

- Keep an audit trail: prompts, drafts, human edits, source list

- Attach credentials on export; verify survival after edits/compression

- Draft clear disclosure language for any realistic synthetic/altered media


Before publishing

- Run a scaled-content sniff test: is this page meaningfully unique, or a templated variation?

- Validate labels and metadata; ensure author bios and expertise are present

- Do a final people-first pass: does it resolve the reader’s question decisively?


After publishing

- Monitor AI answer visibility, citations, and sentiment monthly

- Review platform policy changes quarterly; update the disclosure matrix

- Maintain a mini change log for provenance and labeling edge cases
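For the scaled-content sniff test (and the post-publication redundancy review in the playbook), one simple first pass is to compare pages by word-shingle overlap using Jaccard similarity. This is a generic near-duplicate heuristic, not how Google evaluates pages; the shingle length `k=5` and the `0.5` threshold are assumptions to tune on your own content, and the pairwise loop is only practical for modest page counts (MinHash with locality-sensitive hashing is the usual way to scale it).

```python
from __future__ import annotations

import re
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Overlapping k-word sequences after lowercasing and dropping punctuation."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Very short pages collapse to a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Intersection size over union size; 1.0 means identical shingle sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.5, k: int = 5) -> list[tuple[str, str, float]]:
    """Return page pairs whose shingle overlap meets the (assumed) threshold, highest first."""
    sets = {url: shingles(body, k) for url, body in pages.items()}
    pairs = []
    for (u1, s1), (u2, s2) in combinations(sets.items(), 2):
        score = jaccard(s1, s2)
        if score >= threshold:
            pairs.append((u1, u2, round(score, 3)))
    return sorted(pairs, key=lambda p: p[2], reverse=True)
```

Long pages that share a template and differ only in a swapped city or keyword score close to 1.0; treat any flagged pair as a prompt for human review, not an automatic verdict.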

Mini change log

- 2025-10-12: Initial publication; includes references to Google’s March 2024 policies, 2024–2025 platform labeling updates, 2025 USCO guidance, and current consumer trust data.

Cited references (selected)

- Google — core update and spam policies announcement (2024-03-05)

- YouTube Help — altered or synthetic content disclosure (2024–2025)

- TikTok — C2PA-based automatic labeling announcement (2024-05-09)

- DoD/CSI — Content Credentials and Durable Credentials brief (2025-01-16)

- EU — AI Act Article 50 text (2024)

- U.S. Copyright Office — AI Report Part 2 on copyrightability (2025)

- Pew Research Center — AI summaries trust short read (2025-10-01)