The Future of Search 2025: AI Overviews & Natural Language Trends

Explore 2025’s search shifts: Google AI Overviews and AI Mode, Bing Copilot Search, ChatGPT Search, Perplexity, a practical GEO playbook, and measurement tactics you can apply this week.

A year ago, “best running shoes” might have been your query. Today, you’re more likely to type—or say—“I run 15 miles a week on wet pavement—what shoes will prevent shin splints, and where can I buy them nearby?” That shift captures the moment: search is evolving from matching keywords to understanding and completing tasks through conversational answers that cite sources, summarize options, and invite follow‑up questions.

What actually changed in 2024–2025

Across the big engines, product updates pushed search toward conversational, multimodal answers—fast.

- Google launched AI Overviews broadly in the U.S. in May 2024 and continued expanding. In 2025, Google introduced AI Mode with deeper reasoning and multimodality, emphasizing helpful web links and follow‑up exploration; see Google’s official update: AI Mode update (May 20, 2025).

- Microsoft introduced Copilot Search in Bing on April 4, 2025, describing a blend of traditional and generative search with sentence‑level citation linking, which makes provenance easier to trace: Introducing Copilot Search in Bing (2025).

- OpenAI rolled out ChatGPT Search in October 2024 with fast answers and links to relevant web sources, available via chatgpt.com: Introducing ChatGPT Search (2024).

- Perplexity doubled down on a citation‑forward answer design and publisher partnerships, making source visibility a core UX element.

While implementations evolve, the through‑line is clear: answers first, citations visible, and conversation continues.

What the numbers say about presence and clicks

Trigger rates and behavioral impacts vary by device, geography, and vertical. Still, several reputable datasets outline the direction of travel—use them as ranges, not absolutes, and always note the sample and window.

- Presence in Google results: Independent tracking indicates meaningful AI Overviews presence in 2025. For example, seoClarity’s U.S. analyses reported rising incidence across the year and documented overlap between AI Overview citations and top organic results; see their ongoing research summaries: seoClarity AI Overviews impact and overlap studies (2025).

- Monthly growth snapshots: Semrush reported that 13.14% of queries triggered AI Overviews in March 2025 (up from 6.49% in January 2025), based on its proprietary SERP sampling: Semrush AI Overviews study (July 22, 2025).

- User behavior when summaries appear: In a 2025 analysis of U.S. adults’ Google searches, Pew Research found that when an AI summary appeared, users clicked a traditional web result in 8% of visits vs. 15% when no AI summary appeared. Users also ended their browsing session after 26% of search pages with an AI summary, compared with 16% of pages without one: Pew Research on user behavior with AI summaries (2025).

Here’s the takeaway: when answer units surface, they soak up attention. For brands, visibility now includes whether you appear in the answer unit’s citations—on top of classic organic rankings.
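Because these datasets are best read as directions of travel, it helps to express each one as both an absolute change (percentage points) and a relative change. The minimal sketch below does only that arithmetic on the figures quoted above; `describe_change` is a hypothetical helper name, and the outputs are calculations on published numbers, not new data.

```python
def describe_change(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent)."""
    if before_pct == 0:
        raise ValueError("relative change is undefined when the baseline is 0")
    point_change = after_pct - before_pct
    relative_change = point_change / before_pct * 100
    return round(point_change, 2), round(relative_change, 1)


# Semrush: share of sampled queries triggering AI Overviews, January -> March 2025.
print(describe_change(6.49, 13.14))  # (6.65, 102.5): roughly a doubling

# Pew: traditional-result click rate, no AI summary (15%) -> AI summary shown (8%).
print(describe_change(15, 8))  # (-7, -46.7): nearly half as many clicks
```

Reporting both numbers guards against a common misreading: a 7‑point drop sounds small, but relative to a 15% baseline it is close to a halving.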

From keywords to intents: how queries are actually changing

Natural‑language queries look like tasks, constraints, and context, not just nouns. They often include:

- The job to be done (“compare,” “outline a plan,” “draft a schedule”).

- Constraints (budget, time, geography, device, compliance).

- Preferred modality (text, image, video, voice) and follow‑ups.

Think of it this way: a good query now reads like a brief. A few examples of rewrites that reflect intent (not just keywords):

- “best project management tool” ➜ “We’re a 15‑person agency with strict SOC 2 needs; compare Asana vs. ClickUp vs. Monday for permissioning and client portals.”

- “b2b pricing page” ➜ “Show me B2B SaaS pricing page patterns that reduce sticker shock; include two examples with transparent overage fees.”

- “how to prune roses” ➜ “I’m in zone 6 with powdery mildew issues. How do I prune climbing roses in late spring without stressing new growth?”

These prompts signal intent, entities, and evaluation criteria—exactly the inputs generative engines use to craft a better answer and choose which sources to cite.

GEO that actually helps (without the hype)

Generative Engine Optimization (GEO) isn’t a bag of tricks; it’s a re‑prioritization of fundamentals for an answer‑first world. Practical pillars include:

- Entity and context clarity: Make who you are, where you operate, and what you do unambiguous. Align brand, product, and people entities across your site and reputable profiles.

- Trust signals and citations: Cite high‑quality primary sources, maintain clean outbound and inbound link hygiene, and show your work with methodology notes.

- Structured data and scannable evidence: Use schema where appropriate, include concise comparisons, pros/cons, step flows, and embed reference‑ready snippets.

- Task‑completion content: Write to the job: prerequisites, steps, constraints, and outcomes. Add modality where it helps (short demo video, diagram, downloadable checklist).
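For the entity‑clarity and structured‑data pillars, a common starting point is schema.org `Organization` markup embedded as JSON‑LD, with `sameAs` pointing to reputable profiles that describe the same entity. The sketch below generates that markup; the company name and URLs are placeholders, `organization_jsonld` is a hypothetical helper, and whether any given answer engine uses this markup when choosing citations is an assumption to validate, not a guarantee.

```python
import json
from typing import Optional


def organization_jsonld(
    name: str,
    url: str,
    same_as: list[str],
    area_served: Optional[str] = None,
) -> str:
    """Return schema.org Organization markup serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        # Other profiles that describe this same organization.
        "sameAs": same_as,
    }
    if area_served:
        # Where you operate, stated explicitly rather than implied.
        data["areaServed"] = area_served
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = organization_jsonld(
    name="Example Co",
    url="https://www.example.com",
    same_as=["https://www.linkedin.com/company/example-co"],
    area_served="US",
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Keeping `name` and the `sameAs` list identical across your site and profiles is the point: the markup restates the entity alignment described above in machine‑readable form.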

For a grounding in frameworks and definitions, see Search Engine Land’s continually updated GEO library (2024–2025).

A measurement playbook for AI answer engines

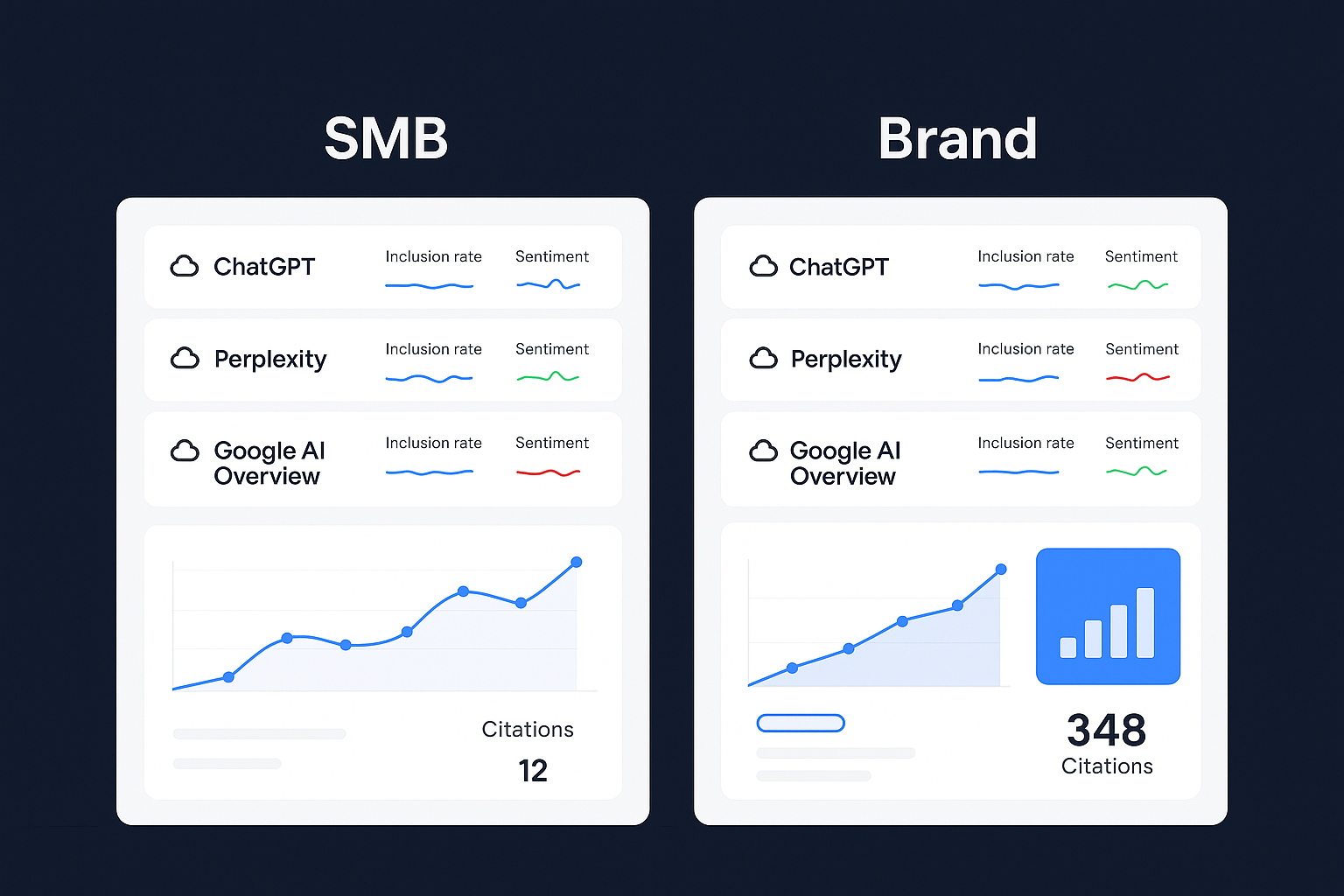

If you can’t measure brand visibility in answer units, you can’t manage it. Here’s a compact, repeatable logging approach you can implement this week.

- Scope: Track core topics and high‑value intents across Google (AI Overviews/AI Mode), Bing Copilot, Perplexity, and ChatGPT Search.

- Cadence: Weekly snapshots for each prompt variant; more frequent checks during known volatility (major Google updates, product launches).

- Method discipline: Always record the exact prompt, engine, device, location, date/time, whether an answer unit appeared, which citations appeared, brand mentions, link positions, and sentiment.
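Scope and cadence translate directly into a capture schedule: every prompt variant on every engine, on every weekly date, plus extra dates during known volatility. The sketch below only plans the captures (it does not query any engine); `snapshot_plan`, the prompts, and the engine labels are hypothetical.

```python
from datetime import date, timedelta
from itertools import product


def snapshot_plan(prompts, engines, start, weeks, extra_dates=()):
    """List every (date, engine, prompt) capture for a monitoring window.

    Weekly dates begin at `start`; `extra_dates` adds denser checks during
    known volatility (e.g. a major update). Duplicate dates are merged.
    """
    dates = {start + timedelta(weeks=w) for w in range(weeks)}
    dates.update(extra_dates)
    return [
        {"date": d.isoformat(), "engine": engine, "prompt": prompt}
        for d in sorted(dates)
        for prompt, engine in product(prompts, engines)
    ]


# Hypothetical prompt variants and engine/surface labels.
prompts = [
    "Outline a 10-step SOC 2 readiness plan for a 15-person SaaS.",
    "What does SOC 2 readiness involve for a small SaaS team?",
]
engines = ["Google AI Overviews", "Google AI Mode", "Bing Copilot", "Perplexity", "ChatGPT Search"]
plan = snapshot_plan(prompts, engines, date(2025, 11, 3), weeks=4, extra_dates=[date(2025, 11, 5)])
print(len(plan))  # 5 dates x 2 prompts x 5 engines = 50 captures
```

Counting captures up front makes the cost of adding an engine or a prompt variant explicit before you commit to the cadence.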

Two internal primers help when you compare engines or score prompts: a platform monitoring comparison (ChatGPT vs. Perplexity vs. Gemini vs. Bing) and a prompt‑level visibility and sentiment walkthrough.

Disclosure: Geneo is our product. In practice, Geneo can be used to log cross‑engine prompts, track whether your brand is cited in AI answers, and score sentiment over time. That said, any workflow is viable if you capture the fields below and maintain a change‑log.

| Field | Example | Why it matters |
|---|---|---|
| Prompt (verbatim) | “Outline a 10‑step SOC 2 readiness plan for a 15‑person SaaS.” | Lets you reproduce results and test variants. |
| Engine + Surface | Google AI Overview (mobile), Bing Copilot (desktop) | Surfaces behave differently by engine/device. |
| Geo/Language | U.S., English | Triggers and citations can be region‑specific. |
| Date/Time | 2025‑11‑01 10:32 PT | Enables volatility tracking and audits. |
| Answer unit present? | Yes/No | Core KPI: visibility opportunity exists or not. |
| Citations (ordered) | example.com, gov.site, vendor.com/blog | Where authority is drawn from; track position. |
| Brand mention/link? | Mentioned + link in position #2 | Measures exposure inside the answer. |
| Sentiment | Positive/Neutral/Negative with short note | Brand safety and messaging risk. |
| Follow‑ups tested | “Compare with ISO 27001” | Reveals conversation paths and new exposure chances. |
| Notes/Anomalies | “Citation rotated after refresh; screenshot saved.” | Supports QA and incident response. |
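If you keep the log in a spreadsheet or CSV rather than a dedicated tool, a typed record keeps every row complete. The sketch below maps the table’s columns to a Python dataclass and writes CSV; the field names are a hypothetical mapping, and `brand_position` assumes citations are logged as `domain/path` without a scheme, as in the example row.

```python
import csv
import io
from dataclasses import asdict, dataclass, field, fields
from typing import Optional


@dataclass
class AnswerSnapshot:
    """One log row; field names are a hypothetical mapping of the table columns."""

    prompt: str            # verbatim, so the result can be reproduced
    engine_surface: str    # e.g. "Google AI Overview (mobile)"
    geo_language: str      # e.g. "U.S., English"
    captured_at: str       # as recorded, e.g. "2025-11-01 10:32 PT"
    answer_present: bool   # did an answer unit appear at all?
    citations: list[str] = field(default_factory=list)  # in displayed order
    brand_mention: str = ""     # e.g. "Mentioned + link"
    sentiment: str = "Neutral"  # Positive / Neutral / Negative
    follow_ups: list[str] = field(default_factory=list)
    notes: str = ""

    def brand_position(self, brand_domain: str) -> Optional[int]:
        """1-based position of the first citation on brand_domain, else None."""
        brand_domain = brand_domain.lower()
        for position, cited in enumerate(self.citations, start=1):
            host = cited.split("/")[0].lower()  # assumes "domain/path", no scheme
            if host == brand_domain or host.endswith("." + brand_domain):
                return position
        return None


def write_log(snapshots, stream) -> None:
    """Write snapshots as CSV; list fields are joined with " | "."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(AnswerSnapshot)])
    writer.writeheader()
    for snap in snapshots:
        row = asdict(snap)
        row["citations"] = " | ".join(snap.citations)
        row["follow_ups"] = " | ".join(snap.follow_ups)
        writer.writerow(row)


snap = AnswerSnapshot(
    prompt="Outline a 10-step SOC 2 readiness plan for a 15-person SaaS.",
    engine_surface="Google AI Overview (mobile)",
    geo_language="U.S., English",
    captured_at="2025-11-01 10:32 PT",
    answer_present=True,
    citations=["example.com", "gov.site", "vendor.com/blog"],
    follow_ups=["Compare with ISO 27001"],
    notes="Citation rotated after refresh; screenshot saved.",
)
buffer = io.StringIO()
write_log([snap], buffer)
print(buffer.getvalue())
print(snap.brand_position("vendor.com"))  # 3
```

Deriving the brand’s citation position from the ordered citation list, rather than typing it by hand, removes one common source of inconsistent rows.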

Operational tips:

- Keep screenshots of answer units and citation carousels so you can review changes.

- Normalize entities (brand, product, people) so your log remains queryable.

- Add a weekly change‑log summary: net gain/loss in citations by engine; new opportunities from follow‑up questions; sentiment swings.
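Entity normalization and the weekly change‑log fit together: once brand variants collapse to one canonical name, net gain or loss per engine is a simple difference of counts. In the sketch below, the alias map, the brand “Acme,” and the row shape (`engine`, `cited_entities`) are all hypothetical; engines where the brand was cited in neither week simply do not appear in the result.

```python
from collections import Counter

# Hypothetical alias map: variants seen in answers -> one canonical entity name.
ALIASES = {
    "acme": "Acme",
    "acme inc": "Acme",
    "acme, inc.": "Acme",
}


def normalize_entity(raw: str) -> str:
    """Lowercase and collapse whitespace, then map known aliases to a canonical name."""
    key = " ".join(raw.lower().split())
    return ALIASES.get(key, raw.strip())


def citations_by_engine(rows, brand: str) -> Counter:
    """Count rows per engine in which the (normalized) brand is among cited entities."""
    counts = Counter()
    for row in rows:
        if brand in {normalize_entity(c) for c in row["cited_entities"]}:
            counts[row["engine"]] += 1
    return counts


def weekly_delta(last_week, this_week, brand: str) -> dict:
    """Net gain (+) or loss (-) in citing answers per engine, week over week."""
    before = citations_by_engine(last_week, brand)
    after = citations_by_engine(this_week, brand)
    return {engine: after[engine] - before[engine] for engine in sorted(set(before) | set(after))}


last = [
    {"engine": "Perplexity", "cited_entities": ["Acme Inc"]},
    {"engine": "Bing Copilot", "cited_entities": ["Other Co"]},
]
this = [
    {"engine": "Perplexity", "cited_entities": ["acme"]},
    {"engine": "Bing Copilot", "cited_entities": ["ACME, Inc."]},
    {"engine": "Perplexity", "cited_entities": ["Other Co"]},
]
print(weekly_delta(last, this, "Acme"))  # {'Bing Copilot': 1, 'Perplexity': 0}
```

Without the alias map, “Acme Inc” and “ACME, Inc.” would count as different entities and the Bing Copilot gain would be invisible.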

Role‑specific next steps

- For SEO and data leads: Instrument the log above, prioritize entity clean‑up, and run weekly prompt experiments across engines. Share deltas, not anecdotes.

- For content editors and PMMs: Convert top tasks into scannable, reference‑ready pages and briefs; include citations and concise comparisons ready to be quoted by answer engines.

- For CMOs and VPs of Growth: Budget for cross‑engine monitoring and QA. Set quarterly targets for answer‑unit citation share and brand sentiment, not just blue‑link rankings.
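Setting a quarterly target for answer‑unit citation share requires a concrete definition. One reasonable definition, assumed here rather than taken from any standard, is: of the logged captures where an answer unit appeared, the fraction that cite your domain, computed per engine. The sketch below implements that definition; `citation_share` and the row fields are hypothetical.

```python
from collections import defaultdict


def citation_share(snapshots, brand_domain: str) -> dict:
    """Per engine: cited answer units / answer units shown (captures with no unit are skipped)."""
    shown = defaultdict(int)
    cited = defaultdict(int)
    for snap in snapshots:
        if not snap["answer_present"]:
            continue  # no answer unit, so no citation opportunity to count
        shown[snap["engine"]] += 1
        if brand_domain in snap["citation_domains"]:
            cited[snap["engine"]] += 1
    return {engine: cited[engine] / shown[engine] for engine in sorted(shown)}


snaps = [
    {"engine": "Google AI Overviews", "answer_present": True, "citation_domains": ["example.com", "vendor.com"]},
    {"engine": "Google AI Overviews", "answer_present": True, "citation_domains": ["gov.site"]},
    {"engine": "Google AI Overviews", "answer_present": False, "citation_domains": []},
    {"engine": "Perplexity", "answer_present": True, "citation_domains": ["vendor.com"]},
]
print(citation_share(snaps, "vendor.com"))  # {'Google AI Overviews': 0.5, 'Perplexity': 1.0}
```

Excluding captures with no answer unit keeps the metric about performance inside answers; track the trigger rate separately so a drop in answer units is not mistaken for a drop in share.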

What to watch next—and where to focus

Expect continued changes in trigger rates, multimodal inputs (voice, image, short video), and how citations are displayed and weighted. Google’s shift toward AI Mode and Bing’s sentence‑level citations are early signs of a more transparent, task‑centric search flow. OpenAI and Perplexity will keep pushing answer quality and source visibility. Your unfair advantage isn’t a secret tactic—it’s disciplined measurement and content that actually helps someone complete a task.

If you want one place to run prompt tests, track citations across engines, and keep a clean change‑log, tools like Geneo can help you monitor and interpret the results over time—lightweight to start, rigorous as you grow. For ongoing context about volatility, keep an eye on algorithm updates and how answer behavior evolves; our perspective on shifts in late 2025 is summarized here: Google algorithm update perspective (Oct 2025).

One final question to keep your team aligned: If an answer engine summarized your topic tomorrow, would your brand be one of the trusted citations—and can you prove it over time?