Ethical AI Video Best Practices for Marketers: Safeguards & Compliance Guide

Learn proven best practices for ethical AI video generation, with actionable safeguards, workflow checklists, Sora 2 tips, and compliance for marketers.

Ethical AI video isn’t just a compliance checkbox; it’s a trust multiplier. Marketers adopting generators like Sora 2 can ship creative faster, test more ideas, and personalize at scale—but the reputational and legal stakes are real. This best-practice playbook distills what has worked in teams that deploy AI video responsibly, with step-by-step workflows and safeguards aligned to 2025 regulations and platform policies.

Why this matters for performance and trust

- Video ROI remains strong in 2025: HubSpot’s 2025 video statistics roundup, which summarizes Wyzowl’s longitudinal surveys, indicates that most marketers still report positive returns from video.

- Consumer interest in AI video is rising, especially for interactive and personalized formats, as discussed in the Idomoo 2025 consumer trends overview.

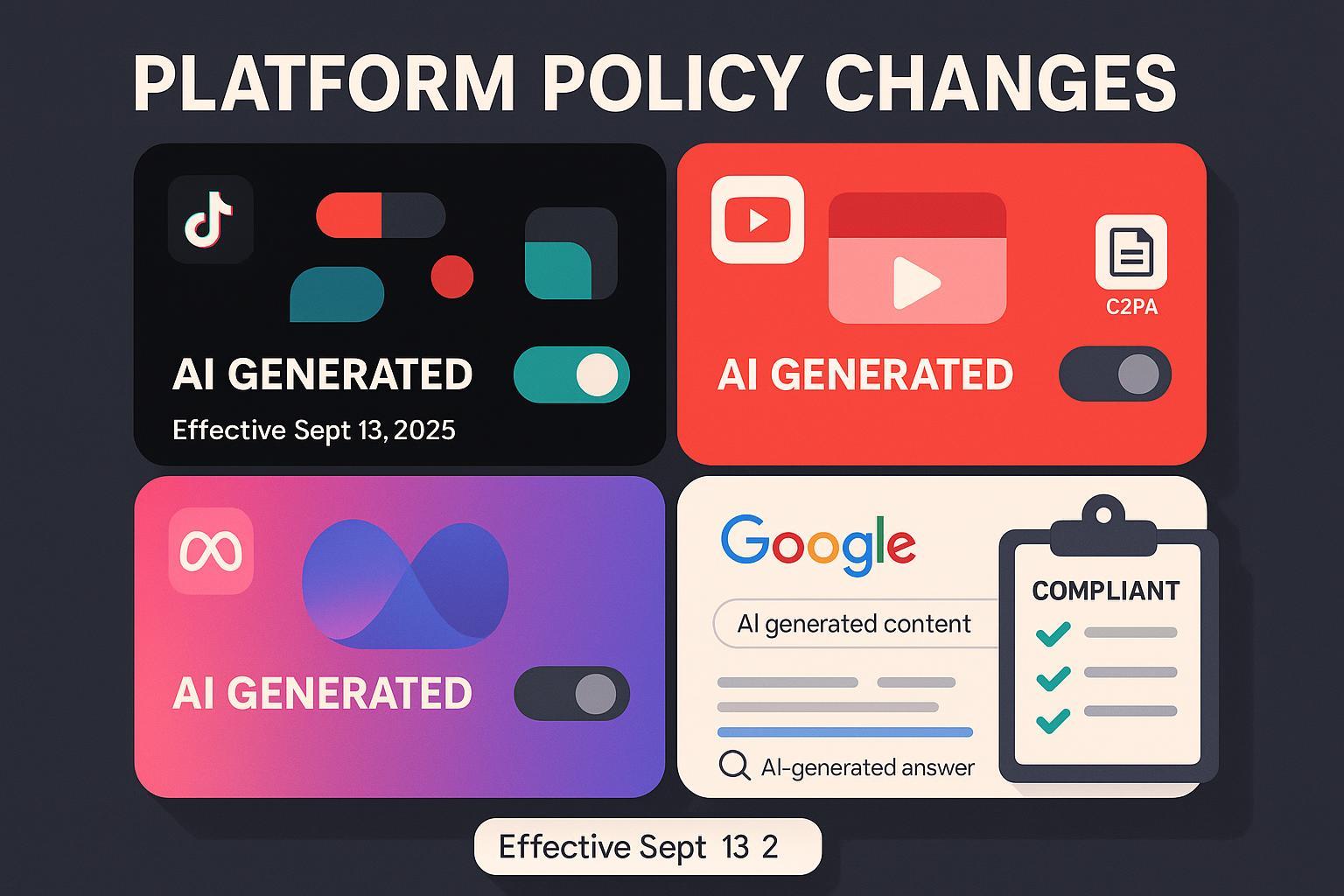

- Platforms increasingly require disclosure of realistic synthetic media. YouTube and others are moving from “optional disclosure” to mandatory labels for certain content categories—see YouTube’s guidance on disclosing altered or synthetic content.

The takeaway: ethical AI video is not a theoretical exercise. It directly affects distribution, monetization, and audience trust.

Five foundational principles you should operationalize

- Transparency (clear labeling)

  - If your video includes realistic synthetic scenes or characters, label it clearly and prominently. This aligns with platform policies and the EU’s direction of travel on transparency obligations for deepfakes (see the European Commission 2025 AI transparency FAQ, which covers the forthcoming Code of Practice and transparency guidance).

- Consent and rights of publicity

  - Secure written consent for any likeness or voice. Unionized commercial work now includes explicit AI-related protections; review the DLA Piper 2025 overview of SAG‑AFTRA Commercials Contracts.

- Provenance and content credentials (C2PA)

  - Embed and preserve provenance metadata so downstream platforms and audiences can verify origin. The C2PA Technical Specification 2.2 (2025) defines current best practices for creation and preservation.

- Copyright/IP hygiene

  - The U.S. Copyright Office reiterates that works lacking human authorship are not protectable; mixed works require disclosure and assessment. Review guidance at the U.S. Copyright Office’s AI resource, and ensure licenses and rights chains are documented.

- Bias, diversity, and fair representation

  - Build a fairness review into creative QA. Cross-functional guidance from advertising and AI ethics groups emphasizes human oversight and bias testing; see the IAB Tech Lab’s AI in Advertising Primer (2024).

The end-to-end ethical workflow (use this playbook)

1) Pre‑production: brief, consent, and compliance setup

- Define purpose and audience. Avoid sensitive contexts that could mislead (e.g., simulated news footage presented as real).

- Consent kit:

  - Written consent for any real person’s likeness or voice; include clauses for synthetic generation, future edits, and takedown procedures.

  - For commercial productions, verify union obligations and usage terms.

- Rights chain log:

  - Track all source assets (stock, licensed music, models), prompt drafts, and generated outputs.

  - Note training data license statements for vendors (if provided) and your usage terms.

- Provenance plan:

  - Choose tools that support Content Credentials/C2PA and configure your delivery stack (CDN/CMS) to preserve metadata.

- Fairness plan:

  - Set up a “representation checklist” to audit for stereotypes, cultural accuracy, and diversity.

- Regulatory scan:

  - If your campaign touches politics or public policy, review state deepfake laws and add conspicuous disclaimers where required. At minimum, align with evolving transparency norms indicated by EU guidance in 2025.
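
One way to keep the consent kit and rights chain log auditable is to store each asset as a structured record. The Python sketch below is illustrative only: the `ConsentRecord` and `AssetEntry` schemas, their field names, and the JSON-lines output are assumptions made for this example, not a standard or a vendor format.

```python
from dataclasses import dataclass, field, asdict
from datetime import date
import json


@dataclass
class ConsentRecord:
    """Written consent for one person's likeness or voice (hypothetical schema)."""
    person: str
    covers_synthetic_generation: bool
    covers_future_edits: bool
    takedown_contact: str
    expires: date


@dataclass
class AssetEntry:
    """One rights chain log entry: a source asset, prompt draft, or output."""
    asset_id: str
    kind: str            # e.g. "stock", "music", "prompt", "generated_output"
    license_note: str    # where the license or usage terms are filed
    consents: list = field(default_factory=list)  # list of ConsentRecord


def consent_gaps(entry: AssetEntry, today: date) -> list:
    """Return human-readable problems with the consents attached to an asset."""
    problems = []
    for c in entry.consents:
        if not c.covers_synthetic_generation:
            problems.append(f"{c.person}: no clause for synthetic generation")
        if not c.covers_future_edits:
            problems.append(f"{c.person}: no clause for future edits")
        if c.expires < today:
            problems.append(f"{c.person}: consent expired on {c.expires.isoformat()}")
    return problems


def to_log_line(entry: AssetEntry) -> str:
    """Serialize an entry as one JSON line for an append-only log file."""
    # default=str turns date objects into ISO strings such as "2025-01-31".
    return json.dumps(asdict(entry), default=str, sort_keys=True)
```

Running `consent_gaps` during pre-production surfaces missing clauses and expired permissions before any generation work starts, and appending `to_log_line` output to a file gives reviewers a simple trail to audit.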

2) Production & generation: prompt hygiene and safeguards

- Write prompts that avoid deception. Do not simulate real endorsements or impersonate individuals.

- Use platform disclosure features during export where available.

- Apply visible on‑screen labels for realistic synthetic scenes, and ensure descriptions note “AI‑generated elements.”

- For Sora 2 and similar tools, stay within usage policies and avoid prohibited content categories.

3) Post‑production: verification and sign‑off

- Human review:

  - Multi‑reviewer QA for factuality, fairness, and consent adherence.

- Technical checks:

  - Verify Content Credentials are embedded and intact; test a sample through an inspector tool.

- Legal review:

  - High‑risk or sensitive campaigns get counsel sign‑off.

4) Publishing: platform‑specific disclosures

- YouTube: Use the disclosure prompt/checkbox for realistic synthetic content; understand label placement tiers discussed in YouTube’s disclosure guidance.

- Meta: Expect an “AI info” label (the wording that replaced “Made with AI” in 2024) on applicable assets, applied through detection and self‑disclosure, per Meta’s labeling updates (2024–2025).

- TikTok: Follow the platform’s instructions for labeling AI‑generated content described in TikTok’s support guidance on AI‑generated content.

5) Monitoring: listen and be ready to respond

- Track sentiment and anomalies during the first 72 hours post‑launch.

- Establish escalation paths for:

  - Mislabeling or missing disclosures

  - Allegations of deepfake misuse

  - Complaints alleging bias or misrepresentation

- Prepare a rapid response template and a public correction protocol.
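
A simple way to spot anomalies in the 72-hour window is to compare hourly counts of negative mentions against a pre-launch baseline. The sketch below assumes you can export hourly counts from your social listening tool; the function name, the input shape, and the three-standard-deviation threshold are illustrative assumptions, not a recommended standard.

```python
from statistics import mean, pstdev


def anomalous_hours(baseline_counts, launch_counts, k=3.0, window_hours=72):
    """Return the post-launch hour indexes whose count exceeds the threshold.

    baseline_counts: hourly negative-mention counts from before launch.
    launch_counts:   hourly counts after launch, index 0 = first hour.
    k:               standard deviations above the baseline mean (assumption).
    """
    if not baseline_counts:
        raise ValueError("baseline_counts must not be empty")
    threshold = mean(baseline_counts) + k * pstdev(baseline_counts)
    return [
        hour
        for hour, count in enumerate(launch_counts[:window_hours])
        if count > threshold
    ]
```

Any hour this returns is a prompt for a human to look, not a verdict; route it into the escalation paths above. With a completely flat baseline the standard deviation is zero, so any count above the baseline mean is flagged.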

Compliance cheat sheet for 2025 (what to do now vs. plan ahead)

- EU AI Act transparency trajectory:

  - Marketers should prepare to label realistic synthetic content and adopt machine‑readable provenance. The European Commission 2025 AI transparency FAQ outlines timelines and transparency expectations.

- FTC advertising and endorsements:

  - Disclose material connections clearly and never use fake testimonials. Stay current via the FTC’s Artificial Intelligence hub (updated 2025) and review the FTC’s 2024 final rule banning fake reviews/testimonials.

- Platform policies:

  - YouTube synthetic disclosure: see YouTube’s policy page.

  - Meta labels: see Meta’s newsroom post on labeling AI content.

  - TikTok labels: see TikTok’s support page on AI‑generated content.

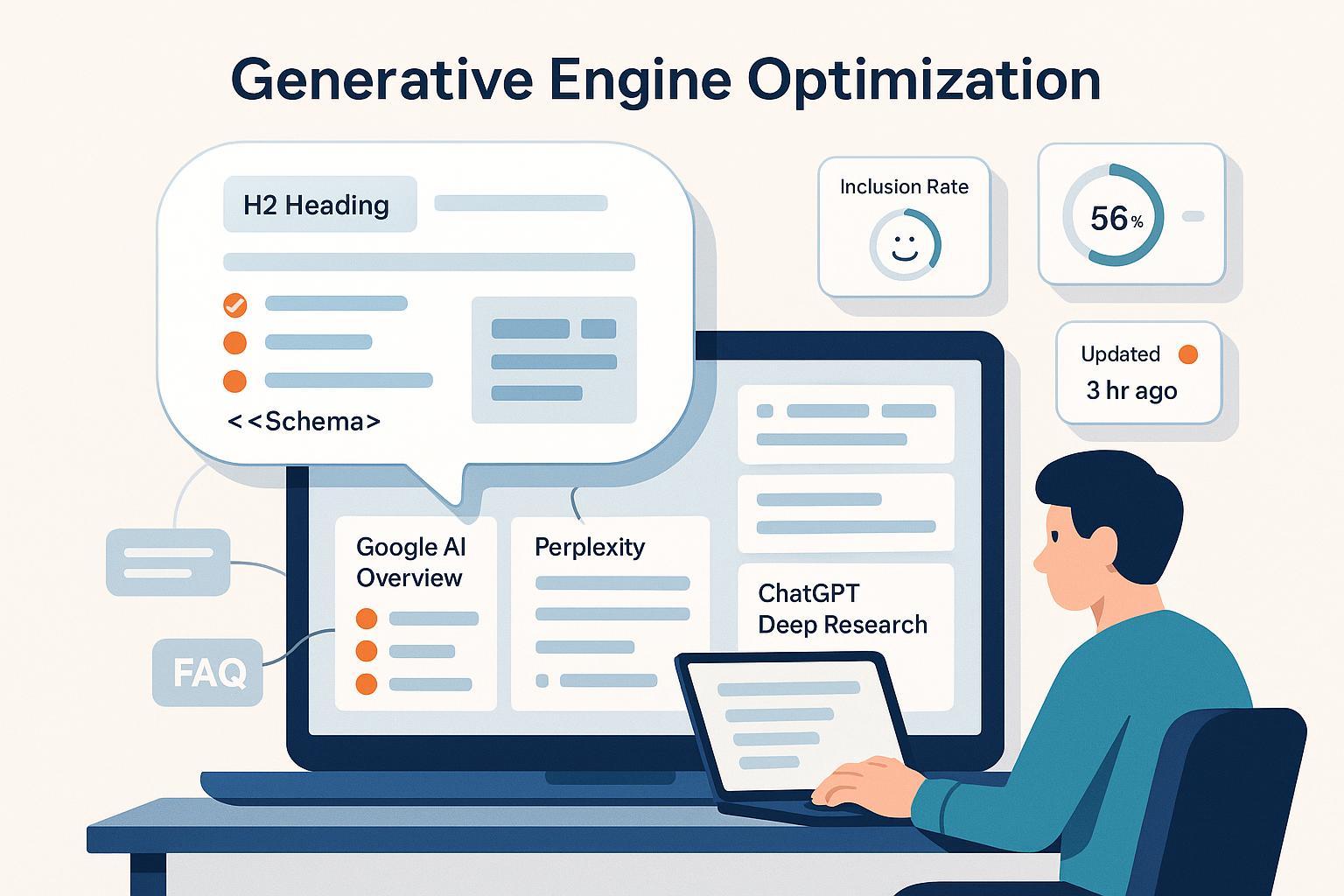

Sora 2 and provenance: how to operationalize responsibly

OpenAI documents a multi‑layered safety stack and refers to watermarking/provenance signals for Sora outputs. Treat this as one layer—pair it with your own visible disclosures and Content Credentials across the workflow.

- Review the current guardrails in the OpenAI Sora 2 System Card and the OpenAI “Launching Sora responsibly” overview, and align prompt strategies with the OpenAI policies for images and videos.

- Do not rely solely on platform watermarks. Implement C2PA/Content Credentials at creation and ensure your CMS and CDN preserve metadata per the C2PA Technical Specification 2.2 (2025).

Practical note: Some features evolve rapidly. Document your assumptions and re‑check vendor policies quarterly.

Opportunities and ROI: where AI video excels (and where it doesn’t)

- Speed and cost: Rapid iteration on storyboards and test variants; smaller crews for explainers, product demos, and social shorts.

- Personalization at scale: Auto‑generate multiple language/localization versions; tailor scenes to segments.

- Creative testing: A/B prompts, scenes, and scripts; quickly learn audience preferences.

- Multichannel reach: Platform‑specific cuts, subtitles, and aspect ratios with automation.

How to measure ROI credibly:

- Track time saved (hours per asset), production cost per minute, and cycle times from brief to publish.

- Compare engagement (watch time, CTR), conversion rates, and sentiment versus traditionally produced videos.

- Use controlled pilots for 4–6 weeks per channel before scaling.
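
The metrics above reduce to a few ratios that are easy to compute consistently across pilots. This is a minimal sketch: the dictionary keys (`cost`, `minutes`, `hours_per_asset`, `ctr`, `conversion_rate`) are hypothetical names chosen for the example, and it does not test whether a difference is statistically meaningful.

```python
def cost_per_minute(total_cost: float, finished_minutes: float) -> float:
    """Production cost divided by minutes of finished video."""
    if finished_minutes <= 0:
        raise ValueError("finished_minutes must be positive")
    return total_cost / finished_minutes


def relative_change(ai_value: float, baseline_value: float) -> float:
    """Fractional change of the AI pilot versus baseline (0.25 means +25%)."""
    if baseline_value == 0:
        raise ValueError("baseline_value must be non-zero")
    return (ai_value - baseline_value) / baseline_value


def pilot_summary(ai: dict, baseline: dict) -> dict:
    """Compare an AI-video pilot with traditionally produced videos."""
    ai_cpm = cost_per_minute(ai["cost"], ai["minutes"])
    base_cpm = cost_per_minute(baseline["cost"], baseline["minutes"])
    return {
        "hours_saved_per_asset": baseline["hours_per_asset"] - ai["hours_per_asset"],
        "cost_per_minute_ai": ai_cpm,
        "cost_per_minute_baseline": base_cpm,
        "cost_per_minute_change": round(relative_change(ai_cpm, base_cpm), 4),
        "ctr_change": round(relative_change(ai["ctr"], baseline["ctr"]), 4),
        "conversion_change": round(
            relative_change(ai["conversion_rate"], baseline["conversion_rate"]), 4
        ),
    }
```

As a made-up illustration, a 2-minute baseline video costing 6,000 and taking 40 hours, compared with a 2-minute AI variant costing 1,500 and taking 10 hours, yields 30 hours saved per asset and a cost-per-minute change of -0.75 (75% lower). Compute the same summary per channel so the controlled pilots stay comparable.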

Trade‑offs to acknowledge:

- Hyper‑real synthesis increases disclosure burdens and reputational risk.

- Complex scenes may still require traditional production for quality or authenticity.

- Some audiences prefer human‑made storytelling; align format to context and brand values.

Advanced safeguards that pay off in real campaigns

- Bias audits and fairness logs:

  - Record prompt choices, representation decisions, and reviewer notes. Rotate reviewers to reduce blind spots.

- Rights management:

  - Maintain signed consent forms; catalog likeness/voice permissions and expiration windows.

- Delivery‑path integrity:

  - Confirm that your CMS, CDN, and social upload workflows retain Content Credentials. Test periodically.

- Crisis protocol:

  - Draft a three‑step playbook: verify and document; communicate transparently; remediate and update controls. Equip spokespeople and publish an outline on your newsroom page.
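
For the delivery-path check, a cheap first test is to compare a cryptographic hash of the file you exported with the file your CMS or CDN actually serves. If the bytes are identical, any embedded metadata, including Content Credentials, is necessarily unchanged. A mismatch only tells you the file was rewritten somewhere along the path, so it still needs a look in a Content Credentials inspector. The function names and return labels below are assumptions for this sketch.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large videos do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def delivery_check(original: Path, delivered: Path) -> str:
    """Return 'identical' if the delivered bytes match the export, else 'modified'.

    'modified' does not prove that credentials were stripped; it means the
    file was re-encoded or rewritten and should be inspected manually.
    """
    if sha256_of(original) == sha256_of(delivered):
        return "identical"
    return "modified"
```

This works best for paths you control, such as your own CMS and CDN. Upload paths that transcode video will report `modified` every time, so for those the manual inspector test remains the real check.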

Copyable checklists

Ethical review checklist (before publishing)

- Purpose and audience clarity; avoid misleading real‑world contexts

- Explicit, written consent for any likeness/voice; union obligations reviewed

- IP rights chain verified; stock/licensing documented

- Disclosure label added on screen and in description where realistic synthesis appears

- Platform‑specific disclosure toggles completed (YouTube, TikTok, etc.)

- Content Credentials embedded and verified; delivery preserves metadata

- Fairness review passed; diversity and cultural accuracy confirmed

- Legal review complete for sensitive/political contexts

- Monitoring plan active (social listening, anomaly alerts)
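
Teams that publish through a script or CMS hook can turn the checklist above into a blocking gate. This is a minimal sketch: the item keys are hypothetical identifiers that mirror the nine checklist lines, and the `legal_review_required` flag models the fact that legal review applies to sensitive or political contexts.

```python
# Hypothetical keys, one per line of the ethical review checklist.
ETHICAL_REVIEW_ITEMS = (
    "purpose_and_audience_clear",
    "written_consent_on_file",
    "ip_rights_chain_verified",
    "disclosure_label_added",
    "platform_disclosure_completed",
    "content_credentials_verified",
    "fairness_review_passed",
    "legal_review_complete",
    "monitoring_plan_active",
)


def blocking_items(review: dict, legal_review_required: bool = True) -> list:
    """Return checklist items that are missing or not marked True."""
    missing = []
    for item in ETHICAL_REVIEW_ITEMS:
        if item == "legal_review_complete" and not legal_review_required:
            continue  # legal sign-off only gates sensitive/political campaigns
        if not review.get(item, False):
            missing.append(item)
    return missing


def ready_to_publish(review: dict, legal_review_required: bool = True) -> bool:
    """True only when nothing on the checklist is outstanding."""
    return not blocking_items(review, legal_review_required)
```

Treating an absent key the same as a failed check keeps the gate conservative: an item nobody recorded blocks publishing just like one that was explicitly failed.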

Rapid response checklist (if there’s a problem)

- Verify facts, provenance, and consent trail within hours

- Issue clear public statement; pin corrections; update labels where missing

- File takedown requests with platforms if impersonation or harmful deepfake misuse occurs

- Document incident; adjust prompts/datasets/policies; publish a post‑mortem summary when appropriate

Platform policy nuggets marketers often miss

- YouTube labels can be more prominent for sensitive topics—teams should pre‑plan additional on‑screen disclosure for news‑adjacent content.

- Meta and TikTok use both self‑disclosure and detection signals; assume your content may be auto‑labeled even if you forget to check a box.

- FTC enforcement against deceptive AI claims extends to exaggerated performance statements. Avoid claims such as “AI makes videos 10x better” unless you can substantiate them with rigorous data.

Implementation timeline

- 30 days: Draft internal AI video policy; train creative teams; implement consent templates; adopt platform disclosure steps; run a small pilot with full provenance and fairness checks.

- 60 days: Extend pilots to two channels; standardize checklists; set up social listening and escalation; start tracking ROI metrics.

- 90 days: Roll out across priority campaigns; publish an external “Responsible AI” note; audit delivery paths for Content Credentials retention; schedule quarterly policy reviews.

Further resources and next steps

- If you need a broader foundation on AI content creation and tool selection, explore complementary guides:

  - A practical overview of tools: Best AI Content Generators for Every Creator

  - Video SEO tactics for creators: Best AI SEO Tools for YouTube

  - Ethical and operational basics: Comprehensive Guide to AI‑Generated Content

- For teams coordinating AI writing and publishing alongside video, you can centralize workflows using QuickCreator for planning and content ops. Disclosure: We mention QuickCreator as a relevant resource; evaluate independently to ensure it fits your compliance and workflow needs.

Source notes for practitioners

- EU transparency and labeling trajectory: see the European Commission’s 2025 guidance in the AI transparency FAQ.

- FTC advertising/endorsement guidance and enforcement updates: follow the FTC Artificial Intelligence hub and the 2024 final rule banning fake reviews/testimonials.

- Platform disclosure policies: YouTube disclosure help, Meta labeling newsroom post, TikTok support page.

- Sora 2 documentation and OpenAI policy pages: Sora 2 System Card, Launching Sora responsibly, OpenAI policies for images/videos.

- Provenance standard: C2PA Technical Specification 2.2 (2025).

- Copyright/IP and authorship: U.S. Copyright Office AI page.

- Union and likeness protections: DLA Piper 2025 overview of SAG‑AFTRA Commercials Contracts.