Top E-commerce AI Assistant Questions & Agency Optimization Tactics

Discover the top e-commerce questions AI assistants get and proven agency strategies to optimize for AI shopping in 2026.

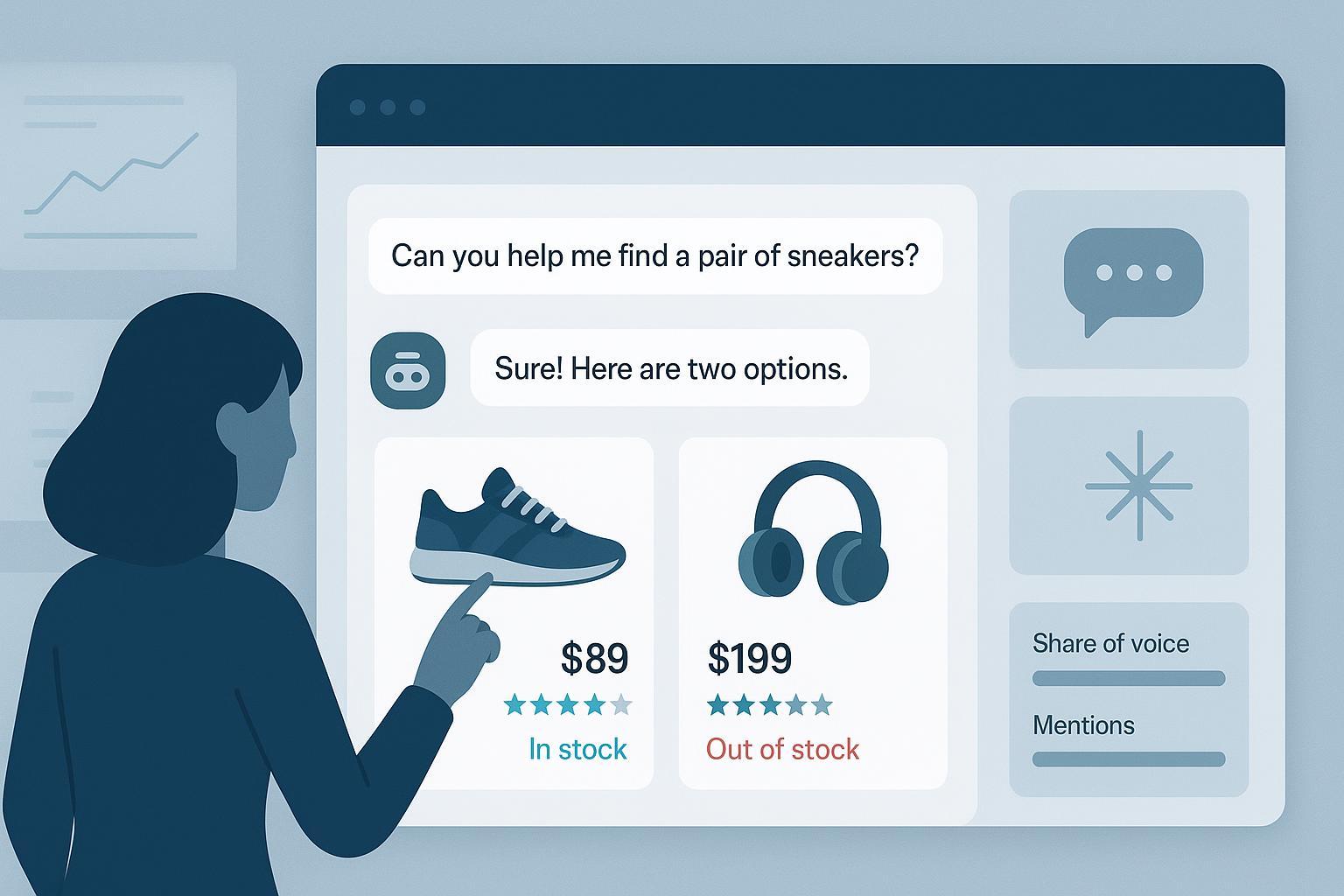

Shoppers no longer phrase requests as keyword searches; they talk to assistants. Queries bundle budget, persona, specs, and context, and the answers often appear inside ChatGPT, Perplexity, or Google’s AI experiences. For agencies, this shifts the focus from classic rankings to whether a brand and its products are cited, recommended, and convertible in AI answers. If you’re new to the concept, here’s a primer on the idea of AI visibility and why it matters.

Below is a practitioner FAQ that maps real shopper questions to specific levers your teams can execute across PDPs, feeds, and policies—plus how to measure impact when AI systems intercept clicks.

The core shopper questions AI assistants receive (and what to optimize)

Q1) “What are the best [product] under $[budget]?”

Price‑constrained superlatives are bread‑and‑butter for assistants. Chat interfaces often build buyer guides and product cards from structured specs, reviews, and reliable price/availability data. To qualify, ensure complete Product and Offer structured data, accurate GTIN/MPN/brand, and fresh prices/stock synced via your feed or Content API. Google recommends automatic item updates to align landing‑page reality with Merchant Center data; this helps AI experiences surface correct pricing and availability (see Google’s guidance on Content API best practices and Automations for price/availability).

On‑page, add comparison‑ready specs (dimensions, materials, ingredients, care, noise levels, power draw). If you want assistants to pick your products for “under $X,” make “price‑to‑value” legible in copy, tables, and schema.
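As a rough illustration of the Product and Offer structured data described above, the sketch below builds a schema.org JSON-LD object in Python. The function name, the sample product, and the example.com URL are hypothetical; the property names (`gtin`, `mpn`, `offers`, `availability`) come from schema.org, and the values should be filled from the same source that feeds Merchant Center.

```python
import json


def product_jsonld(name, brand, gtin, mpn, price, currency, in_stock, url):
    """Build a schema.org Product with a nested Offer as a JSON-LD dict.

    Hypothetical helper: the inputs should come from the same system that
    populates the product feed so page markup and feed values stay aligned.
    """
    availability = (
        "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    )
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "gtin": gtin,
        "mpn": mpn,
        "offers": {
            "@type": "Offer",
            "url": url,
            "price": f"{price:.2f}",  # two decimals, no currency symbol
            "priceCurrency": currency,
            "availability": availability,
        },
    }


# Sample values are placeholders, not real catalog data.
example = product_jsonld(
    "Example Blender", "ExampleBrand", "00012345678905", "EB-100",
    79.5, "USD", True, "https://example.com/p/eb-100",
)
print(json.dumps(example, indent=2))
```

The printed JSON would sit inside a `<script type="application/ld+json">` tag on the PDP.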

Q2) “Which is better: [A] vs [B]?”

AI assistants thrive on side‑by‑side clarity. Add comparison tables that align attributes across competing SKUs, both yours and major alternatives. Use canonical identifiers (GTIN/brand/model) so assistants can reconcile items across sources. Keep PDP FAQs with direct, sourced answers to common head‑to‑head questions. Where Google’s AI Overviews appear, solid structured data and clear editorial comparisons make it easier for the system to cite you; for context on answer engine optimization (AEO) behavior and measurement, see this executive guide to AEO best practices (2025).

Q3) “Is this right for [use case/persona]?”

These intents need narrative plus specs. Add “Best for” sections on PDPs (“Best for small apartments,” “Best for beginners”), compatibility matrices, and environmental constraints (room size, voltage standards, water resistance). Assistants like ChatGPT’s Shopping Research synthesize this into buyer‑fit language; OpenAI described how it compiles detailed guides and clarifying questions in its 2025 Shopping Research post.

Q4) “Got a gift idea for [persona/age/hobby]?”

Gift prompts depend on persona‑tagged copy, bundles, and curated collections. Include price tiers, age ranges, and hobby tags in category copy and product attributes; expand alt text and captions on lifestyle images to signal use context. Short PDP videos with transcripts help assistants summarize who the product suits.

Q5) “Show me something like this but cheaper/sustainable/local.”

Offer on‑page alternatives (similar‑items blocks), sustainability badges with verifiable criteria, and clear materials sourcing in specs. For “local,” make sure local store inventory is set up so assistants can surface nearby in‑stock options. Google documents how to submit local inventory for free local listings and local inventory ads (LIAs) via the Merchant Inventories API or feeds; start with the local inventory guide to ensure store codes, availability, and price are accurate.

Q6) “Is it in stock near me?”

This is where Local Store Inventory (LSI) alignment matters. Link and maintain your Business Profile, use the free local listings/LIAs, and keep local availability fresh. Assistants will favor sources that can answer availability with confidence.

Q7) “What’s the return/warranty policy and shipping details?”

Policy clarity isn’t glamorous, but assistants reference it. Publish returns and shipping details on site and in structured data. Google supports MerchantReturnPolicy and shipping policy markup; note that product‑level policies can override organization‑level, and Search Console/Merchant Center settings can supersede markup. See Google’s return policy structured data and shipping policy structured data.
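For the return policy markup mentioned above, a minimal sketch might look like the following. The helper name and the sample values (30 days, US, return by mail) are assumptions for illustration; the property names and enumeration URLs are schema.org's `MerchantReturnPolicy` vocabulary. Check Google's current documentation for which fields it requires before shipping anything like this.

```python
import json


def return_policy_jsonld(country, days, free_returns):
    """Build a schema.org MerchantReturnPolicy dict for a finite return window.

    Hypothetical helper; the values passed in below are sample data only.
    """
    policy = {
        "@type": "MerchantReturnPolicy",
        "applicableCountry": country,
        "returnPolicyCategory": "https://schema.org/MerchantReturnFiniteReturnWindow",
        "merchantReturnDays": days,
        "returnMethod": "https://schema.org/ReturnByMail",
    }
    if free_returns:
        policy["returnFees"] = "https://schema.org/FreeReturn"
    return policy


# Attach the policy to an Offer through hasMerchantReturnPolicy.
offer = {
    "@type": "Offer",
    "price": "79.50",
    "priceCurrency": "USD",
    "hasMerchantReturnPolicy": return_policy_jsonld("US", 30, True),
}
print(json.dumps(offer, indent=2))
```

Generating the policy from one function keeps product-level markup consistent with the policy page, which matters because settings elsewhere can supersede markup that disagrees.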

Engine nuances agencies should plan for

- ChatGPT: Shopping Research compiles detailed buyer guides and, in some cases, enables Instant Checkout for approved merchants. To be reliably cited, make your PDPs verifiable and your brand pages trustworthy references (OpenAI’s 2025 posts outline the experience).

- Perplexity: Conversational shopping uses contextual memory and can present curated product cards; Instant Buy via PayPal enables direct purchase in chat for supported merchants.

- Google Search (AI Overviews/AI Mode): Appearance is volatile and can depress classic organic CTR when AI answers absorb demand. Invest in structured data completeness, feed freshness, and policy clarity so your brand is a trusted citation. Industry reporting in 2025 covered CTR declines tied to AI Overviews presence.

Quick map: question pattern → optimization lever

| Shopper question pattern | Content and data levers agencies should own |
|---|---|
| Best X under $Y | Comparison hubs and PDP tables; complete Product/Offer schema; fresh price/stock via feeds/API; canonical identifiers (GTIN/brand); evidence in copy for price‑to‑value; Google’s Content API best practices |
| A vs B | Side‑by‑side tables; PDP FAQs; consistent model identifiers; editorial summaries that state “who should buy which” |
| Fit for use/persona | “Best for” sections; compatibility matrices; environmental constraints; transcripts for short videos |
| Gift ideas | Collections with persona/age/hobby tags; tiered price ranges; lifestyle imagery with descriptive alt text |
| Like this but cheaper/sustainable/local | Alternatives modules; sustainability attributes; local inventory setup via Google’s documented local inventory flows |
| In stock near me | Business Profile alignment; free local listings/LIAs; store codes and on‑hand accuracy |
| Returns/warranty/shipping | On‑site policy pages; MerchantReturnPolicy + shipping policy markup |

Localization and variants (extra FAQs)

Q8) “Does this work in my country/size standard/language?”

Publish localized PDPs with correct units (imperial/metric), voltage/electrical standards, and size conversions. Align localized pricing and availability in feeds by market. Use hreflang on alternates and ensure reviews include local language where possible. Assistants will prefer sources that match the user’s locale and constraints.
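To make the hreflang advice concrete, here is a small sketch that renders alternate link tags for a set of localized PDPs. The function name and the example.com URLs are hypothetical, and adding an `x-default` fallback is an assumption about your setup rather than something every site needs.

```python
from html import escape


def hreflang_links(alternates, default_url):
    """Render <link rel="alternate"> tags for localized versions of one PDP.

    alternates: dict mapping language-region codes (e.g. "en-us") to URLs.
    default_url: fallback page for locales without a dedicated version.
    """
    lines = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    ]
    lines.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
    )
    return lines


# Placeholder URLs for illustration.
for tag in hreflang_links(
    {"en-us": "https://example.com/us/p/1", "de-de": "https://example.com/de/p/1"},
    "https://example.com/p/1",
):
    print(tag)
```

Every localized page should emit the same full set of tags, including one that points to itself.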

Q9) “How do we keep color/size/material variants straight in AI answers?”

Give each variant a unique ID and group with item_group_id in Merchant Center; include attributes such as color, size, material. Ensure the PDP preselects the exact variant tied to the landing URL and that structured data mirrors feed values. Google’s support materials emphasize variant hygiene and grouping.
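A quick pre-upload check can catch the most common variant problems. The sketch below assumes feed rows are already parsed into dictionaries keyed by Merchant Center attribute names (`id`, `item_group_id`, `color`, `size`, `material`); the function name and the two rules it applies are illustrative, not Google's validation logic.

```python
from collections import defaultdict


def variant_issues(items):
    """Flag duplicate IDs and variants in a group that cannot be told apart.

    items: list of dicts with keys id, item_group_id, color, size, material.
    """
    issues = []
    seen_ids = set()
    groups = defaultdict(list)
    for item in items:
        if item["id"] in seen_ids:
            issues.append(f"duplicate id: {item['id']}")
        seen_ids.add(item["id"])
        groups[item.get("item_group_id")].append(item)

    for group_id, members in groups.items():
        if group_id is None or len(members) < 2:
            continue  # ungrouped or single-item groups need no comparison
        combos = [(m.get("color"), m.get("size"), m.get("material")) for m in members]
        if len(set(combos)) < len(combos):
            issues.append(
                f"group {group_id}: variants share identical color/size/material"
            )
    return issues


# Sample rows are made up for illustration.
feed = [
    {"id": "tee-red-m", "item_group_id": "tee", "color": "red", "size": "M", "material": "cotton"},
    {"id": "tee-blue-m", "item_group_id": "tee", "color": "blue", "size": "M", "material": "cotton"},
]
print(variant_issues(feed))
```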

Measurement and reporting (what to track when clicks shift to AI)

Q10) “How do we measure our brand’s presence inside AI answers?”

Track prompt‑level visibility: the exact questions, whether your brand or SKUs are mentioned, the type of recommendation (shortlist, primary pick, alternative), and placement within the answer. Segment by assistant (ChatGPT, Perplexity, Google) and by category. Correlate swings with site/feed changes, policy updates, or review surges. For a structured approach, see this guide on how to perform an AI visibility audit and companion advice on tracking and analyzing AI traffic (2025).

Disclosure: Geneo (Agency) is our product. For agencies that need white‑label tracking across ChatGPT, Perplexity, and Google’s AI Overviews, Geneo (Agency) can be used to monitor brand mentions, recommendation types, and share of voice over time and to deliver client‑ready dashboards. Use any monitoring tool that fits your stack; the key is to log prompts, platforms, and placement consistently.
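Whatever tool you use, consistent logging starts with a fixed record shape. The sketch below is one possible schema for a single observation, covering the fields named above (prompt, assistant, mention, recommendation type, placement). The class name, field names, and sample values are all hypothetical.

```python
from dataclasses import dataclass, asdict
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class VisibilityObservation:
    """One logged AI answer for one prompt (hypothetical schema)."""
    observed_on: date
    assistant: str                       # e.g. "chatgpt", "perplexity", "google"
    category: str
    prompt: str
    brand_mentioned: bool
    recommendation_type: Optional[str]   # "primary", "shortlist", "alternative", or None
    position: Optional[int]              # 1-based placement in the answer, None if absent


def to_row(obs):
    """Flatten an observation into a dict suitable for a CSV or warehouse row."""
    row = asdict(obs)
    row["observed_on"] = obs.observed_on.isoformat()
    return row


# Sample observation with made-up values.
obs = VisibilityObservation(
    observed_on=date(2026, 1, 15),
    assistant="perplexity",
    category="blenders",
    prompt="best blender under $100",
    brand_mentioned=True,
    recommendation_type="shortlist",
    position=2,
)
print(to_row(obs))
```

Keeping the prompt text verbatim makes it possible to re-run the same question later and compare results.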

Q11) “What KPIs make sense when AI Overviews reduce traditional CTR?”

Use a KPI set that reflects AI surfaces. Track: Share of Voice inside AI answers (by engine, category, and intent), AI Mentions and Citations, Recommendation Placement (primary vs alternative), Platform Breakdown (% of appearances by engine), and policy/spec completeness (a quality score you can audit monthly). To contextualize CTR changes in Google, pair these with impressions, merchant listing clicks, and revenue by SKU/category. Your job is to show whether your brand is being recommended and whether assistant‑driven conversions are rising elsewhere.
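Share of Voice has no single standard definition for AI answers. The sketch below assumes a simple one: the fraction of logged answers per assistant in which the brand appears at all. The function name, input shape, and brand names are hypothetical, and you may prefer a version weighted by placement.

```python
from collections import Counter


def share_of_voice(observations, brand):
    """Fraction of logged answers per assistant that mention `brand`.

    observations: iterable of (assistant, brands_mentioned) pairs,
    one pair per logged answer; brands_mentioned is a set of brand names.
    """
    totals = Counter()
    hits = Counter()
    for assistant, brands in observations:
        totals[assistant] += 1
        if brand in brands:
            hits[assistant] += 1
    return {assistant: hits[assistant] / totals[assistant] for assistant in totals}


# Made-up log entries for illustration.
log = [
    ("chatgpt", {"Acme", "Other"}),
    ("chatgpt", {"Other"}),
    ("perplexity", {"Acme"}),
]
print(share_of_voice(log, "Acme"))
```

Run the same calculation per category and per intent to get the segmented views described above.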

Troubleshooting and risk

Q12) “We stopped getting cited—what changed and how do we fix it?”

Start with recency and consistency. If the price or availability on your landing page has drifted from your feed, assistants may suppress or mistrust your data. Validate Product/Offer markup with Google’s Rich Results Test and ensure the landing page reflects feed values exactly. Re‑check GTIN/MPN coverage and brand names; mismatches can exclude you from comparisons. Confirm policy markup or settings (returns/shipping) haven’t been deprecated or overridden. Finally, examine reviews: a drop in recency or density can nudge assistants toward other sources. When the issue is Google‑specific, align your fixes with this practical overview of optimizing content for AI citations to strengthen the signals assistants rely on.
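The feed-versus-page consistency check is easy to automate once both sides are extracted. The sketch below assumes you already have two dictionaries keyed by SKU, one parsed from the feed and one scraped from landing-page markup; the function name, the data shape, and the sample SKUs are hypothetical.

```python
from decimal import Decimal


def find_drift(feed_items, page_items):
    """Compare feed values with landing-page values for each SKU.

    Both arguments map SKU -> {"price": str, "availability": str}.
    Returns a list of (sku, description) tuples for every mismatch.
    """
    drift = []
    for sku, feed in feed_items.items():
        page = page_items.get(sku)
        if page is None:
            drift.append((sku, "missing on landing page"))
            continue
        # Decimal treats "19.90" and "19.9" as equal, unlike string comparison.
        if Decimal(feed["price"]) != Decimal(page["price"]):
            drift.append((sku, f"price {feed['price']} != {page['price']}"))
        if feed["availability"] != page["availability"]:
            drift.append(
                (sku, f"availability {feed['availability']} != {page['availability']}")
            )
    return drift


# Made-up sample data.
feed = {
    "sku1": {"price": "19.90", "availability": "in_stock"},
    "sku2": {"price": "5.00", "availability": "in_stock"},
}
page = {
    "sku1": {"price": "19.9", "availability": "in_stock"},
    "sku2": {"price": "5.50", "availability": "out_of_stock"},
}
for sku, problem in find_drift(feed, page):
    print(sku, problem)
```

Running a check like this on a schedule gives you a dated record to correlate against drops in citations.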

Put it into your next sprint

Pick two categories with high assistant demand (budget superlatives and A‑vs‑B comparisons are safe bets). Enrich PDP specs and comparison tables, fix GTIN gaps, sync price/stock, publish structured policy data, and set up local inventory where relevant. In parallel, start logging prompts and AI mentions so you can prove movement even when traditional CTR moves the other way. Think of it this way: if assistants already hold the conversation, your job is to make your products the easiest ones for them to trust, cite, and recommend.