ChatGPT vs Perplexity vs Copilot: Citation Verifiability Comparison 2025

Independent 2025 review of citation verifiability in ChatGPT, Perplexity, and Copilot, covering scenario analysis, UX, and admin controls. Original analysis for PMs and founders.

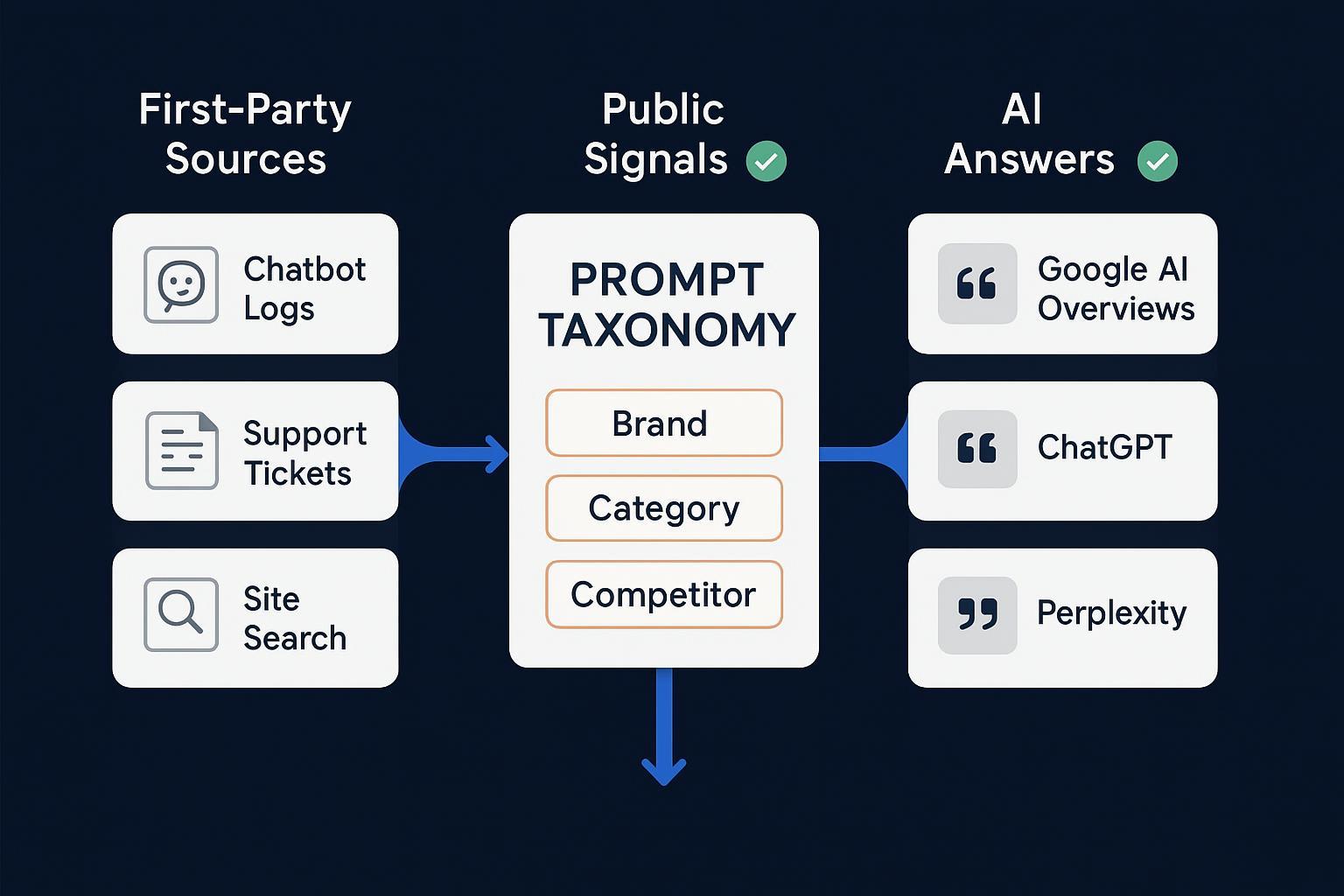

Product managers and founders increasingly rely on AI assistants to surface answers their users can trust. The bottleneck is not just whether a tool provides links but how quickly a reader can verify those links against the claims they are meant to support. This analysis focuses on citation verifiability: the extent to which a response’s sources are accessible, relevant, and clearly bound to the specific assertions users care about.

We compare OpenAI ChatGPT, Perplexity AI, and Microsoft Copilot through the lens of real-world product decisions: UX friction vs. trust, governance controls, and operational trade-offs. We do not declare an absolute winner; each tool has clear strengths and limitations that depend on the scenario.

Method and metric: what “citation verifiability” means

Our primary metric is a “verifiability score” that combines:

Presence of citations for factual claims when web grounding/search is used

Accessibility (non-paywalled or at least with a visible abstract), with correct author/publisher labeling

Claim-to-source binding (can we map a particular sentence to a specific source without ambiguity?)

Recency adherence for time-sensitive queries (e.g., news or product updates), with sources published within the past 12–18 months

Verification cost: clicks and seconds required to confirm the claim
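One way to turn these five components into a single number is a weighted average of per-response ratios. The sketch below is illustrative only: the `CitationCheck` fields, the equal weights, and the 120-second verification budget are our assumptions, not outputs of any of the tools, and each count would come from human or scripted grading of a response.

```python
from dataclasses import dataclass


@dataclass
class CitationCheck:
    """Hypothetical graded inputs for one response."""
    claims_total: int        # factual claims in the response
    claims_cited: int        # claims with at least one citation
    sources_total: int       # distinct cited sources
    sources_accessible: int  # open, or with a visible abstract and correct author/publisher
    claims_bound: int        # claims mapped to one specific source without ambiguity
    recency_ok: bool         # time-sensitive citations fall inside the window (True if not time-sensitive)
    verify_seconds: float    # measured time to confirm the claims


# Assumed equal weights and verification budget; tune these for your product.
WEIGHTS = {"presence": 0.2, "accessibility": 0.2, "binding": 0.2, "recency": 0.2, "cost": 0.2}
MAX_VERIFY_SECONDS = 120.0  # at or beyond this, the cost component scores 0


def ratio(part: int, whole: int) -> float:
    """Safe fraction; an empty denominator scores 0."""
    return part / whole if whole else 0.0


def verifiability_score(c: CitationCheck) -> float:
    """Weighted average of the five components, each in [0, 1]."""
    components = {
        "presence": ratio(c.claims_cited, c.claims_total),
        "accessibility": ratio(c.sources_accessible, c.sources_total),
        "binding": ratio(c.claims_bound, c.claims_total),
        "recency": 1.0 if c.recency_ok else 0.0,
        # Faster verification scores higher; clamp to [0, 1].
        "cost": min(1.0, max(0.0, 1.0 - c.verify_seconds / MAX_VERIFY_SECONDS)),
    }
    return sum(WEIGHTS[name] * value for name, value in components.items())
```

For a response with 10 claims (8 cited, 6 unambiguously bound), 5 sources (4 accessible), recency satisfied, and 60 seconds to verify, the score comes out at about 0.74. Equal weights keep the metric easy to explain; a team that cares most about claim-to-source binding could raise that weight instead.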

We operationalize this across identical queries and scenarios, instrumenting available controls:

ChatGPT: with Search/browsing enabled, measure the presence and format of citations and how tightly they bind to claims; note deep research behavior where applicable

Perplexity: measure default inline citations, then vary Focus modes (or the Enterprise “Choose sources” control) to constrain source types

Copilot: toggle web grounding via tenant policy; measure how citations change when grounded on organizational data vs. the public web
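Keeping queries identical across tools is easiest when the full run plan is generated up front. This minimal sketch crosses every query with every tool/mode pair. The mode labels are hypothetical identifiers for the controls listed above, not vendor API values, and the toggles themselves would still be set by hand in each product’s UI or admin center.

```python
from itertools import product

# Hypothetical labels for the configurations under test (our identifiers, not vendor API values).
TOOL_MODES = {
    "chatgpt": ["search_on", "deep_research"],
    "perplexity": ["focus_web", "focus_academic", "enterprise_web_plus_org"],
    "copilot": ["web_grounding_on", "web_grounding_off"],
}


def build_runs(queries: list[str], tool_modes: dict[str, list[str]]) -> list[dict]:
    """Cross every query with every (tool, mode) pair so each configuration sees identical inputs."""
    pairs = [(tool, mode) for tool, modes in tool_modes.items() for mode in modes]
    return [
        {"run_id": i, "tool": tool, "mode": mode, "query": query}
        for i, (query, (tool, mode)) in enumerate(product(queries, pairs))
    ]
```

With two queries and the seven configurations above, `build_runs` yields 14 runs, each with a stable `run_id` that later logs and scores can reference.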

To frame expectations, we anchor claims to official documentation and one large-scale external audit.

How each tool selects and presents citations

ChatGPT (OpenAI)

ChatGPT’s web-capable behavior centers on ChatGPT Search and related browsing modes. OpenAI describes Search as providing “fast, timely answers” with links to relevant web sources directly in responses. Availability spans Plus, Team, Enterprise, and Edu, with a gradual rollout to Free users in late 2024 and early 2025; OpenAI also explicitly advises users to double‑check information, according to its “Introducing ChatGPT Search” post (Dec 2024, updated Feb 2025). For deeper, multi-step synthesis, OpenAI introduced “deep research,” an agentic process that reasons across many sources, although public documentation does not promise per‑claim citation binding; see OpenAI’s “Introducing deep research” (updated July 2025).

In practice, when ChatGPT invokes search/browsing, it displays linked sources, often clustered after sections or at the end. However, per‑claim binding may be implicit rather than explicit—users sometimes need to click through and manually confirm which sentence the link supports. OpenAI’s own release notes reiterate that the assistant can make mistakes and users should verify information, per ChatGPT Release Notes (accessed Nov 2025).

Strengths for verifiability

Broad coverage and strong synthesis, especially on complex, multi-source topics

Links often point to authoritative pages when search is triggered

Deep research can surface diverse evidence for nuanced questions

Constraints and risks

Ambiguous claim-to-source mapping in longer answers increases verification cost

Occasional mismatched or outdated citations; requires user diligence

Bibliographic metadata may be minimal (publisher/date not always obvious inline)

Operational tips

Encourage shorter, claim-focused prompts and request “per‑claim references” directly

For time‑sensitive tasks, specify a date window and ask for the publication date next to each citation

Consider a second pass where the model is asked to align each claim with a specific link and quote
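The second-pass alignment can be checked mechanically once each claim carries a URL and a quoted passage. This is a minimal sketch under two assumptions: the cited pages have already been fetched into a `pages` dict keyed by URL, and claims arrive as dicts with hypothetical `claim`, `url`, and `quote` keys. It normalizes Unicode, whitespace, case, and curly quotes, then requires the quote to appear verbatim on the page.

```python
import re
import unicodedata

# Map curly quotes to straight ones so typographic differences don't cause false failures.
QUOTE_MAP = str.maketrans({"\u2018": "'", "\u2019": "'", "\u201c": '"', "\u201d": '"'})


def normalize(text: str) -> str:
    """NFKC-normalize, straighten quotes, collapse whitespace, and lowercase."""
    text = unicodedata.normalize("NFKC", text).translate(QUOTE_MAP)
    return re.sub(r"\s+", " ", text).strip().lower()


def check_alignment(claims: list[dict], pages: dict[str, str]) -> list[dict]:
    """Return claims whose quote is empty, missing from the cited page, or whose page was not fetched."""
    failures = []
    for claim in claims:
        page = pages.get(claim["url"])
        quote = normalize(claim.get("quote", ""))
        if page is None or not quote or quote not in normalize(page):
            failures.append(claim)
    return failures
```

Every claim returned by `check_alignment` should go back to a human for re-verification. Exact matching is deliberately strict: a paraphrase presented as a quote will fail, which is usually the behavior you want.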

Perplexity AI

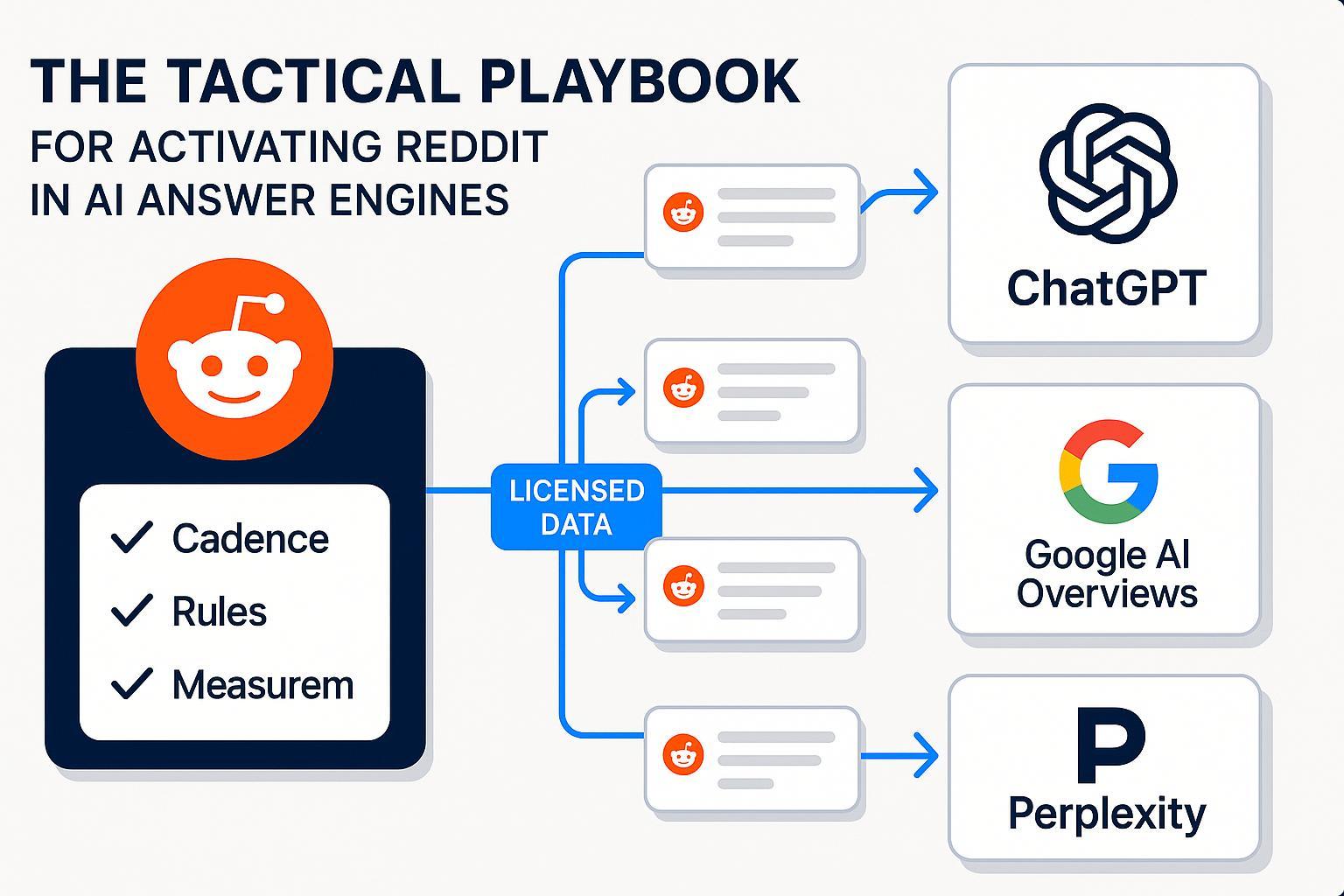

Perplexity positions itself as an “answer engine” where citations are integral. The company states that “every answer comes with clickable citations, making it easy to verify information,” as described in Perplexity’s “Getting started” guide (2024). Users can steer source types via Focus modes (Web, Academic, YouTube, Reddit) and, in Enterprise deployments, a “Choose sources” control replaces Focus—allowing grounding on Web, Organizational Files, both, or none, per Perplexity Help: “Why can’t I see focus mode…” (2025).

In typical use, Perplexity presents inline citations adjacent to claims or sections, with visible source labels. This claim‑to‑source proximity often reduces verification steps. Perplexity also exposes configuration via a Search API for filtering and customization, as outlined in the Perplexity Search guide (2025).

Strengths for verifiability

Clear, inline citation placement enhances claim-to-source binding

User/admin controls over source types improve auditability

Emphasis on transparency and multiple sources per answer

Constraints and risks

Potential domain overrepresentation or bias based on crawlability/recency

Still susceptible to model errors (e.g., mismatched or shallow sources on niche topics)

Some citations may point to pages with limited context; users should confirm dates and authors

Operational tips

Use Academic Focus for research-heavy queries; enforce Web+Org in Enterprise for blended grounding

Ask the model to extract the exact passage that supports a claim

Monitor domain diversity and set guardrails against overreliance on a single outlet
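Domain diversity can be monitored with a simple share-of-citations check. This is a sketch under stated assumptions: the 50% single-outlet threshold and the three-domain minimum are illustrative defaults, and hosts are compared by network location with a leading `www.` stripped, so subdomains such as `news.example.com` and `example.com` count separately. It requires Python 3.9+ for `str.removeprefix`.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_shares(urls: list[str]) -> dict[str, float]:
    """Share of citations per host, most frequent first (a leading 'www.' is stripped)."""
    hosts = [urlparse(u).netloc.lower().removeprefix("www.") for u in urls]
    counts = Counter(hosts)
    total = sum(counts.values())
    return {host: n / total for host, n in counts.most_common()}


def overreliance_flags(urls: list[str], max_share: float = 0.5, min_domains: int = 3) -> list[str]:
    """Hypothetical guardrail: flag answers dominated by one outlet or with too few distinct domains."""
    if not urls:
        return ["no citations"]
    shares = domain_shares(urls)
    flags = []
    top_host, top_share = next(iter(shares.items()))
    if top_share > max_share:
        flags.append(f"{top_host} supplies {top_share:.0%} of citations")
    if len(shares) < min_domains:
        flags.append(f"only {len(shares)} distinct domain(s)")
    return flags
```

Running this over every logged answer, rather than spot-checking, makes it easier to see whether one outlet dominates a whole topic area.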

Microsoft Copilot

Copilot can ground responses on the public web (via Bing), organizational data (Microsoft Graph), and local files. In Microsoft 365 apps, sources appear as clickable citation pills, and users can select artifacts to cite in drafting workflows. Microsoft documents these capabilities in its official learning resources; see Microsoft 365 Copilot overview (Oct 2025) and the release notes (Oct 2025).

For governance, admins can enable or disable public web search at the tenant or group level via the Cloud Policy service, effectively constraining Copilot to organizational data when disabled. Microsoft’s authoritative policy guide details this control in “Data, privacy, and security for web search in Microsoft 365 Copilot” (Sept 2025).

Strengths for verifiability

Enterprise-grade grounding controls and auditability

Citation pills and source lists help track which artifacts influenced the answer

Cautious refusals can reduce confidently‑wrong outputs in sensitive domains

Constraints and risks

If web grounding is disabled, responses may draw on a narrower and potentially outdated set of sources

Public web citations may still lack per‑claim binding in conversational flows

Quality depends on the freshness and accessibility of organizational repositories

Operational tips

Align tenant policies to scenario: enable web search for market intel; disable for internal-only drafting

Require source timestamps and authors in UI microcopy where possible

Build a verification sidebar that previews cited passages from both Graph and web sources
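A verification sidebar needs some way to choose which passage of a cited page to preview. One cheap heuristic, assumed here and not taken from Microsoft’s implementation, is to split the page into sentences and show the one most similar to the claim by `difflib.SequenceMatcher` ratio. The 0.4 cutoff is an arbitrary illustrative default. Character-level similarity is not semantic matching, so treat the preview as a starting point for the reader, not as proof of support.

```python
import re
from difflib import SequenceMatcher
from typing import Optional


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def preview_passage(claim: str, page_text: str, min_ratio: float = 0.4) -> Optional[str]:
    """Return the page sentence most similar to the claim, or None if none reaches min_ratio."""
    best, best_ratio = None, 0.0
    for sentence in split_sentences(page_text):
        score = SequenceMatcher(None, claim.lower(), sentence.lower()).ratio()
        if score > best_ratio:
            best, best_ratio = sentence, score
    return best if best_ratio >= min_ratio else None
```

Returning `None` when nothing clears the cutoff lets the UI show an explicit “no matching passage found” state instead of an unrelated sentence.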

External evidence snapshot: what broad audits found in 2025

A large journalism-focused audit underscores that citation correctness remains a challenge across AI search tools. Columbia’s Tow Center for Digital Journalism tested eight AI search engines on 1,600 queries derived from 200 news articles and reported substantial citation error rates; the findings are summarized in “We Compared Eight AI Search Engines. They’re All Bad at Citing News,” published in the Columbia Journalism Review (Oct 2025). In that dataset, Perplexity had the lowest error rate among the tools tested, ChatGPT Search showed higher rates of incorrect citations, and Microsoft Copilot faced similar challenges. Journalism queries are a specific domain, but the study’s scale and methodology provide a cautionary baseline: even the most transparent tools still require verification steps.

Scenario-based recommendations for PMs and founders

Best for rapid fact-checking and research ops

Perplexity’s inline citations and Focus/Choose‑sources controls reduce click‑depth and time‑to‑trust

Use Academic Focus for studies; enforce date windows; prompt for quoted support passages

Best for enterprise governance and mixed grounding

Microsoft Copilot offers robust admin policies for web vs. organizational grounding and shows source artifacts clearly

Ideal when compliance requires tenant‑level control; pair with internal content freshness audits

Best for general-purpose chat with occasional web lookups

ChatGPT provides strong synthesis and breadth, with citations when search is invoked

Ask for per‑claim references and visible dates; consider follow‑up prompts to map claims to links explicitly

Compliance-sensitive (health, finance, law)

Favor tools and modes that show explicit source passages and dates; log appropriate refusals as positive signals rather than failures

Consider disabling public web grounding where misinterpretation risk is high; use curated internal repositories

Implementation checklist: instrument verifiability in production

Define and log a verifiability score per response (presence, accessibility, binding, recency, verification cost)

Capture source metadata: title, publisher, author, publication date, access type (open/paywalled)

Enforce date windows for time‑sensitive queries; flag outdated citations

Provide UI affordances for per‑claim binding (hover/numbered references next to sentences)

Add a “quoted support” toggle that extracts the exact passage supporting each claim

Detect paywall/broken links and auto‑fallback to open summaries or alternate authoritative sources

Instrument admin toggles (Copilot web search policy; Perplexity Choose sources; ChatGPT Search on/off) and record their states in logs

Build a verification sidebar that shows cited snippets and highlights query terms used for grounding
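Several checklist items reduce to capturing the right fields in each response log. The sketch below assumes a Python logging pipeline; `SourceMeta`, `ResponseLog`, the access labels, and the toggle keys are hypothetical names of our own, and the 540-day default window approximates the 18-month upper bound of the recency component. Undated sources count as outdated for time-sensitive queries, since their recency cannot be confirmed.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date, timedelta
from typing import Optional


@dataclass
class SourceMeta:
    url: str
    title: str
    publisher: str
    author: Optional[str]
    published: Optional[date]
    access: str  # our own labels: "open", "abstract_only", "paywalled", "broken"


@dataclass
class ResponseLog:
    response_id: str
    tool: str
    toggles: dict[str, str]  # toggle states observed at query time, e.g. web search enabled/disabled
    time_sensitive: bool
    sources: list[SourceMeta] = field(default_factory=list)

    def outdated_sources(self, today: date, window_days: int = 540) -> list[str]:
        """For time-sensitive queries, URLs published before the window or with no date at all."""
        if not self.time_sensitive:
            return []
        cutoff = today - timedelta(days=window_days)
        return [s.url for s in self.sources if s.published is None or s.published < cutoff]

    def to_json(self, today: date) -> str:
        """Serialize the record plus the outdated-source flag; dates become ISO strings."""
        record = asdict(self)
        record["outdated_sources"] = self.outdated_sources(today)
        return json.dumps(record, default=str)
```

Recording toggle states next to each response makes it possible to compare, for example, Copilot answers with web search enabled vs. disabled when reviewing verifiability scores later.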

Limitations and what’s next

This article synthesizes authoritative documentation and one large, domain‑specific external audit. Tool behavior evolves quickly, and pricing tiers and feature entitlements change often. We plan to publish a replicable dataset and protocol for citation verifiability across a diverse query set, with timestamps and mode identifiers, and to update these findings as 2025–2026 releases ship.

Source notes (selected)

OpenAI: ChatGPT Search provides fast answers with links; users should double‑check information — see “Introducing ChatGPT Search” (Dec 2024; updated Feb 2025)

OpenAI: Agentic multi‑step synthesis via deep research — see “Introducing deep research” (updated July 2025)

OpenAI: Reliability caveats reiterated in release notes — see ChatGPT Release Notes (accessed Nov 2025)

Perplexity: Citation‑first design and verification UX — see “Getting started with Perplexity” (2024)

Perplexity: Enterprise “Choose sources” replaces Focus — see Help Center guidance (2025)

Perplexity: Search filtering/customization — see Search guide (2025)

Microsoft: Copilot overview and release cadence — see Microsoft 365 Copilot overview (Oct 2025) and release notes (Oct 2025)

Microsoft: Admin policy to allow/disable public web grounding — see Manage public web access (Sept 2025)

External audit: Journalism‑domain citation error rates across AI search tools — see CJR/Tow Center report (Oct 2025)