Best Practices for Brand Visibility in AI-Generated Answers (2025)

Discover actionable strategies and measurement frameworks to boost your brand's visibility in AI-generated answers when SEO alone is no longer enough. Learn how to optimize citations, entity clarity, and monitoring for ChatGPT, Gemini, and Google.

If you’ve noticed organic traffic softening even while you hold top rankings, you’re not alone. In 2025, AI-generated answers are changing the visibility equation. Large studies show that when Google’s AI Overviews appear, the traditional #1 result loses a substantial share of clicks—Ahrefs reported an average 34.5% CTR drop for the top position (Mar 2025), and the effect compounds across informational queries. Pew Research (July 2025) found users encountering an AI summary were less likely to click outbound links at all. Meanwhile, Semrush’s tracking of 10M+ queries shows AI Overviews appearing on over 13% of searches by March 2025 and expanding. The bottom line: rank-1 ≠ visible. You now need your brand to be included or cited inside the AI answer itself.

- According to the Ahrefs analysis in 2025, AI Overviews reduced the top result’s CTR by an average of 34.5%: Ahrefs AI Overviews reduce clicks.

- Pew’s July 2025 study found users are less likely to click links when an AI summary appears: Pew Research 2025 finding.

- Semrush’s longitudinal study shows AI Overviews appeared on 13.14% of queries by March 2025: Semrush AI Overviews study.

This article distills what consistently works to earn citations and mentions inside AI answers across ChatGPT, Gemini/Google AI Overviews, and Perplexity—plus how to measure progress, mitigate risks, and adapt by maturity stage.

What Works First: 30-Day Foundation

In practice, brands that improve inclusion in AI answers do five things in their first month. Treat this as a checklist you can execute sprint-by-sprint.

- Clean up and clarify your entity footprint

  - Standardize your exact brand and product names across your website, social profiles, business listings, and knowledge bases.

  - Implement Organization (or LocalBusiness/Product) schema on key pages with a complete sameAs graph (Wikidata, Crunchbase, LinkedIn, YouTube, GitHub if relevant). This helps search engines and LLMs distinguish your brand from similarly named entities.

  - Create a Wikidata item if you don’t have one, or update your existing item, with clear aliases, a precise description, and authoritative links.

- Control and observe crawling access

  - Ensure your robots.txt is intentional. If visibility is the goal, do not inadvertently block AI-focused crawlers. Google’s official guidance on the Robots Exclusion Protocol remains the canonical reference: Google Search Central robots.txt guide (2025).

  - Monitor server logs and analytics for AI crawler traffic patterns; use your WAF to rate-limit as needed while allowing legitimate access.

- Publish canonical, scannable answers for clustered intents

  - Create or refresh “canonical explainer” pages that map to common user questions. Use scannable subheads, concise answer paragraphs, and inline citations to reputable sources.

  - Add FAQs and glossaries. Perplexity and other engines often extract short, referenced snippets.

  - Date-stamp updates and keep a visible changelog to signal freshness.

- Add and maintain structured data

  - Use Organization/LocalBusiness/Product schemas on core pages; add FAQ/HowTo where appropriate. Google has scaled back FAQ and HowTo rich results since 2023, so treat this markup as a machine-readability aid rather than a route to rich results.

  - Use bylines, author bios, and reviewedBy fields in YMYL categories. AI systems appear to favor content with clear provenance and accountability.

- Measure your current presence before you optimize: Are you cited in AI answers for your priority topics? How often, by which engines, with what sentiment and accuracy? Establish a baseline snapshot so you can quantify progress.
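For the entity-footprint and structured-data steps above, here is a minimal Python sketch that emits Organization JSON-LD with a deduplicated sameAs list. The brand name, profile URLs, and Wikidata ID are hypothetical placeholders. The `#organization` suffix on `@id` is a common convention rather than a requirement. Run the output through a structured-data validator before you ship it.

```python
import json
from typing import Optional

def organization_jsonld(name: str, url: str, same_as: list[str], logo: Optional[str] = None) -> str:
    """Build a schema.org Organization JSON-LD payload with a deduplicated sameAs graph."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        # Stable node ID so other pages can reference the same entity (a common convention)
        "@id": url.rstrip("/") + "/#organization",
        "name": name,
        "url": url,
        # dict.fromkeys drops duplicates but keeps the original order
        "sameAs": list(dict.fromkeys(same_as)),
    }
    if logo:
        data["logo"] = logo
    return json.dumps(data, indent=2, ensure_ascii=False)

def script_tag(payload: str) -> str:
    """Wrap the JSON-LD in the script element you embed in the page HTML."""
    return '<script type="application/ld+json">\n' + payload + "\n</script>"

if __name__ == "__main__":
    # Hypothetical brand and profile URLs; replace with your own identifiers.
    print(script_tag(organization_jsonld(
        name="Example Analytics",
        url="https://www.example.com/",
        same_as=[
            "https://www.wikidata.org/wiki/Q00000000",  # placeholder Wikidata item
            "https://www.linkedin.com/company/example-analytics",
            "https://www.crunchbase.com/organization/example-analytics",
            "https://www.linkedin.com/company/example-analytics",  # duplicate, dropped
        ],
    )))
```

Reuse the same `@id` on every page that describes the organization. This keeps the entity signals consolidated instead of fragmented.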

Pro tip: Treat this as a monthly “visibility hardening” sprint. The above steps are iterative; the first pass is about establishing clarity and control.
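You can spot-check the crawl-access step of the checklist with a short script. The sketch below parses a robots.txt string using Python’s standard-library `urllib.robotparser` and reports which AI-related user agents may fetch a given set of paths. The agent list is an assumption, so verify it against each vendor’s documentation. Google-Extended, for example, is a robots.txt control token rather than a separate crawler. `www.example.com` is a placeholder.

One caveat applies: Python’s parser applies the first matching rule in file order, whereas Google documents that the most specific matching rule wins. Files that mix `Allow` and `Disallow` for overlapping paths can therefore evaluate differently.

```python
from urllib.robotparser import RobotFileParser

# Assumed AI-related user-agent tokens; verify current names in each vendor's documentation.
AI_AGENTS = ["GPTBot", "OAI-SearchBot", "ChatGPT-User", "PerplexityBot", "ClaudeBot", "Google-Extended"]

def audit_robots(robots_txt: str, paths: list[str], agents: list[str] = AI_AGENTS,
                 site: str = "https://www.example.com") -> dict[str, dict[str, bool]]:
    """Report, for each agent and path, whether this robots.txt content allows fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network fetch
    return {agent: {path: parser.can_fetch(agent, site + path) for path in paths}
            for agent in agents}

def blocked_agents(report: dict[str, dict[str, bool]]) -> list[str]:
    """Agents that are disallowed from at least one of the audited paths."""
    return [agent for agent, results in report.items() if not all(results.values())]

if __name__ == "__main__":
    robots = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""
    report = audit_robots(robots, ["/pricing", "/blog/guide"])
    print(blocked_agents(report))  # GPTBot is blocked everywhere; the others fall back to "*"
```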

30–90 Days: Build Authority and Close the Loop

Once your foundations are in, your next wins come from external authority, systematized remediation, and deeper knowledge graph alignment.

- Build external authority

  - Target high-signal sources that AIs frequently cite in your category (industry associations, standards bodies, top-tier publications, .gov/.edu pages).

  - Publish data-backed assets (original research, benchmarks, and clear methodologies) designed to be cited.

  - Maintain listings hygiene with rich attributes, consistent NAP (name, address, phone), category alignment, and fresh reviews. A 2025 analysis of 6.8M AI citations indicates that most citations come from brand-controlled sources (owned sites and listings), which underlines the ROI of fundamentals: Search Engine Land on Yext’s 6.8M-citation study (2025).

- Establish an answer remediation cadence

  - Weekly, test a scripted set of prompts across ChatGPT, Gemini/Google AI Overviews, and Perplexity. Capture screenshots and log sentiment, accuracy, and missing mentions.

  - For any inaccuracies or omissions, ship corrective content on your site with clear citations, then use in-product feedback to request corrections. Google explicitly acknowledged correcting misinterpretations swiftly during the AI Overviews rollout: Google Search blog update (May 2024).

  - Update third-party profiles (e.g., listings, partner directories) to reflect the corrected facts and reinforce consistency.

- Seed and strengthen your knowledge graph

  - Expand your sameAs network (Wikidata, Wikipedia if notable, Crunchbase, social profiles, app stores, software directories like G2/Capterra for SaaS) and keep naming consistent.

  - Interlink your owned properties with consistent entity names and descriptions; avoid introducing alternate spellings that fragment signals.
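Alternate spellings are easy to miss by eye. This minimal sketch assumes you have already collected the brand name each property displays; the source labels and names below are hypothetical. It normalizes case and punctuation, then scores each non-exact name against the canonical form with `difflib.SequenceMatcher`. Scores near 1.0 point to casing or spacing drift. Lower scores point to legacy or unrelated names that need a manual review.

```python
import difflib
import re

def normalize(name: str) -> str:
    """Lowercase and turn punctuation runs into single spaces for comparison."""
    return re.sub(r"[^a-z0-9]+", " ", name.lower()).strip()

def find_name_variants(canonical: str, observed: dict[str, str]) -> list[tuple[str, str, float]]:
    """List every source whose displayed name differs from the canonical brand name.

    observed maps a source label (site section, profile, directory) to the name it shows.
    Results are sorted by similarity to the canonical name, highest first.
    """
    target = normalize(canonical)
    results = []
    for source, name in observed.items():
        if name == canonical:
            continue  # exact match: nothing to fix
        score = difflib.SequenceMatcher(None, target, normalize(name)).ratio()
        results.append((source, name, round(score, 2)))
    return sorted(results, key=lambda row: row[2], reverse=True)

if __name__ == "__main__":
    profiles = {  # hypothetical observations
        "website": "Example Analytics",
        "linkedin": "EXAMPLE Analytics",
        "g2": "ExampleAnalytics",
        "old press kit": "Example Data Co",
    }
    for row in find_name_variants("Example Analytics", profiles):
        print(row)
```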

90+ Days: Lead with Proprietary Data and Multimodal Readiness

When the basics are humming, differentiate with assets AIs want to quote and formats they can comfortably parse.

- Become the canonical source for something that matters

  - Release quarterly/annual benchmarks, APIs, or indices relevant to your category. Document methods and provide downloadable datasets.

  - Pitch findings to trusted outlets. AI answers tend to draw on reputable, well-cited sources, and PR distribution still helps discovery and citation.

- Make your content multimodal-friendly

  - Provide transcripts for videos, alt text for images, and clean metadata for PDFs (title, author, description, dates). Use Schema.org ImageObject/VideoObject where relevant.

  - Caption visual assets with concise, declarative statements AIs can lift.

- Design for voice and conversational phrasing

  - Create natural-language Q&A blocks with short answers (2–4 sentences) and a source. Test them via ChatGPT Voice or mobile assistants to confirm the answers read clearly and names are pronounced correctly.

  - Where appropriate, include pronunciation guidance for brand or product names.
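The Q&A blocks described above can also be exposed as schema.org FAQPage markup. This minimal sketch builds that markup from (question, answer, source) tuples and rejects answers outside the 2–4 sentence target. The sentence counter is a rough heuristic that abbreviations will fool. The optional source goes into `citation`, a general schema.org CreativeWork property that answer engines may ignore.

```python
import json
import re
from typing import Optional

def sentence_count(text: str) -> int:
    """Rough heuristic: split after ., ! or ? followed by whitespace (abbreviations will miscount)."""
    return len([s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s])

def faq_jsonld(pairs: list[tuple[str, str, Optional[str]]],
               min_sentences: int = 2, max_sentences: int = 4) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer, source_url) tuples."""
    questions = []
    for question, answer, source in pairs:
        n = sentence_count(answer)
        if not min_sentences <= n <= max_sentences:
            raise ValueError(f"answer to {question!r} has {n} sentences")
        accepted = {"@type": "Answer", "text": answer}
        if source:
            accepted["citation"] = source  # CreativeWork property; engines may ignore it
        questions.append({"@type": "Question", "name": question, "acceptedAnswer": accepted})
    return json.dumps({"@context": "https://schema.org", "@type": "FAQPage",
                       "mainEntity": questions}, indent=2)
```

Keep the visible Q&A text and the markup identical, so that what users and machines read stays in sync.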

Tooling and Measurement: How to Know You’re Winning

You can’t improve what you can’t measure. Your stack should track coverage, sentiment, accuracy, and competitor benchmarks across AI engines.

Core KPIs to track

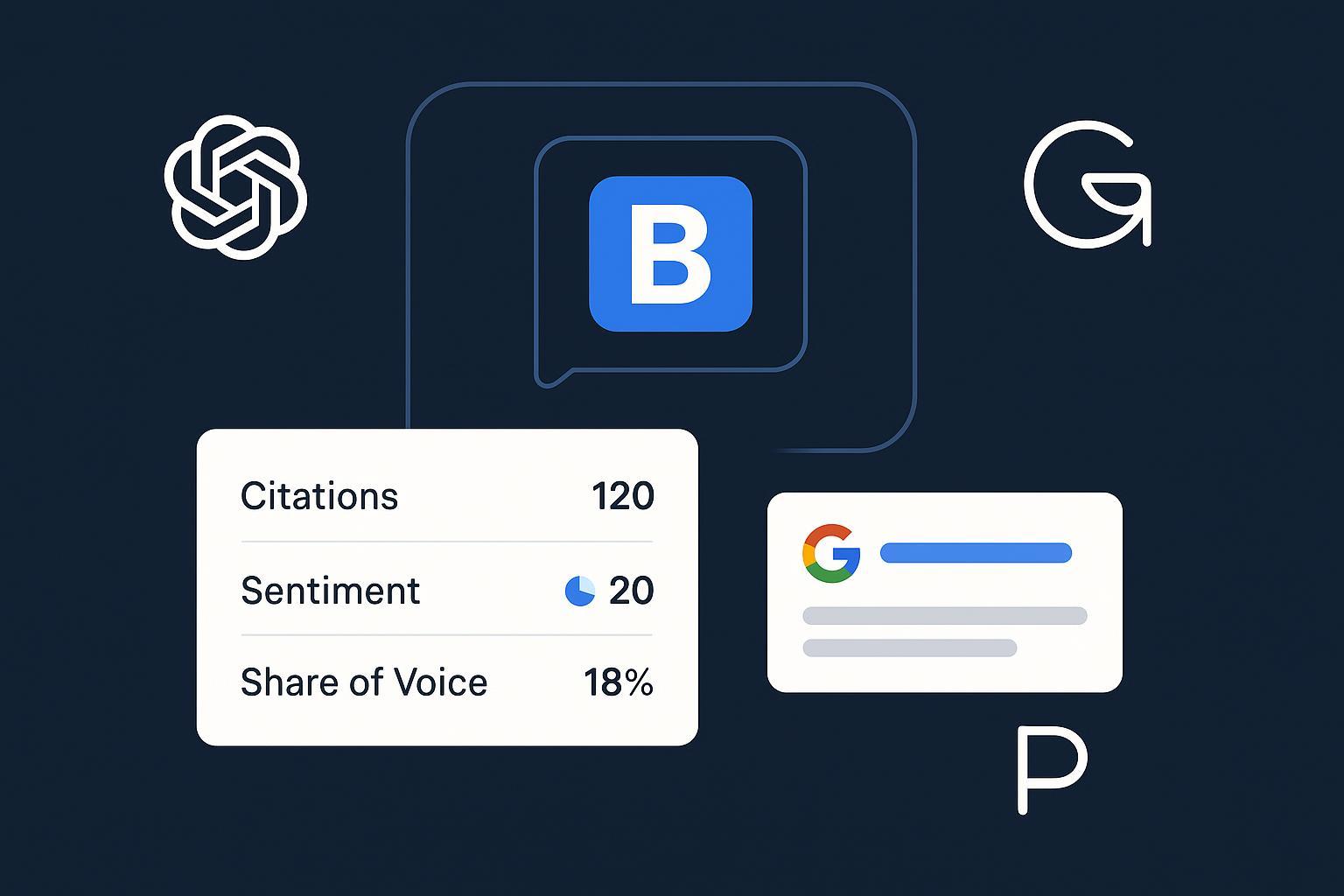

- AI citation count by engine (ChatGPT, Gemini/AI Overviews, Perplexity)

- Share of voice in AI answers vs. a defined competitor set

- Sentiment and accuracy of mentions (positive/neutral/negative; factual correctness)

- Coverage across funnel intents (problem, solution, brand, competitor comparisons)

- Time-to-correction for hallucinations and correction acceptance rate

- Assisted conversions attributed to AI surfaces (brand lift in direct/organic, surveys, and aided recall)
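The first two KPIs can be computed from a simple log. This minimal sketch assumes each weekly prompt run is recorded as one observation per engine and prompt, listing which tracked brands the answer cites. Share of voice is defined here as your brand's citations divided by citations of any brand in your defined competitor set. That definition, the engine labels, and the brand names are assumptions; adapt them to however your tool or spreadsheet records mentions.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field

@dataclass
class AnswerObservation:
    engine: str  # e.g. "chatgpt", "perplexity", "google_aio" (labels are arbitrary)
    prompt: str
    brands_cited: list[str] = field(default_factory=list)  # tracked brands named or cited

def citations_by_engine(observations: list[AnswerObservation], brand: str) -> dict[str, int]:
    """Number of answers per engine that cite `brand`."""
    return dict(Counter(o.engine for o in observations if brand in o.brands_cited))

def share_of_voice(observations: list[AnswerObservation], brand: str,
                   competitors: list[str]) -> dict[str, float]:
    """Per engine: citations of `brand` divided by citations of any tracked brand."""
    tracked = {brand, *competitors}
    total: dict[str, int] = defaultdict(int)
    ours: dict[str, int] = defaultdict(int)
    for o in observations:
        for cited in set(o.brands_cited) & tracked:  # count each brand once per answer
            total[o.engine] += 1
            if cited == brand:
                ours[o.engine] += 1
    return {engine: ours[engine] / total[engine] for engine in total}
```

Engines where no tracked brand was cited are left out of the share-of-voice result rather than reported as 0%. This keeps "no data" distinct from "losing".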

Selecting monitoring and optimization tools

- Evaluate engine coverage and freshness (near real-time vs. lag), sentiment/accuracy analysis, competitor benchmarking depth, data export options, and governance features (issue tracking, audit logs).

- Example of a platform focused on multi-engine tracking and optimization: Geneo — used by marketing teams to monitor brand mentions and citations in AI answers across ChatGPT, Perplexity, and Google AI Overviews, analyze sentiment, and guide content updates.
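One way to compare vendors on the criteria above is to rate each candidate from 1 to 5 per criterion and combine the ratings with weights. The weights and tool names below are hypothetical. In this sketch an unrated criterion counts as zero, so a tool that lacks a capability such as data export is penalized rather than silently ignored.

```python
# Hypothetical weights for the evaluation criteria; tune them to your priorities.
WEIGHTS = {
    "engine_coverage": 0.25,
    "freshness": 0.15,
    "sentiment_accuracy": 0.20,
    "competitor_benchmarking": 0.15,
    "data_export": 0.10,
    "governance": 0.15,
}

def score_tool(ratings: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted 0-5 score; an unrated criterion counts as 0 so coverage gaps are penalized."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    unknown = set(ratings) - set(weights)
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    return round(sum(w * ratings.get(c, 0.0) for c, w in weights.items()), 2)

def rank_tools(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Sort candidate tools by weighted score, best first."""
    return sorted(((name, score_tool(r)) for name, r in candidates.items()),
                  key=lambda item: item[1], reverse=True)
```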

Neutral perspective on stack composition

- Specialized AI-visibility tools complement, not replace, your traditional SEO suite. Keep your rank tracking and content optimization tools; add an AI-answer layer to see what users now see first.

Technical Realities and Trade-offs You Should Acknowledge

Leaders who stay pragmatic about today’s limits and opportunities move faster and avoid rework.

Robots.txt still matters—but it’s not your only control

- The Robots Exclusion Protocol is still the foundation for controlling crawler access; refer to the canonical guidance: Google Search Central robots.txt guide (2025).

- There’s an unresolved discrepancy around certain crawlers. In August 2025, Cloudflare published an investigation attributing to Perplexity the use of undeclared crawlers that evade robots.txt directives; Perplexity’s public documentation states that its crawler, PerplexityBot, honors robots.txt. Read both: Cloudflare investigation (August 2025) and Perplexity bots documentation. In practice, pair robots.txt with WAF rules and monitoring.
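Monitoring can start from your access logs. The sketch below assumes the common Apache/Nginx "combined" log format and a hypothetical list of AI user-agent tokens. It counts requests per token and flags requests to paths you have disallowed for that token.

User-agent strings are trivially spoofed, and undeclared crawlers will not match any token. Treat the output as leads to verify (for example against IP ranges, where vendors publish them), not as proof of compliance or non-compliance.

```python
import re
from collections import Counter

# Matches the common Apache/Nginx "combined" format; adjust if your log format differs.
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

# Hypothetical token list; check vendors' documentation for current user-agent strings.
AI_TOKENS = ("GPTBot", "OAI-SearchBot", "ChatGPT-User", "PerplexityBot", "Perplexity-User", "ClaudeBot")

def ai_crawler_hits(lines, disallowed=None):
    """Count requests per AI token and flag requests to paths disallowed for that token.

    `disallowed` maps a token to path prefixes your robots.txt forbids for it.
    Returns (Counter of hits per token, list of (token, ip, path) flags).
    """
    disallowed = disallowed or {}
    counts: Counter = Counter()
    flagged = []
    for line in lines:
        match = LOG_RE.match(line)
        if not match:
            continue  # skip malformed or differently formatted lines
        user_agent = match["ua"].lower()
        for token in AI_TOKENS:
            if token.lower() in user_agent:
                counts[token] += 1
                if any(match["path"].startswith(p) for p in disallowed.get(token, ())):
                    flagged.append((token, match["ip"], match["path"]))
                break  # attribute each request to one token only
    return counts, flagged
```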

About llms.txt and similar proposals

- llms.txt (a proposed Markdown file that points language models to a site’s most useful content) and proposals to extend robots.txt with AI-specific controls have both emerged, but as of mid-2025 no major platform enforces them as access controls. See the IETF draft exploring REP extensions: IETF draft: robots AI control (2025). Treat these as forward-looking and optional; don’t rely on them for permissions.

Freshness bias is real

- Engines like Perplexity appear to weight recency. Keep update logs on evergreen assets and publish concise change notes.
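To keep the visible changelog and the machine-readable date from drifting apart, generate both from the same list of changes. This minimal sketch assumes an Article page with hypothetical change entries. The newest entry sets schema.org `dateModified`, and the same entries render the on-page change notes.

```python
import json
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class Change:
    day: date
    note: str  # one-line, human-readable change note

def freshness_block(headline: str, published: date, changes: list[Change]) -> tuple[str, str]:
    """Return (on-page changelog text, Article JSON-LD) built from the same change list."""
    ordered = sorted(changes, key=lambda c: c.day, reverse=True)  # newest first
    modified = ordered[0].day if ordered else published
    changelog = "\n".join(f"{c.day.isoformat()}: {c.note}" for c in ordered)
    jsonld = json.dumps({
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }, indent=2)
    return changelog, jsonld
```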

Entity disambiguation is the quiet work that pays off

- Consistent names, IDs (Wikidata/Crunchbase), and sameAs graphs reduce hallucinations and increase correct attributions.

Risk Management: Hallucinations, Bias, and Crisis Response

Build a lightweight governance loop. It prevents small inaccuracies from snowballing.

Monitoring cadence

- Weekly: run your priority prompt set across engines, capture outputs, and tag issues (factual errors, outdated data, unsafe claims).

- Event-driven: launches, incidents, or media spikes warrant ad-hoc checks.
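A lightweight structure for the weekly run might look like the sketch below. Each engine and prompt gets one record, tagged with the issue types listed above plus missing mentions. The records are then grouped into per-tag queues so each queue can be routed to an owner. Field names, engine labels, the screenshot path layout, and the priority order (unsafe claims first) are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum

class IssueTag(Enum):
    # Declared in triage priority order (an assumption: unsafe claims first).
    UNSAFE_CLAIM = "unsafe_claim"
    FACTUAL_ERROR = "factual_error"
    OUTDATED_DATA = "outdated_data"
    MISSING_MENTION = "missing_mention"

@dataclass
class AnswerCheck:
    run_date: date
    engine: str           # e.g. "chatgpt", "gemini", "perplexity", "google_aio"
    prompt: str
    answer_excerpt: str   # the relevant part of the AI answer, copied verbatim
    screenshot_path: str  # hypothetical storage location for the capture
    tags: set[IssueTag] = field(default_factory=set)

def triage(checks: list[AnswerCheck]) -> dict[IssueTag, list[AnswerCheck]]:
    """Group checks into per-tag queues, in priority order, omitting empty queues."""
    queues: dict[IssueTag, list[AnswerCheck]] = {tag: [] for tag in IssueTag}
    for check in checks:
        for tag in check.tags:
            queues[tag].append(check)
    return {tag: items for tag, items in queues.items() if items}
```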

Correction channels and expectations

- Use in-product feedback for AI Overviews, ChatGPT, Gemini, and Perplexity to flag errors or missing citations; they are the practical channels today. Google publicly acknowledged rapid fixes during the 2024 AIO rollout: Google Search blog update (May 2024). Expect variable turnaround and keep your own content updated.
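Because turnaround varies, it is worth measuring. This sketch computes the time-to-correction and acceptance-rate KPIs from a simple issue list. It assumes each issue records three things:

- the date the issue was detected;
- whether in-product feedback was submitted;
- the date, if any, on which a re-test showed the answer fixed.

Acceptance rate is defined here as the share of submitted corrections that were later verified as fixed. That is one reasonable definition among several.

```python
from datetime import date
from statistics import median
from typing import Optional, TypedDict

class Issue(TypedDict):
    detected: date            # when the weekly check first logged the problem
    submitted: bool           # whether in-product feedback was filed
    resolved: Optional[date]  # when a re-test first showed a corrected answer

def correction_kpis(issues: list[Issue]) -> dict[str, Optional[float]]:
    """Median days to correction, acceptance rate of submitted feedback, and open count."""
    days = [(i["resolved"] - i["detected"]).days for i in issues if i["resolved"]]
    submitted = [i for i in issues if i["submitted"]]
    accepted = [i for i in submitted if i["resolved"]]
    return {
        "median_days_to_correction": median(days) if days else None,
        "acceptance_rate": len(accepted) / len(submitted) if submitted else None,
        "open_issues": sum(1 for i in issues if not i["resolved"]),
    }
```

The median is used instead of the mean so that one slow correction does not dominate the trend line.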

Hardening your content against misinterpretation

- Make key claims explicit, verifiable, and consistently worded across all owned properties and listings.

- Publish FAQs addressing common misconceptions and include dated references.

- Maintain author credentials and, in sensitive categories, reviewedBy metadata and appropriate disclaimers:

  - Healthcare: Avoid protected health information (PHI); ensure authorship credentials and medical review where indicated; maintain audit logs.

  - Finance: Align with FINRA/SEC guidance; avoid promissory or unsubstantiated language; preserve data lineage for any reported metrics.
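The reviewedBy metadata mentioned above can be expressed with the schema.org `WebPage` properties `reviewedBy` and `lastReviewed`. This is a minimal sketch. The names, job titles, URL, and dates are hypothetical, and `MedicalWebPage` is the schema.org subtype you might use for health content.

```python
import json
from datetime import date

def person(name: str, job_title: str) -> dict:
    """schema.org Person with a human-readable credential in jobTitle."""
    return {"@type": "Person", "name": name, "jobTitle": job_title}

def reviewed_page_jsonld(url: str, headline: str, author: dict, reviewer: dict,
                         last_reviewed: date, page_type: str = "WebPage") -> str:
    """WebPage (or a subtype such as MedicalWebPage) markup with author, reviewer, and review date."""
    return json.dumps({
        "@context": "https://schema.org",
        "@type": page_type,
        "url": url,
        "headline": headline,
        "author": author,
        "reviewedBy": reviewer,                     # WebPage property
        "lastReviewed": last_reviewed.isoformat(),  # WebPage property (ISO 8601 date)
    }, indent=2)
```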

Sector Nuances and Local/Voice Considerations

A one-size-fits-all approach wastes effort. Tailor tactics by vertical and surface.

Healthcare (YMYL)

- Prioritize E-E-A-T signals: credentialed authors, clear sourcing, and medical review. Use MedicalEntity, MedicalCondition, or Drug schema where relevant.

- Provide concise “what it is / when to see a doctor / risks” answer blocks with citations.

Finance

- Emphasize audited data, methodology transparency, and disclaimers. Keep versioned pages with visible update histories.

B2B and SaaS

- Ship data-driven thought leadership (benchmarks, TCO calculators). Pursue analyst/community citations and authoritative directories (e.g., G2/Capterra) to strengthen entity graphs.

Local and multi-location

- Keep your Google Business Profile (GBP) and other listings clean, with consistent NAP, services, and hours. Encourage reviews and maintain Q&A. Add LocalBusiness schema and make your location pages fast, clean, and scannable for quick pickup by AI answers.

- Structure content as short Q&A pairs, include alt text and transcripts, and optimize filenames/metadata so multimodal models can attribute properly.
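Consistent NAP is easier to enforce when you compare normalized values instead of raw strings. This minimal sketch assumes you have exported name, address, and phone from each listing; the listings below are hypothetical and use fictional 555 numbers. The sketch does the following:

- lowercases text and strips punctuation;
- maps a small, assumed table of street words to their abbreviations;
- assumes 10-digit North American phone numbers;
- reports only the fields that still disagree.

```python
import re
from collections import defaultdict

# Small, assumed abbreviation map; real address normalization needs a fuller table
# or an address-validation service.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste", "boulevard": "blvd"}

def collapse(text: str) -> str:
    """Lowercase and turn punctuation runs into single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()

def normalize_address(address: str) -> str:
    return " ".join(ABBREVIATIONS.get(word, word) for word in collapse(address).split())

def normalize_phone(phone: str, country_code: str = "1") -> str:
    digits = re.sub(r"\D", "", phone)
    if len(digits) == 10:  # assumes 10-digit national numbers (NANP); adapt for other regions
        digits = country_code + digits
    return "+" + digits

NORMALIZERS = {"name": collapse, "address": normalize_address, "phone": normalize_phone}

def nap_mismatches(listings: dict[str, dict[str, str]]) -> dict[str, dict[str, list[str]]]:
    """For each NAP field with more than one normalized value, map value -> listing sources."""
    seen: dict[str, dict[str, list[str]]] = defaultdict(lambda: defaultdict(list))
    for source, nap in listings.items():
        for field_name, value in nap.items():
            seen[field_name][NORMALIZERS[field_name](value)].append(source)
    return {f: dict(values) for f, values in seen.items() if len(values) > 1}
```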

Benchmarks and Real-World Signals to Set Expectations

Be data-informed about the pace of change and the scale of achievable gains.

- Expect 20–35% CTR declines on traditional organic results when AI Overviews are present, even for high positions. Multiple 2025 studies corroborate this, including Ahrefs’ analysis and Amsive’s analysis of non-branded terms and lower-ranking keywords. See: Ahrefs 2025 CTR analysis and Amsive’s AIO CTR study (2025).

- Progress is measurable in weeks, not just quarters, when you address entity hygiene and authoritative citations. A 2025 study of millions of citations indicates most AI citations trace back to brand-controlled properties, reinforcing the ROI of owned-asset excellence: Search Engine Land on Yext’s 6.8M-citation analysis.

- Google’s AI Overviews surface continues to evolve and expand, per its official updates, so treat this as an ongoing program, not a one-off project. Semrush documented growth to 13.14% of queries by March 2025: Semrush AI Overviews study.
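To turn the 20–35% range in the first benchmark above into planning numbers, treat the decline as relative to your baseline CTR:

expected clicks ≈ impressions × baseline CTR × (1 − decline)

This is a minimal sketch. The impressions and baseline CTR are hypothetical inputs; replace them with your own Search Console data for queries that show AI Overviews.

```python
def clicks_with_aio(impressions: int, baseline_ctr: float, decline: float) -> float:
    """Expected clicks if CTR falls by `decline` (relative) when an AI Overview appears."""
    if not (0.0 <= baseline_ctr <= 1.0 and 0.0 <= decline <= 1.0):
        raise ValueError("baseline_ctr and decline must be between 0 and 1")
    return impressions * baseline_ctr * (1.0 - decline)

def click_range(impressions: int, baseline_ctr: float,
                low: float = 0.20, high: float = 0.35) -> tuple[float, float]:
    """(fewest, most) expected clicks across the assumed 20-35% decline range."""
    if low > high:
        raise ValueError("low must not exceed high")
    return (clicks_with_aio(impressions, baseline_ctr, high),
            clicks_with_aio(impressions, baseline_ctr, low))

if __name__ == "__main__":
    fewest, most = click_range(10_000, 0.25)  # hypothetical query-level inputs
    print(f"{fewest:,.0f} to {most:,.0f} clicks (baseline {10_000 * 0.25:,.0f})")
```

With 10,000 impressions and a 25% baseline CTR, the range works out to roughly 1,625 to 2,000 clicks instead of 2,500. That gap is what AI-answer inclusion has to make up.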

Your First Two Sprints: A Practical Playbook

Sprint 1 (Weeks 1–2)

- Audit entity consistency across site, listings, and profiles; implement Organization/LocalBusiness/Product schema with sameAs.

- Create/update Wikidata item; connect authoritative IDs.

- Review robots.txt and WAF rules; enable logging for AI crawlers.

- Identify three “canonical explainer” topics; ship concise, cited answer sections and FAQs; add update logs.

- Capture your AI visibility baseline across engines and priority prompts.

Sprint 2 (Weeks 3–4)

- Pitch one data-backed asset to top-tier outlets; syndicate responsibly.

- Launch a weekly remediation loop: test prompts, log issues, publish corrections, submit feedback in-product.

- Expand your sameAs graph; align names and descriptions across owned and third-party profiles.

- Instrument KPIs: citation counts by engine, sentiment/accuracy, SOV vs. competitors, and time-to-correction.

Quarterly operating cadence (repeatable)

- Refresh priority content with concise, dated updates; retire conflicting claims.

- Publish or update one proprietary dataset or benchmark per quarter.

- Review listings hygiene and reviews; update rich attributes.

- Reassess tool coverage and governance; export data to your BI stack for trend analysis.

Common Pitfalls to Avoid

- Treating AI visibility as an SEO bolt-on rather than a cross-functional program (content, PR, data, and engineering must coordinate).

- Chasing speculative controls like llms.txt as a silver bullet; as of 2025, stick to robots.txt plus WAF and monitoring.

- Over-optimizing single pages while neglecting entity clarity across your ecosystem—AIs need consistent signals everywhere.

- Ignoring measurement. Without baselines, you can’t credibly attribute improvements or secure ongoing investment.

Executive Summary for Stakeholders

If you need the one-slide version for leadership:

- Problem: AI answers intercept attention; traditional rankings no longer guarantee discovery.

- Strategy: Optimize to be cited and included inside AI answers. Focus on entity clarity, authoritative sources, structured data, freshness, and a weekly remediation loop.

- Investment: Add an AI-answer monitoring layer to your stack; fund quarterly proprietary content; maintain listings excellence.

- Risk: Monitor and correct hallucinations via in-product feedback; harden content with explicit, consistent claims and versioning.

- KPI: Track citations, share of voice, sentiment/accuracy, funnel coverage, and time-to-correction.

Source Notes and Further Reading

To keep this article concise, we embedded a limited set of primary references across sections. For convenience, here are representative anchors cited above:

- 2025 CTR impacts and AIO prevalence: Ahrefs AI Overviews reduce clicks, Semrush AI Overviews study, and Pew Research 2025 finding.

- Brand-controlled sources dominate AI citations: Search Engine Land on Yext’s 2025 analysis.

- Controls, crawlers, and proposals: Google robots.txt guide, Cloudflare investigation (2025), Perplexity bots documentation, and IETF draft on robots AI control.