Best Practices for Answer Engine Optimization (AEO) in 2025

Discover agency-suited AEO strategies for 2025: boost AI citations, track brand metrics, and measure visibility with actionable GEO techniques. Learn leading KPI frameworks.

If you run an agency, you can’t treat AI search as a black box anymore. Answer Engine Optimization (AEO) and Generative Engine Optimization (GEO) aren’t side projects—they’re measurable programs that affect how clients are seen in Google’s AI features, Perplexity, and ChatGPT-like assistants. Here’s the core idea: prioritize what you can track—citations, mentions, sentiment, and share of voice—and let those numbers guide your roadmap.

Key takeaways

Measure first: AI Share of Voice, citation rate, sentiment, and entity correctness should anchor your AEO/GEO plan.

Structure for extractability: use answer-first copy and complete JSON-LD schema; validate and iterate.

Optimize per platform: Google favors standard SEO readiness plus helpful content; Perplexity rewards clarity, recency, and citations; ChatGPT behavior varies—focus on provenance and extractable facts.

Run continuous cycles: audit → implement changes → monitor trends → iterate every 30 days.

AEO/GEO in 2025: what they are and why they differ from SEO

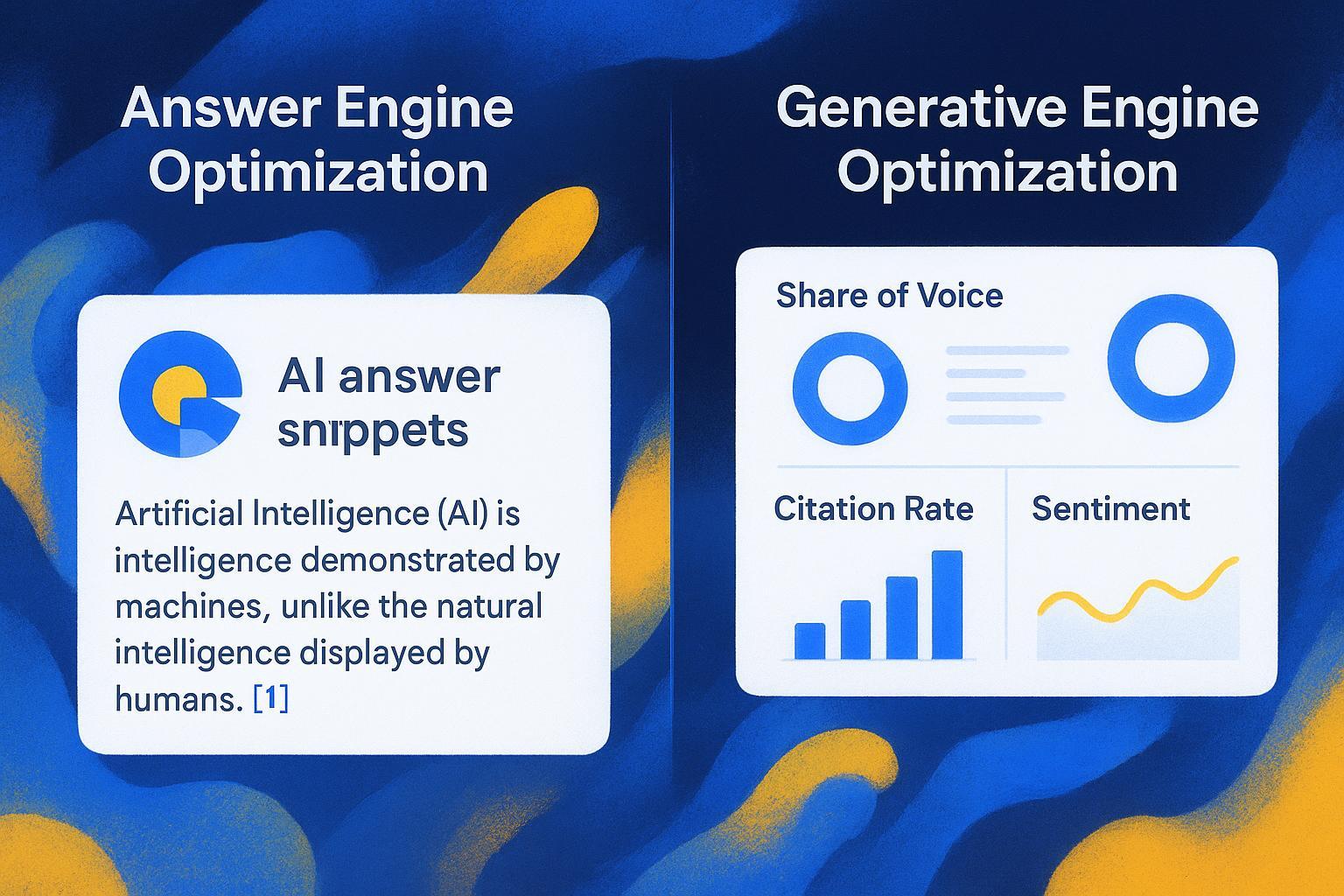

AEO targets getting your pages selected, summarized, and cited inside AI-generated answers. GEO widens the scope to any generative engine that retrieves, reasons, and composes outputs across sources. Traditional SEO remains the foundation, but the success signal shifts: engines need clear entities, extractable facts, and citation-worthy pages—not just rank-worthy ones.

Google’s own guidance makes this explicit: there are no special technical tags to “turn on” AI features. The fundamentals still apply: helpful, reliable, people-first content, strong technical hygiene, and complete structured data. See Google Search Central’s 2025 guidance on how AI features follow the same eligibility principles as the rest of Search, along with its advice on helpful content and structured data for AI surfaces.

If that’s the baseline, what’s different? Two things: how you structure information (answer-first, Q&A blocks, tidy tables) and how you prove credibility (citations, author/organization entities, consistent sameAs links). Those choices raise the odds your page becomes a cited source in AI answers.

The agency measurement stack: KPIs you should track weekly

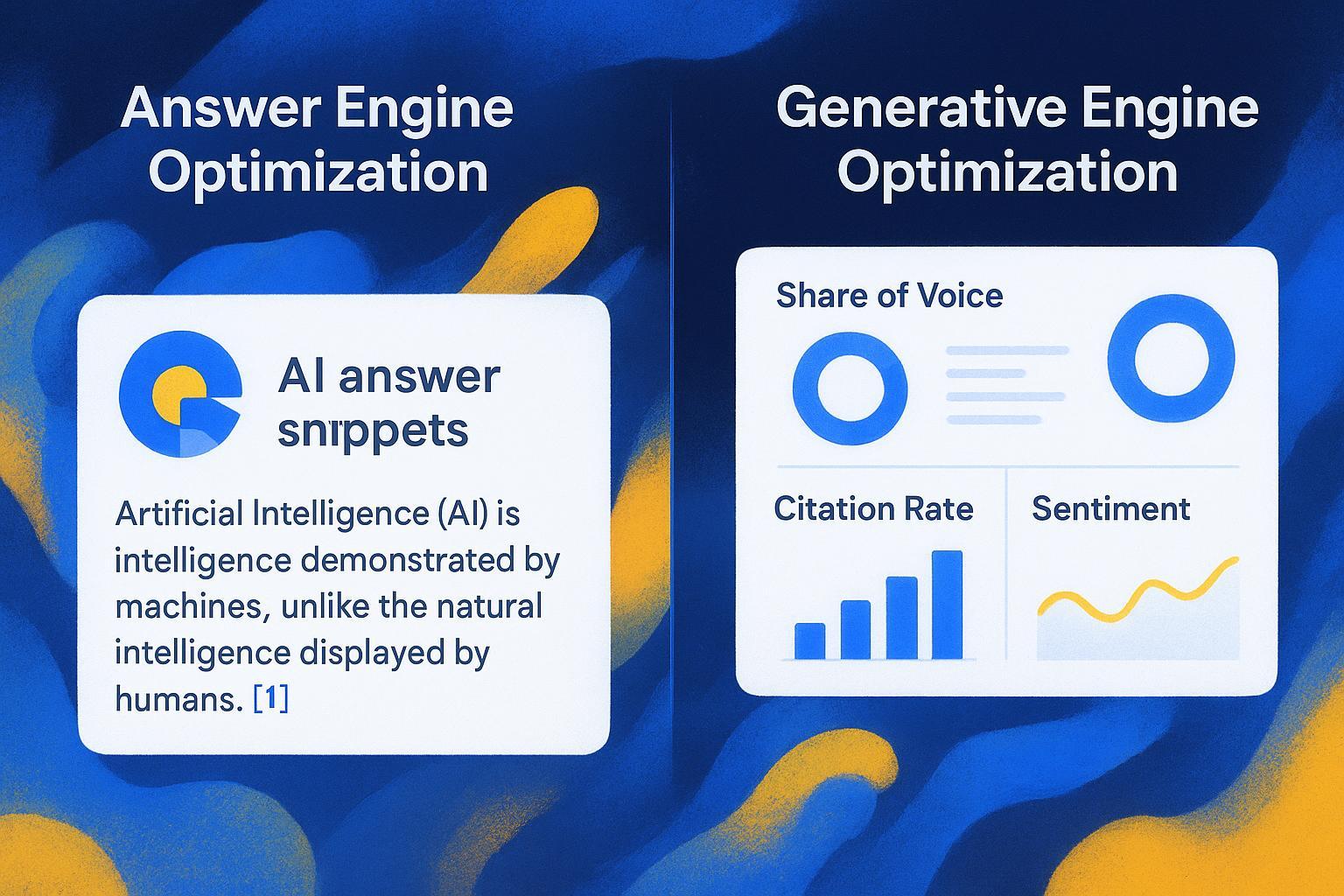

Winning AEO/GEO work looks more like product operations than one-off SEO tasks. Set up a measurement stack and revisit it weekly. Focus on AI Share of Voice (the percentage of relevant AI answers that mention your client), citation rate (the share of answers that link to your domain), sentiment (tone with context and source), entity correctness (accurate brand/product representation), time-to-citation (days from publishing or updating to first AI citation), and the share of citations by content type (how-to, FAQ, product, guides). Pair these with SEO metrics (traffic, impressions) and add plain-English interpretations—“SOV rose 6 points after schema cleanup; most gains came from refreshed FAQs and a how-to guide.” For a 90–120 day plan aligned to agency cadence, see AEO Best Practices 2025 Executive Guide.
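To make the KPI definitions above concrete, here is a minimal Python sketch of how AI Share of Voice, citation rate, and time-to-citation could be computed from captured answers. The `AnswerRecord` shape, the engine labels, and the sample queries are assumptions for illustration only; they are not the output format of any particular monitoring tool.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class AnswerRecord:
    """One AI answer captured for one query on one engine (hypothetical shape)."""
    engine: str
    query: str
    mentions_brand: bool  # the answer names the client
    cites_domain: bool    # the answer links to the client's domain


def share_of_voice(records: list[AnswerRecord]) -> float:
    """Percentage of captured answers that mention the client."""
    if not records:
        return 0.0
    return 100.0 * sum(r.mentions_brand for r in records) / len(records)


def citation_rate(records: list[AnswerRecord]) -> float:
    """Percentage of captured answers that link to the client's domain."""
    if not records:
        return 0.0
    return 100.0 * sum(r.cites_domain for r in records) / len(records)


def time_to_citation(published: date, first_cited: Optional[date]) -> Optional[int]:
    """Days from publishing or updating to the first observed citation."""
    if first_cited is None:
        return None  # not cited yet
    return (first_cited - published).days


# Illustrative sample data, not real measurements.
records = [
    AnswerRecord("perplexity", "best crm for agencies", True, True),
    AnswerRecord("google_ai", "best crm for agencies", True, False),
    AnswerRecord("chatgpt", "best crm for agencies", False, False),
    AnswerRecord("perplexity", "crm pricing comparison", False, False),
]

print(f"AI Share of Voice: {share_of_voice(records):.1f}%")
print(f"Citation rate: {citation_rate(records):.1f}%")
print("Time-to-citation (days):", time_to_citation(date(2025, 11, 10), date(2025, 11, 24)))
```

Keeping one record per query per engine means the same functions can be run on a filtered subset, for example Perplexity only, without changing the definitions.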

Disclosure: Geneo is our product. As an agency-friendly example, a monitoring stack can include neutral tools that track AI mentions and citations across engines and competitors. Geneo’s dashboards report a Brand Visibility Score and break down brand mentions, link visibility, and reference counts; formulas are proprietary, but the outputs map to the KPI model above.

Audit → optimize → monitor: a repeatable workflow

Run a 30-day cycle built on four moves. First, scan engines with a fixed query set (Google AI features, Perplexity, ChatGPT prompts that ask for sources) and capture which brands are cited and how. Second, analyze patterns against your baseline KPIs and competitors to expose bottlenecks such as uncited product pages, incomplete how-to steps, or inconsistent entities. Third, optimize: implement answer-first summaries, add Q&A subheads, clarify facts with attributions, and fix JSON-LD so it has complete properties, nested author/organization entities, sameAs links, and dates; validate before redeploying. Fourth, monitor deltas after 2–4 weeks and note where FAQs begin to pick up citations or where entity fixes reduce misattribution. For templates and a deeper audit process, see How to Perform an AI Visibility Audit for Your Brand.
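The "monitor deltas" step can be as simple as comparing two KPI snapshots. The sketch below assumes each cycle produces a plain dictionary of KPI values; the KPI names, the sample numbers, and the 2-point threshold are illustrative assumptions, not recommended targets.

```python
def kpi_deltas(baseline: dict[str, float], current: dict[str, float]) -> dict[str, float]:
    """Change in each KPI present in both snapshots (current minus baseline)."""
    return {k: round(current[k] - baseline[k], 2) for k in baseline if k in current}


def flag_changes(deltas: dict[str, float], threshold: float = 2.0) -> list[str]:
    """Names of KPIs whose absolute change meets the threshold, sorted by name."""
    return sorted(k for k, d in deltas.items() if abs(d) >= threshold)


# Illustrative snapshots from two consecutive cycles (made-up values).
baseline = {"share_of_voice": 18.0, "citation_rate": 7.5, "entity_correctness": 90.0}
current = {"share_of_voice": 24.0, "citation_rate": 8.0, "entity_correctness": 86.0}

deltas = kpi_deltas(baseline, current)
for name in flag_changes(deltas):
    print(f"{name}: {deltas[name]:+.1f} points")
```

Flagging both gains and drops matters here: a rise in Share of Voice alongside a drop in entity correctness would suggest the brand is being mentioned more often but described less accurately.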

Technical implementation that drives extractable answers

AI engines reward clarity they can parse and quote. That’s equal parts content pattern and machine-readable context. Default to JSON-LD in the head; validate with Google’s Rich Results Test and the Schema Markup Validator at validator.schema.org. Use the right types and fill them thoroughly (Article, FAQPage, HowTo, Product, Organization, Person), nest the author Person within Article, and link Organization and Person to authoritative profiles via sameAs. Add concrete facts (quantities, dates, SKUs/GTINs, prices, citations), and consider tables and FAQs for engines that prefer verifiable statements. For background and code-oriented guidance, see How to Integrate Schema Markup for AI Search Engines and Google’s 2025 note on succeeding in AI features.

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "How to audit AI citations in 30 minutes",
  "author": {
    "@type": "Person",
    "name": "Alex Rivera",
    "sameAs": [
      "https://www.linkedin.com/in/alexrivera",
      "https://en.wikipedia.org/wiki/Example_Expert"
    ]
  },
  "publisher": {
    "@type": "Organization",
    "name": "Example Agency",
    "sameAs": [
      "https://twitter.com/exampleagency",
      "https://www.wikidata.org/wiki/Q43229"
    ]
  },
  "datePublished": "2025-11-10",
  "dateModified": "2025-12-01",
  "mainEntityOfPage": {
    "@type": "WebPage",
    "@id": "https://example.com/ai-citation-audit"
  }
}
</script>
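Before running markup through the external validators, a quick in-house completeness check can catch obvious gaps. The sketch below is one possible approach, assuming a single Article object with a single nested author and publisher. The list of fields it checks is an agency checklist drawn from the advice above, not a Schema.org or Google requirement, and it does not replace the Rich Results Test.

```python
import json


def missing_article_fields(jsonld_text: str) -> list[str]:
    """Return human-readable gaps in an Article JSON-LD object (house checklist)."""
    data = json.loads(jsonld_text)
    problems = []

    # Top-level properties this checklist expects on every Article.
    for key in ("headline", "datePublished", "dateModified", "author", "publisher"):
        if key not in data:
            problems.append(f"missing {key}")

    # Author and publisher should be nested entities with sameAs links.
    for role, expected_type in (("author", "Person"), ("publisher", "Organization")):
        entity = data.get(role)
        if entity is None:
            continue  # already reported as missing above
        if not isinstance(entity, dict):
            problems.append(f"{role} is not a nested object")
            continue
        if entity.get("@type") != expected_type:
            problems.append(f"{role} @type is not {expected_type}")
        if not entity.get("sameAs"):
            problems.append(f"{role} has no sameAs links")

    return problems


# Deliberately incomplete sample markup for illustration.
snippet = """
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "How to audit AI citations in 30 minutes",
  "author": {"@type": "Person", "name": "Alex Rivera"},
  "datePublished": "2025-11-10"
}
"""

for problem in missing_article_fields(snippet):
    print(problem)
```

A check like this fits naturally into a pre-deploy step, so incomplete markup is caught before the page ships rather than in the next audit.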

Platform-specific tactics: what’s different across engines?

Behaviors vary by engine and even by mode. Treat this field guide as directional, not deterministic.

| Engine | How citations are shown | What tends to be rewarded | Notes/refs |
|---|---|---|---|
| Google AI features | Inline links/cards referencing multiple sources | Standard SEO readiness, helpful content, complete structured data, strong entities | See AI features and your website (Google, 2025) |
| Perplexity | Numbered inline citations with source panels | Clear, verifiable statements; recency; structured pages; authoritative profiles | See How Perplexity works (Help Center) |
| ChatGPT | Variable; depends on prompt/model/context; enterprise and tools may show sources | Extractable, well-attributed facts; clear provenance | See ChatGPT release notes |

For Google, keep SEO fundamentals tight and evidence-rich content current; avoid schema misuse. For Perplexity, publish skimmable facts, FAQs, and data tables with attribution; recency and clear claims matter. For ChatGPT, design content others can cite reliably and ask for sources when testing internally.

Common pitfalls and how to avoid them

Teams often over-index on Google-only metrics while neglecting Perplexity and other engines; misuse FAQ schema on non-FAQ content; ship thin updates without new facts; ignore crawlability and indexation basics; and assume deterministic behavior from ChatGPT. The fix is straightforward: measure across engines, apply schema only where appropriate, expand with fresh data and explicit attributions, confirm technical hygiene, and test prompts with source requests.

Reporting cadence and executive communication

Adopt monthly sprints with a fixed query set plus a rotating exploratory set. Show trend lines for SOV and citation rate, annotate the changes shipped in the prior sprint, and break down citations by content type and engine to highlight where FAQs outperform how-tos or where product specs win on Perplexity. Include a compact risk register covering misattributions, entity confusion, and outdated facts. For sentiment rubrics and audit templates, see How to Perform an AI Visibility Audit.
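For the breakdown by content type and engine, a small tally is often enough to populate a sprint report. This sketch assumes each observed citation is logged as an `(engine, content_type)` pair; the labels and the sample rows are made up for illustration.

```python
from collections import Counter

# Illustrative citation log: one (engine, content_type) pair per observed citation.
citations = [
    ("perplexity", "faq"),
    ("perplexity", "product"),
    ("perplexity", "faq"),
    ("google_ai", "how-to"),
    ("google_ai", "faq"),
    ("chatgpt", "guide"),
]


def citation_breakdown(rows: list[tuple[str, str]]) -> Counter:
    """Count citations per (engine, content type) pair."""
    return Counter(rows)


def share_by_content_type(rows: list[tuple[str, str]]) -> dict[str, float]:
    """Percentage of all citations earned by each content type."""
    totals = Counter(content_type for _, content_type in rows)
    n = sum(totals.values())
    if n == 0:
        return {}
    return {ct: round(100.0 * count / n, 1) for ct, count in totals.items()}


for (engine, content_type), count in sorted(citation_breakdown(citations).items()):
    print(f"{engine:<12}{content_type:<10}{count}")

print(share_by_content_type(citations))
```

The per-pair counts answer "where do product specs win on a given engine", while the content-type shares show which formats to double down on across engines.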

Next steps: a pragmatic checklist for this quarter

Define your query set and competing domains; tag by intent and product line.

Stand up dashboards for SOV, citation rate, sentiment, and entity correctness.

Fix structured data on 10–15 high-impact pages; add answer-first blocks and Q&A heads.

Refresh recency-sensitive pages with new facts and tables; add attributions.

Run a 30-day cycle; compare deltas; double down on formats picking up citations.

If you prefer a ready-made workflow reference, review the internal tutorials linked above. Geneo can serve as a neutral monitoring layer across engines while you run this playbook—no silver bullets, just consistent measurement and iteration.