2025 Best Practices for AI Search Optimization with Cross-Channel Data

Discover authoritative strategies to unify social, search, and AI data for optimized brand visibility in generative search engines. Includes actionable workflows, KPI dashboards, and Geneo integration for professionals.

If your brand’s visibility now lives across Google AI Overviews/Gemini, Bing/Copilot, ChatGPT, and Perplexity, optimizing in just one channel is no longer enough. In 2025, the teams winning AI search are unifying social, classic SEO, and AI citation data into one operating model—and iterating weekly.

Why this matters now: multiple independent analyses show AI features are siphoning clicks from classic blue links. In 2025 roundups, position‑1 CTR drops of roughly 15–35% were reported when AI Overviews appear, with the steepest declines on non‑branded informational queries, as summarized in the 2025 coverage by Search Engine Land on AI Overviews CTR impact. At the macro level, zero‑click behavior has become the norm: in the 2024 US/EU study of billions of searches, SparkToro’s zero‑click analysis found that only about 36–37% of Google searches resulted in an open‑web click (360 per 1,000 searches in the US and 374 per 1,000 in the EU). At the same time, AI assistants are starting to send measurable, if still modest, traffic, with SMB sites seeing AI referrals grow over six months per Search Engine Land’s 2025 coverage of SMB AI referrals.

This guide distills how practitioners are using cross‑channel data to systematically earn citations, maintain brand presence in AI answers, and keep performance measurable.

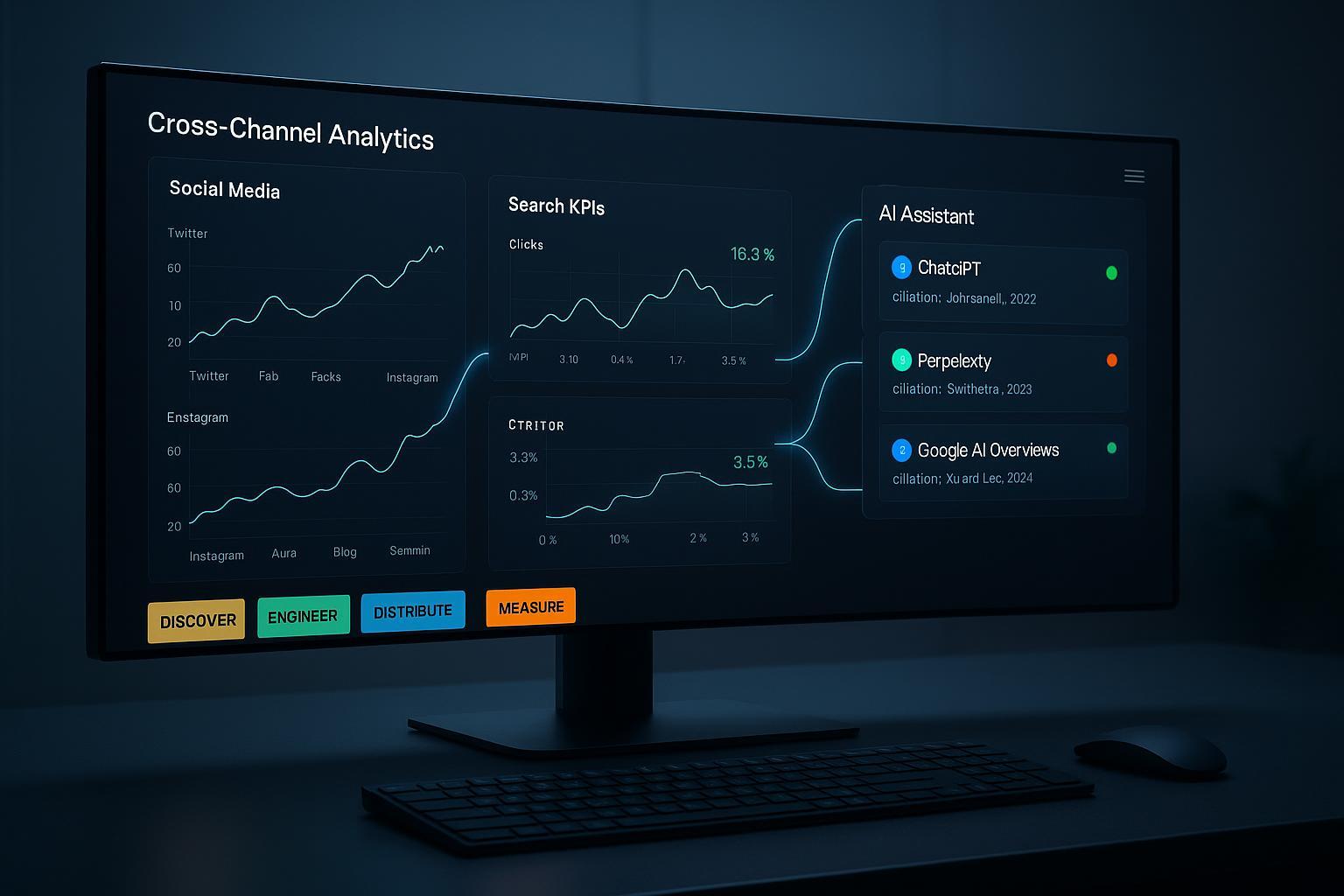

The operating model: Discover → Engineer → Distribute → Measure

Over the past year, the most resilient teams I’ve worked with use a four‑loop operating model:

- Discover: Consolidate signals from social, search, and AI assistants. Identify what the market is asking, where you appear, and how you’re described.

- Engineer: Structure content and entities so AI systems can parse, trust, and cite you.

- Distribute: Build cross‑channel authority—especially in sources AI tends to cite (news, expert blogs, forums, YouTube).

- Measure: Track AI‑native KPIs alongside SEO metrics, annotate actions, and iterate.

Each loop can be run in about a week. Because the loops can overlap, a full cycle takes 2–4 weeks.

1) Discover: unify signals across social, search, and AI

Your discovery loop should answer three questions: What are people asking? How do AI systems answer? Where is our brand cited, and with what sentiment?

Practical inputs to unify:

- AI answers and citations: Save the current answers that Google AI Overviews/Gemini, ChatGPT (with browsing), and Perplexity present for your core topics, together with their cited sources.

- Search demand and classic SEO: Pull your Google Search Console queries, impressions, and positions; map to your informational and commercial themes.

- Social signals: Track volume, sentiment, and influencers around your main entities and topics.

- Crawling/bot footprint: Audit which AI agents are hitting your site and whether you allow/deny them.

Ground truth sources to rely on:

- Google confirms AI features do not require special markup; eligibility follows helpful content, E‑E‑A‑T, and structured data best practices per Google Search Central’s AI features guidance (2025).

- OpenAI details how to manage GPTBot and OAI‑SearchBot via robots.txt and user‑agent controls in OpenAI’s bots documentation (2025).

- Perplexity documents PerplexityBot and Perplexity‑User fetches, along with robots.txt considerations, in Perplexity’s bots guide (2025).

How to operationalize in one week:

- Compile a “topic × assistant” grid. For your top 20 topics, capture the current answers from Google AI Overviews, ChatGPT (with links), and Perplexity. Note the cited domains and sentiment of references to your brand.

- Map search demand. From GSC, export queries and cluster them by intent; label topics that currently trigger AI Overviews.

- Pull social data. Use your social listening tool to identify narratives, sentiment, and creators discussing your topics.

- Bot audit. Check server logs and security/CDN analytics for GPTBot and PerplexityBot traffic; verify your current robots.txt stance.
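
The bot audit in the last step can be sketched as a small log scan. This is a minimal illustration, assuming access logs in the common "combined" format where the user agent is the last quoted field; the sample lines and their simplified user-agent strings are made up for the example, and the token list only covers the crawlers named in this guide.

```python
import re
from collections import Counter

# User-agent tokens for the crawlers named in this guide; extend as needed.
AI_BOT_TOKENS = ("GPTBot", "OAI-SearchBot", "PerplexityBot", "Perplexity-User", "bingbot")

# Assumption: combined log format, where the user agent is the final quoted field.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')

def count_ai_bot_hits(log_lines):
    """Return a Counter of requests per AI bot token found in the user agent."""
    hits = Counter()
    for line in log_lines:
        match = UA_PATTERN.search(line)
        if not match:
            continue
        user_agent = match.group(1).lower()
        for token in AI_BOT_TOKENS:
            if token.lower() in user_agent:
                hits[token] += 1
                break  # count each request at most once
    return hits

# Illustrative lines only; real user-agent strings are longer.
sample_log = [
    '203.0.113.5 - - [01/Mar/2025:10:00:00 +0000] "GET /guide HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.6 - - [01/Mar/2025:10:00:05 +0000] "GET /pricing HTTP/1.1" 200 734 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '203.0.113.5 - - [01/Mar/2025:10:01:00 +0000] "GET /faq HTTP/1.1" 200 301 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.9 - - [01/Mar/2025:10:02:00 +0000] "GET / HTTP/1.1" 200 999 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"',
]

for bot, count in count_ai_bot_hits(sample_log).most_common():
    print(f"{bot}: {count}")
```

User-agent strings can be spoofed, so treat these counts as a first pass and confirm important findings against the IP ranges or verification methods each vendor documents.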

Where Geneo helps in Discover:

- Use Geneo’s multi‑platform monitoring to see when your brand is cited in ChatGPT, Perplexity, and Google AI Overviews, and how the answer sentiment trends over time, as outlined in Geneo’s product overview and its walkthrough on tracking ChatGPT citations (2025 guide). You can also monitor Perplexity keyword appearances with the steps in Geneo’s Perplexity ranking guide.

2) Engineer: structure content and entities for AI parsing and citation

AI assistants cite sources they can parse, trust, and align to user intent. In practice, that means engineering your pages for chunk‑level clarity, identity, and provenance.

Proven tactics:

- Chunk, cite, clarify. Break long content into purpose‑built, titled sections that directly answer sub‑questions; include succinct, source‑linked claims and explicit expert authorship. This mirrors the “chunk‑cite‑clarify” pattern described in the 2025 analysis of AI search content by Search Engine Land’s chunk‑cite‑clarify framework.

- Strengthen entity identity. Use Organization and Person schema with sameAs to authoritative profiles; give authors dedicated bio pages with credentials. Follow the baseline in Google’s Structured Data introduction and the Article schema reference.

- Add provenance and sources. Where you cite stats, link to the canonical page (original study, official docs). Assistants often favor well‑sourced, up‑to‑date, and expert‑written content.

- Embrace multimedia in instructive domains. In 2025, YouTube citations surged in AI Overviews for how‑to/health content, per Search Engine Land’s report on YouTube citations surge (2025). Consider short video demonstrations paired with textual steps and HowTo schema (when applicable and high‑quality).

- Keep to standard technical SEO. There’s no special markup for AI Overviews beyond what already improves eligibility and understanding, reiterated in Google’s AI features guidance (2025).
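
For the entity identity tactic above, here is a rough sketch of generating Organization and Person JSON‑LD from one source of truth so that names and sameAs links stay consistent across pages. The helper names, the example company, and every URL are hypothetical; the property names (name, url, sameAs, jobTitle, worksFor) are standard schema.org vocabulary.

```python
import json

def organization_jsonld(name, url, same_as):
    """Build a schema.org Organization object as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": list(same_as),
    }

def person_jsonld(name, job_title, bio_url, same_as, employer):
    """Build a schema.org Person object that points at the employer organization."""
    return {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        "url": bio_url,
        "sameAs": list(same_as),
        "worksFor": {
            "@type": "Organization",
            "name": employer["name"],
            "url": employer["url"],
        },
    }

def to_script_tag(data):
    """Serialize a dict into a JSON-LD script tag for the page head."""
    payload = json.dumps(data, indent=2).replace("</", "<\\/")  # keep "</script>" out of the payload
    return '<script type="application/ld+json">\n' + payload + "\n</script>"

# Hypothetical example data.
org = organization_jsonld(
    "Example Co",
    "https://www.example.com",
    ["https://www.linkedin.com/company/example-co"],
)
author = person_jsonld(
    "Jane Doe",
    "Head of Research",
    "https://www.example.com/authors/jane-doe",
    ["https://www.linkedin.com/in/jane-doe-example"],
    org,
)
print(to_script_tag(author))
```

Validate the generated markup with a structured data testing tool before shipping it, since this sketch does not check required or recommended properties.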

What to build in two sprints:

- Authority hubs (pillar pages) with clearly labeled sections that map to the queries you captured in Discover.

- FAQ/HowTo sections that meet quality bars; avoid low‑value or deprecated patterns (since 2023, Google shows FAQ rich results only for well‑known, authoritative government and health sites and no longer shows HowTo rich results).

- Structured author and organization identities, and a source library page linking out to the canonical research you repeatedly cite.

Where Geneo helps in Engineer:

- After publishing or updating a page, use Geneo to watch for shifts in AI answer coverage and sentiment that reference your brand or assets. Historical snapshots in Geneo let you verify whether chunking/schema changes correlate with improved citations across AI engines, as described in the Geneo overview.

3) Distribute: earn authority in the places AI assistants actually cite

Classic link‑building misses the mark if it ignores the sources AI prefers. Multiple 2025 studies show the assistants often cite different domains than the top 10 organic results. See the assistant overlap analyses in Ahrefs’ coverage of AI assistants vs. Google overlap (2025) and its finding that top‑mentioned sources differ by assistant in Ahrefs’ cross‑assistant source comparison.

Actionable distribution moves:

- PR for entity reinforcement. Secure expert quotes and features on authoritative industry publications and reputable directories that align with your entity graph. A 2025 correlation study across 75,000 brands suggested that brand web mentions correlate with AI Overview presence, as noted in Ahrefs’ brand mentions correlation analysis (2025).

- Creator and forum strategy. Pitch your subject‑matter experts to YouTube channels, podcasts, and specialized forums/communities that assistants draw citations from. Package your research into ready‑to‑cite artifacts (data pages, checklists, glossaries).

- YouTube micro‑content. Produce short, authoritative explainers that map to your “chunked” sections; link back to the canonical pillar page and include transcripts and timestamps. This aligns with the observed YouTube uptick in AI Overviews citations (2025).

- Ensure crawlability by the engines you want to influence. Bing/Copilot grounds answers in public web results and honors robots.txt, per Microsoft’s guidance on generative answers and public websites (2025). If Bing visibility matters, don’t block Bingbot.

Where Geneo helps in Distribute:

- Track which distribution wins (PR placements, creator features, YouTube uploads) show up most often in AI answers. Geneo’s AI citation and sentiment views make it easier to see whether a new PR win starts appearing inside Perplexity or ChatGPT answers—and to compare across brands or product lines within multi‑brand workspaces as shown on the Geneo homepage.

4) Measure: adopt AI‑native KPIs and integrate with SEO metrics

You can’t improve what you don’t track. In 2025, practitioners are layering AI‑native KPIs on top of their SEO dashboards.

Recommended AI‑native KPIs:

- Share of AI answers: the percentage of assistant answers that cite your brand/domain for a query set.

- AI citation count and cross‑assistant overlap: how many citations you earn, and how many assistants cite you for the same query, inspired by the overlap work in Ahrefs’ assistant comparison (2025).

- Sentiment of AI answers: tone of answers mentioning your brand over time.

- Attribution rate in AI outputs: how often your content is linked when your perspective is referenced, echoing new KPI discussions in Search Engine Land’s generative AI search KPIs (2025).
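
One way to make these definitions concrete is to compute them from the topic × assistant grid. The sketch below assumes each captured answer is stored as one record with the query, the assistant, the set of cited domains, and whether the brand was mentioned; the record shape, field names, and sample data are illustrative choices for this example only. Sentiment is left out because it needs a separate scoring step.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Observation:
    query: str
    assistant: str
    cited_domains: frozenset
    brand_mentioned: bool

def ai_kpis(observations, domain):
    """Compute share of AI answers, citation count, overlap, and attribution rate."""
    total = len(observations)
    cited = [o for o in observations if domain in o.cited_domains]
    mentioned = [o for o in observations if o.brand_mentioned]
    linked_mentions = [o for o in mentioned if domain in o.cited_domains]

    # Group the assistants that cite the domain by query to find overlap.
    assistants_by_query = defaultdict(set)
    for o in cited:
        assistants_by_query[o.query].add(o.assistant)
    overlap_queries = sorted(q for q, a in assistants_by_query.items() if len(a) >= 2)

    return {
        "share_of_ai_answers": len(cited) / total if total else 0.0,
        "citation_count": len(cited),
        "overlap_queries": overlap_queries,
        "attribution_rate": len(linked_mentions) / len(mentioned) if mentioned else 0.0,
    }

# Hypothetical grid: two queries, up to three assistants each.
grid = [
    Observation("what is geo", "chatgpt", frozenset({"example.com", "wikipedia.org"}), True),
    Observation("what is geo", "perplexity", frozenset({"example.com"}), True),
    Observation("what is geo", "google_aio", frozenset({"wikipedia.org"}), True),
    Observation("ai seo tools", "chatgpt", frozenset(), False),
]
kpis = ai_kpis(grid, "example.com")
print(kpis)
```

Here the brand is cited in 2 of 4 answers (a 50% share), two assistants overlap on one query, and 2 of the 3 answers that mention the brand also link to it.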

Combine with traditional SEO KPIs:

- GSC impressions, positions, and CTR (especially for queries with AI Overviews).

- Referral traffic from AI engines (where taggable or identifiable in logs/analytics) and assisted conversions.
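
Identifying AI referrals usually starts with the referrer hostname. The following is a small sketch that assumes your analytics or log export exposes the full referrer URL; the hostname map is an assumption to verify against your own data, since assistants change domains and do not always send a referrer.

```python
from urllib.parse import urlparse

# Assumed hostname-to-assistant map; check it against your own referrer data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "copilot.microsoft.com": "Copilot",
    "gemini.google.com": "Gemini",
}

def classify_referrer(referrer_url):
    """Return the assistant name for a referrer URL, or None if it is not a known AI host."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host)

for url in (
    "https://chatgpt.com/",
    "https://www.perplexity.ai/search?q=ai+search",
    "https://www.google.com/",
):
    print(url, "->", classify_referrer(url))
```

Referrers without a scheme (for example a bare "chatgpt.com") return None here, so normalize the export first if your tool strips the protocol.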

Tooling and dashboards:

- Vendors and analysts increasingly recommend tracking AI citations and sentiment; see the patterns summarized in Semrush’s AI search optimization guidance (2025).

- Build a single “source‑of‑truth” dashboard that merges AI‑native KPIs with SEO metrics and social signals. Annotate all distribution and content changes.

Where Geneo helps in Measure:

- Geneo’s monitoring of AI citations (ChatGPT, Perplexity, Google AI Overviews), answer sentiment, and historical snapshots gives you the longitudinal view needed to correlate work with outcomes. See details in the Geneo product overview and the step‑by‑step guides for ChatGPT citation tracking and Perplexity keyword tracking.

Technical controls and trade‑offs: bots, crawling, and freshness

Decide deliberately which AI agents you allow to crawl your content—there is no one‑size‑fits‑all answer.

- Robots and meta directives: Control crawling in robots.txt, and control indexing and snippets with robots meta tags and X‑Robots‑Tag HTTP headers, following Google’s robots meta tag guide (2025) and robots.txt creation doc.

- OpenAI bots: GPTBot and OAI‑SearchBot behaviors and controls are documented in OpenAI’s bots page (2025). Review logs for volume and consider rate limiting if necessary.

- Perplexity: Understand the difference between PerplexityBot crawls and Perplexity‑User fetches per Perplexity’s bots documentation (2025).

- Bing/Copilot: Generative answers are grounded in content that Bingbot can crawl, and robots.txt is honored, according to Microsoft’s guidance (2025).
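
To document your current stance per crawler, you can evaluate a robots.txt file with Python’s standard‑library parser. This is a sketch under simple assumptions: the robots.txt content below is a made‑up example, and the standard‑library parser may interpret edge cases (such as wildcards) differently from each vendor’s own crawler, so treat the result as a sanity check.

```python
from urllib.robotparser import RobotFileParser

def robots_stance(robots_txt, agents, path="/"):
    """Return {agent: True/False} for whether each agent may fetch the given path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, path) for agent in agents}

# Hypothetical policy: block GPTBot everywhere, keep /private/ closed to everyone.
example_robots = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""

agents = ["GPTBot", "OAI-SearchBot", "PerplexityBot", "bingbot"]
for agent, allowed in robots_stance(example_robots, agents).items():
    print(f"{agent}: {'allowed' if allowed else 'blocked'} on /")
```

Running the same check per template path (for example a docs path and a pricing path) gives you a table you can paste into the changelog alongside the rationale.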

Trade‑offs to consider:

- Allowing assistants to crawl may improve visibility and citations, but it increases bandwidth use and the risk of content reuse. Blocking protects proprietary assets at the cost of exposure.

- Over‑optimizing for “answer snippets” can reduce on‑site engagement; mitigate by building owned audiences (email, community) and designing content that encourages deeper exploration.

Sector nuances: where the playbook bends

- B2B and developer tools: Prioritize technical documentation clarity, changelogs, and GitHub/community presence. Assistants often cite technical docs and issue threads when they are well structured and current.

- Ecommerce: Product and review schema quality matters; create problem‑solution guides that map to natural language queries (e.g., “best fit for…”, “how to size…”). Short explainer videos can improve AI Overview coverage in how‑to contexts, per the 2025 YouTube citation surge report.

- Regulated industries (health/finance): Double down on author credentials, citations to primary literature, and compliance review. Expect a higher bar for eligibility and trust in Google’s AI features guidance (2025).

Common pitfalls (and how to avoid them)

- Data silos: Teams track GSC, social listening, and AI citations in separate tools with no shared view. Fix this with a unified dashboard and strict annotation discipline.

- Weak entity identity: Missing Organization/Person schema and inconsistent author bios erode trust. Standardize identity schema and link out to authoritative profiles.

- No provenance: Claims without canonical sources are less likely to be cited. Always link the original artifact (study, official doc) with descriptive anchors.

- Overlooking YouTube and forums: Assistants often cite these surfaces. Design distribution sprints that include video explainers and expert Q&A threads.

- Bot control whiplash: Flip‑flopping between blocking and allowing AI bots makes before/after comparisons meaningless. Decide per site section or template, then annotate each change and measure its effect.

A 90‑day implementation plan

Weeks 1–2: Discover and baseline

- Build your topic × assistant grid for 20–50 priority queries. Capture current answers, citations, and sentiment.

- Export and cluster GSC queries by intent; flag which trigger AI Overviews.

- Audit GPTBot/PerplexityBot/Bingbot access; document your robots.txt stance and rationale.

- Stand up a dashboard merging AI citations/sentiment with GSC and social signals. Annotate start state.
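
The export‑and‑cluster step can start with simple keyword rules before you invest in anything more sophisticated. The sketch below assumes a CSV with `query` and `impressions` columns (rename them to match your actual export) and uses a small, hypothetical keyword list per intent; it is a first‑pass labeler, not a substitute for manual review.

```python
import csv
import io
from collections import defaultdict

# Hypothetical keyword rules, checked in order; tune them to your market.
INTENT_RULES = [
    ("commercial", ("best", "vs", "pricing", "price", "review", "alternative")),
    ("informational", ("how", "what", "why", "guide", "examples")),
]

def label_intent(query):
    """Return the first intent whose keywords appear as whole words in the query."""
    words = set(query.lower().split())
    for intent, keywords in INTENT_RULES:
        if words & set(keywords):
            return intent
    return "other"

def cluster_queries(csv_text):
    """Group (query, impressions) pairs by intent label."""
    clusters = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        clusters[label_intent(row["query"])].append((row["query"], int(row["impressions"])))
    return dict(clusters)

# Made-up export rows for illustration.
sample_export = """query,impressions
how to track ai citations,1200
best ai seo tools,900
example login,300
"""

for intent, rows in cluster_queries(sample_export).items():
    print(intent, rows)
```

Add a manually maintained column for "triggers AI Overview" next to each query, since that flag comes from your own checks or a tracking tool.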

Weeks 3–6: Engineer the core assets

- Draft or refactor 5–10 pillar pages using chunk‑cite‑clarify and full identity schema. Add author bios with credentials.

- Produce 3–5 short YouTube explainers mapped to high‑value sections; transcribe them and embed them on the page.

- Publish a source library page with canonical references you cite often.

Weeks 7–10: Distribute with intent

- Launch a PR sprint targeting 5–10 authoritative publications/directories aligned to your entities.

- Pitch 3–5 creators (YouTube, podcast) and contribute expert answers in 3–4 niche forums/communities.

- Coordinate a social series that amplifies each pillar’s key chunks with visuals and pull‑quotes.

Weeks 11–13: Measure and iterate

- Evaluate changes in share of AI answers, AI citation counts, sentiment, and GSC CTR for AI‑Overview queries.

- Re‑run the topic × assistant grid; diff answers and citations vs. baseline.

- Keep what works, fix underperformers, and plan the next cycle.
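
Re‑running the grid is most useful when the comparison with the baseline is mechanical. A minimal sketch, assuming both snapshots are stored as a mapping from (query, assistant) to the set of cited domains; the storage shape and the sample snapshots are illustrative only.

```python
def diff_citations(baseline, current):
    """Compare two snapshots that map (query, assistant) -> set of cited domains."""
    changes = {}
    for key in sorted(set(baseline) | set(current)):
        before = baseline.get(key, set())
        after = current.get(key, set())
        gained, lost = after - before, before - after
        if gained or lost:
            changes[key] = {"gained": sorted(gained), "lost": sorted(lost)}
    return changes

# Hypothetical snapshots taken at the start and end of the cycle.
baseline = {
    ("what is geo", "chatgpt"): {"wikipedia.org"},
    ("what is geo", "perplexity"): {"example.com", "competitor.com"},
}
current = {
    ("what is geo", "chatgpt"): {"wikipedia.org", "example.com"},
    ("what is geo", "perplexity"): {"example.com"},
}

for (query, assistant), change in diff_citations(baseline, current).items():
    print(f"{query} / {assistant}: +{change['gained']} -{change['lost']}")
```

Pairing each gained or lost domain with the dated entries in your annotations makes it easier to tell which sprint plausibly caused the shift.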

Where Geneo fits the 90‑day plan:

- Day 1: Connect your brands in Geneo and pull historical AI snapshots and sentiment to establish baseline trends, per the Geneo overview.

- After each sprint: Use Geneo’s historical tracking to confirm whether new PR wins or YouTube uploads show up in ChatGPT/Perplexity answers. For workflows, see the ChatGPT citation guide and the Perplexity tracking walkthrough.

Governance: keep your playbook current

- Quarterly algorithm watch: Review official guidance and industry studies. Google reiterates the lack of special markup for AI features and the primacy of helpful content in its 2025 post on Succeeding in AI search. Expect assistants’ citation sets to evolve.

- Changelog discipline: Maintain a dated log of bot policy changes, schema updates, and distribution pushes. Correlate to KPI shifts.

- Risk and compliance: Especially in regulated categories, add a pre‑publish review for citations and claims. Link to the canonical artifact, not derivative coverage.

Key takeaways

- Treat AI search as a cross‑channel system. What happens in social, PR, YouTube, and forums increasingly determines who gets cited.

- Engineer content at the chunk level and make authorship and provenance unmistakable.

- Measure AI‑native KPIs—share of AI answers, citation count, cross‑assistant overlap, and sentiment—alongside SEO metrics to see the full picture, as framed by Search Engine Land’s KPI guidance (2025) and the assistant overlap findings in Ahrefs (2025).

- Decide intentionally on bot access; annotate and evaluate the trade‑offs using official controls from OpenAI’s bots docs and Microsoft’s Copilot guidance.

- Close the loop every 2–4 weeks. The brands that iterate fastest build durable AI search visibility.

If you want a purpose‑built way to track AI citations, sentiment, and historical snapshots across ChatGPT, Perplexity, and Google AI Overviews while collaborating across multiple brands, try Geneo. To get started, see the workflow guides on checking ChatGPT citations (2025) and tracking Perplexity keyword rankings.