Best Practices: Adapting Third-Party SEO Tools to Google's 2025 Data Limits

Discover proven strategies for SEO professionals to optimize third-party tools amid Google's 2025 data visibility changes. Actionable workflows, impression tracking, and GSC adaptation tips.

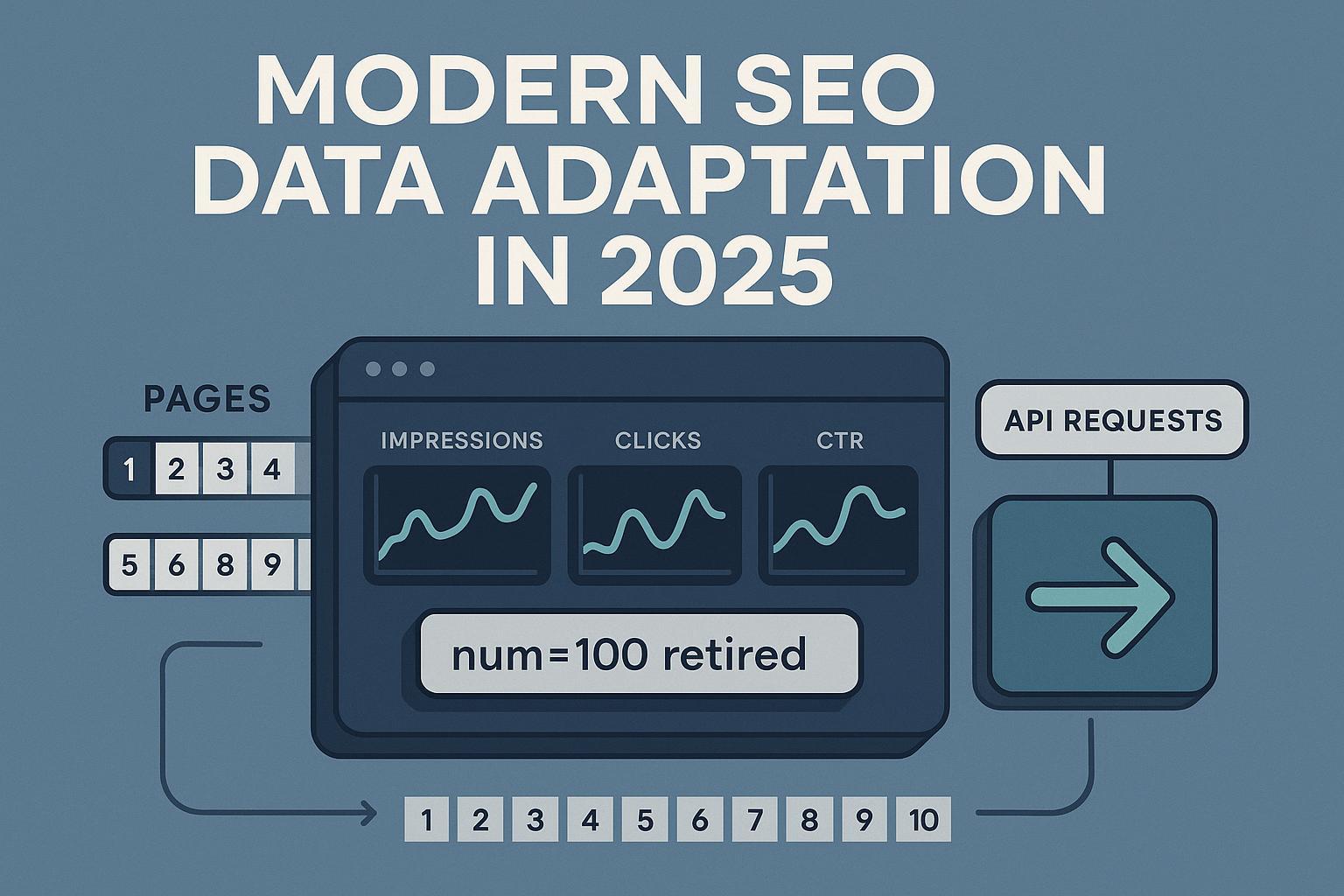

If your rank tracking and impression reporting looked “off” in September 2025, you are not alone. Google confirmed that it does not support the long‑used results‑per‑page parameter (commonly appended as “&num=100”), which removes the ability to reliably fetch 100 results with a single page request. A Google spokesperson reiterated this stance in mid‑September 2025, as reported by Search Engine Land (Sep 18, 2025). The downstream effect is a 10x increase in requests: reaching the Top 100 now takes ten paginated requests instead of one. That disrupted third‑party rank trackers and created temporary depth gaps.

Impressions and “unique ranking keywords” in Search Console shifted as well. Across a dataset of 319 properties, 87.7% of sites saw impressions decline and 77.6% lost unique ranking keywords, according to Search Engine Land’s 2025 analysis of the num=100 impact (Tyler Gargula). For most sites, clicks and average position remained comparatively stable. In practice, this points to a measurement normalization: fewer deep‑page impressions are being recorded, while top‑visibility data remains intact.

Below is a practitioner‑grade playbook to stabilize your tracking, recalibrate KPIs, and communicate clearly with stakeholders—without overreacting to the noise.

What changed and why tooling broke (briefly)

- Pagination reality: With “&num=100” retired, automated SERP retrieval requires paginating through ~10 pages to reach the Top 100. That increases infrastructure cost, timeouts, and rate‑limit exposure. Search Engine Land documented the immediate tool disruption in its coverage of rank‑tracking instability (Sep 15, 2025).

- GSC impression semantics still apply: In web search, Search Console counts an impression when your result is present on the page the user loads (scrolling not required); other surfaces such as Discover and News behave differently. See Google Developers’ guidance on using Search Console and Google Analytics data.

- AI Mode/AI Overviews roll into totals: In mid‑2025, Google indicated that AI Mode impressions and clicks are included in Search Console totals (not segmented separately in standard reports), as noted in industry coverage such as SERoundtable’s June 17, 2025 report that AI Mode counts toward Search Console totals.

Implication: You’ll see fewer deep‑position impressions, relatively stable clicks, and infrastructure‑driven rank depth limits in third‑party tools until vendors complete their engineering adaptations.

Best‑practice framework to adapt in 2025

The following practices are field‑tested across enterprise sites and agency portfolios. They focus on durability, cost governance, and decision quality.

1) Redesign keyword tracking with impact tiers

- Tier 1 (critical impact): Revenue/lead drivers, essential head terms, brand + high‑intent non‑brand. Track at least the Top 30 daily; schedule deeper pulls (Top 50/100) weekly or biweekly.

- Tier 2 (strategic growth): Mid‑tail expansions and seasonal priorities. Track 2–3x/week; Top 30 default with weekly deeper pulls as budget allows.

- Tier 3 (discovery/long‑tail): Rotate cohorts weekly/biweekly (sampling) and lean on GSC exports plus clustering for coverage.

- Cohort controls: Split by device and key geos where intent differs materially (e.g., mobile‑only behaviors). Document your sample frame so weekly reports compare like‑with‑like.

Why this works: Tiering preserves decision power on the terms that move revenue, while sampling prevents runaway costs from paginated SERP retrieval.
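A minimal sketch of the tier cadences above, assuming a simple in-memory keyword list. The specific cadences (Tier 2 on Monday, Wednesday, and Friday; Tier 3 split into four rotating cohorts, one pulled each Monday by ISO week) and the names `Keyword`, `cohort_of`, and `keywords_due` are hypothetical illustrations, not any tool's API. Cohorts are assigned with `hashlib` rather than the built-in `hash()`, because Python salts string hashes per process and the sample frame would otherwise change between runs.

```python
import hashlib
from dataclasses import dataclass
from datetime import date

TIER3_COHORTS = 4           # hypothetical: long-tail split into 4 rotating cohorts
TIER2_WEEKDAYS = {0, 2, 4}  # hypothetical: Mon, Wed, Fri for the "2-3x/week" cadence


@dataclass(frozen=True)
class Keyword:
    text: str
    tier: int              # 1 = critical, 2 = strategic growth, 3 = discovery/long-tail
    device: str = "mobile"
    geo: str = "US"


def cohort_of(kw: Keyword, n_cohorts: int = TIER3_COHORTS) -> int:
    """Stable cohort index from keyword + device + geo (same input, same cohort)."""
    key = f"{kw.text}|{kw.device}|{kw.geo}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % n_cohorts


def keywords_due(keywords: list[Keyword], day: date) -> list[Keyword]:
    """Return the keywords scheduled for tracking on `day`."""
    week = day.isocalendar()[1]  # ISO week number drives the Tier 3 rotation
    due = []
    for kw in keywords:
        if kw.tier == 1:
            due.append(kw)  # Tier 1: every day
        elif kw.tier == 2 and day.weekday() in TIER2_WEEKDAYS:
            due.append(kw)  # Tier 2: selected weekdays
        elif (kw.tier == 3 and day.weekday() == 0
              and cohort_of(kw) == week % TIER3_COHORTS):
            due.append(kw)  # Tier 3: one cohort per week, pulled on Mondays
    return due
```

Over any four consecutive Mondays, every Tier 3 keyword is pulled exactly once, which keeps long-tail cost flat while the documented sample frame stays reproducible.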

2) Embrace pagination and set depth policies

- Engineering reality: Programmatically paginate SERPs; set rate limits, retries, and exponential backoff to reduce failure rates.

- Depth policy that balances cost vs. intelligence:

  - Daily: Guarantee Top 30 for Tier 1.

  - Weekly: Top 50/100 for Tier 1 and Top 50 for Tier 2.

  - Biweekly/monthly: Deeper audits for Tier 2/3 if needed.

- Feature tracking: Parse and tag impactful modules (Top Stories, Video, People Also Ask, AI Overviews indicators) because features often shift CTR more than a 1–2 position move.
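The depth policy and the retry advice can be combined in a few lines. This is a sketch under stated assumptions: `fetch_page(keyword, start)` is a hypothetical callable supplied by your SERP provider that returns up to 10 organic URLs per page and raises the hypothetical `RateLimited` error when throttled, and the weekly deep pull is assumed to run on Mondays. Offsets step by 10, mirroring how Google paginates organic results, so a Top 30 pull costs 3 requests and a Top 100 pull costs 10.

```python
import random
import time
from datetime import date
from typing import Callable

RESULTS_PER_PAGE = 10


class RateLimited(Exception):
    """Hypothetical error raised by your SERP fetcher when throttled."""


def depth_for(tier: int, day: date) -> int:
    """Daily Top 30; on the (assumed) Monday deep pull, Top 100 / Top 50."""
    weekly = day.weekday() == 0
    if tier == 1:
        return 100 if weekly else 30
    if tier == 2:
        return 50 if weekly else 30
    return 30  # Tier 3: deeper audits are scheduled separately


def with_backoff(call: Callable[[], list[str]], max_retries: int = 5,
                 base_delay: float = 1.0, sleep=time.sleep) -> list[str]:
    """Retry `call` on RateLimited with exponential backoff plus jitter."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimited:
            if attempt == max_retries:
                raise  # give up and surface the error to the job log
            delay = base_delay * (2 ** attempt)  # 1s, 2s, 4s, ...
            sleep(delay + random.uniform(0, delay / 2))  # jitter spreads retries
    raise AssertionError("unreachable")


def fetch_serp(keyword: str, depth: int,
               fetch_page: Callable[[str, int], list[str]],
               sleep=time.sleep) -> list[str]:
    """Paginate in steps of 10 until `depth` results or an empty page."""
    results: list[str] = []
    for start in range(0, depth, RESULTS_PER_PAGE):
        page = with_backoff(lambda: fetch_page(keyword, start), sleep=sleep)
        if not page:
            break  # the SERP ran out before the requested depth
        results.extend(page)
    return results[:depth]
```

The `sleep` parameter is injectable so the retry logic can be tested without waiting; in production the default `time.sleep` applies.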

3) Make GSC your measurement backbone

- Treat clicks and impressions from Search Console as your single source of truth for outcomes. Use the Search Analytics API to automate daily exports of clicks, impressions, CTR, and position by date, device, country, query, and page, starting with Google’s Search Analytics API query endpoint.

- Recognize limits and plan around them. The API returns top rows rather than guaranteeing every row, and there are usage ceilings per project; review the Search Console API usage limits. For large sites, partition pulls (e.g., by country and device) to reduce gaps relative to the UI.

- Continuity markers: Annotate September 2025 as a methodological break. Maintain pre/post baselines at keyword and page levels so the measurement change is not misread as a performance decline.

- KPI recalibration: Elevate clicks and CTR as primary health indicators. Add “share of impressions within Top 10 positions” and rank distribution (Top 3/Top 10/11–30/>30) instead of fixating on raw impressions.
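The daily export described in this section can be sketched as a paging loop. It assumes you already have an authorized `google-api-python-client` service (for example `build("searchconsole", "v1", credentials=creds)`) and pass in its `searchanalytics().query(siteUrl=..., body=...).execute()` call as `query_fn`; injecting the call keeps the paging logic testable offline. The request fields (`startDate`, `endDate`, `dimensions`, `type`, `rowLimit`, `startRow`) follow the Search Analytics query spec, and 25,000 is the documented per-request `rowLimit` maximum at the time of writing, so verify it against the current reference. `export_day` is a hypothetical name.

```python
from typing import Any, Callable, Iterator

ROW_LIMIT = 25_000  # per-request maximum in the Search Analytics query spec

QueryFn = Callable[[dict[str, Any]], dict[str, Any]]


def export_day(query_fn: QueryFn, day: str,
               dimensions: tuple[str, ...] = ("date", "device", "country", "query", "page"),
               search_type: str = "web") -> Iterator[dict[str, Any]]:
    """Yield one flat dict per row for a single day, paging with startRow."""
    start_row = 0
    while True:
        body = {
            "startDate": day,
            "endDate": day,
            "dimensions": list(dimensions),
            "type": search_type,
            "rowLimit": ROW_LIMIT,
            "startRow": start_row,
        }
        rows = query_fn(body).get("rows", [])  # "rows" is omitted when empty
        for row in rows:
            record = dict(zip(dimensions, row["keys"]))  # keys follow dimension order
            record.update(clicks=row["clicks"], impressions=row["impressions"],
                          ctr=row["ctr"], position=row["position"])
            yield record
        if len(rows) < ROW_LIMIT:
            break  # a short page means there are no further rows
        start_row += ROW_LIMIT
```

To run it against a real property, pass something like `lambda body: service.searchanalytics().query(siteUrl="sc-domain:example.com", body=body).execute()` as `query_fn` and write the yielded rows to your warehouse with the run date.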

4) Automation and cost governance

- Budgeting: Estimate request volumes as keywords × pages × devices × locations. Put guards on job runners to prevent overruns.

- Batch scheduling: Run deep crawls outside peak hours; consolidate long‑tail cohorts into weekly windows.

- Data lineage: Version datasets with run IDs and parameters (depth, pagination, device, geo). Persist logs for rate‑limit errors and retries—auditable reporting saves you during stakeholder reviews.
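The budgeting formula (keywords × pages × devices × locations) translates directly into code, and the same estimate can act as a hard stop for the job runner. This sketch assumes 10 results per page and a daily request cap you choose; `TrackingPlan`, `estimate_requests`, `guard`, `BudgetExceeded`, and `run_id` are illustrative names. The deterministic run ID supports the data-lineage practice: identical parameters on the same date always produce the same ID, and any parameter change produces a new one.

```python
import hashlib
import json
import math
from dataclasses import asdict, dataclass

RESULTS_PER_PAGE = 10


@dataclass(frozen=True)
class TrackingPlan:
    keywords: int
    depth: int      # e.g. 30 for daily Tier 1, 100 for the weekly deep pull
    devices: int
    locations: int

    @property
    def pages(self) -> int:
        return math.ceil(self.depth / RESULTS_PER_PAGE)


class BudgetExceeded(RuntimeError):
    pass


def estimate_requests(plans: list[TrackingPlan]) -> int:
    """keywords x pages x devices x locations, summed over all plans."""
    return sum(p.keywords * p.pages * p.devices * p.locations for p in plans)


def guard(plans: list[TrackingPlan], daily_cap: int) -> int:
    """Refuse to start a run whose estimated request volume exceeds the cap."""
    total = estimate_requests(plans)
    if total > daily_cap:
        raise BudgetExceeded(f"{total} requests planned, cap is {daily_cap}")
    return total


def run_id(plans: list[TrackingPlan], run_date: str) -> str:
    """Deterministic ID derived from the run date and all plan parameters."""
    payload = json.dumps({"date": run_date, "plans": [asdict(p) for p in plans]},
                         sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
```

As a worked example, 500 Tier 1 keywords at Top 30 on 2 devices in 3 locations cost 500 × 3 × 2 × 3 = 9,000 requests per day; the same set at Top 100 costs 500 × 10 × 2 × 3 = 30,000, which is why deep pulls belong on a weekly cadence.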

5) Reporting and stakeholder communication

- Methodology footnote (add to every report): “In September 2025, Google confirmed it does not support the results‑per‑page parameter (‘num=100’). Rank depth collection now uses pagination; our policy ensures daily Top 30 tracking for critical terms and scheduled deeper pulls. Search Console remains authoritative for clicks/impressions; raw impressions since Sep 2025 may not be directly comparable to prior periods.”

- Client memo highlights:

  - Expect lower impressions and fewer “ranking keywords,” especially beyond pages 2–3.

  - Priority terms and clicks are the North Star; these remain stable in most cases.

  - We’ve implemented sampling and depth policies to balance coverage with accuracy.

A 30‑day stabilization workflow (agency SOP)

Week 1

- Freeze impression‑based KPIs; annotate dashboards with the September 2025 change.

- Rebuild keyword tiers and depth policies. Validate daily GSC exports by device/country.

Week 2

- Implement cohort sampling for long‑tail. Schedule weekly Top 100 pulls for Tier 1.

- Draft and circulate the client memo; align on new KPI definitions and baselines.

Week 3

- Rebuild dashboards: clicks, CTR, share of Top 10 impressions, and rank distribution.

- Add SERP feature flags and AI Overview presence markers where detectable.

Week 4

- QA across representative properties; compare tool ranks vs. GSC average position on Tier 1. Calibrate cohort sizes to meet acceptable error tolerances.
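Two small calculations support this QA step: the average gap between tool ranks and GSC average position for Tier 1 keywords, and a cohort size for a chosen error tolerance. The sketch assumes the tolerance is expressed as a margin of error on a proportion (for example, the share of sampled long-tail keywords ranking in the Top 10) and uses the standard normal-approximation sample-size formula with a finite population correction; that framing is an assumption, not a prescribed method. Because GSC position is an average across impressions, some gap against a point-in-time tool rank is expected. Function names are hypothetical.

```python
import math
from statistics import NormalDist


def mean_abs_rank_gap(tool_ranks: dict[str, float],
                      gsc_positions: dict[str, float]) -> float:
    """Mean |tool rank - GSC average position| over keywords present in both."""
    shared = tool_ranks.keys() & gsc_positions.keys()
    if not shared:
        raise ValueError("no overlapping keywords to compare")
    return sum(abs(tool_ranks[k] - gsc_positions[k]) for k in shared) / len(shared)


def cohort_size(margin: float, population: int,
                confidence: float = 0.95, p: float = 0.5) -> int:
    """Sample size for estimating a proportion within +/- margin.

    n0 = z^2 * p * (1 - p) / margin^2, then the finite population correction
    n = n0 / (1 + (n0 - 1) / N). p = 0.5 is the most conservative choice.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 at 95%
    n0 = z * z * p * (1 - p) / (margin * margin)
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)
```

For example, a ±5% tolerance at 95% confidence needs a 278-keyword cohort from a 1,000-keyword long tail, but about 385 once the population is very large, so cohort sizes grow far more slowly than the keyword set.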

Practical example (tooling assist, mid‑funnel)

- You can centralize AI surface monitoring and historical trend annotations as part of this SOP. For instance, Geneo consolidates AI search visibility signals (e.g., brand mentions in AI answers) with sentiment and history, which helps explain anomalies alongside GSC trends. Disclosure: Geneo is our product.

Vendor adaptations worth noting (and what they imply)

- AccuRanker: After initial depth constraints, the vendor communicated a path toward daily Top ~30 with scheduled deeper pulls and backfilling. See AccuRanker’s depth update announcement. Implication: Expect many tools to fix daily Top 10/20/30 first, then provide configurable deep crawls on a cadence.

- Semrush: Public communications acknowledged significant operational impact from the 10x request inflation, while maintaining core Top 10/20 stability and implementing mitigations. See Semrush’s 2025 notice on SERP data collection changes. Implication: Don’t assume parity in depth across tools during this transition; verify your tool’s current depth policy.

What this means for you: Tool choice is less about who claims “Top 100 every day” and more about transparent depth policies, failure handling, and cost‑aware scheduling. Ask vendors for their current daily vs. weekly depth guarantees and how they annotate methodological breaks in your historical data.

Dashboards that survive methodology shocks

Replace fragile headline metrics:

- From “Total Impressions” to a bundle of resilient indicators:

  - Clicks by device (Web)

  - CTR for priority queries/pages

  - Share of impressions in Top 10 positions

  - Rank distribution buckets (Top 3 / Top 10 / 11–30 / >30)
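Once daily GSC rows are exported, these indicators reduce to a few aggregations. This sketch assumes rows shaped like Search Analytics API output (`clicks`, `impressions`, `position` per query row) and treats the buckets as non-overlapping, 1–3, 4–10, 11–30, and above 30; reading "Top 10" as positions 4–10 in the distribution is an assumption. Since GSC `position` is an average per row, bucketing by it approximates rather than counts per-impression rank. `dashboard_metrics` is a hypothetical name.

```python
from collections import Counter
from typing import Iterable, Mapping

BUCKETS = (("top_3", 3.0), ("4_10", 10.0), ("11_30", 30.0))  # inclusive upper bounds
BUCKET_ORDER = ("top_3", "4_10", "11_30", "over_30")


def bucket(position: float) -> str:
    """Map an average position to its rank-distribution bucket."""
    for name, upper in BUCKETS:
        if position <= upper:
            return name
    return "over_30"


def dashboard_metrics(rows: Iterable[Mapping[str, float]]) -> dict[str, object]:
    """Clicks, CTR, Top 10 impression share, and query counts per rank bucket."""
    clicks = impressions = top10_impressions = 0.0
    distribution: Counter[str] = Counter()
    for row in rows:
        clicks += row["clicks"]
        impressions += row["impressions"]
        if row["position"] <= 10:
            top10_impressions += row["impressions"]
        distribution[bucket(row["position"])] += 1  # one count per query row
    return {
        "clicks": clicks,
        "ctr": clicks / impressions if impressions else 0.0,
        "top10_impression_share": top10_impressions / impressions if impressions else 0.0,
        "rank_distribution": {name: distribution[name] for name in BUCKET_ORDER},
    }
```

Top 10 impression share is less sensitive to the loss of deep-position impressions than raw totals, which is what makes it a steadier headline metric across the September 2025 break.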

Add auditability:

- A visible annotation at September 2025 with a hover tooltip linking to a short note summarizing the change (and your policy).

- A dimensions toggle (device, geo, search type) so stakeholders can see consistency where it exists.

GSC API: practical pull patterns that reduce gaps

- Pull by country and device separately to increase the number of “top rows” returned per partition before hitting caps.

- Rotate through query‑ and page‑dimension groupings on alternate days to build a fuller picture without exceeding quotas.

- Keep a small “QA panel” of 50–100 Tier 1 queries for which you manually verify consistency between the UI and API totals weekly.

- Refer to Google Developers’ Search Console API usage limits when estimating your daily run plan, and keep your code close to the Search Analytics API query spec to avoid preventable request errors.
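The partitioning and rotation patterns can be generated rather than hand-written. This sketch builds one Search Analytics request body per country and device using `dimensionFilterGroups`, and alternates query-level and page-level groupings on successive days. The country list and the odd/even rotation are hypothetical choices for illustration; the filter fields (`dimension`, `operator`, `expression`) follow the query spec, where country filters use lowercase ISO 3166-1 alpha-3 codes and device values are `DESKTOP`, `MOBILE`, and `TABLET`. Each body is sent to the `searchanalytics.query` method as usual, and the total number of bodies should be checked against your usage limits.

```python
from datetime import date
from itertools import product
from typing import Any

COUNTRIES = ("usa", "gbr", "deu")  # hypothetical markets, ISO 3166-1 alpha-3
DEVICES = ("DESKTOP", "MOBILE", "TABLET")


def grouping_for(day: date) -> list[str]:
    """Alternate query-level and page-level pulls on successive days."""
    if day.toordinal() % 2 == 0:
        return ["date", "query"]
    return ["date", "page"]


def partitioned_bodies(day: date, row_limit: int = 25_000) -> list[dict[str, Any]]:
    """One request body per (country, device) partition for a single day."""
    iso = day.isoformat()
    bodies = []
    for country, device in product(COUNTRIES, DEVICES):
        bodies.append({
            "startDate": iso,
            "endDate": iso,
            "dimensions": grouping_for(day),
            "type": "web",
            "rowLimit": row_limit,
            "dimensionFilterGroups": [{
                "groupType": "and",
                "filters": [
                    {"dimension": "country", "operator": "equals", "expression": country},
                    {"dimension": "device", "operator": "equals", "expression": device},
                ],
            }],
        })
    return bodies
```

With three countries and three devices this yields nine requests per day (before any `startRow` paging), which is easy to budget against the per-project limits.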

Future‑proofing beyond 2025

- Multi‑surface visibility: Include AI Overviews/AI Mode signals, Discover, and News where relevant. Remember that AI Mode rolls into Search Console totals without separate segmentation in standard reports (as observed in 2025 industry coverage).

- Diversify engines and verticals: Where your audience is active, track Bing, YouTube, Amazon, and vertical search. Apply similar tiered depth policies to contain cost.

- Policy monitoring muscle: Subscribe your team to Google Search Central updates and industry outlets; keep a “policy change playbook” for KPI freezes and dashboard annotations so you can execute within hours—not weeks—when the next change lands.

- Build AI‑surface literacy: If your brand competes in AI answers, establish an audit cadence for prompts that matter and track brand mentions and sentiment over time. For practical context on AI brand monitoring workflows, see the Profound review 2025 and Geneo alternative, as well as our Profound vs Brandlight comparison.

For broader reading on AI search visibility and adaptation patterns, the Geneo Blog curates practitioner guides and change‑monitoring tactics.

What we still don’t know (transparency keeps trust)

- Separate AI Mode segmentation in GSC: Standard reports don’t break out AI Mode impressions/clicks. Although 2025 communications indicate this data is included in totals, practitioners still lack first‑party segmentation in the UI/API for routine analysis.

- Vendor depth trajectories: Many tools are still iterating. Daily Top 30 is becoming common; weekly Top 100 coverage varies. Always confirm current capabilities before committing to SLAs.

- Long‑tail comparability: Because fewer deep‑page impressions are now recorded, long‑tail impression trends before and after September 2025 are not apples‑to‑apples. Treat year‑over‑year comparisons that straddle September 2025 with caution and annotations.

Common pitfalls to avoid

- Over‑indexing on impressions: Reporting an impressions drop as “performance decline” without corroborating click/CTR trends leads to bad decisions. Validate with clicks and conversion data.

- Chasing daily Top 100 for everything: You will spend a lot and learn a little. Depth policies and sampling deliver better ROI.

- Ignoring data lineage: Without annotated breaks and run parameters, stakeholder trust erodes quickly when numbers shift.

Summary: Pragmatic rules that travel well

- Track what matters daily (Tier 1, Top 30), sample the rest.

- Make GSC the backbone for outcome data; annotate September 2025 as a hard break.

- Rebuild dashboards around clicks, CTR, Top‑10 impression share, and rank distribution.

- Demand vendor transparency on depth policies and failure handling.

- Train stakeholders on what changed, why it matters, and how you’re mitigating.

Sources and confirmations

- Google’s stance on results‑per‑page: Search Engine Land confirmation that the parameter is not supported (Sep 18, 2025)

- Rank tracking disruption context: Search Engine Land’s report on tracking instability (Sep 15, 2025)

- Quantified impact dataset: Search Engine Land’s analysis of 319 properties, 87.7% of which saw impressions decline (Sep 18, 2025)

- Impression/measurement context: Google Developers’ guide to using Search Console with GA

- AI Mode in totals: SERoundtable’s 2025 note that AI Mode counts in GSC totals

- API implementation and limits: Search Analytics API query spec and Search Console API usage limits

Next steps: If you want a lightweight way to monitor AI surface visibility alongside your GSC metrics, consider trying Geneo for a unified view of brand mentions and sentiment across AI answers. Disclosure: Geneo is our product.