The Beginner’s Roadmap to GEO: Your Guide to Generative Engine Optimization

Start with GEO step by step. This beginner guide explains Generative Engine Optimization, how it differs from SEO, and practical workflows for tracking AI citations.

When someone asks an AI for advice, you want your brand to be the source it trusts and cites. That’s what GEO—Generative Engine Optimization—helps you do.

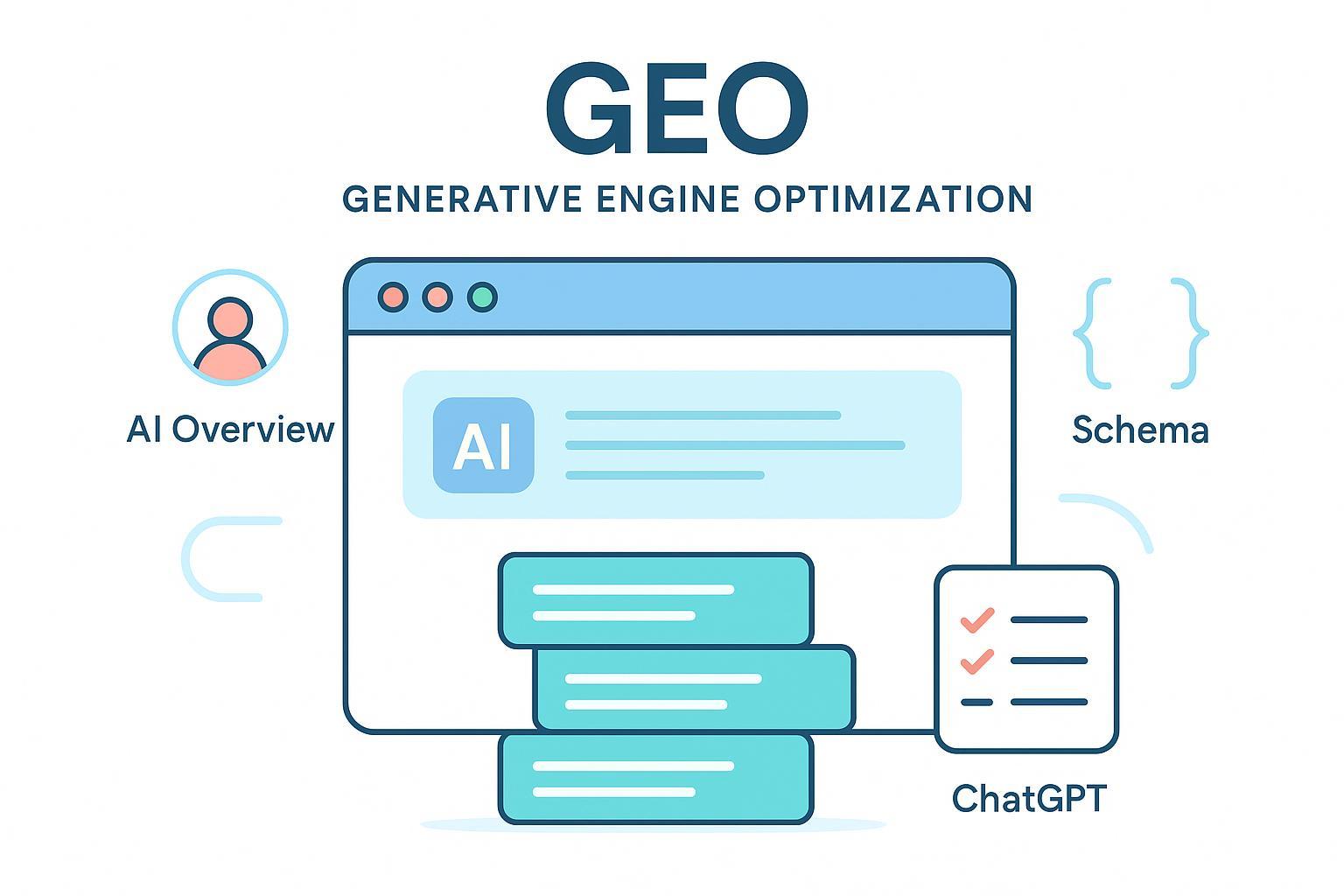

GEO, in plain English

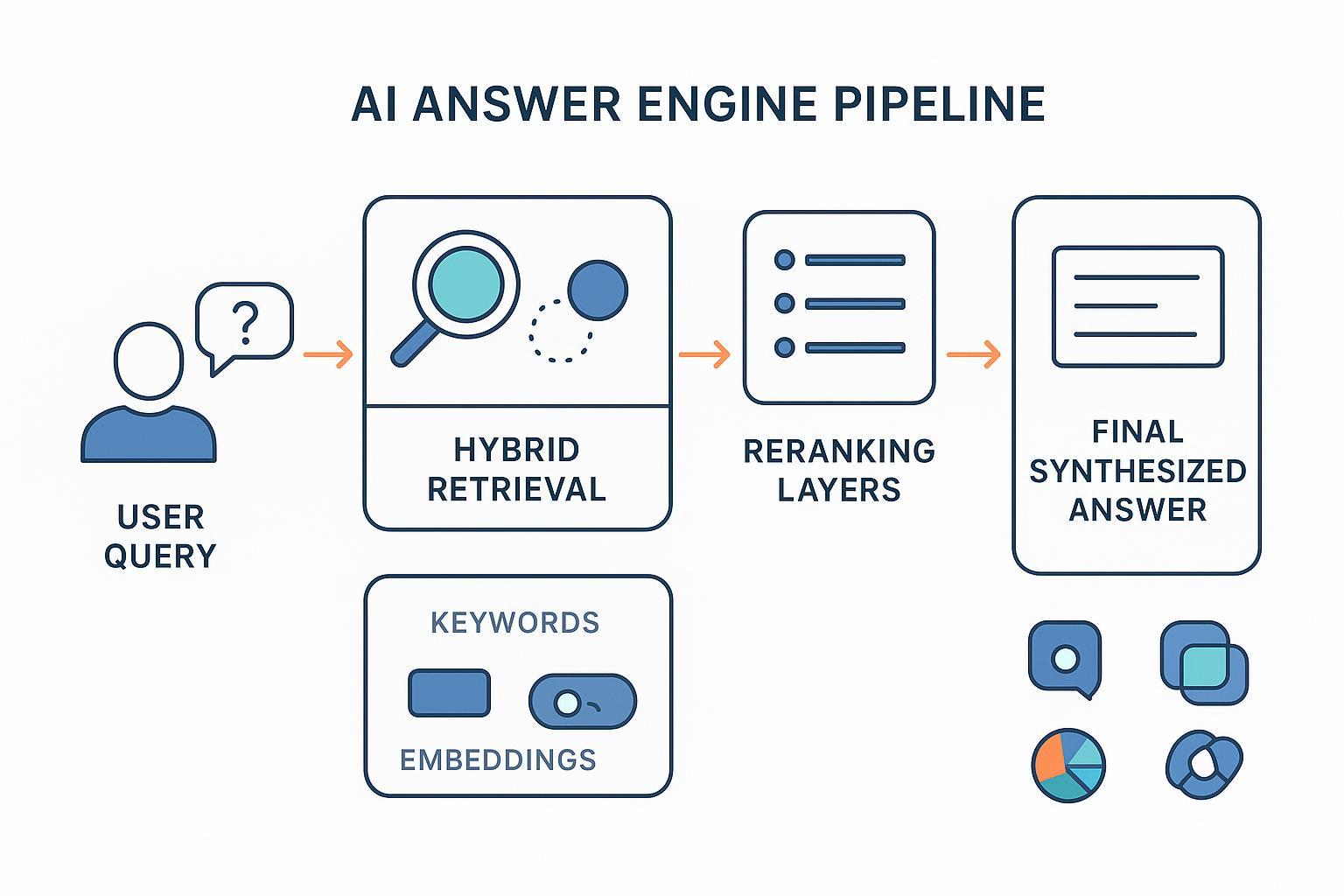

GEO is the practice of making your content easy for AI answer engines to find, understand, and cite. Think of it like preparing your best notes before an open-book test: you still need quality information, but the notes must be clear and organized so the “grader” (the AI) can pull the right pieces quickly.

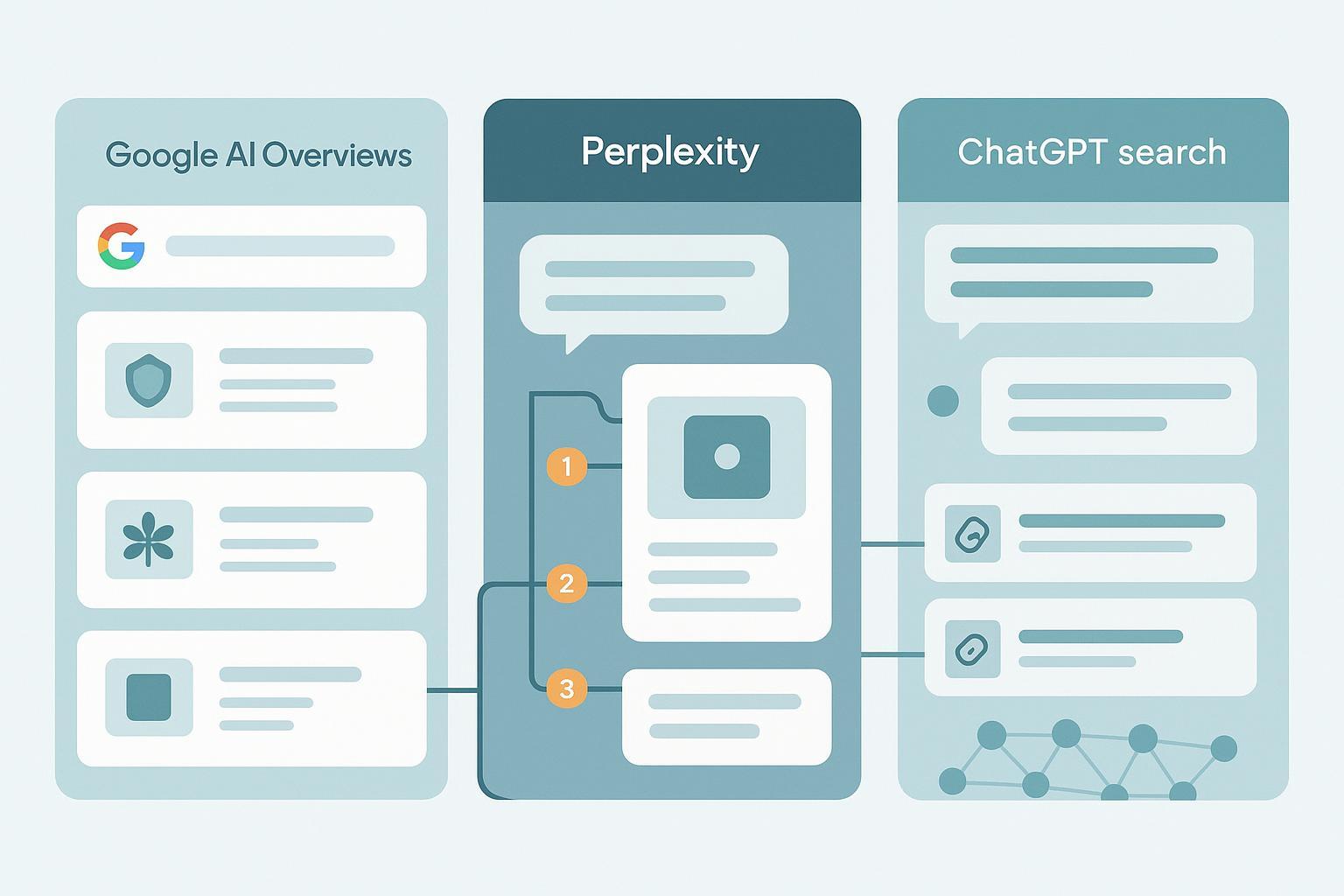

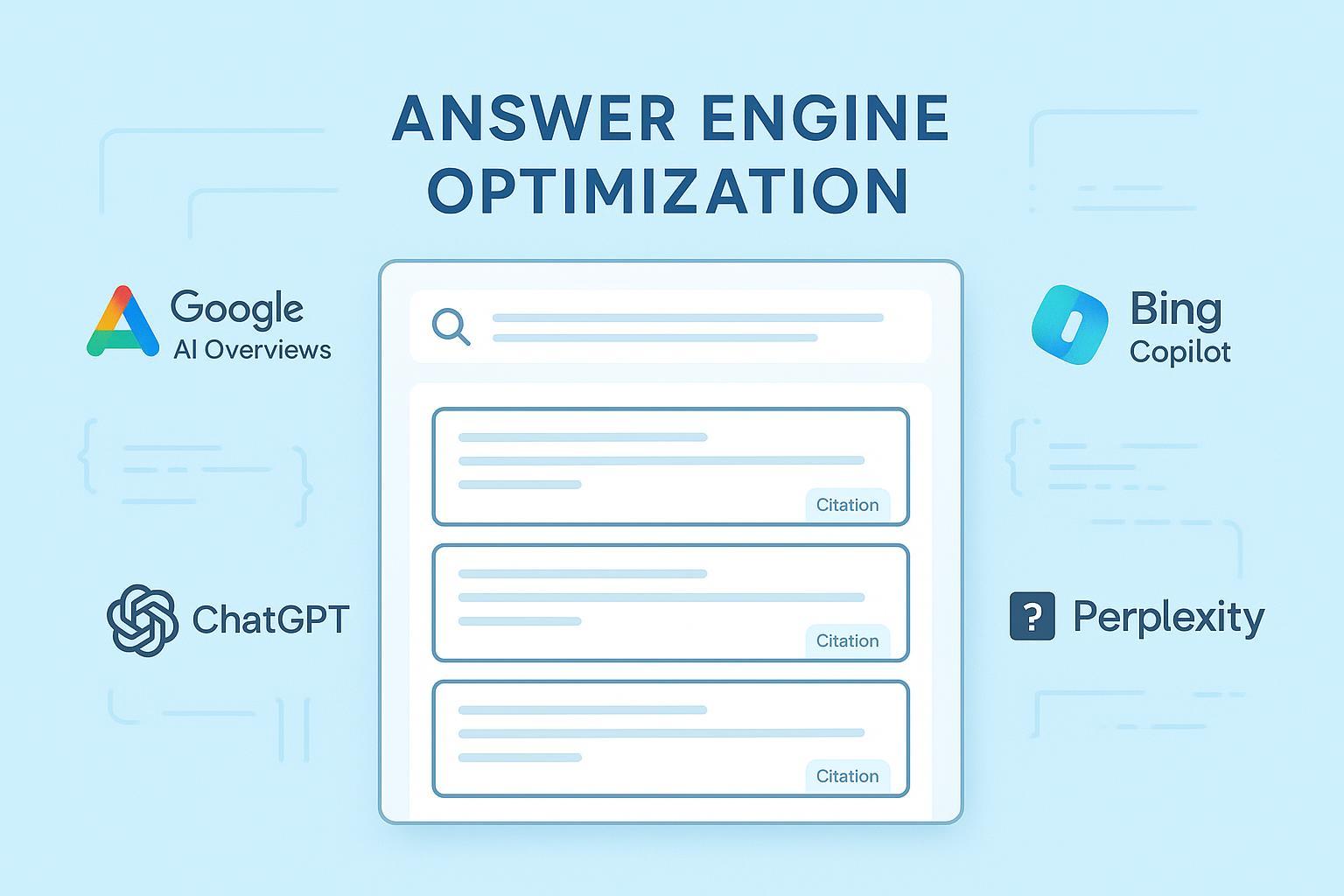

How does GEO relate to SEO and AEO? SEO remains the foundation: fast pages, crawlable content, useful answers, and reputable links. AEO (Answer Engine Optimization) focuses on winning direct answers in traditional SERPs and voice assistants. GEO extends these ideas to LLM-driven summaries across engines like Google AI Overviews, Perplexity, and ChatGPT.

According to Search Engine Land’s explainer, GEO means “optimizing your website’s content to boost its visibility in AI-driven search engines,” emphasizing clarity, structure, and authority for inclusion in generated answers. See the overview in What is generative engine optimization (GEO)? by Search Engine Land (2024).

- Search Engine Land — What is generative engine optimization (GEO)?

If “AI visibility” is new to you, this plain-language primer can help: What Is AI Visibility? Brand Exposure in AI Search Explained.

| Concept | What it optimizes for | Where it shows up | What success looks like |
|---|---|---|---|
| SEO | Traditional search engines | SERP listings, snippets | Rankings, clicks, conversions |
| AEO | Direct answers in search/voice | Featured snippets, voice replies | Being the selected answer |
| GEO | AI-generated summaries | AI Overviews, Perplexity answers, ChatGPT responses | Being cited or described accurately |

How AI answer engines behave (2025 snapshot)

- Google AI Overviews appear for a meaningful slice of queries, though estimates vary. In March 2025, Semrush observed 13.14% of U.S. desktop searches triggering AI Overviews (reported by Search Engine Land), while other datasets later saw higher ranges. See Search Engine Land’s coverage of the Semrush study (2025).

- When an AI summary appears, people may click fewer links. Pew Research (2025) found that users were less likely to click a link on searches that showed an AI summary than on searches that did not. Treat percentages as study-specific.

- Perplexity is transparent about citations and surfaces sources prominently. Its documentation explains how it searches and attributes sources, making provenance a core user experience.

- ChatGPT/Copilot/Gemini show variable citation visibility. Users can prompt for sources, but links aren’t always visible by default.

External references for this section:

- Search Engine Land — Google AI Overviews prevalence from the Semrush study

- Pew Research — Users click fewer links when an AI summary appears (2025)

- Perplexity — Search Guide (official docs)

Behaviors evolve; re-check these patterns against your own keyword set regularly.

The fundamentals you can control

- Entities and schema. Use Organization and Person schema to clarify who you are; add Article, FAQPage, and HowTo where they genuinely fit. Keep names consistent (brand, product lines), maintain an About page, and link to authoritative profiles (e.g., Wikipedia/Wikidata if applicable). Validate markup and keep essential content in HTML. See Google’s Organization structured data documentation for canonical guidance: Google Search Central — Organization structured data.

- E-E-A-T in practice. Show real experience (first-hand examples, case studies), list author bylines with bios, cite reputable sources, and keep content fresh. None of this is new, but it matters more when an AI decides which sources feel trustworthy enough to summarize.
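As a rough illustration of the Organization markup mentioned above, the sketch below builds a JSON-LD snippet in Python. The brand name, URLs, and `sameAs` profile are placeholders, and `build_organization_jsonld` is a hypothetical helper rather than part of any library. Check the properties against Google's Organization documentation before publishing.

```python
import json


def build_organization_jsonld(name, url, logo_url, same_as):
    """Return a <script> tag holding schema.org Organization JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,              # keep this identical to the brand name used elsewhere
        "url": url,
        "logo": logo_url,
        "sameAs": list(same_as),   # authoritative profiles for the same entity
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder values for illustration only.
snippet = build_organization_jsonld(
    name="Example Brand",
    url="https://www.example.com",
    logo_url="https://www.example.com/logo.png",
    same_as=["https://www.linkedin.com/company/example-brand"],
)
print(snippet)
```

Generating the markup from one function is only one option; its main benefit is that the brand name and profile links stay consistent across templates.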

Your first 30–60–90 days

Start small. Pick a focused topic or product line and build confidence with a manageable set of prompts.

30 days: foundation

- Add an “answer-first” block (40–80 words) at the top of core pages that addresses the main question in plain English.

- Implement Organization, Person, and Article schema; ensure your About and Author pages clearly state who you are and why you’re credible.

- Set up a simple monitoring log (spreadsheet): date, prompt, engine, presence (Y/N), cited URL(s), sentiment notes, and fixes to try.
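If you would rather keep the monitoring log as a CSV file than a hand-edited spreadsheet, a minimal sketch could look like the following. The column names mirror the fields listed above, but their spellings, the `make_row` and `write_log` helpers, and the sample rows are all assumptions made for illustration.

```python
import csv
import io

# Column names mirror the log fields described above; the spellings are our own.
LOG_FIELDS = ["date", "prompt", "engine", "present", "cited_urls", "sentiment", "fixes_to_try"]


def make_row(day, prompt, engine, present, cited_urls=(), sentiment="", fixes=""):
    """Build one log row as a dict keyed by LOG_FIELDS."""
    return {
        "date": day,
        "prompt": prompt,
        "engine": engine,
        "present": "Y" if present else "N",
        "cited_urls": "; ".join(cited_urls),
        "sentiment": sentiment,
        "fixes_to_try": fixes,
    }


def write_log(rows, handle):
    """Write a header plus all rows to an open text handle."""
    writer = csv.DictWriter(handle, fieldnames=LOG_FIELDS)
    writer.writeheader()
    writer.writerows(rows)


# Made-up observations for illustration only.
rows = [
    make_row("2025-01-15", "Who are alternatives to Example Brand? Provide citations.",
             "Perplexity", False, ["https://competitor.example/alternatives"],
             fixes="Add comparison page"),
    make_row("2025-01-15", "Summarize Example Brand and its key offerings. Cite your sources.",
             "ChatGPT", True, ["https://www.example.com/about"], sentiment="neutral"),
]

buffer = io.StringIO()  # swap for open("geo_log.csv", "w", newline="") to write a real file
write_log(rows, buffer)
print(buffer.getvalue())
```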

Copy-paste monitoring prompts (try 10–20 to start):

- “Summarize [brand] and its key offerings. Cite your sources.”

- “What are the best tools for [problem X]? Include sources.”

- “Who are alternatives to [brand]? Provide citations.”

- “What do AI results say about [brand] vs [competitor]? Show sources.”
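To reach 10–20 prompts without typing each one, you could expand the templates above programmatically. This is a small sketch: the `{brand}`, `{problem}`, and `{competitor}` placeholders stand in for the bracketed slots, and the brand, problems, and competitors passed in are invented examples.

```python
# The four prompt templates from the list above, with named placeholders.
TEMPLATES = [
    "Summarize {brand} and its key offerings. Cite your sources.",
    "What are the best tools for {problem}? Include sources.",
    "Who are alternatives to {brand}? Provide citations.",
    "What do AI results say about {brand} vs {competitor}? Show sources.",
]


def build_prompts(brand, problems, competitors):
    """Expand each template once per relevant problem or competitor."""
    prompts = []
    for template in TEMPLATES:
        if "{problem}" in template:
            prompts += [template.format(problem=p) for p in problems]
        elif "{competitor}" in template:
            prompts += [template.format(brand=brand, competitor=c) for c in competitors]
        else:
            prompts.append(template.format(brand=brand))
    return prompts


# Hypothetical inputs for illustration.
prompts = build_prompts(
    brand="Example Brand",
    problems=["invoice tracking", "expense reporting"],
    competitors=["Rival One", "Rival Two"],
)
for p in prompts:
    print(p)
```

Keeping the wording fixed between test rounds matters more than the generation method, since a reworded prompt can change the answer on its own.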

60 days: expansion

- Expand to 50–100 prompts. Build content clusters: related explainers, how-tos, and FAQs that cover the topic’s common questions.

- Add internal links that connect related pieces logically. Strengthen your own pages on topics where engines currently cite competitors (e.g., add more steps, clearer headings, and referenced data).

90 days: optimization

- Troubleshoot gaps (see the section below). Add primary data, quotes, and references where your coverage is thin.

- Create lightweight SOPs: a publishing checklist (answer-first, schema validated, sources cited) and a monthly monitoring cadence.
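The publishing checklist can be partly automated. The sketch below checks the 40–80 word answer-first range suggested earlier and records the other two items as values you supply yourself. It assumes the answer-first block is the first paragraph of the page text and that schema validation happens in an external tool; `publishing_checklist` is a hypothetical helper.

```python
def publishing_checklist(page_text, schema_validated, sources_cited,
                         min_words=40, max_words=80):
    """Return pass/fail flags for the three checklist items.

    Assumes the answer-first block is the first paragraph (text before
    the first blank line). `schema_validated` is a flag you set after
    validating markup elsewhere; `sources_cited` is a manual count.
    """
    first_paragraph = page_text.strip().split("\n\n", 1)[0]
    word_count = len(first_paragraph.split())
    return {
        "answer_first": min_words <= word_count <= max_words,
        "schema_validated": bool(schema_validated),
        "sources_cited": sources_cited >= 1,
    }


# A synthetic page whose opening paragraph has exactly 50 words.
intro = " ".join(["word"] * 50)
page = intro + "\n\nThe rest of the page goes here."
result = publishing_checklist(page, schema_validated=True, sources_cited=2)
print(result)
```

Counting whitespace-separated words is crude, but it is enough to flag intros that are far too short or too long.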

Metrics to track (keep it simple at first):

- Presence rate: % of tested prompts where your brand appears in AI answers.

- Citation share of voice: the proportion of citations referencing your domain vs. others across your prompt set.

- Sentiment trend: distribution of positive/neutral/negative tone when your brand is mentioned.

- Update velocity: the lag from a content change to seeing it reflected in AI outputs.
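One way to compute these four metrics from a monitoring log is sketched below. The row layout (`present`, `cited_urls`, `sentiment`), the function names, and the sample data are assumptions for illustration, and the definitions follow the descriptions above rather than any industry standard.

```python
from collections import Counter
from datetime import date
from urllib.parse import urlparse


def presence_rate(rows):
    """Share of tested prompts where the brand appears in the answer."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r["present"]) / len(rows)


def citation_share_of_voice(rows, domain):
    """Share of all cited URLs that point at `domain` or its subdomains."""
    hosts = [urlparse(u).hostname or "" for r in rows for u in r["cited_urls"]]
    if not hosts:
        return 0.0
    ours = sum(1 for h in hosts if h == domain or h.endswith("." + domain))
    return ours / len(hosts)


def sentiment_distribution(rows):
    """Count sentiment labels across rows where the brand appears."""
    return Counter(r["sentiment"] for r in rows if r["present"])


def update_velocity_days(changed_on, first_seen_on):
    """Days between a content change and first seeing it in an AI answer."""
    return (first_seen_on - changed_on).days


# Made-up log rows for illustration.
rows = [
    {"present": True, "sentiment": "positive",
     "cited_urls": ["https://www.example.com/guide", "https://other.test/review"]},
    {"present": False, "sentiment": "", "cited_urls": ["https://other.test/list"]},
    {"present": True, "sentiment": "neutral", "cited_urls": ["https://example.com/pricing"]},
    {"present": False, "sentiment": "", "cited_urls": []},
]

print(presence_rate(rows))
print(citation_share_of_voice(rows, "example.com"))
print(sentiment_distribution(rows))
print(update_velocity_days(date(2025, 3, 1), date(2025, 3, 15)))
```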

A small, reproducible monitoring example

Disclosure: Geneo is our product.

Here’s a basic workflow any team can replicate. Pick five representative prompts (e.g., “best [category] tools,” “[brand] vs [competitor],” “what is [topic]”). Test them in Perplexity, Google AI Overviews, and ChatGPT. Log the date, exact prompt, whether your brand appears, which URL is cited, and a quick sentiment note. If an engine omits your best page, compare your structure with cited competitors: Do they lead with a concise answer? Do they reference independent sources? Are their headings scannable?

Update your page: add a short answer-first intro, tighten headings, and cite 1–2 authoritative references. Re-test the same prompts two weeks later and note changes. If you prefer a tool to centralize logs and track AI citations across engines, you can explore Geneo’s capabilities here: Geneo features overview. The goal isn’t magic—just repeatable observation and small, steady improvements.
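To make the two-week re-test easy to read, you could compare the two runs directly. The sketch below assumes each run is stored as a dictionary keyed by `(prompt, engine)` with a boolean for whether your brand appeared. That layout, the `diff_runs` helper, and the sample prompts are illustrative choices, not a prescribed format.

```python
def diff_runs(before, after):
    """Compare two runs keyed by (prompt, engine) -> brand present (bool).

    Only prompt/engine pairs found in both runs are compared.
    """
    changes = {"gained": [], "lost": [], "unchanged": []}
    for key in sorted(before.keys() & after.keys()):
        if after[key] and not before[key]:
            changes["gained"].append(key)
        elif before[key] and not after[key]:
            changes["lost"].append(key)
        else:
            changes["unchanged"].append(key)
    return changes


# Made-up results from two test rounds, two weeks apart.
before = {
    ("best example tools", "Perplexity"): False,
    ("best example tools", "ChatGPT"): True,
    ("what is example topic", "Perplexity"): True,
}
after = {
    ("best example tools", "Perplexity"): True,
    ("best example tools", "ChatGPT"): True,
    ("what is example topic", "Perplexity"): False,
}

changes = diff_runs(before, after)
for label, keys in changes.items():
    print(label, keys)
```

A single re-test is a weak signal because AI answers vary from run to run, so treat "gained" and "lost" as prompts to look closer rather than as proof that an edit worked.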

Troubleshooting when you don’t show up

- Technical hygiene: validate schema; make sure key pages are indexable; avoid content hidden behind heavy scripts; ensure important text is in HTML.

- Entity clarity: unify brand/product names everywhere; add disambiguation on your About page; link to verified profiles (e.g., Wikipedia/Wikidata/Crunchbase if appropriate).

- Evidence and structure: add primary data, quotes, and neutral citations. Use clear headings and a brief answer-first section.

- Monitoring cadence: re-test your prompt set monthly. Note which engines improve and where you still need work. For broader shifts in SERPs and AI Overviews, this context helps: Google Algorithm Update October 2025.
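Some of the technical hygiene checks above can be approximated with Python's standard-library HTML parser. The sketch below takes raw HTML as a string and reports three things: whether a robots meta tag contains `noindex`, whether any JSON-LD block is present, and whether a key phrase appears in the HTML text outside scripts. `HygieneParser` and `check_page` are hypothetical names. The check does not cover robots.txt, HTTP headers, or schema validity, so treat it as a first pass only.

```python
from html.parser import HTMLParser


class HygieneParser(HTMLParser):
    """Collect text outside <script>/<style>, robots meta, and JSON-LD count."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.jsonld_blocks = 0
        self.text_parts = []
        self._skip = False  # True while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            if "noindex" in (attr.get("content") or "").lower():
                self.noindex = True
        if tag in ("script", "style"):
            self._skip = True
            if (attr.get("type") or "").lower() == "application/ld+json":
                self.jsonld_blocks += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._skip = False

    def handle_data(self, data):
        if not self._skip:
            self.text_parts.append(data)


def check_page(html, key_phrase):
    """Run the three first-pass checks on a raw HTML string."""
    parser = HygieneParser()
    parser.feed(html)
    parser.close()
    visible = " ".join(" ".join(parser.text_parts).split())
    return {
        "indexable": not parser.noindex,
        "has_jsonld": parser.jsonld_blocks > 0,
        "phrase_in_html": key_phrase.lower() in visible.lower(),
    }


# A tiny synthetic page for illustration.
SAMPLE = """<html><head>
<meta name="robots" content="index, follow">
<script type="application/ld+json">{"@type": "Organization"}</script>
</head><body><h1>What is GEO?</h1>
<p>GEO is the practice of making content easy for AI answer engines to cite.</p>
<script>document.title = "hidden phrase";</script>
</body></html>"""

report = check_page(SAMPLE, "easy for AI answer engines")
print(report)
```

Run it against the HTML your server returns before any JavaScript executes; if a key sentence only shows up after rendering, `phrase_in_html` will be false.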

Keep learning

Two solid places to deepen your practice without the fluff: Conductor Academy’s GEO overview for fundamentals and Search Engine Land’s ongoing reporting on AI Overviews and search behavior. Behaviors change, so keep testing your own prompts and logging results.

- Conductor Academy — What is Generative Engine Optimization (GEO)?

- Search Engine Land — Google AI Overviews prevalence from the Semrush study

Wrap-up

GEO helps your brand get recognized inside AI answers by prioritizing clarity, structure, and trustworthy evidence. Start with a small prompt set, ship answer-first pages with clean schema, and monitor citations over time.

Prefer not to track everything by hand? Consider a lightweight monitoring tool to centralize prompts, citations, and sentiment so you can focus on content improvements. That way, you can keep iterating where it counts.