What Is Answer Engine Optimization (AEO)? Definition & Guide

Learn what Answer Engine Optimization (AEO) means, how it differs from SEO, core practices to rank in AI answer engines, and ways to measure your brand’s results.

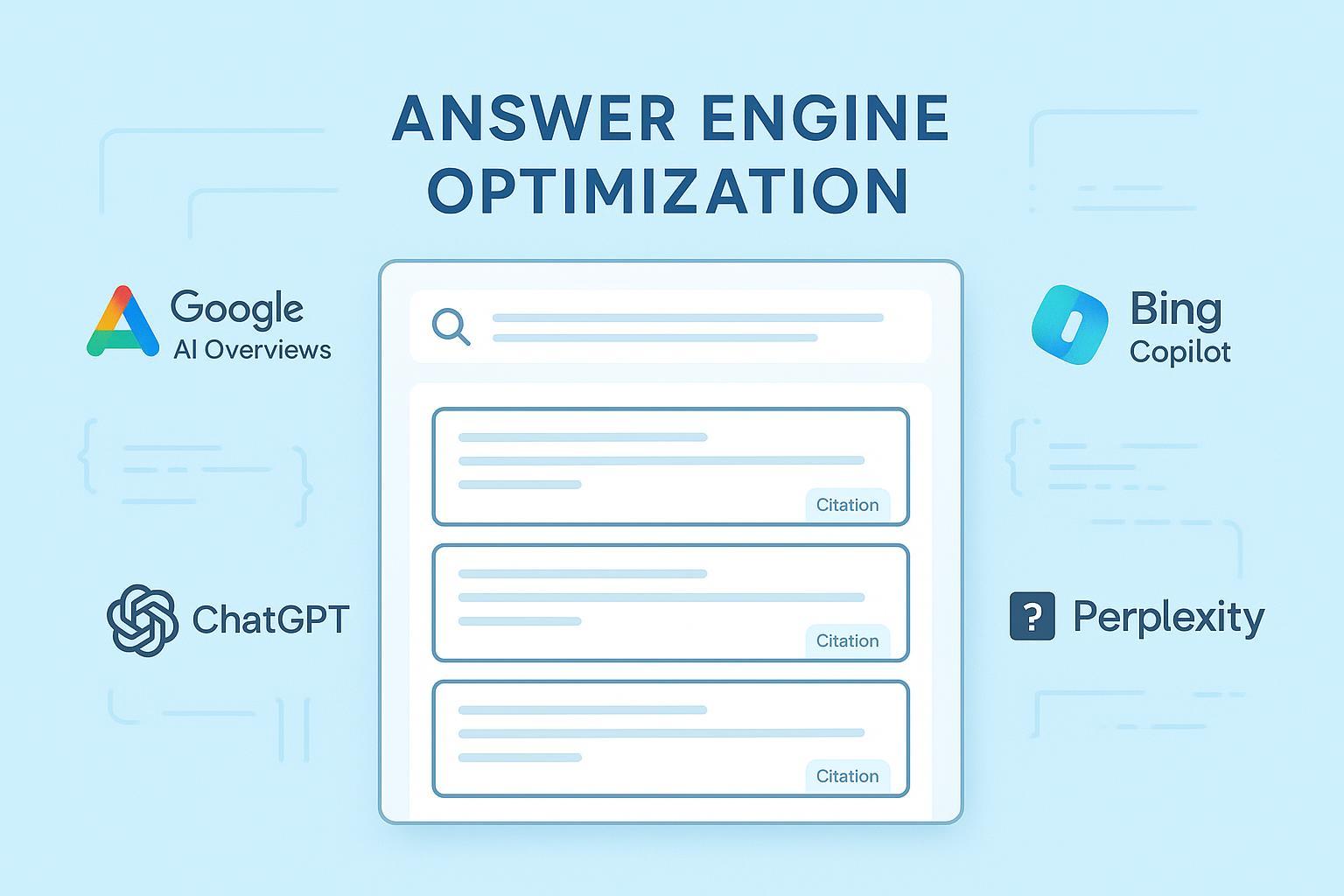

If an AI answers the question directly on the screen, how does your brand still earn credit—and clicks? That’s the core of Answer Engine Optimization (AEO): making your content understandable, citable, and worthy of being selected as the answer across AI‑powered surfaces.

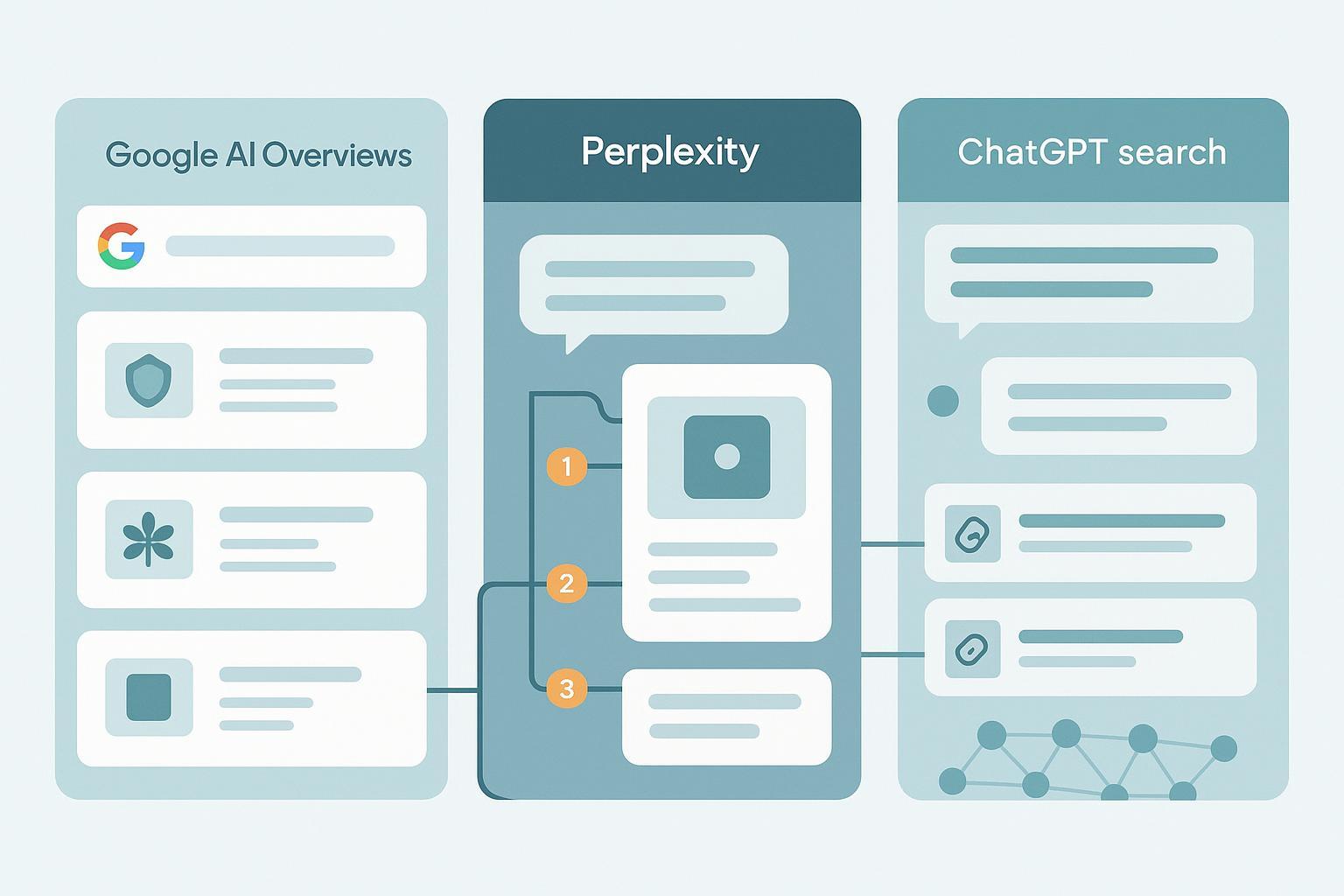

AEO emerged because Google AI Overviews, Bing Copilot, ChatGPT with browsing, and Perplexity synthesize responses and attribute sources in the interface. Your job isn’t just to “rank” a page; it’s to be chosen and cited as the answer.

A clear definition of AEO

Answer Engine Optimization (AEO) is the practice of structuring and improving content so AI‑powered answer engines can understand it, select it, cite it, and present it as a direct answer to user queries. In practice, that means question‑led headings, concise answer blocks, transparent sourcing, and structured data that mirrors what’s visible on the page.

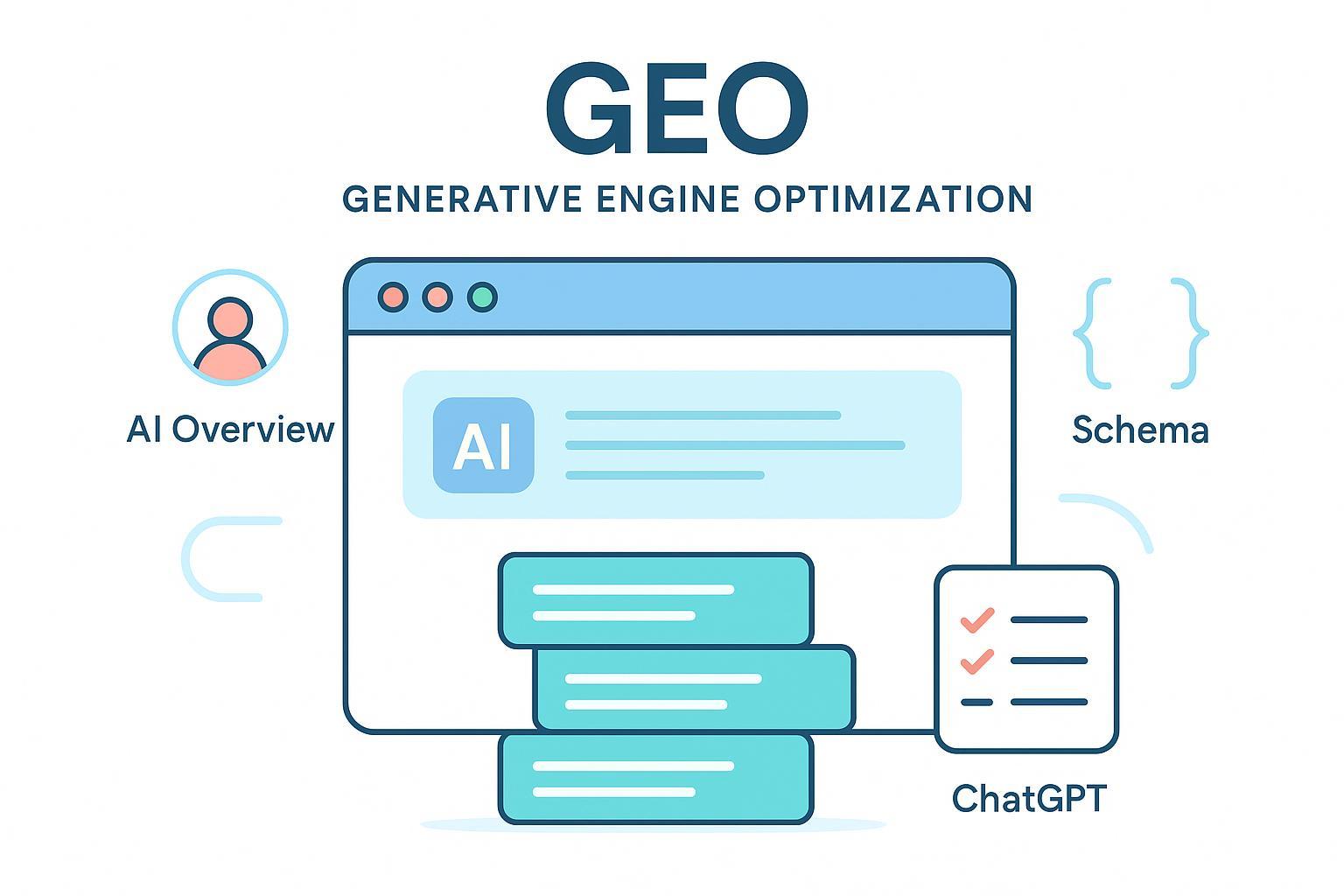

AEO sits alongside classic SEO and overlaps with Generative Engine Optimization (GEO). If SEO is about earning positions and traffic, AEO is about earning inclusion and citations within answer interfaces. GEO often zooms out to entity clarity, authorship, and evidence—useful inputs that reinforce AEO. For measurement framing, see our explainer on AI visibility.

| Dimension | SEO | AEO | GEO |
|---|---|---|---|
| Primary goal | Earn rankings and clicks | Earn selection and citations in answer surfaces | Improve how generative systems interpret and represent your brand |
| Primary surfaces | Organic blue links, rich results | AI Overviews, featured snippets, conversational answers | Generative summaries, assistants, multi‑turn dialogues |
| Content patterns | Comprehensive pages, topical depth | Question‑led H2/H3s, 30–60‑word answer blocks, scannable lists/tables | Clear entities, author bios, transparent evidence, provenance |
| Measurement focus | Rankings, CTR, sessions, conversions | Citations/mentions, share‑of‑answer, AI‑referred traffic | Representation accuracy, sentiment, inclusion across models |

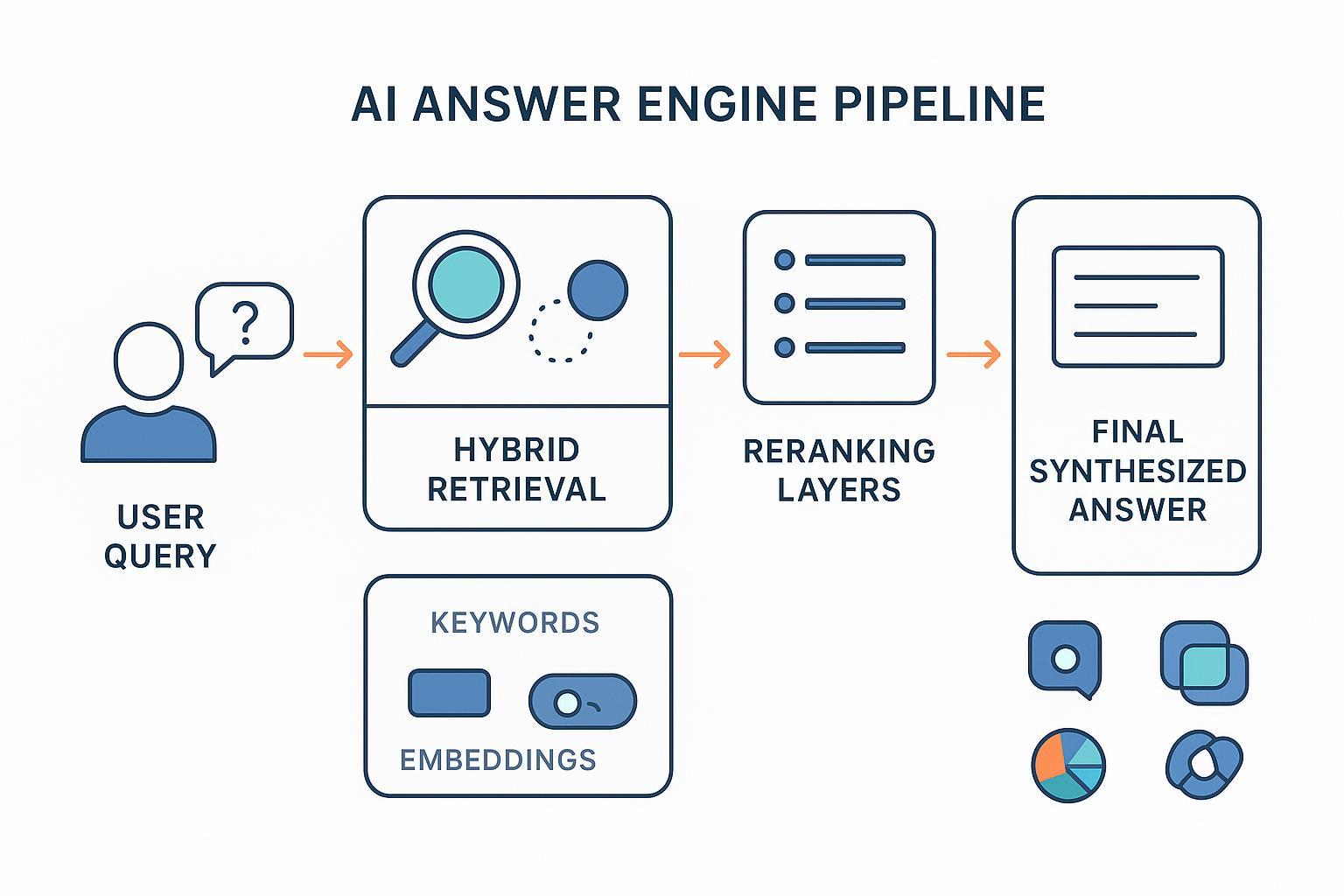

How answer engines choose and cite sources

The mechanics vary by platform, but one thread is common to all of them: they favor content that is clear, credible, and well structured, and that is backed by transparent sources.

Google AI Overviews

Google states that AI features summarize and show links to supporting websites. There’s no special markup that guarantees inclusion; helpful, indexable content and structured data help Google understand a page but don’t make citation certain. See Google’s overview in AI features and your website (Search Central, 2025).

Bing Copilot

When Copilot uses the web, users can select a Sources button to see the Bing query and the sites used. Copilot grounds answers in search results and traces them back to sources. Details in Understanding web search in Microsoft Copilot Chat (Microsoft Support, 2025).

ChatGPT (with browsing)

In browsing contexts, ChatGPT includes inline citations that link to sources and can be previewed. See Introducing ChatGPT Search (OpenAI, 2024) for how source linking appears in practice.

Perplexity

Perplexity searches the web in real time and consistently presents numbered citations linking to sources. Read How does Perplexity work? (Perplexity Help, 2025).

On‑page patterns and structured data that help you get cited

AEO rewards clarity and extractability. Start with these patterns:

- Use question‑led H2/H3s (“What is…?”, “How to…?”) and place a 30–60‑word direct answer immediately after.

- Add scannable elements (short lists, compact tables) and explicit definitions readers can quote.

- Credit your claims with links to primary data or official docs; include author bios and credentials.

- Keep facts visible (don’t hide critical details only in schema). Ensure any schema reflects what’s on the page.

- Validate markup and watch for warnings/errors; keep your content fresh.
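
The 30–60‑word guideline in the first pattern is easy to check automatically. Here is a minimal Python sketch, assuming a simple whitespace-based word count; the function names and the default range are illustrative choices, not part of any standard tool.

```python
import re


def word_count(text: str) -> int:
    """Count whitespace-separated tokens that contain at least one letter or digit."""
    return sum(1 for token in text.split() if re.search(r"\w", token))


def answer_block_ok(text: str, low: int = 30, high: int = 60) -> bool:
    """Return True when the answer block falls inside the low..high word range."""
    return low <= word_count(text) <= high


# The definition used elsewhere in this guide, as a sample answer block.
definition = (
    "Answer Engine Optimization (AEO) is the practice of structuring and "
    "improving content so AI-powered answer engines can understand it, select "
    "it, cite it, and present it as a direct answer to user queries."
)

print(word_count(definition), answer_block_ok(definition))
```

A check like this fits naturally into a content review step: run it over every paragraph that directly follows a question heading and flag the ones outside the range.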

For a deeper tactical overview of AEO formats and the role of structured data, see CXL’s AEO guide for 2025.

A minimal FAQPage JSON‑LD example that mirrors visible Q&A content on the page:

    {
      "@context": "https://schema.org",
      "@type": "FAQPage",
      "mainEntity": [
        {
          "@type": "Question",
          "name": "What is Answer Engine Optimization (AEO)?",
          "acceptedAnswer": {
            "@type": "Answer",
            "text": "Answer Engine Optimization (AEO) is the practice of structuring and improving content so AI-powered answer engines can understand it, select it, cite it, and present it as a direct answer to user queries."
          }
        }
      ]
    }
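
One way to keep markup like this in sync with the page is to generate it from the same question-and-answer pairs that are rendered visibly. The Python sketch below assumes you already hold those pairs as plain strings; `build_faq_jsonld` is a hypothetical helper name, not a library function.

```python
import json


def build_faq_jsonld(qa_pairs: list[tuple[str, str]]) -> str:
    """Serialize visible (question, answer) pairs as a schema.org FAQPage document."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    # ensure_ascii=False keeps typographic characters readable in the output.
    return json.dumps(data, indent=2, ensure_ascii=False)


pairs = [
    (
        "What is Answer Engine Optimization (AEO)?",
        "Answer Engine Optimization (AEO) is the practice of structuring and "
        "improving content so AI-powered answer engines can understand it, "
        "select it, cite it, and present it as a direct answer to user queries.",
    ),
]
print(build_faq_jsonld(pairs))
```

Because the markup and the visible copy come from one source, an edit to the answer text updates both at once. The output still needs to pass a structured data validator before it ships.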

Platform nuances (so your content fits the surface)

Google AI Overviews: Favor concise, well‑structured answers supported by credible sources. Topical depth still matters across your cluster. If you see volatility, you’re not alone—coverage varies by query class and over time. For context, our note on Google AI Overviews changes 2025 explains how shifts can affect citation patterns.

Bing Copilot: Because Copilot exposes the underlying query and sources, match your titles, H1s, and meta descriptions to the exact question terms people use. Keep pages scannable and make the answer plain in the first 100–150 words.

ChatGPT with browsing: Make extractable answer blocks and cite primary sources on the page. The clearer your claims and references, the easier it is for the model to quote and link back accurately.

Perplexity: Precision wins. Short, quotable definitions, compact lists, and explicit source links increase the odds of being one of the numbered citations. Freshness and transparent evidence carry weight.

Measurement and analytics for AEO

What would it take for your page to be cited consistently? Treat AEO like an evidence pipeline: hypothesis → content pattern → multi‑engine test → citation log → iteration.

Weekly testing cadence

- Maintain a fixed query set per topic cluster (e.g., 25–50 questions). Test across AI Overviews, Bing Copilot, ChatGPT (browsing), and Perplexity once a week. Capture screenshots and log citation order, wording, and links.
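
A citation log can be as simple as one CSV row per query, engine, and test date. The sketch below is one possible layout in Python; the `CitationCheck` fields and the sample row are hypothetical and should be adapted to whatever you actually record.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date
from typing import Optional, TextIO


@dataclass
class CitationCheck:
    checked_on: date         # day the query was tested
    engine: str              # e.g. "perplexity", "copilot"
    query: str               # exact question text from the fixed query set
    cited: bool              # whether your page appeared among the sources
    position: Optional[int]  # citation order, or None when not cited
    snippet: str             # wording the engine used for your content
    url: str                 # the cited link


def write_log(checks: list[CitationCheck], out: TextIO) -> None:
    """Write the checks as CSV with a header row derived from the dataclass."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(CitationCheck)])
    writer.writeheader()
    for check in checks:
        writer.writerow(asdict(check))


# Hypothetical sample row, written to an in-memory buffer for illustration.
buffer = io.StringIO()
write_log(
    [CitationCheck(date(2025, 1, 6), "perplexity", "What is AEO?", True, 2,
                   "AEO is the practice of...", "https://example.com/aeo")],
    buffer,
)
print(buffer.getvalue())
```

Keeping the query text identical from week to week is what makes the rows comparable over time; screenshots can be stored alongside and referenced by file name in an extra column.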

GA4 configuration for AI answer referrals

- Go to Admin → Channel groups → Create new channel group.

- Add a channel named “AI Answer Engines.”

- Define conditions using Session source/medium or Referrer (regex works) to group visits that arrive from AI answer surfaces.

- Order channels so your new rules evaluate correctly; save/apply. Note that groups aren’t retroactive and traffic can fall into Unassigned if rules are too narrow.

Reference: GA4 Help: Custom channel groups (Google, 2023–2025).
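
Before pasting a regex into a channel rule, it helps to test it against sample referrers. The Python sketch below does that offline. The hostnames in the pattern (chatgpt.com, perplexity.ai, copilot.microsoft.com) are assumptions about where AI answer traffic comes from, so confirm them against the sources in your own reports. Clicks from Google AI Overviews may not be separable this way if they arrive as ordinary Google referrals.

```python
import re
from urllib.parse import urlparse

# Assumed referrer hostnames; adjust to match what appears in your own data.
AI_REFERRER_PATTERN = re.compile(
    r"(^|\.)(chatgpt\.com|perplexity\.ai|copilot\.microsoft\.com)$"
)


def is_ai_referrer(referrer_url: str) -> bool:
    """Return True when the referrer's hostname matches an assumed AI answer engine."""
    host = urlparse(referrer_url).hostname or ""
    return bool(AI_REFERRER_PATTERN.search(host))


samples = [
    "https://www.perplexity.ai/search?q=what+is+aeo",
    "https://chatgpt.com/",
    "https://www.example.com/blog",
]
for url in samples:
    print(url, is_ai_referrer(url))
```

The `(^|\.)` prefix keeps the rule from matching lookalike domains that merely end in the same letters, which is one of the ways an overly loose rule can inflate the new channel.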

Practical example/workflow (single‑tool illustration)

- Disclosure: Geneo is our product.

- Example workflow: For a priority topic, track 30 core queries. Each week, test them on Google AI Overviews, Bing Copilot, ChatGPT (browsing), and Perplexity; store screenshots and the exact cited snippet text. Log whether your page is cited, the citation position, and how it’s described. Based on patterns (e.g., Perplexity cites your definition but Copilot prefers a how‑to list), edit the page: tighten the 40‑word answer block, add a short table, and ensure author credentials are visible. Repeat for four weeks and compare share‑of‑answer trends. If you’re coordinating across clients, an AI visibility tool for agencies can centralize multi‑engine tracking and history without losing manual context.

Competitive and tooling landscape context: If you’re auditing options beyond your current stack, see our AI brand visibility tools comparison for category definitions and trade‑offs.

KPIs to watch (be realistic)

- Citations and mentions across engines on priority topics (target steady month‑over‑month growth).

- Share‑of‑answer in Perplexity and Copilot (how often you appear among cited sources).

- AI‑referred sessions where links exist; engagement and assisted conversions.

- Zero‑click visibility indicators: branded search lift, sentiment/context of mentions.
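
Share‑of‑answer can be computed directly from a citation log. The Python sketch below assumes one definition of the metric: the fraction of tested queries, per engine, in which your page appeared among the cited sources. Other definitions (for example, weighting by citation position) are equally defensible.

```python
from collections import defaultdict
from typing import Iterable


def share_of_answer(checks: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Map each engine to cited checks divided by total checks for that engine."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for engine, cited in checks:
        totals[engine] += 1
        if cited:
            hits[engine] += 1
    return {engine: hits[engine] / totals[engine] for engine in totals}


# Hypothetical results for one week: (engine, was our page cited?)
week = [
    ("perplexity", True),
    ("perplexity", False),
    ("copilot", True),
    ("copilot", True),
]
print(share_of_answer(week))
```

Running the same calculation on each week's log gives a trend line per engine, which is more useful than any single week's number given how much results fluctuate.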

Common pitfalls (and fast fixes)

- Burying the answer in a long intro → Add a 30–60‑word direct answer under the question heading; expand below.

- Schema that doesn’t match visible content → Make the answer visible and keep JSON‑LD in sync; validate routinely.

- Opaque authorship and sourcing → Publish author bios with credentials; link to primary data and official docs where appropriate.

- One‑and‑done testing → Expect volatility and re‑run tests weekly; track patterns, not one‑off wins.

- Over‑focusing on one engine → Test all four surfaces; optimize the same page for multiple extraction styles.
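
The schema-mismatch pitfall can be caught with a simple automated check. The Python sketch below assumes you can extract the page's visible text as a string and that the markup is a FAQPage document; `unmatched_answers` is a hypothetical helper. It compares text after normalizing only whitespace and case, so it is a first-pass filter and does not replace a structured data validator.

```python
import json


def _normalize(text: str) -> str:
    """Collapse runs of whitespace and lowercase the text for comparison."""
    return " ".join(text.split()).lower()


def unmatched_answers(jsonld: str, visible_text: str) -> list[str]:
    """Return the questions whose JSON-LD answer text is absent from the visible text."""
    page = _normalize(visible_text)
    data = json.loads(jsonld)
    missing = []
    for item in data.get("mainEntity", []):
        answer = item.get("acceptedAnswer", {}).get("text", "")
        if _normalize(answer) not in page:
            missing.append(item.get("name", ""))
    return missing


markup = json.dumps({
    "@type": "FAQPage",
    "mainEntity": [
        {"name": "What is AEO?",
         "acceptedAnswer": {"text": "AEO is the practice of earning citations."}},
        {"name": "Is AEO the same as SEO?",
         "acceptedAnswer": {"text": "No, the goals differ."}},
    ],
})
page_text = "What is AEO?\n  AEO is the practice of   earning citations."
print(unmatched_answers(markup, page_text))
```

Exact matching is strict by design: typographic differences such as curly quotes or non-breaking hyphens in the visible copy will be flagged, so treat a reported mismatch as a prompt to look, not as proof of an error.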

Bottom line

AEO isn’t a silver bullet, and no single tactic guarantees inclusion. But if you structure pages for extractability, cite real evidence, and run a steady test‑and‑learn loop, you’ll increase the odds that answer engines choose, and credit, your work. The teams who log citations and iterate methodically will compound gains while everyone else argues about definitions.