Best Practices for Agencies: Winning AI SEO Pitches & Reporting (2025)

Actionable 2025 workflows for digital agencies: AI search optimization, GEO/AEO audits, white-label reporting, and proven pitch strategies.

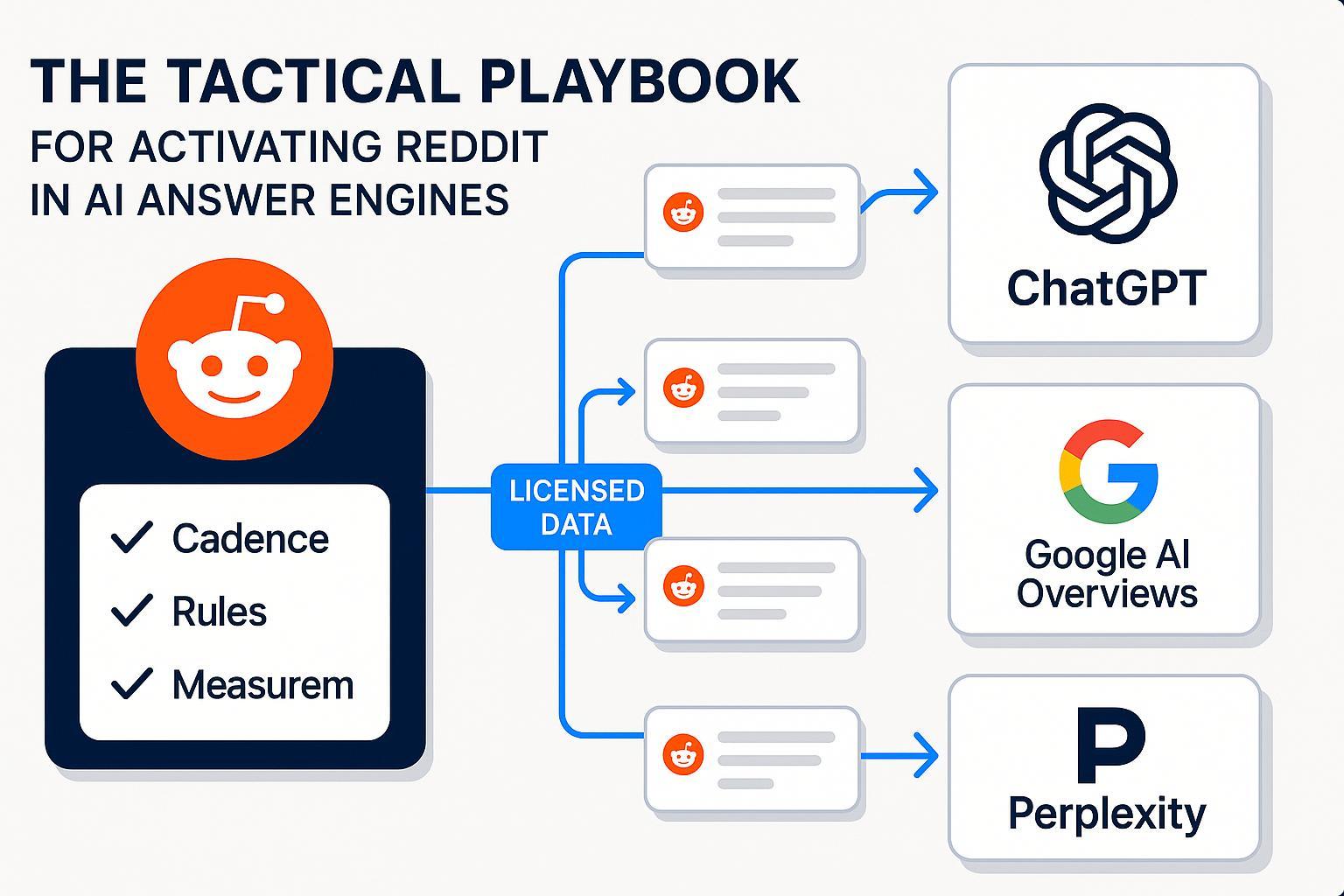

Agencies that can prove client visibility across AI answer engines (Google AI Overviews, ChatGPT-style browsing answers, and Perplexity) are winning pitches and renewals. This guide distills field-tested workflows your team can implement now: running pitch-winning competitive audits for generative engine optimization (GEO) and answer engine optimization (AEO), onboarding efficiently, running monthly optimization sprints, and reporting outcomes with white-label dashboards.

What’s changed in 2025 (and why agencies must adapt)

Google’s AI experiences synthesize answers from multiple sources and link back to the web. Google reiterates that publishers should focus on helpful, people-first content, clear structure, and technical accessibility, as outlined in “AI features and your website” (Google Search Central, 2025) and its companion post on succeeding in AI search (Google Search Central blog, May 2025).

In May 2025, Google stated that AI features have increased usage for certain query types; see the product update “AI in Search: Going beyond information to intelligence” (Google blog, 2025).

Independent measurements show meaningful CTR declines when AI Overviews appear. Amsive’s 2025 analysis (reported by Search Engine Land) observed an average CTR drop of 15.49% when AI Overviews were present, with non-brand keywords falling by about 19.98%. Ahrefs found a roughly 34.5% drop in position-1 CTR for queries where AI Overviews showed (the study page describes its 2025 scope).

In practice, GEO/AEO is now a revenue conversation. Agencies must measure and improve inclusion and citations in AI answers, not just blue-link rankings, and make that progress visible in pitch decks and monthly reports.

Quick orientation for clients: if you need a succinct primer to set context, share our explainer “Traditional SEO vs GEO (Geneo): 2025 Marketer’s Comparison.”

Workflow 1 — Pitch-winning competitive GEO/AEO audit

Purpose: Prove you understand how the client and its competitors surface in AI answers today—and where the quickest wins are.

Steps you can replicate this week:

Define the prompt universe

Funnel coverage: 10–15 prompts per stage (awareness → consideration → decision), per product/service.

Include variants: “what is…”, “best… for”, “top…”, “vs…/alternatives”, and “how much/price”.
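Building the prompt universe is mostly template expansion. The Python sketch below assumes a hypothetical template set and placeholder brand, competitor, and use-case names; it crosses each stage's templates with the client's inputs and drops duplicates. It illustrates the bookkeeping only and is not a complete keyword-research method.

```python
from itertools import product

# Hypothetical prompt templates covering the variants above; adapt wording per client.
TEMPLATES = {
    "awareness": ["what is {topic}", "how does {topic} work"],
    "consideration": [
        "best {topic} for {use_case}",
        "top {topic} tools",
        "{brand} alternatives",
    ],
    "decision": ["{brand} vs {competitor}", "how much does {brand} cost"],
}


def build_prompt_universe(topic, brand, competitors, use_cases):
    """Expand every template with every competitor/use case; return unique (stage, prompt) pairs."""
    seen = set()
    universe = []
    for stage, templates in TEMPLATES.items():
        for template, competitor, use_case in product(templates, competitors, use_cases):
            # str.format ignores keyword arguments a template does not use,
            # so templates without {competitor} repeat and are de-duplicated here.
            prompt = template.format(
                topic=topic, brand=brand, competitor=competitor, use_case=use_case
            )
            if prompt not in seen:
                seen.add(prompt)
                universe.append((stage, prompt))
    return universe
```

Counting prompts per stage (for example with `collections.Counter`) is a quick check against the 10–15-per-stage target before testing begins.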

Test across engines

For each prompt, capture whether the brand is cited/mentioned in Google AI Overviews, Perplexity, and browsing answers in chat-style engines. Save screenshots and URLs. Note the cited passages and their page structures.
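Evidence is easier to defend when every check is logged in one consistent shape. This sketch assumes a hypothetical `Observation` record (the field names are ours, not a standard) and serializes it to CSV with Python's standard `csv` module; screenshots are stored as files and referenced by path.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date


@dataclass
class Observation:
    """One check of one prompt in one engine (hypothetical, illustrative field names)."""
    prompt: str
    stage: str            # awareness / consideration / decision
    engine: str           # e.g. "google_aio", "perplexity", "chat_browsing"
    cited: bool           # a brand URL appears among the answer's sources
    mentioned: bool       # the brand is named in the answer text
    cited_url: str        # empty string if not cited
    cited_passage: str    # the text the engine attributed to the page
    screenshot_path: str
    checked_on: date


def to_csv(observations):
    """Serialize observations to CSV text so every audit claim links back to evidence."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(Observation)])
    writer.writeheader()
    for obs in observations:
        writer.writerow(asdict(obs))  # dates are written via str(), e.g. 2025-06-02
    return buffer.getvalue()
```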

Analyze the why

Identify common traits of cited pages: concise answer blocks (40–70 words), recency (updated within 90 days), entity clarity (brand/product names), and supporting structured data.
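One way to test these traits is to compare how often they appear on cited versus uncited pages. This sketch assumes you have already collected, per page, its answer-block text, last-updated date, the entity names to look for, the schema types found, and whether an engine cited it; the dictionary keys are hypothetical. The thresholds mirror this guide (40–70 words, 90 days), not any engine's documented rules.

```python
from datetime import date


def page_traits(page, today):
    """Check one page against the traits above (thresholds taken from this guide)."""
    words = len(page["answer_block"].split())
    text = page["answer_block"].lower()
    return {
        "answer_block_40_70": 40 <= words <= 70,
        "updated_90d": (today - page["last_updated"]).days <= 90,
        "names_entity": any(name.lower() in text for name in page["entities"]),
        "structured_data": bool(page["schema_types"]),
    }


def trait_rates(pages, today):
    """Fraction of cited and of uncited pages that show each trait."""
    rates = {}
    for cited in (True, False):
        subset = [p for p in pages if p["cited"] == cited]
        if not subset:
            continue
        checks = [page_traits(p, today) for p in subset]
        rates["cited" if cited else "uncited"] = {
            trait: sum(c[trait] for c in checks) / len(checks) for trait in checks[0]
        }
    return rates
```

A trait that appears far more often among cited pages is a candidate quick win; with small samples, treat the rates as directional.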

Build the executive summary

One slide: current presence (Yes/No/Partial) in an engines × funnel-stages matrix; the top 5 gaps; and 60-day quick wins with owners and effort estimates.
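The Yes/No/Partial matrix can be derived directly from the audit log. This sketch assumes a simple rule of our own: a cell is Yes when every tracked prompt for that engine and stage cites the brand, Partial when some do, and No when none do. Gaps are ranked No before Partial.

```python
from collections import defaultdict

# Ranking used for gaps (an assumption): missing entirely beats partially present.
STATUS_ORDER = {"No": 0, "Partial": 1}


def presence_matrix(observations):
    """Map (engine, stage) -> "Yes" | "Partial" | "No" from (engine, stage, cited) rows."""
    results = defaultdict(list)
    for engine, stage, cited in observations:
        results[(engine, stage)].append(bool(cited))
    matrix = {}
    for cell, hits in results.items():
        if all(hits):
            matrix[cell] = "Yes"
        elif any(hits):
            matrix[cell] = "Partial"
        else:
            matrix[cell] = "No"
    return matrix


def top_gaps(matrix, n=5):
    """Cells that are not "Yes": No before Partial, alphabetical within a status."""
    gaps = [cell for cell, status in matrix.items() if status != "Yes"]
    return sorted(gaps, key=lambda cell: (STATUS_ORDER[matrix[cell]], cell))[:n]
```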

Package as a white‑label deliverable

Provide a 6–8 slide mini‑audit in the sales deck with a concise narrative: “Here’s how your buyers ask, where you appear today, and the fastest path to inclusion in AI answers.”

Common pitfalls to avoid

Over-weighting traditional SERP position data; in practice, AI answers sometimes cite pages ranking outside the organic top 10.

Not preserving evidence; screenshots and annotated citations make audits credible.

Ignoring regional/language variance; validate in the target locale.

Workflow 2 — Onboarding checklist (first 30–45 days)

Technical foundations

Crawl and indexing: remove unintended Disallow rules and make sure critical pages are crawlable, fast, and accessible. Keep AI-friendly accessibility in mind (robots directives, sitemaps, page performance). The IAB Tech Lab’s 2025 guidance on agent access and robots conventions in its “LLMs and AI Agents Integration Framework” is useful context.

Structured data: Article, FAQPage, HowTo, Organization, and Person (for authors). Validate the markup and fix errors.

Internationalization: implement and reciprocate hreflang annotations per Google’s international and multilingual sites guidance (Search Central).
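Reciprocity is easy to check mechanically: if page A lists page B as an alternate, B must list A in return. This sketch assumes you have already extracted each page's hreflang annotations (from HTML, HTTP headers, or sitemaps) into a hypothetical `{page_url: {lang_code: alternate_url}}` map; it reports only missing return links and does not validate language codes.

```python
def missing_return_links(hreflang_map):
    """Return (source, target) pairs where target does not list source as an alternate.

    hreflang_map: {page_url: {lang_code: alternate_url}} as declared on each page.
    """
    problems = []
    for source, alternates in hreflang_map.items():
        for target in alternates.values():
            if target == source:
                continue  # a self-referencing annotation needs no return link
            back_links = hreflang_map.get(target, {}).values()
            if source not in back_links:
                problems.append((source, target))
    return problems
```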

Content and entities

Answer blocks: add a 40–70 word, self‑contained answer under relevant H2/H3s. Follow with supporting detail, examples, and citations.

Entity clarity: standardize brand and product naming; include credentials, addresses, and author bios that demonstrate expertise.

Freshness: update out‑of‑date stats; add publication and updated timestamps.
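Answer-block coverage can be audited from a page's HTML. This sketch uses Python's standard `html.parser` and assumes the answer block is the first `<p>` following each `<h2>` or `<h3>`; pages that put answers in lists or `<div>`s would need a different rule.

```python
from html.parser import HTMLParser


class AnswerBlockAudit(HTMLParser):
    """Collect each H2/H3 heading and the first <p> that follows it (an assumed layout)."""

    def __init__(self):
        super().__init__()
        self.blocks = []            # list of [heading_text, first_paragraph_text]
        self._in_heading = False
        self._in_para = False
        self._awaiting_para = False
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self._in_heading, self._buf = True, []
        elif tag == "p" and self._awaiting_para:
            self._in_para, self._buf = True, []

    def handle_endtag(self, tag):
        if tag in ("h2", "h3") and self._in_heading:
            self._in_heading = False
            self.blocks.append([" ".join("".join(self._buf).split()), ""])
            self._awaiting_para = True
        elif tag == "p" and self._in_para:
            self._in_para = self._awaiting_para = False
            self.blocks[-1][1] = " ".join("".join(self._buf).split())

    def handle_data(self, data):
        if self._in_heading or self._in_para:
            self._buf.append(data)


def audit_answer_blocks(html, low=40, high=70):
    """Return (heading, word_count, within_range) for every H2/H3 on the page."""
    parser = AnswerBlockAudit()
    parser.feed(html)
    parser.close()
    return [
        (heading, len(para.split()), low <= len(para.split()) <= high)
        for heading, para in parser.blocks
    ]
```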

Measurement baseline

Track: AI citations/mentions by engine, share of voice (SOV), sentiment of mentions, freshness coverage, structured data coverage, answer‑block coverage, and time‑to‑citation for updated pages.
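Share of voice needs a fixed definition before the baseline is useful. This sketch assumes SOV is a brand's citations divided by all citations of tracked brands in the same engine, counted from the audit log; other definitions (for example, weighting by prompt value) work too if applied consistently.

```python
from collections import Counter, defaultdict


def share_of_voice(citations):
    """citations: iterable of (engine, brand) pairs, one per observed citation.

    Returns {engine: {brand: share}}; shares within each engine sum to 1.
    """
    counts = defaultdict(Counter)
    for engine, brand in citations:
        counts[engine][brand] += 1
    return {
        engine: {brand: n / sum(c.values()) for brand, n in c.items()}
        for engine, c in counts.items()
    }
```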

Workflow 3 — Monthly optimization sprints (repeatable)

Targeting and cadence

Each month, select the 10–20 highest‑value prompts per engine and funnel stage. Prioritize high‑intent “vs/alternatives” and “best for [use case]” queries for B2B.

Experiments to run

Reformatting for extractability: place concise definitions, steps, tables, and bullets near the top of the page.

Schema improvements: add/repair FAQ/HowTo where relevant.

Freshness debt: make sure priority pages have had their stats and examples refreshed within the last 60–90 days.
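FAQ markup is usually generated from the Q&A pairs already on the page. This sketch builds schema.org `FAQPage` JSON-LD with Python's `json` module; the output would go in a `<script type="application/ld+json">` tag and should be run through a validator. The questions and answers must match content visible on the page. Google has limited FAQ rich results to well-known government and health sites since 2023, so treat this markup as a structure aid rather than a guaranteed SERP feature.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)
```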

Engine‑specific tactics

Google AI Overviews: Keep traditional SEO strong. Google’s guidance emphasizes helpful, people-first content and technical quality, as summarized in the 2025 “AI features and your website” page and the companion guidance on succeeding in AI search cited earlier.

Perplexity: Answers are multi‑source with inline citations. Prioritize clarity, recency, and trust signals (original data, awards, credentials), and test different prompt phrasings when validating presence.

Chat-style browsing engines: Ensure key resources are crawlable and not paywalled. Provide linkable assets (checklists, tables) with clear author information and metadata; such assets are more likely to be cited when browsing is invoked.
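Crawl access for AI crawlers can be spot-checked against a site's robots.txt. This sketch uses Python's standard `urllib.robotparser`; the crawler tokens listed are our assumption and should be verified against each vendor's current documentation. It does not detect paywalls, and Python's parser applies the first matching rule rather than Google's most-specific-match logic, so overlapping Allow/Disallow rules may evaluate differently.

```python
from urllib.robotparser import RobotFileParser

# Assumed crawler tokens; confirm current names and purposes in each vendor's docs.
AGENTS = ["Googlebot", "OAI-SearchBot", "GPTBot", "PerplexityBot"]


def crawl_access(robots_txt, urls, agents=AGENTS):
    """Return {(agent, url): allowed} for every agent/URL pair under this robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the file as read
    return {(agent, url): parser.can_fetch(agent, url) for agent in agents for url in urls}
```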

Checkpoints

Re-measure citation presence and SOV for changed pages every 2–4 weeks, and record time-to-citation after each update.
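Time-to-citation is simple date arithmetic once citation dates are logged. This sketch assumes each tracked page records its update date and the dates on which citations were observed (hypothetical keys `updated_on` and `citation_dates`); pages not yet cited are excluded from the median rather than counted as zero.

```python
from datetime import date
from statistics import median


def time_to_citation(updated_on, citation_dates):
    """Days from a page update to the first citation seen on or after it; None if none yet."""
    later = [d for d in citation_dates if d >= updated_on]
    return (min(later) - updated_on).days if later else None


def median_time_to_citation(pages):
    """Median over pages cited since their update; None if no page has been cited."""
    values = [time_to_citation(p["updated_on"], p["citation_dates"]) for p in pages]
    values = [v for v in values if v is not None]
    return median(values) if values else None
```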

Workflow example — Running a cross‑platform GEO/AEO audit and reporting loop with Geneo

Disclosure: Geneo is our product.

Here’s a practical, mid‑funnel example you can adapt for your next pitch and for ongoing reporting:

Set up client and competitor profiles

Create a workspace per client; add 3–5 named competitors and the priority prompt list segmented by funnel stage and engine.

Capture baseline AI visibility

Run scheduled checks across Google AI Overviews, Perplexity, and chat‑style engines. Log citations/mentions, source URLs, and sentiment of the surrounding answer passages.

Identify gaps and quick wins

Use the engine x funnel matrix to find missing citations on high‑intent prompts. Flag pages lacking answer blocks, outdated stats, or missing schema.

Build the white‑label deck and dashboard

Export a 1‑page executive summary (top gaps, projected wins in 60 days) plus a live dashboard for ongoing tracking. Include SOV deltas vs competitors and a freshness/structured‑data coverage panel.

Run monthly sprints and annotate changes

After updates, annotate the dashboard with changes made (e.g., added FAQ schema; refreshed stats). Track time‑to‑citation and highlight wins in monthly reports and QBRs.

Result: In pitches, this workflow shows exactly where the prospect is missing from AI answers and how you’ll fix it. In delivery, it turns into a repeatable optimization/reporting loop your analysts can run in hours, not days.

Workflow 4 — White‑label reporting that clients actually read

Cadence and format

A live dashboard (updated weekly), a monthly executive PDF with one page of decisions and owners, and quarterly strategy reviews to adjust the roadmap based on performance.

What to include (beyond traffic)

| KPI | What it shows | How to act |
|---|---|---|
| AI citations by engine | Inclusion in AI answers for tracked prompts | Double down on pages gaining citations; fix extractability on laggards |
| Share of voice (SOV) | Brand citations vs. competitors | Identify engines and funnel stages where competitors dominate; prioritize counter-content |
| Sentiment of mentions | How the brand is framed in answers | Address negative or neutral mentions with clarifying content and proof |
| Freshness coverage | % of priority pages updated within the last 90 days | Schedule rolling updates; add new data and examples |
| Structured data coverage | % of pages with valid FAQ/HowTo/Article markup | Implement and validate; repair errors |
| Answer-block coverage | % of pages with 40–70-word answer blocks | Add concise answer blocks under H2/H3s |
| Time-to-citation | Days from update to first AI citation | Tune update cadence; watch for diminishing returns |
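The coverage KPIs in the table reduce to percentages over the priority-page inventory. This sketch assumes hypothetical per-page fields (`last_updated`, `valid_schema`, `answer_block_words`) and uses the guide's thresholds: under 90 days for freshness and 40–70 words for answer blocks.

```python
from datetime import date


def coverage_kpis(pages, today, fresh_days=90, low=40, high=70):
    """Percent of priority pages meeting the freshness, schema, and answer-block KPIs."""
    total = len(pages)
    if total == 0:
        return {}
    fresh = sum((today - p["last_updated"]).days < fresh_days for p in pages)
    schema = sum(bool(p["valid_schema"]) for p in pages)
    answers = sum(low <= p["answer_block_words"] <= high for p in pages)
    return {
        "freshness_coverage_pct": round(100 * fresh / total, 1),
        "structured_data_coverage_pct": round(100 * schema / total, 1),
        "answer_block_coverage_pct": round(100 * answers / total, 1),
    }
```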

Compliance and brand safety

U.S. FTC: Be transparent with reviews and claims (agencies can be liable). See the 2024 final rule banning fake reviews in the FTC announcement.

EU AI Act: If your service uses high-risk AI systems for clients, ensure logging and human oversight; many GEO/AEO efforts are likely to be “limited-risk,” but document your assessments. For the 2024–2025 rollout timeline, see the European Commission’s update on the AI Act’s entry into force.

Workflow 5 — Multi‑region and multi‑language execution

Local intent mapping: Rebuild prompt lists per locale; don’t translate literally—localize use cases and examples.

hreflang and localized schema: Ensure reciprocal hreflang and localized Organization/LocalBusiness attributes per market (address, currency). Follow Google’s internationalization guidance cited above.

Native QA: Validate AI answers in each locale with native speakers; adjust for regional entities and regulatory context.
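Localized business attributes can be generated per market from one source of truth. This sketch builds schema.org `LocalBusiness` JSON-LD using the `address` (`PostalAddress`) and `currenciesAccepted` properties; the market data is a hypothetical placeholder, and whether `LocalBusiness` or plain `Organization` fits depends on the client's physical presence in each market.

```python
import json

# Hypothetical market data; replace with the client's real, verified details.
MARKETS = {
    "en-GB": {
        "name": "Example Ltd",
        "url": "https://example.com/en-gb/",
        "street": "1 Example Street",
        "city": "London",
        "postal_code": "EC1A 1AA",
        "country": "GB",
        "currency": "GBP",
    },
    "de-DE": {
        "name": "Example GmbH",
        "url": "https://example.com/de-de/",
        "street": "Beispielstraße 1",
        "city": "Berlin",
        "postal_code": "10115",
        "country": "DE",
        "currency": "EUR",
    },
}


def local_business_jsonld(locale):
    """Build schema.org LocalBusiness JSON-LD for one market's localized page."""
    m = MARKETS[locale]
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": m["name"],
        "url": m["url"],
        "address": {
            "@type": "PostalAddress",
            "streetAddress": m["street"],
            "addressLocality": m["city"],
            "postalCode": m["postal_code"],
            "addressCountry": m["country"],
        },
        "currenciesAccepted": m["currency"],
    }
    # ensure_ascii=False keeps characters such as "ß" readable in the page source.
    return json.dumps(data, ensure_ascii=False, indent=2)
```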

Common failure modes (and pragmatic fixes)

Treating GEO/AEO as “yet another report” rather than a roadmap. Fix: Tie every metric to a decision or sprint.

Ignoring freshness debt. Fix: Prioritize high-potential pages with outdated stats or sources; aim for updates within 90 days on core pages.

Thin answer blocks. Fix: Add clear, 40–70 word answers with definitions, steps, or criteria right under relevant headings.

No baseline. Fix: Start tracking citations, SOV, and sentiment before changes; measure deltas after.

Over‑focusing on a single engine. Fix: Compare at least Google AI Overviews and one chat‑style engine to reflect how buyers research.

Packaging GEO/AEO as an agency offering

Positioning

“AI Answers Visibility Program” with two tracks: a) Pitch audit (2 weeks), b) Quarterly optimization loop.

Deliverables

Pitch audit: 6–8 slide deck with engine x funnel matrix, top 5 gaps, and a 60‑day plan.

Monthly delivery: roadmap, annotated updates, live dashboard, and executive PDF.

Pricing notes

Price the audit as a fixed‑fee discovery; bundle the monthly loop into your retainer. Anchor the value in incremental inclusion/SOV and time saved in reporting.

Final thought and next step

The agencies winning in 2025 are those that operationalize GEO/AEO—auditing, fixing extractability/freshness, and reporting AI visibility like a first‑class KPI. If you want to show clients exactly how they appear across AI engines and streamline reporting, book a short demo of Geneo to see this workflow end‑to‑end: https://geneo.app