AI Search Monitoring vs LLM Monitoring: Metrics, Differences & Decision Guide

Compare AI search monitoring and LLM monitoring: key differences, tracked metrics, integration options, and actionable insights. Learn which fits your brand or technical needs.

Introduction: Navigating Visibility, Sentiment, and Technical Health Across Next-Gen AI Platforms

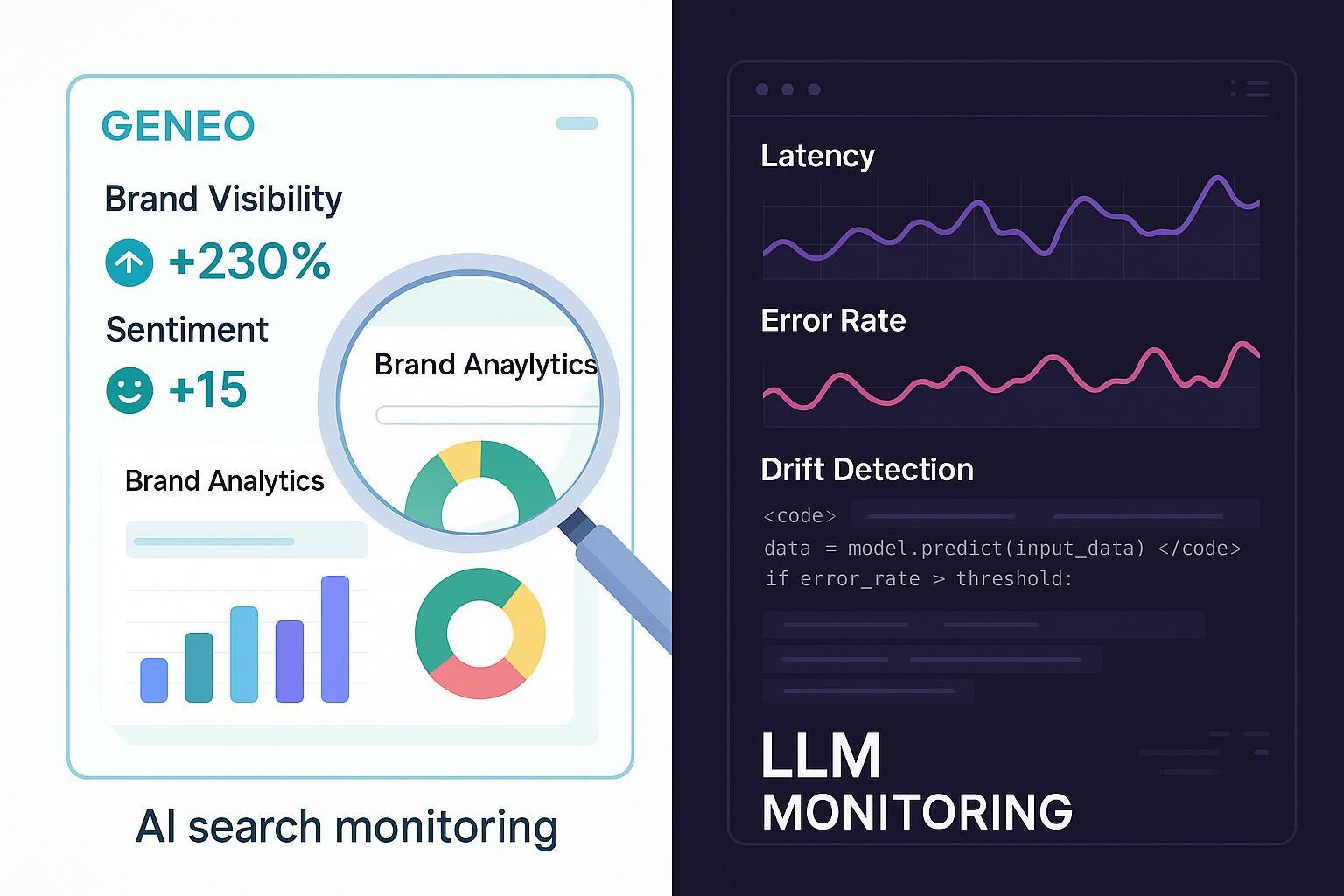

As AI-enhanced search engines and large language models (LLMs) reshape how businesses, brands, and consumers interact online, monitoring their impact has become a strategic imperative. Marketing and brand management teams must decide whether to map their brand’s visibility and sentiment across platforms like ChatGPT and Google AI Overview (AI search monitoring) or to focus on the technical reliability and compliance of LLM-powered applications (LLM monitoring). This guide compares the two solution types so decision-makers can choose the one that best serves their digital footprint and operational goals.

Definitions: AI Search Monitoring vs LLM Monitoring

- AI Search Monitoring: Platforms such as Geneo are designed for marketing, branding, and agency teams. They track brand mentions, link references, and sentiment in AI-generated answers across platforms such as Google, ChatGPT, and Perplexity. Results feed into actionable dashboards with content strategy recommendations, competitive benchmarking, and team-wide collaboration, all without coding. See Geneo’s feature breakdown.

- LLM Monitoring: Solutions such as Datadog, Galileo, Azure, and Sentry are built for technical and developer audiences. They capture operational metrics (latency, errors, drift, hallucinations, compliance logs) from deployed LLM-based tools and applications. They are used for debugging, regulatory reporting, cost optimization, and incident management, and they require API/cloud integration and engineering resources (Galileo case studies).

Comparison at a Glance: Dimensions, Metrics, and Use Case Fit

Core Comparison Table

| Dimension | AI Search Monitoring (Geneo) | LLM Monitoring |
|---|---|---|
| Monitoring Scope | Cross-platform coverage of brand mentions, sentiment, links, and queries; for marketing, brand, and agency teams | Technical application and system health (API, prompts, infrastructure); for DevOps, QA, and IT teams |
| Tracked Metrics | Visibility scores, brand mentions, sentiment, link references, historical prompt tracking, competitive share | Latency, error rates, drift/hallucination detection, prompt logging, cost and compliance traces, alerting |
| Result Interpretation | Dashboards, sentiment trends, actionable content strategy guides | Developer dashboards, system logs, anomaly reports, compliance audits |
| Use Case Scenarios | Campaign/PR monitoring, competitor benchmarking, multi-brand analytics | Debugging, regulatory monitoring, troubleshooting, workflow optimization |
| Integration Capabilities | No-code SaaS, BI export and reporting, privacy compliance, team support | API/cloud SDK hooks, log streaming, customizable alerts and workflows |
| Actionable Insights | Content/SEO recommendations, crisis response, campaign adjustments | Incident resolution, cost optimization, model reliability improvements |

Monitoring Scope: Platforms, Personas, and Outcomes

- AI Search Monitoring: Spans the major AI-driven answer engines (ChatGPT, Google AI Overview, Perplexity), capturing how brands and keywords are portrayed and how far that exposure reaches in real time. It suits marketers, PR leaders, agencies managing multiple brands, and digital strategists. The focus is outward: maximizing presence, managing sentiment, and responding to emerging trends (Authoritas market report).

- LLM Monitoring: Limits analysis to LLM-powered applications, APIs, or chatbots. Core users are developers, MLOps engineers, and enterprise IT teams. The focus is inward: ensuring technical correctness, compliance, and prompt reliability. For example, a dev team monitors a customer service chatbot for latency spikes and hallucinations while keeping regulatory logs for audits (Galileo reference).

Tracked Metrics: Side-by-Side Definitions and Examples

| Metric Type | AI Search Monitoring | LLM Monitoring |
|---|---|---|
| Brand Mentions/Visibility | Tracks frequency and context of brand appearances in AI answers, e.g., "Geneo cited in 12/20 ChatGPT finance queries" | Not applicable |
| Sentiment Analysis | Automatically detects positive, negative, or neutral tone in AI answers, e.g., "78% positive sentiment for June PR campaign" | Not applicable |
| Link/Reference Tracking | Logs direct website links in AI answers (for SEO/reputation correlation) | Not applicable |
| Prompt/History Tracking | Archives the actual questions/queries that generate AI exposure, e.g., "How is Geneo trending in travel queries?" | Full prompt/response logging for debugging; not tied to brand metrics |
| Latency/Error Rates | Not tracked | Response times in milliseconds; system errors, HTTP 500 failures, model downtime |
| Drift/Hallucination Detection | Not tracked directly (AI answer drift may show up as changing sentiment or mentions) | Detects when model outputs become unreliable (e.g., factual inaccuracy, prompt drift, hallucinations) |
| Cost/Compliance | Not tracked (except competitive benchmarking) | Tracks API calls, compute consumption, and compliance logs (GDPR, SOC 2, etc.) |
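The AI search metrics in the table reduce to simple ratios over a sample of collected AI answers. The following is a minimal sketch. It assumes each answer has already been labeled for brand mention, brand link, and sentiment. The `AnswerRecord` schema and `visibility_summary` function are hypothetical illustrations, not Geneo's data model or API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class AnswerRecord:
    """One AI-generated answer collected for a tracked query (hypothetical schema)."""
    platform: str            # e.g. "ChatGPT", "Perplexity"
    query: str
    brand_mentioned: bool
    brand_link_present: bool
    sentiment: str           # "positive", "neutral", or "negative"


def visibility_summary(records: list[AnswerRecord]) -> dict:
    """Compute mention rate, link rate, and sentiment shares for a batch of answers."""
    total = len(records)
    if total == 0:
        return {"answers": 0, "mention_rate": 0.0, "link_rate": 0.0, "sentiment_share": {}}
    mentioned = [r for r in records if r.brand_mentioned]
    # Tone is only counted for answers that actually mention the brand.
    tone = Counter(r.sentiment for r in mentioned)
    return {
        "answers": total,
        "mention_rate": len(mentioned) / total,
        "link_rate": sum(r.brand_link_present for r in records) / total,
        "sentiment_share": {k: v / len(mentioned) for k, v in tone.items()} if mentioned else {},
    }


# Illustrative data: 20 finance queries, brand mentioned in 12, linked in 5.
sample = [
    AnswerRecord("ChatGPT", f"finance q{i}", i < 12, i < 5, "positive" if i < 9 else "neutral")
    for i in range(20)
]
print(visibility_summary(sample))
```

Restricting sentiment to answers that mention the brand keeps "78% positive" style figures from being diluted by answers in which the brand never appears.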

Result Interpretation: From Dashboard to Decision

- AI Search Monitoring: User-friendly dashboards display performance, sentiment shifts, and visibility changes. Alerts for negative sentiment spikes enable fast PR and crisis response. Historical logs support trend analysis and content optimization recommendations. Typical actions: adjust campaigns, update website copy, mobilize brand managers. Example: Geneo’s dashboard offers daily snapshots, trend breakdowns, and share-of-voice comparisons.

- LLM Monitoring: Technical dashboards expose system reliability, cost, and compliance. Drift or hallucination alerts trigger QA investigation or model adjustments. Incident and audit logs are essential in regulated sectors. Typical actions: debug prompt issues, optimize API infrastructure, resolve compliance tickets (OpenTelemetry reference).
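Spike alerts such as the "negative sentiment spike" above can be as simple as comparing today's value against a trailing baseline. The following is a minimal sketch under our own assumptions, not any vendor's alerting logic. The input is a list of daily negative-sentiment shares, and an alert fires when today's value exceeds the 7-day mean by more than three standard deviations or by a fixed floor. The function name `is_spike` and all thresholds are hypothetical and illustrative.

```python
from statistics import mean, pstdev


def is_spike(history: list[float], today: float, window: int = 7,
             sigmas: float = 3.0, min_jump: float = 0.05) -> bool:
    """Flag `today` as a spike if it sits well above the trailing baseline.

    history  -- previous daily values (e.g. share of negative answers), oldest first
    window   -- number of recent days forming the baseline (illustrative default)
    sigmas   -- standard deviations above the mean that count as a spike
    min_jump -- minimum absolute increase, so a very flat baseline does not
                turn tiny fluctuations into alerts
    """
    baseline = history[-window:]
    if len(baseline) < 2:
        return False  # not enough data to establish a baseline
    mu, sd = mean(baseline), pstdev(baseline)
    return today - mu > max(sigmas * sd, min_jump)


negative_share = [0.10, 0.12, 0.11, 0.09, 0.10, 0.11, 0.12]
print(is_spike(negative_share, 0.13))  # small uptick, no alert
print(is_spike(negative_share, 0.30))  # sharp jump, alert
```

The same baseline-versus-today pattern can be applied on the LLM side, for example to daily hallucination-flag rates or error counts.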

Use Case Scenarios: Who Uses Each, and For What?

- AI Search Monitoring Example: A marketing agency manages five brands. Using Geneo, its teams monitor how each brand is covered across Google and ChatGPT, identify sentiment trends for new product launches, and compare results against competitors. In the wake of a PR crisis, sentiment analytics prompt rapid content changes, averting reputational damage. [User comment: “Turned insights into an action plan.”]

- LLM Monitoring Example: A DevOps team deploys an LLM-driven support bot. Datadog and Galileo monitor latency, error rates, drifting response patterns, and compliance triggers. Hallucination detection uncovers a bug, and cost analysis trims the monthly API bill by 30%. [User testimonial: “Essential for complex LLM apps.”]

- Hybrid Workflow Scenario: A large agency uses both approaches: Geneo for marketing and client reporting, Datadog for technical operations. This combination supports coordinated campaign launches and robust user support (Galileo blog).
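Cost analysis like the one in the support-bot example usually starts from token counts. The following sketch estimates a monthly API bill from request volume and average tokens per request. The `Pricing` rates and traffic figures are hypothetical placeholders, not any provider's actual prices. The 30% figure in the scenario comes from that case, not from this calculation.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """Per-1K-token prices in USD (hypothetical; substitute your provider's real rates)."""
    input_per_1k: float
    output_per_1k: float


def monthly_cost(requests_per_day: int, avg_input_tokens: float,
                 avg_output_tokens: float, pricing: Pricing, days: int = 30) -> float:
    """Estimate a monthly LLM API bill from average token usage per request."""
    per_request = ((avg_input_tokens / 1000) * pricing.input_per_1k
                   + (avg_output_tokens / 1000) * pricing.output_per_1k)
    return requests_per_day * days * per_request


# Illustrative numbers only: 10,000 requests/day, 500 output tokens per answer.
pricing = Pricing(input_per_1k=0.001, output_per_1k=0.002)
before = monthly_cost(10_000, avg_input_tokens=1_500, avg_output_tokens=500, pricing=pricing)
after = monthly_cost(10_000, avg_input_tokens=750, avg_output_tokens=500, pricing=pricing)  # trimmed prompts
print(f"before ${before:,.2f}, after ${after:,.2f}, saving {1 - after / before:.0%}")
```

With these made-up numbers, halving the average prompt length lowers the estimate from about $750 to about $525. Real savings depend entirely on actual prices, traffic, and how much of the bill comes from input versus output tokens.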

Integration Capabilities: Reporting, Automation, and API Support

- AI Search Monitoring (Geneo): No-code SaaS with instant deployment, a team management dashboard, privacy compliance, and multi-brand views. Supports export to BI, CRM, and SEO platforms, with no developer setup needed.

- LLM Monitoring: Requires API hooks or a cloud SDK, developer configuration, CI/CD integration, log streaming or data warehousing, and customizable alerting. Regulated industries benefit from enterprise compliance features, but marketing teams may need engineers to translate the output into actionable terms.
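The "API hooks" that LLM monitoring relies on work like a thin wrapper around every model call. The wrapper records latency, success or failure, and the prompt/response pair. The following sketch uses only the Python standard library, with an in-memory list standing in for log streaming. `call_model`, `monitored`, `summarize`, and `CALL_LOG` are all hypothetical. Production setups would typically export these records through a vendor SDK or OpenTelemetry instead.

```python
import functools
import math
import time

CALL_LOG: list[dict] = []  # stand-in for a log stream or data warehouse


def monitored(fn):
    """Record latency, status, and prompt/response for each call to `fn`."""
    @functools.wraps(fn)
    def wrapper(prompt: str, **kwargs):
        start = time.perf_counter()
        record = {"prompt": prompt, "response": None, "error": None}
        try:
            record["response"] = fn(prompt, **kwargs)
            return record["response"]
        except Exception as exc:
            record["error"] = repr(exc)
            raise  # monitoring must not swallow the failure
        finally:
            record["latency_ms"] = (time.perf_counter() - start) * 1000
            CALL_LOG.append(record)
    return wrapper


def summarize(log: list[dict]) -> dict:
    """Error rate and nearest-rank p95 latency over the recorded calls."""
    if not log:
        return {"calls": 0, "error_rate": 0.0, "p95_ms": 0.0}
    latencies = sorted(r["latency_ms"] for r in log)
    rank = math.ceil(0.95 * len(latencies))  # 1-based nearest-rank index
    return {
        "calls": len(log),
        "error_rate": sum(r["error"] is not None for r in log) / len(log),
        "p95_ms": latencies[rank - 1],
    }


@monitored
def call_model(prompt: str) -> str:
    """Hypothetical model call; replace with your provider's client."""
    if not prompt.strip():
        raise ValueError("empty prompt")
    return f"echo: {prompt}"


for p in ["reset my password", "", "track my order"]:
    try:
        call_model(p)
    except ValueError:
        pass  # the wrapper has already logged this failure
print(summarize(CALL_LOG))
```

The decorator design keeps instrumentation out of application logic, which is roughly what SDK-based hooks provide. The `finally` clause ensures failed calls are logged as reliably as successful ones.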

Actionable Insights: Turning Data into Strategy and Operational Excellence

- AI Search Monitoring: Translates visibility and sentiment metrics into content recommendations, campaign pivot opportunities, and proactive PR responses. The data ties closely to SEO, digital brand management, and market share analysis. For example, a Writesonic review notes “Actionable dashboards and sentiment precision.”

- LLM Monitoring: Drives technical improvements in system reliability, cost control, compliance management, and debug cycles. It is key to enterprise governance, risk reduction, and code health.

Hybrid and Edge Deployments: When Both Matter

Some organizations (agencies, large brands, tech-enabled enterprises) deploy both monitoring types: marketers oversee cross-platform brand and SEO performance while developers maintain reliability. Coordinated workflows allow crisis management on the marketing front and technical troubleshooting behind the scenes. For complex campaigns spanning consumer-facing AI and backend LLMs, this hybrid approach supports both brand impact and operational health.

Conclusion & Decision Guide: Which Approach Fits Your Needs?

| Team/Audience | Best-Fit Monitoring Type | Why? |
|---|---|---|
| Marketing/Brand | AI Search Monitoring (Geneo) | Directly tracks brand presence, sentiment, and marketing ROI |
| Technical/DevOps | LLM Monitoring | Covers app reliability, compliance, cost, and bug detection |
| Hybrid Agency | Both (Geneo + LLM monitoring platforms) | Serves cross-team goals and coordinates external and internal outcomes |

Decision Checklist

- Define your team’s primary KPIs: visibility, sentiment, reliability, cost, compliance?

- Assess cross-team workflows: who needs access, what data drives decisions?

- Consider integration: SaaS, export/reporting needs, regulatory context?

- Evaluate actionable output: does the platform drive strategy, technical fixes, or both?
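The first checklist question and the decision table can be encoded as a small lookup, which may help when auditing a team's tool requirements. The KPI sets and the `recommend` function are our own hypothetical reading of the table, not a Geneo or vendor feature.

```python
# KPI groupings taken from the decision table (hypothetical naming).
AI_SEARCH_KPIS = {"visibility", "sentiment", "brand_mentions", "share_of_voice"}
LLM_KPIS = {"reliability", "latency", "cost", "compliance", "hallucinations"}


def recommend(kpis: set[str]) -> str:
    """Map a team's primary KPIs to a monitoring type, following the decision table."""
    kpis = {k.lower() for k in kpis}
    wants_search = bool(kpis & AI_SEARCH_KPIS)
    wants_llm = bool(kpis & LLM_KPIS)
    if wants_search and wants_llm:
        return "Both (AI search monitoring + LLM monitoring)"
    if wants_search:
        return "AI search monitoring"
    if wants_llm:
        return "LLM monitoring"
    return "Unclear: revisit your primary KPIs"


print(recommend({"visibility", "sentiment"}))   # marketing/brand team
print(recommend({"cost", "compliance"}))        # technical/DevOps team
print(recommend({"sentiment", "reliability"}))  # hybrid agency
```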

Next Step: If your goal is to proactively manage brand visibility, sentiment, and cross-channel performance in the AI age, explore Geneo’s platform with a free trial and a comprehensive dashboard demo.

References & Further Reading

- AI Search Monitoring vs LLM Monitoring: Comparison Guide (Geneo Blog)

- How to Choose the Right AI Brand Monitoring Tools for Search & LLM Monitoring (Authoritas)

- Effective LLM Monitoring Case Studies (Galileo Blog)

- LLM Observability: Technical Guide (OpenTelemetry)

- AI Sentiment Analysis Tools Review (Writesonic)