How User Reviews Influence AI Search Citations

Learn how reviews and ratings affect AI search citations on Google, ChatGPT, and Perplexity. Actionable, ethical strategies for better brand visibility.

If AI search results are the answer, citations are the footnotes. “AI search citations” are the explicit links that AI-powered search experiences attach to their responses to show where information came from. You’ll see them in Google’s AI Overviews supporting links, Perplexity’s inline source cards, and ChatGPT Search’s Sources panel. For marketers and SEO leaders, these citations determine who gets the click—and whose narrative gets amplified.

In this guide, we unpack how user reviews and ratings influence those citations, both directly (review pages being cited) and indirectly (reviews boosting the visibility of pages that do get cited). We’ll focus on Google AI Overviews, Perplexity, and ChatGPT Search, and keep everything aligned with 2024–2025 documentation and compliance rules.

What this article is and isn’t

- Is: An evidence-based primer on how reviews/ratings affect AI citation likelihood and how to strengthen your footing ethically.

- Isn’t: A tutorial on manipulating reviews. We explicitly reject deceptive or incentivized practices.

Key definition: AI search citations

- Core meaning: The links AI systems attach to their answers to substantiate claims.

- Why they matter: Citations are trust signals and traffic drivers. Earning them increases your brand’s exposure precisely when users are ready to act.

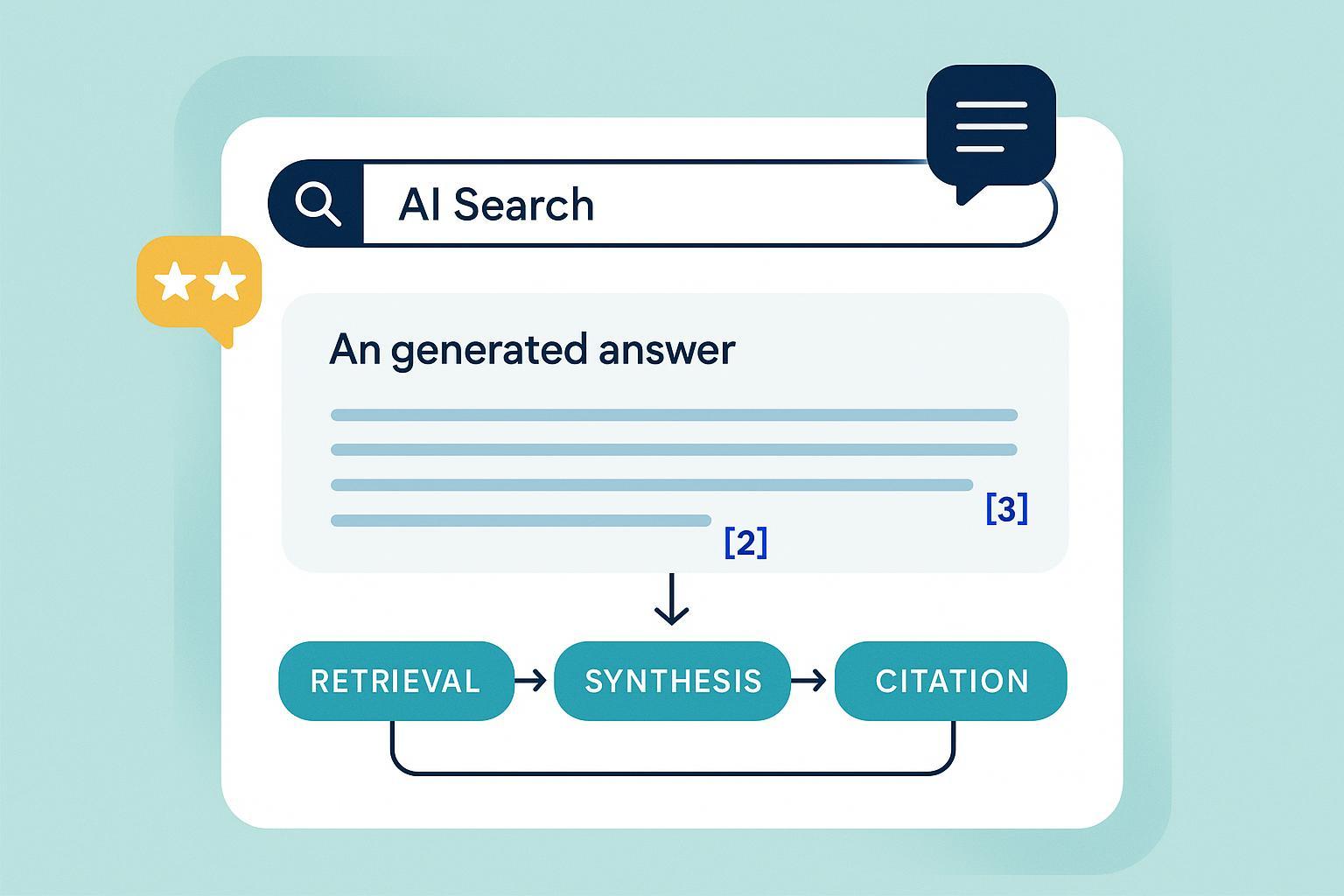

Where reviews enter the pipeline: direct vs. indirect

Think of AI answer generation as a pipeline: retrieval → synthesis → citation.

- Direct pathway: Review-heavy pages (Yelp, G2, Trustpilot, Amazon, Reddit threads) can be selected during retrieval and then cited when the question asks for user experience, satisfaction, or social proof. Perplexity explicitly emphasizes citation transparency: its 2024 guide states that “every answer comes with clickable citations” (Perplexity Hub, Getting started with Perplexity).

- Indirect pathway: A strong, authentic review footprint improves your entity clarity and authority across the broader web (especially Google surfaces). That can make your own evidence-rich pages more likely to be retrieved and cited by AI systems. Google reiterates that AI features rely on normal Search eligibility and helpful, people-first content rather than special markup, per Google Search Central, AI features and your website and the 2025 guidance in Google Search Central Blog, Top ways to ensure your content performs well in Google’s AI Search.

Platform-by-platform: what we actually know (2024–2025)

- Google AI Overviews (AIO)

- Linking behavior: Google positions AIO as a way to “get the gist” and click through to learn more. Links associated with these answers are surfaced from the open web via standard Search criteria—there is no special AIO markup to implement, according to Google Search Central, AI features and your website and the May 2024 product post Google Blog, Generative AI in Search.

- Reviews and AIO: Google has not documented that AIO directly ingests user review signals as a unique factor. However, aligning your pages with Google’s Reviews System and its people-first content guidance can increase the odds that they appear among the supporting links. See Google Search Central, Reviews System (2024–2025) and Creating helpful, reliable, people-first content.

- Important distinction: Google also generates AI summaries of reviews in Maps/Places (marked “Summarized with Gemini”), which is a separate product from AIO in web search. For details, see Google Places API, Place summaries (generative summaries).

- Perplexity

- Citation transparency: Perplexity consistently displays citations adjacent to answers and reiterates this commitment in its 2024 updates, including statements that it “has always listed sources above answers” as noted in Perplexity Hub, About the Dow Jones lawsuit.

- UGC inclusion: Perplexity’s content initiatives highlight that sources can range from academic research to Reddit threads, suggesting that user-generated content can be cited when it’s the most relevant experiential evidence; see Perplexity Hub, ‘Discover Daily’ announcement (2024).

- ChatGPT Search

- Citation display: OpenAI’s 2024 announcement confirms that ChatGPT Search includes inline citations and a Sources button to review references; see OpenAI, Introducing ChatGPT Search. While more agentic research modes may present sources differently, the Search product centers on verifiable references.

- UGC context: When prompts ask for lived experience or product satisfaction, review pages and forums can appear among sources if they ground the answer meaningfully.

Technical foundations: schema, visibility, and review quality

User reviews intersect with structured data and quality guidance in ways that indirectly affect AI citations via Google’s ecosystem.

- Schema types that matter: Review and AggregateRating within eligible item types (commonly Product; be cautious with LocalBusiness/Organization). Implement markup where reviews are actually visible to users and reflect original content. See Google Search Central, Review snippet structured data and Structured data policies.

- Self-serving constraints: Google places limitations on “self-serving reviews” for certain entity types (e.g., LocalBusiness), and prohibits misleading markup or representing third-party content as your own. Review markup must match on-page, user-visible content; see Structured data policies.

- Review quality signals: Google’s Reviews System rewards in-depth, experience-rich reviews that include quantitative measures, pros/cons, comparisons, and evidence of firsthand use, per Google Search Central, Reviews System. Building on-site content that synthesizes genuine customer feedback and independent testing aligns with E‑E‑A‑T and increases overall retrievability.
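
As a minimal sketch of the markup guidance above, assuming a Product page that already renders its reviews to users, the Python below derives the AggregateRating from the same review records the page displays, so the markup cannot drift from the visible content. The function name build_product_jsonld, the review record fields, and the sample data are hypothetical; the property names come from the schema.org vocabulary. Treat it as an illustration, not a guarantee of rich-result eligibility.

```python
import json
from statistics import mean


def build_product_jsonld(name, reviews):
    """Build Product JSON-LD whose ratings mirror the reviews shown on the page.

    `reviews` is a list of dicts with hypothetical keys: author, rating, body.
    """
    product = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
    }
    if not reviews:
        # No visible reviews on the page: emit no review markup at all.
        return product

    ratings = [r["rating"] for r in reviews]
    product["aggregateRating"] = {
        "@type": "AggregateRating",
        "ratingValue": round(mean(ratings), 1),
        "reviewCount": len(ratings),
        "bestRating": 5,
        "worstRating": 1,
    }
    product["review"] = [
        {
            "@type": "Review",
            "author": {"@type": "Person", "name": r["author"]},
            "reviewRating": {
                "@type": "Rating",
                "ratingValue": r["rating"],
                "bestRating": 5,
            },
            "reviewBody": r["body"],
        }
        for r in reviews
    ]
    return product


# Sample data standing in for the reviews actually rendered on the page.
visible_reviews = [
    {"author": "Dana K.", "rating": 5, "body": "Setup took ten minutes."},
    {"author": "Luis M.", "rating": 3, "body": "Solid, but reporting is limited."},
    {"author": "Priya S.", "rating": 4, "body": "Support answered within a day."},
]

markup = build_product_jsonld("Example Widget", visible_reviews)
print(json.dumps(markup, indent=2))
```

The printed JSON would typically be embedded in the page inside a script tag of type application/ld+json. Note that the negative-leaning review is included alongside the positive ones: the markup reflects everything the user can see, not a curated subset.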

Compliance spotlight: what changed in 2024–2025

- FTC final rule (U.S.): In August 2024, the FTC finalized a trade regulation rule prohibiting deceptive consumer reviews and testimonials (e.g., buying/selling reviews, undisclosed employee/insider reviews, and suppression of legitimate negatives). Penalties and enforcement mechanisms are spelled out in the Federal Register, Trade Regulation Rule on the Use of Consumer Reviews and Testimonials (Aug. 22, 2024).

- Platform enforcement: Major platforms have tightened anti-fraud measures. For instance, Trustpilot reports increased enforcement, combining AI and human moderation, as noted in the Trustpilot Trust Report 2025.

What reviews are—and aren’t—good for in AI citations

- Strong candidates for direct citation: Pages where real users share comparative experiences, consistent pros/cons, and nuanced context (e.g., a Reddit thread comparing two SaaS tools; a G2 page showing detailed use-case reviews). These are most likely to surface for experiential, “what’s it like?” queries.

- Indirect boosters of citation likelihood:

- First-party pages that synthesize customer feedback with transparent sourcing and testing.

- Product pages with visible review content and compliant schema markup.

- Third-party coverage (press, expert reviews) that corroborates user sentiment and signals authority.

- Less effective or risky approaches:

- Thin, cherry-picked testimonials with no sources.

- Self-serving review markup or aggregated third-party quotes without proper attribution.

- Any tactic that violates the FTC rule or platform policies.

An ethical playbook for marketers and SEO/brand teams

- Grow authentic reviews (no manipulation)

- Invite balanced, honest feedback from real customers; avoid incentives tied to positive sentiment. Disclose any material connections.

- Aim for recency and steady velocity rather than volume spikes. Respond publicly and constructively to show stewardship.

- Diversify by journey stage: Google reviews for local discovery, G2/Capterra for B2B SaaS, marketplaces like Amazon for retail, and community forums for experiential context.

- Strengthen on-site foundations

- Publish evidence-rich resources: comparisons, hands-on tests, and buyer’s guides that cite legitimate customer reviews and independent evaluations.

- Implement Review and AggregateRating markup only on pages where review content is visible and eligible; keep Product/LocalBusiness schema complete and consistent.

- Ensure crawlability and performance: fast loading, stable URLs, and internal links that clearly connect your review-related content.

- Create retrievable synthesis assets for AI

- Produce “review synthesis” pages that distill patterns (common pros/cons, use cases, segmentation by industry/role), with links back to the original reviews and testing.

- Add Q&A modules that answer experiential questions (“Is X reliable for enterprise security teams?”) with citations to genuine customer feedback and technical benchmarks.

- Align to platform nuances

- Google AI Overviews: There’s no special AIO markup; focus on eligibility and helpful, people-first content. Build pages that AIO would want to surface as “learn more” links, as emphasized in Google Search Central, AI features and your website and the 2025 Search Central post cited above.

- Perplexity: It favors multiple, transparent citations—authoritative roundups and clearly sourced review syntheses are natural fits.

- ChatGPT Search: Expect sources to be inspected by users via the Sources panel. Make your claims auditable with clear citations and structured evidence.

- Bake in compliance and transparency

- Codify a review policy that bans fake, purchased, or undisclosed insider reviews; prohibits gating and suppression; and outlines fair moderation.

- Train agencies and vendors on the FTC’s 2024 rule and on platform policies (e.g., Trustpilot’s enforcement posture). Keep audit trails for outreach and moderation.

Measurement: from “we hope” to “we know”

Build a lightweight observability stack that correlates your review footprint with AI citation visibility.

- AI citation visibility

- Track the count and share of citations your brand earns across: Perplexity (inline citations), ChatGPT Search (Sources), and Google AI Overviews (supporting links). Segment by domain type (your site vs. review/UGC vs. media/analyst).

- Sentiment and narrative

- Monitor the tone and key themes that AI systems surface about your brand, especially when citing review sources (e.g., common pros/cons, reliability, support experience).

- Review footprint health

- Volume, recency/velocity, star distribution, text richness, platform diversity; track by market/segment where relevant.

- Correlation and causality caution

- Compare before/after periods around initiatives (e.g., launching a review request cadence or publishing a new comparison hub). Expect correlation—not guaranteed causality—because many factors influence retrieval and citation.
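
The citation-share and before/after ideas above can be sketched in a few lines of Python. This assumes you have already logged observed citations as (platform, URL) pairs, for example by hand or from a monitoring tool's export; none of the platforms' APIs are used here. The domain sets, function names, and sample observations are hypothetical placeholders.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical classification lists; replace with your own domains.
OWN_DOMAINS = {"example.com"}
REVIEW_UGC_DOMAINS = {"g2.com", "trustpilot.com", "reddit.com", "yelp.com"}


def domain_type(url):
    """Classify a cited URL as 'own', 'review_ugc', or 'other'."""
    host = (urlparse(url).hostname or "").lower()

    def matches(domains):
        # Match the bare domain or any subdomain (www., old., etc.).
        return any(host == d or host.endswith("." + d) for d in domains)

    if matches(OWN_DOMAINS):
        return "own"
    if matches(REVIEW_UGC_DOMAINS):
        return "review_ugc"
    return "other"


def citation_share(observations):
    """Return the share of citations per domain type.

    `observations` is a list of (platform, cited_url) tuples.
    """
    counts = Counter(domain_type(url) for _, url in observations)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {kind: counts[kind] / total for kind in ("own", "review_ugc", "other")}


# Hand-logged sample observations before and after an initiative.
before = [
    ("perplexity", "https://www.g2.com/products/example/reviews"),
    ("chatgpt_search", "https://www.reddit.com/r/saas/comments/abc"),
    ("google_aio", "https://example.com/compare"),
    ("perplexity", "https://news.example.org/roundup"),
]
after = [
    ("perplexity", "https://example.com/review-synthesis"),
    ("chatgpt_search", "https://example.com/compare"),
    ("google_aio", "https://www.trustpilot.com/review/example.com"),
    ("perplexity", "https://news.example.org/roundup"),
]

share_before = citation_share(before)
share_after = citation_share(after)
own_delta = share_after["own"] - share_before["own"]
print(share_before, share_after, own_delta)
```

A change in share between the two periods is a correlation to investigate, not proof that the initiative caused it. Sample sizes this small are for illustration only; in practice you would want many queries per period and a consistent query set.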

How Geneo fits (without over-claiming)

- Cross-platform monitoring: Geneo tracks where and how your brand is cited or mentioned across ChatGPT, Perplexity, and Google AI Overviews, so you can see when UGC/review domains appear alongside—or instead of—your own resources. Learn more at https://geneo.app.

- Sentiment analysis: Geneo’s AI-driven sentiment analysis helps you understand how AI summaries portray your brand and whether review-driven narratives skew positive or negative.

- Historical comparisons: Use Geneo’s historical query tracking to compare AI citation patterns before and after review initiatives (e.g., improved schema on product pages, new review synthesis content).

- Content optimization suggestions: Apply Geneo’s recommendations to strengthen pages most likely to be retrieved and cited (e.g., review roundups, comparison hubs). Note: Geneo does not create or manage reviews—it informs strategy via monitoring and insights.

- Multi-brand benchmarks: Agencies can benchmark which portfolio brands earn citations from review sites and forums and prioritize remediation where gaps appear.

Pitfalls to avoid and how to future-proof

- Over-indexing on UGC alone: Forums and review pages can be noisy. AI systems may cite them for experiential queries, but you should triangulate them with authoritative testing and documentation. Media reviews and primary research add weight.

- Misreading AIO behavior: Because Google has not documented direct use of review signals in AIO inputs, treat review work as an indirect booster of retrievability and trust, not a switch you can flip. Align with Google’s people-first content guidance and the Reviews System.

- Ignoring citation quality concerns: Independent testing in 2024 highlighted inconsistent or poor attribution across AI search engines; see the Tow Center’s analysis in the Columbia Journalism Review (2024). Encourage your teams to verify sources and escalate obvious misattributions to platforms.

- Static playbooks: Platform behavior evolves quickly. Re-validate your assumptions quarterly against current documentation—e.g., Google Search Central, AI features and your website and OpenAI, Introducing ChatGPT Search (2024).

Quick checklist

- Ethical review acquisition program aligned to the 2024 FTC rule and platform policies.

- First-party pages that synthesize genuine customer insights with transparent sourcing and testing.

- Compliant Review/AggregateRating schema on eligible, user-visible review content.

- Fast, crawlable, interlinked pages covering experiential questions your buyers actually ask.

- Monitoring and iteration loop for AI citations, sentiment, and review footprint health (e.g., with Geneo).

Bottom line

User reviews and ratings influence AI search citations in two ways: they can be the sources themselves for experience-driven questions, and they can strengthen the broader web signals that help your best pages get retrieved and linked. Focus on authenticity, compliant schema, evidence-rich content, and continuous measurement. That’s how you turn real customer voice into durable visibility across AI search.