How AI Overviews Changed Search Behavior and Organic Clicks in 2025

Discover how AI-generated answers in Google, Bing, and Perplexity transformed search behavior and cut organic clicks in 2025. See key data and actionable steps.

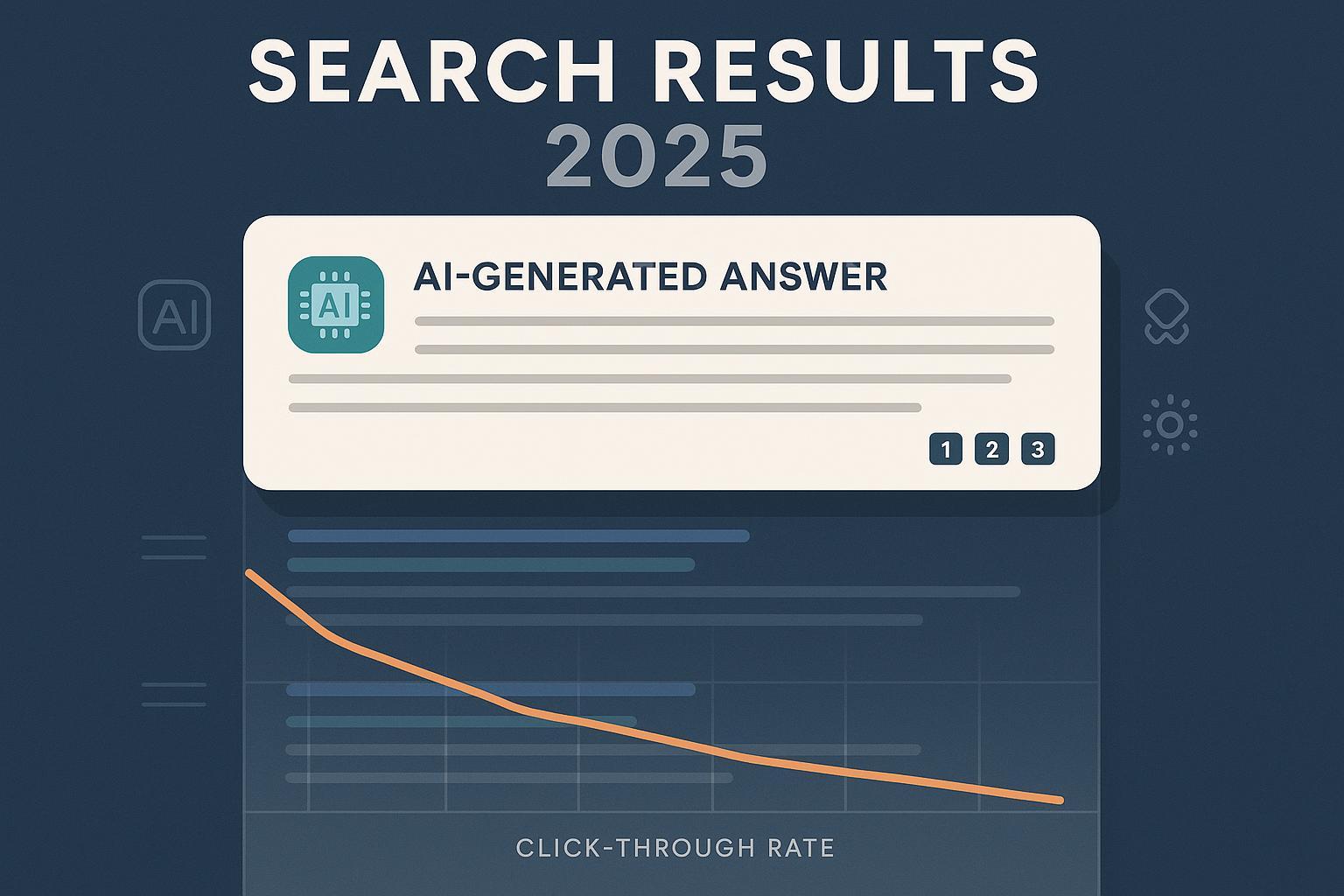

The search results page is no longer a simple list of blue links. In 2025, AI-generated answers (Google’s AI Overviews and AI Mode, Bing Copilot’s summaries, and Perplexity’s cited responses) now appear at the top of results for a large and growing share of queries. They compress organic real estate and change how users behave. For brands and publishers, the implications are immediate: fewer clicks on informational queries, more answer-first browsing, and a rising need to earn inclusion and favorable representation inside AI surfaces, not just to rank traditional pages.

What changed on the SERP in 2025

Across major markets, AI answers now appear on a meaningful and growing share of queries.

- In U.S. desktop results, AI Overviews appeared on 6.49% of searches in January 2025 and on 13.14% by March 2025, roughly doubling in two months. 88% of the queries that triggered them had informational intent, according to the Semrush 2025 AI Overviews study.

- In the UK, Sistrix panel data put prevalence at “over 18%” of queries by late March 2025, with fluctuations through mid-year, as summarized by Barry Adams in “Google’s AI adventure, year one” (2025).

- During Google’s March 2025 core update window, AI Overview triggers spiked in several categories: entertainment (+528%), restaurants (+387%), and travel (+381%), according to BrightEdge data covered in Search Engine Land’s April 2025 analysis.

Google, for its part, points to increased usage: “In our biggest markets like the U.S. and India, AI Overviews is driving over 10% increase in usage of Google for the types of queries that show AI Overviews,” according to the Google Search AI Mode update (May 2025). That rise in usage coexists with independent findings of lower click-through to publishers, covered next.

What the click data shows—and why it matters

Multiple 2025 measurements point to reduced organic clicks when AI summaries appear:

- Pew Research Center analyzed 68,879 Google searches made by 900 U.S. adults in March 2025. When an AI summary appeared, users clicked a traditional search result in 8% of visits, versus 15% when none appeared. They clicked a link inside the summary in only 1% of visits. They also ended their browsing session 26% of the time after seeing a summary, versus 16% without one (Pew’s July 2025 behavioral analysis).

- Ahrefs’ study of aggregated Google Search Console data for 300,000 informational U.S. keywords found that position‑1 CTR fell by about 34.5% when AI Overviews were present, comparing March 2024 with March 2025 (Ahrefs CTR impact study, April 2025).

- Amsive’s first‑party data (700,000 keywords across 10 sites) showed an average CTR decline of 15.49% when AI Overviews appeared, with results varying by segment (Amsive’s 2025 research write‑up).

- Publishers felt the drop. Digital Content Next reported that across 19 premium publishers, Google Search referrals fell by a median of roughly 10% year over year during an eight‑week period in mid‑2025 (DCN’s August 2025 report).

Taken together, these findings do not mean “no clicks.” Users still click through for depth, shopping, and verification. However, the findings do confirm a structural compression of referral traffic for many informational queries, and brands need to adapt.

Why verticals feel it differently

AI Overview triggers are uneven across categories, which means risk and opportunity vary:

- Travel: Given the March spike and heavy informational intent, travel queries frequently trigger AI answers that summarize destinations, itineraries, and visa rules. Implication: Build authoritative, citation‑friendly destination guides and safety information with clear sourcing.

- Restaurants: Local discovery queries often produce answers that summarize reviews, cuisines, and booking options. Implication: Keep menus current, add structured data, earn citations from reputable third parties, and monitor how AI answers describe your brand.

- Entertainment: With the steepest spike (+528%), synopsis and recommendation answers reduce clicks to individual title and publisher pages. Implication: Provide fact‑checked metadata and distinctive angles that merit citation in AI surfaces.

- Ecommerce: Transactional queries may trigger AI summaries less often, but many pre‑purchase informational queries (comparisons, how‑tos) do. Implication: Publish comparison matrices, specs, and care instructions with structured data and clear references.

Measure your “answer‑engine” share of voice

Winning in 2025 requires measuring more than rankings. Treat AI surfaces as channels with their own visibility, accuracy, and sentiment:

- Inclusion rate: Percent of tracked queries where your brand is named or cited inside AI answers (Google AI Overviews/AI Mode, Bing Copilot, Perplexity).

- Citation frequency and quality: Number of times your domain is linked or referenced; whether citations are prominent, accurate, and contextually favorable.

- Sentiment and framing: The tone of descriptions (positive/neutral/negative) and whether key facts are correct.

- Depth and attribution integrity: Are product specs, pricing, or claims correctly attributed to you? Are competitors prioritized?
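The four metrics above reduce to simple ratios over a logged query panel. The sketch below assumes a hypothetical record format (`AnswerObservation`). In this format, each row is one tracked query on one engine, already labeled by a reviewer. The field names and engine labels are illustrative only; they are not a Geneo or vendor API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AnswerObservation:
    """One captured AI answer for one tracked query on one engine (hypothetical schema)."""
    query: str
    engine: str            # label of your choosing, e.g. "google_aio", "bing_copilot", "perplexity"
    brand_mentioned: bool  # brand named anywhere in the answer text
    our_citations: int     # links or references pointing at our domain
    sentiment: str         # "positive" | "neutral" | "negative", labeled by a reviewer
    facts_correct: bool    # key facts checked against our canonical pages


def share_of_voice(observations: list[AnswerObservation]) -> dict[str, float]:
    """Aggregate a panel into inclusion, citation, sentiment, and accuracy metrics."""
    if not observations:
        raise ValueError("empty query panel")
    n = len(observations)
    # "Included" = brand named OR domain cited, matching the inclusion-rate definition.
    included = [o for o in observations if o.brand_mentioned or o.our_citations > 0]
    k = len(included)
    tones = Counter(o.sentiment for o in included)
    return {
        "inclusion_rate": k / n,
        "citations_per_answer": sum(o.our_citations for o in observations) / n,
        # Sentiment and accuracy are only meaningful where the brand actually appears.
        "positive_share": tones["positive"] / k if k else 0.0,
        "accuracy_rate": sum(o.facts_correct for o in included) / k if k else 0.0,
    }


# Toy panel with made-up observations, for illustration only.
panel = [
    AnswerObservation("q1", "perplexity", True, 2, "positive", True),
    AnswerObservation("q2", "perplexity", False, 0, "neutral", True),
    AnswerObservation("q3", "google_aio", True, 0, "negative", False),
    AnswerObservation("q4", "bing_copilot", False, 1, "neutral", True),
]
metrics = share_of_voice(panel)
```

The sketch computes sentiment and accuracy only over answers where the brand appears. This keeps a low inclusion rate from masking how the brand is framed when it is present.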

This is the foundation of Generative Engine Optimization (GEO): optimizing for inclusion and favorable representation in AI answers alongside traditional SEO. For a deeper primer, see the overview of Generative Engine Optimization and AI visibility, which shows how these metrics fit into a broader search program.

A practical workflow to monitor cross‑engine visibility

Here’s a neutral, replicable process teams can run weekly:

- Define a query panel by intent (informational, navigational, commercial) across priority categories.

- Capture AI answers on Google (AIO/AI Mode), Bing Copilot, and Perplexity. Record inclusion, citations, and sentiment.

- Diagnose misattributions (wrong specs, outdated facts) and sentiment drifts; queue corrections with evidence.

- Align content upgrades with the patterns you find: FAQs, how‑tos, comparison cards, and original data pages with clean references and schema.
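The diagnose step can be partly automated by comparing two weekly snapshots of the same panel. This is a minimal sketch that assumes a hypothetical `Snapshot` record keyed by (query, engine). It flags three cases: lost inclusion, a drop in reviewer-labeled sentiment, and included answers whose facts failed verification. The correction itself is left to a human.

```python
from dataclasses import dataclass

# Ordinal scale so sentiment labels can be compared week over week.
SENTIMENT_SCORE = {"negative": -1, "neutral": 0, "positive": 1}

Key = tuple[str, str]  # (query, engine)


@dataclass(frozen=True)
class Snapshot:
    """Hypothetical per-week record for one (query, engine) pair."""
    included: bool       # brand named or domain cited
    sentiment: str       # "positive" | "neutral" | "negative"
    facts_correct: bool  # key facts match canonical pages


def review_queue(prev: dict[Key, Snapshot], curr: dict[Key, Snapshot]) -> list[tuple[Key, str]]:
    """Return (query, engine) pairs that need human review, each with a reason code."""
    queue: list[tuple[Key, str]] = []
    for key, now in sorted(curr.items()):
        if now.included and not now.facts_correct:
            queue.append((key, "misattribution"))
        before = prev.get(key)
        if before is None or not before.included:
            continue  # new query or no prior inclusion: nothing to compare against
        if not now.included:
            queue.append((key, "lost_inclusion"))
        elif SENTIMENT_SCORE[now.sentiment] < SENTIMENT_SCORE[before.sentiment]:
            queue.append((key, "sentiment_drop"))
    return queue


# Made-up snapshots for illustration.
last_week = {
    ("q1", "perplexity"): Snapshot(True, "positive", True),
    ("q2", "google_aio"): Snapshot(True, "neutral", True),
}
this_week = {
    ("q1", "perplexity"): Snapshot(True, "neutral", True),
    ("q2", "google_aio"): Snapshot(False, "neutral", True),
    ("q3", "bing_copilot"): Snapshot(True, "positive", False),
}
queue = review_queue(last_week, this_week)
```

Each queued item should then be paired with its evidence (a screenshot and the source it contradicts) before any correction is requested.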

Platforms such as Geneo can support this workflow (disclosure: Geneo is our product). To see what the output looks like, review example query reports from a travel‑discovery panel, such as “vacation rental homes Caye Caulker Belize” or “beachfront cabins Belize island accommodation.” They illustrate visibility and sentiment patterns and show how answer surfaces present brands relative to competitors.

Content and technical adaptations that earn citations

AI answers favor clear, corroborated information that’s easy to attribute:

- Build citation‑friendly assets: Original data write‑ups, procedures, comparison matrices, and FAQs with transparent sources and dates.

- Strengthen entities and structure: Use descriptive headings, semantic HTML, and consistent naming for products and organizations.

- Implement structured data: FAQPage, HowTo, and Product where appropriate, with clean references and updated facts.
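For FAQ content, the schema.org `FAQPage` type wraps `Question` items, and each item carries an `acceptedAnswer` of type `Answer`. A minimal sketch that generates that JSON-LD from question/answer pairs is shown below. The helper names and the sample Q&A are hypothetical placeholders. Google's structured data guidelines expect markup to reflect content that is visible on the page. Markup also does not guarantee any particular display in search.

```python
import json


def faq_page_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Serialize (question, answer) pairs as schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Escape "</" so answer text can never close the surrounding <script> tag early;
    # "<\/" is still valid JSON and parses back to "</".
    return json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")


def as_script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script element that is placed in the page's HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


# Placeholder Q&A; in practice it must mirror FAQ text visible on the page itself.
faqs = [
    ("What time is check-in?", "Check-in starts at 3 p.m. local time."),
    ("Is parking included?", "Yes, one on-site parking space per booking."),
]
markup = as_script_tag(faq_page_jsonld(faqs))
```

Generating the markup from the same source as the visible FAQ keeps the two from drifting apart when answers are updated.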

- Publish verification hooks: Provide downloadable specs, policy pages, and method notes that AI engines can cite.

Google’s guidance on “succeeding in AI search” emphasizes helpfulness, freshness, and robust sourcing—review the Google Developers blog explainer (May 2025) and adapt your editorial standards accordingly.

Governance and risk: Detect, correct, prevent

Answer engines can misstate facts or frame brands unfavorably. Establish a governance plan:

- Detection: Monitor a representative query panel weekly; log inclusion, sentiment, and accuracy with timestamps.

- Correction: Maintain evidence packs (screenshots, sources) and use documented channels (feedback forms, publisher outreach) to request fixes.

- Prevention: Update official pages first; consolidate canonical facts (pricing, specs, policies), and ensure multiple reputable third‑party sources corroborate them.

- Escalation: Create an internal playbook that defines escalation triggers (e.g., safety claims, legal issues), contacts, and response-time SLAs.
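An escalation playbook can be kept as a small lookup table that maps issue types to severity, owner, and SLA. The sketch below is hypothetical throughout: the issue types, team names, and hour values are placeholders, not recommendations. It shows one way to turn a detected issue into a routed task with a deadline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Route:
    severity: str
    owner: str
    sla_hours: int


# Hypothetical playbook: triggers, owners, and SLAs are placeholders for your own values.
ESCALATION_POLICY: dict[str, Route] = {
    "safety_claim": Route("critical", "legal-and-comms", 4),
    "legal_issue": Route("critical", "legal-and-comms", 4),
    "pricing_error": Route("high", "product-marketing", 24),
    "spec_error": Route("high", "product-marketing", 24),
    "negative_framing": Route("medium", "search-team", 72),
}
DEFAULT_ROUTE = Route("low", "search-team", 168)  # anything unclassified: weekly review


def route(issue_type: str) -> Route:
    """Look up who owns an issue and how fast it must be handled."""
    return ESCALATION_POLICY.get(issue_type, DEFAULT_ROUTE)


def due_by(issue_type: str, detected_at: datetime) -> datetime:
    """Deadline = detection timestamp + the SLA hours for that issue type."""
    return detected_at + timedelta(hours=route(issue_type).sla_hours)
```

Routing unknown issue types to a default tier, rather than raising an error, keeps new kinds of AI misstatements from being silently dropped.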

Forecast the next 6–12 months

Expect continued iteration:

- Google will refine triggers and UX for AI Overviews/AI Mode, potentially expanding in some verticals while reducing exposure in sensitive areas.

- Bing Copilot’s Deep Research is geared toward exploratory, multi‑step queries; expect richer answer cards and more structured source presentation as features mature, consistent with the Microsoft “Your AI Companion” announcement (Apr 2025).

- Perplexity will likely deepen citation workflows and developer integrations, in line with its Deep Research product notes (Feb 2025).

Budgeting and mix: Model traffic scenarios by intent and vertical using prevalence data (Semrush, Sistrix/SEL) and CTR impacts (Pew, Ahrefs, Amsive). Shift more effort to owned audiences, direct subscriptions, and GEO alongside SEO.
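A simple scenario model blends two click rates by the share of searches that show an AI answer:

clicks = searches × (p × rate_with_AI + (1 − p) × rate_without_AI)

The sketch below plugs in figures cited earlier as rough, illustrative inputs. Pew's 8% vs. 15% are per-visit rates of clicking any traditional link, not position-specific CTR. Semrush's 13.14% is US prevalence across all tracked queries, so informational segments will likely run higher. The 50% row is purely hypothetical.

```python
def expected_clicks(searches: float, ai_share: float,
                    click_rate_with_ai: float, click_rate_without_ai: float) -> float:
    """Blended clicks = searches * (p * rate_with + (1 - p) * rate_without)."""
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be a fraction between 0 and 1")
    return searches * (ai_share * click_rate_with_ai + (1 - ai_share) * click_rate_without_ai)


# Inputs: Pew's 8% vs 15% link-click rates (per visit) and Semrush's 13.14% US
# prevalence; the 50% row is a hypothetical, AI-heavy informational segment.
scenarios = {
    "no AI answers": 0.0,
    "Semrush US, Mar 2025": 0.1314,
    "hypothetical 50% share": 0.5,
}
for label, share in scenarios.items():
    clicks = expected_clicks(100_000, share, 0.08, 0.15)
    print(f"{label:<24} {clicks:>10,.0f} clicks per 100k searches")
```

With these inputs, 13.14% prevalence works out to about 14,080 clicks per 100,000 searches, versus 15,000 with no AI answers: a decline of roughly 6%. To use a relative CTR delta such as Ahrefs' 34.5% or Amsive's 15.49% instead, set `click_rate_with_ai` to `click_rate_without_ai * (1 - delta)`. Running each segment with its own Search Console baseline is what turns this into a budgeting tool.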

Updated on 2025-10-04

- US prevalence: Semrush reported AIO triggers at 13.14% (Mar 2025) with strong informational bias.

- Category surges: BrightEdge data (via SEL) highlighted March spikes in entertainment, restaurants, travel.

- Behavior: Pew documented lower click rates and higher session endings when AI summaries appear.

Closing: Act now, measure relentlessly

The SERP is now an answer surface. Treat AI Overviews, Copilot, and Perplexity as channels you can measure and influence: put an answer‑engine share‑of‑voice program in place, upgrade citation‑friendly assets, and establish governance to correct inaccuracies. If your team needs a consolidated way to track cross‑engine visibility and sentiment, Geneo can support that monitoring workflow and multi‑brand collaboration. For more on GEO concepts and multi‑brand monitoring approaches, see the Generative Engine Optimization, SEO & AI content insights.