18 Best AI-Friendly List Formatting Practices for LLMs (2025)

18 actionable ways to create AI-friendly lists for LLMs—get 2025-ready with schema, chunking, and measurement tips. Boost AI citations now!

TL;DR

- Write with question‑led headings and place a 40–60 word direct answer first.

- Prefer Markdown bullets and shallow hierarchies; keep each bullet atomic.

- Add semantic HTML and list‑aware schema (FAQPage, HowTo, ItemList) with rich entity linking.

- Chunk content by tokens (~800–1200) with 10–20% overlap; add stable anchors and rerank retrieved chunks.

- Make AI crawlability explicit; include a compact FAQ and TL;DR; optimize for AEO.

- Track AI citations in Perplexity/AI Overviews, re‑date meaningful updates, and run periodic AI response audits.

Methodology and how we chose

- Criteria and indicative weights: LLM parse‑ability (30%), evidence and standards alignment (25%), low‑lift implementability (20%), impact on AI citations (15%), human readability (10%).

- Sources: We prioritized primary documentation and 2024–2025 analyses, including Azure/OpenAI/Anthropic retrieval guidance, Google Developers structured data docs, and 2025 AEO playbooks. Examples and code are trimmed for clarity; vendor specifics are subject to change.

A. Plan and format fundamentals

1) Why start each section with a question and a 40–60 word direct answer?

Leading with a natural‑language question and a crisp answer increases extractability for AI Overviews and answer engines, while helping readers scan. It mirrors the snippet‑friendly “inverted pyramid” style and sets up bullets and examples that follow.

- How to implement

  - Use question‑style H2s (“How do I…?”).

  - Place a 40–60 word answer immediately under the heading.

  - Expand with bullets, examples, and citations.

- Evidence: According to the CXL 2025 guide to AEO, direct answers and Q&A blocks improve inclusion in answer engines (CXL, 2025).

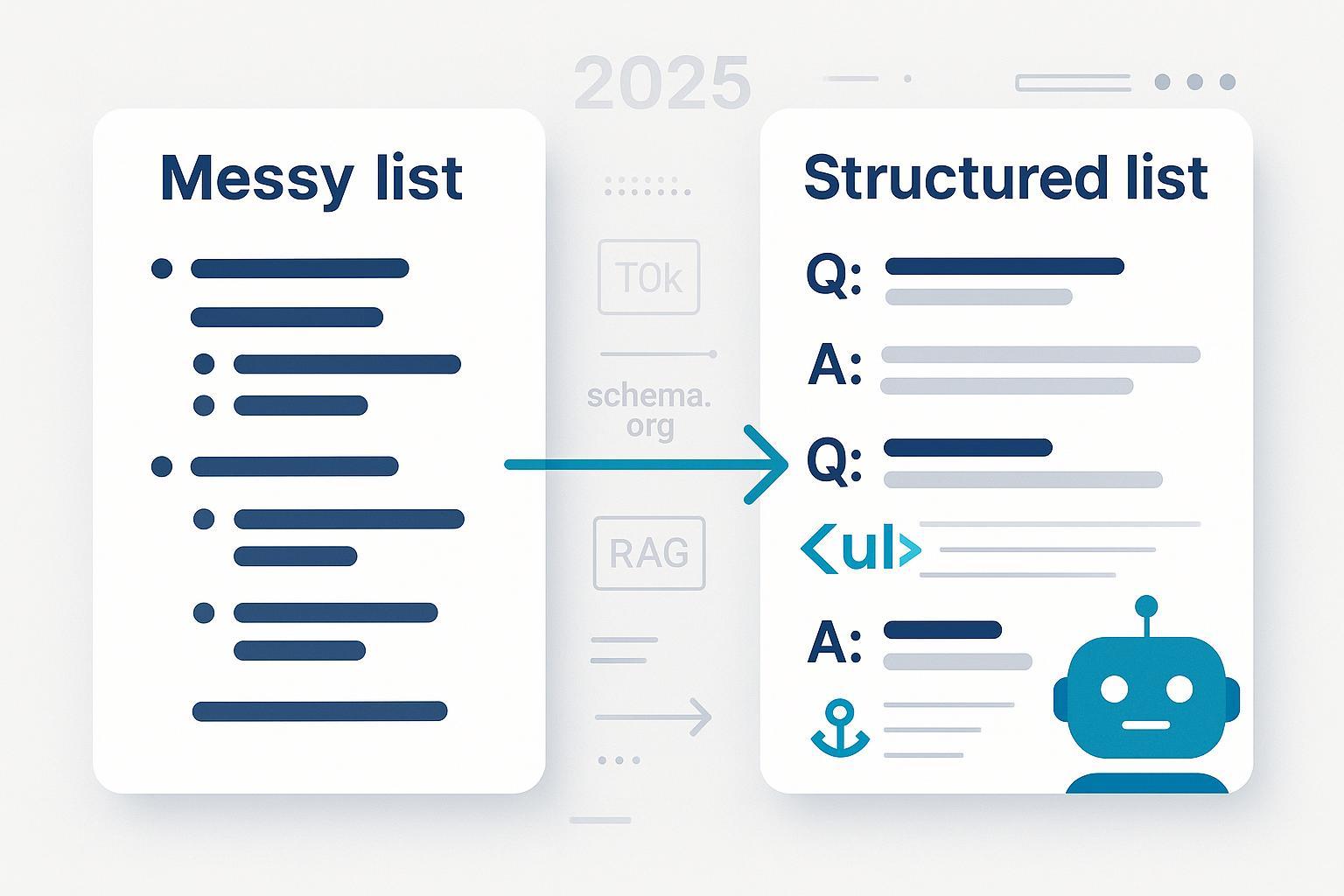

Before → After excerpt

- Before: “Best practices for lists” then a long paragraph.

- After: “How should I format lists for LLMs?” followed by a 55‑word answer, then bullets and schema where relevant.
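The question-plus-answer rule above can be checked mechanically. The following is a minimal sketch, assuming words are counted by whitespace; the function name `check_section` and its defaults are illustrative and not taken from any cited guide.

```python
def check_section(heading: str, answer: str, low: int = 40, high: int = 60) -> list[str]:
    """Return a list of problems; an empty list means the section passes."""
    problems = []
    # A question-led heading is assumed to end with a question mark.
    if not heading.strip().endswith("?"):
        problems.append("heading is not phrased as a question")
    # Crude word count: split on whitespace.
    words = len(answer.split())
    if not low <= words <= high:
        problems.append(f"answer has {words} words, expected {low}-{high}")
    return problems


# Example: the "Before" heading fails both checks.
print(check_section("Best practices for lists", "Lists matter."))
```

A check like this fits naturally into a pre-publish lint step, though the word range is a guideline rather than a hard limit.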

2) When should you prefer Markdown over JSON in human‑facing pages?

Use Markdown (headings, bullets, tables) for narrative content and reserve JSON for machine endpoints. Markdown is lighter on tokens and easier for models to parse, while JSON is ideal only when strict schemas are necessary.

- How to implement

  - Author in Markdown or semantic HTML; keep bullets shallow.

  - Use JSON‑LD for schema only; avoid embedding full data objects within prose.

- Evidence: Community benchmarks suggest Markdown can be roughly 15% more token‑efficient than JSON for equivalent content (OpenAI Community, 2024); engineering posts describe improved extraction when favoring HTML/Markdown over heavy JSON (Skyvern, 2024).

3) How atomic should bullets be—and how deep should you nest them?

Keep one idea per bullet and avoid nesting beyond two levels. Atomic bullets improve comprehension for readers and reduce ambiguity for LLMs that infer structure from headings and list markers.

- How to implement

  - One action or concept per bullet.

  - At most two levels of nesting.

  - Convert sprawling bullets into short sequences.

- Evidence: Google’s technical writing guidance advocates single‑idea list items and clear list structures (Google Developers—Lists and tables, 2025).
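The two-level nesting limit is easy to lint for. Below is a rough sketch that assumes Markdown bullets indented with two spaces per level; `bullet_depths` and `too_deep` are hypothetical helper names.

```python
def bullet_depths(markdown: str, indent_width: int = 2) -> list[int]:
    """Return the nesting level (1 = top level) of every bullet line."""
    depths = []
    for line in markdown.splitlines():
        stripped = line.lstrip(" ")
        # Only lines that start with a Markdown bullet marker are counted.
        if stripped.startswith(("- ", "* ", "+ ")):
            indent = len(line) - len(stripped)
            depths.append(indent // indent_width + 1)
    return depths


def too_deep(markdown: str, max_depth: int = 2) -> bool:
    """True when any bullet is nested deeper than max_depth levels."""
    return any(depth > max_depth for depth in bullet_depths(markdown))
```

Tabs and numbered lists are ignored here; a production linter would more likely work from a real Markdown parser.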

4) Why obsess over consistent terminology and entity names?

Consistent naming strengthens entity recognition and reduces homonym collisions in knowledge graphs and LLMs. Align names with identifiers and canonical profiles so answer engines attribute correctly.

- How to implement

  - Standardize product, org, and person names.

  - Link to canonical profiles (e.g., Wikidata) when relevant.

  - Use schema @id and sameAs for disambiguation.

- Evidence: Entity SEO resources stress consistent naming and identifiers to improve knowledge graph alignment (Search Engine Land, 2024).

5) Should you add a mini‑glossary for recurring terms and acronyms?

Yes—compact glossaries reduce ambiguity and help both humans and LLMs understand recurring jargon. They serve as anchorable definition chunks that retrieval systems can cite or summarize directly.

- How to implement

  - Add a “Glossary” subsection or sidebar for acronyms and recurring terms.

  - Keep definitions 1–2 sentences each; anchor the section for deep linking.

- Evidence: GEO/AEO checklists recommend FAQs and definitions to improve clarity and retrieval alignment (ToTheWeb, 2024).

B. Semantic and schema structuring

6) What semantic HTML patterns help machines and people equally?

Use a clean HTML5 hierarchy (article, section, h1–h3) and only add ARIA roles when native semantics are insufficient. Clear structure improves accessibility and machine parsing, defining topical boundaries for extraction.

- How to implement

  - One H1; logical H2/H3 progression.

  - Use section/article landmarks; avoid role overrides.

  - Keep IDs stable for anchors.

- Evidence: W3C’s HTML‑ARIA mappings emphasize semantic‑first practice; ARIA is additive, not a replacement (W3C HTML‑ARIA, 2024).

7) Which schema types best encode list content (and why)?

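Use JSON‑LD to mark up Q&A blocks (FAQPage), step lists (HowTo), collections/rankings (ItemList), and parent articles (Article/BlogPosting). This clarifies content types for search engines and AI systems. Rich‑result eligibility varies by type: Google now shows FAQ rich results only for a limited set of authoritative sites and has retired HowTo rich results, so treat this markup mainly as an aid to machine comprehension.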

- How to implement

  - Wrap Q&A with FAQPage → mainEntity → Question → acceptedAnswer.

  - Use HowTo for stepwise instructions; ItemList for ranked collections.

  - Validate with Google Rich Results Test.

- Evidence: Google recommends JSON‑LD and documents FAQPage, HowTo, and Article schemas with required properties (Google Developers—Structured data intro, 2025).

Minimal JSON‑LD examples

    {
      "@context": "https://schema.org",
      "@type": "FAQPage",
      "mainEntity": [{
        "@type": "Question",
        "name": "How should I format lists for LLMs?",
        "acceptedAnswer": {
          "@type": "Answer",
          "text": "Lead with a question and a 40–60 word direct answer, then use shallow Markdown bullets. Add semantic HTML and relevant schema (FAQPage, HowTo, ItemList)."
        }
      }]
    }

    {
      "@context": "https://schema.org",
      "@type": "ItemList",
      "itemListElement": [
        {"@type": "ListItem", "position": 1, "name": "Question-led headings"},
        {"@type": "ListItem", "position": 2, "name": "Markdown bullets"}
      ]
    }
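If the Q&A pairs and list items already live in your CMS, markup of this shape can be generated rather than hand-written. The following is a sketch, assuming the content is available as plain Python data; `faq_jsonld` and `item_list_jsonld` are illustrative names, not part of any library.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """Build a FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def item_list_jsonld(names: list[str]) -> dict:
    """Build an ItemList whose positions start at 1 and follow list order."""
    return {
        "@context": "https://schema.org",
        "@type": "ItemList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name}
            for i, name in enumerate(names, start=1)
        ],
    }


# Serialize for embedding in a <script type="application/ld+json"> tag.
print(json.dumps(item_list_jsonld(["Question-led headings", "Markdown bullets"]), indent=2))
```

Generating the markup from the same source as the visible text keeps the two from drifting apart.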

8) How do Organization/Person and sameAs strengthen attribution?

Enrich Organization/Person entities with sameAs links to canonical profiles and use @id to reference them across pages. Strong entity linking improves disambiguation and authority signals for answer engines.

- How to implement

  - Add Organization/Person JSON‑LD site‑wide.

  - Include sameAs to official profiles (e.g., LinkedIn, Wikidata).

  - Reference authors and publishers via @id on each article.

- Evidence: Entity linking with sameAs helps disambiguation and authority (SchemaApp, 2024).

Example (excerpt; the Wikidata and LinkedIn URLs are placeholders to replace with your organization's own profiles)

    {
      "@context": "https://schema.org",
      "@type": "Organization",
      "@id": "https://example.com/#org",
      "name": "Example Co",
      "url": "https://example.com",
      "sameAs": [
        "https://www.wikidata.org/wiki/Q42",
        "https://www.linkedin.com/company/example-co"
      ]
    }

9) Why aim for high property completion and interlinked entities?

Completing required and recommended properties and connecting entities via about/mentions/@id builds an internal knowledge graph. This supports rich result eligibility and clearer AI comprehension.

- How to implement

  - Fill recommended fields (author, datePublished, dateModified, headline, description, image).

  - Use about/mentions to connect related entities.

  - Validate via Google Rich Results Test and Schema Markup Validator.

- Evidence: Structured data quality and @id reuse improve coherence and visibility (MarTech on structured data quality, 2024).
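Property completion can be spot-checked before running the validators. Here is a small sketch, assuming the Article markup is available as a Python dict; the `RECOMMENDED` tuple mirrors the fields listed above, and `missing_properties` is a hypothetical helper.

```python
RECOMMENDED = ("author", "datePublished", "dateModified", "headline", "description", "image")


def missing_properties(node: dict, recommended: tuple[str, ...] = RECOMMENDED) -> list[str]:
    """Return the recommended properties that are absent or empty."""
    return [name for name in recommended if not node.get(name)]


# Illustrative Article that reuses the organization's @id for author and publisher.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "@id": "https://example.com/post#article",
    "headline": "AI-friendly list formatting",
    "author": {"@id": "https://example.com/#org"},
    "publisher": {"@id": "https://example.com/#org"},
    "datePublished": "2025-01-15",
    "about": [{"@type": "Thing", "name": "Large language model"}],
}

print(missing_properties(article))
```

This only reports gaps; it does not replace the Rich Results Test or Schema Markup Validator, which check types and value formats.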

C. Retrieval and RAG alignment

10) How should you chunk list sections by tokens (and why)?

Chunk content into ~800–1200 tokens with 10–20% overlap while respecting logical boundaries. This preserves context across chunk edges and improves retrieval precision and grounded generation.

- How to implement

  - Split at sentence/paragraph boundaries.

  - Start with 800–1200 tokens; test 10–20% overlap.

  - Tune by corpus size and model window.

- Evidence: Vendor and community guidance commonly targets 512–1200 tokens with overlap; see Azure concepts and Pinecone/Weaviate/NVIDIA analyses (Azure—Use your data concepts, 2025; Weaviate chunking strategies, 2024).

Chunking sketch

    [Chunk 1: ~1000 tokens]
    <...content...>
    [Overlap ~150–200 tokens]
    [Chunk 2: ~1000 tokens]
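The sliding window in this sketch can be expressed in a few lines. The version below is a simplified illustration that assumes the text has already been tokenized into a list (whitespace splitting is a rough stand-in for a model tokenizer) and that ignores sentence boundaries; `chunk_tokens` is a hypothetical name.

```python
def chunk_tokens(tokens: list, size: int = 1000, overlap: int = 150) -> list[list]:
    """Split tokens into windows of `size` that share `overlap` tokens with the previous window."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    step = size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # the last window already reaches the end of the input
    return chunks


# Rough usage: whitespace "tokens" for a 2,500-word text.
words = ("lorem " * 2500).split()
print([len(chunk) for chunk in chunk_tokens(words)])
```

To respect logical boundaries as recommended above, a fuller implementation would snap each window edge to the nearest sentence or paragraph break instead of cutting at a fixed offset.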

11) Why add headings/IDs and stable anchors for addressability?

Stable anchors let users and answer engines cite precise sections. They also enable deterministic linking in audits and monitoring tools—and they make your own RAG pipelines more reliable.

- How to implement

  - Assign predictable IDs (e.g., #faq, #glossary) and keep them stable.

  - Match headings to common conversational queries.

- Evidence: Documentation platforms emphasize anchorable sections for deep linking and reuse (Atlassian documentation tooling, 2024).
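One way to get predictable IDs is to derive a slug from each heading once and then store it. The following is a sketch under that assumption; `slugify` and `assign_ids` are illustrative helpers, and the duplicate-suffix scheme is a design choice rather than a standard.

```python
import re
import unicodedata


def slugify(heading: str) -> str:
    """Lowercase ASCII slug: runs of non-alphanumerics become single hyphens."""
    ascii_text = unicodedata.normalize("NFKD", heading).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def assign_ids(headings: list[str]) -> list[str]:
    """Give every heading an ID, adding -2, -3, ... to repeated slugs."""
    seen: dict[str, int] = {}
    ids = []
    for heading in headings:
        base = slugify(heading) or "section"
        seen[base] = seen.get(base, 0) + 1
        ids.append(base if seen[base] == 1 else f"{base}-{seen[base]}")
    return ids


print(assign_ids(["FAQ", "Glossary", "How should I format lists for LLMs?"]))
```

Because a slug changes whenever the heading text changes, stability comes from persisting the generated ID (for example in front matter) rather than regenerating it on every build.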

12) How do you control hallucinations with reranking and top‑K caps?

Use a reranking step after initial retrieval and cap top‑K and total tokens included in prompts. This filters noise, preserves budget, and improves factual grounding.

- How to implement

  - Retrieve K=20; rerank; keep top 5–10.

  - Cap context at a budget (e.g., 1k–4k tokens) depending on your model.

  - Consider lightweight summaries for near‑miss chunks.

- Evidence: Modular retrieval pipelines with verification reduce context size while improving accuracy; vendor docs stress token budgeting (Azure—On‑your‑data best practices, 2025; Anthropic—Contextual Retrieval, 2024).
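The retrieve, rerank, and cap steps can be sketched as a small selection function. This example assumes each candidate already carries a reranker score (the scoring model itself is out of scope) and approximates token cost by whitespace word count; `Chunk` and `select_context` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    score: float  # assumed reranker relevance score; higher is better


def select_context(candidates: list[Chunk], top_k: int = 5, token_budget: int = 2000) -> list[Chunk]:
    """Keep the top_k highest-scoring chunks that fit within the token budget."""
    ranked = sorted(candidates, key=lambda chunk: chunk.score, reverse=True)
    selected, used = [], 0
    for chunk in ranked[:top_k]:
        cost = len(chunk.text.split())  # rough stand-in for a tokenizer count
        if used + cost > token_budget:
            continue  # skip chunks that would exceed the budget
        selected.append(chunk)
        used += cost
    return selected
```

Skipped chunks are the natural candidates for the lightweight summaries mentioned above.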

D. Publication, AEO, and AI Overviews

13) What crawlability signals should you set for AI agents?

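Make your robots.txt explicit for the crawlers and control tokens that feed AI systems (e.g., GPTBot, ClaudeBot, PerplexityBot, CCBot, Bingbot, and Google‑Extended, which is a robots.txt control token rather than a separate crawler). llms.txt is an emerging convention that is not consistently honored, so keep robots.txt authoritative and review it periodically.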

- How to implement

  - List relevant AI user‑agents you allow.

  - Avoid accidental blocks in subdirectories.

  - Re‑check after site moves or CDN changes.

- Evidence: Practical guides note robots.txt remains the primary control and AI bot behavior is evolving; treat llms.txt as advisory for now (MovingTrafficMedia, 2024).

robots.txt excerpt

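    # Named AI crawlers. A crawler follows only the most specific group that
    # matches it, so the /private/ rule is repeated here instead of being
    # inherited from the "*" group below.
    User-agent: GPTBot
    User-agent: ClaudeBot
    User-agent: PerplexityBot
    User-agent: Google-Extended
    User-agent: Bingbot
    User-agent: CCBot
    Disallow: /private/
    Allow: /

    # All other crawlers
    User-agent: *
    Disallow: /private/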
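Rules like these are worth testing, because a crawler that matches a named group does not also inherit rules from the `*` group. The sketch below uses Python's standard `urllib.robotparser` to show the effect; the two robots.txt strings and the `allowed` helper are illustrative. Python's parser applies the first matching rule within a group, which is why the `Disallow` line comes before `Allow: /` in the second string.

```python
from urllib.robotparser import RobotFileParser

# A named group with only "Allow: /" does not pick up the "*" group's Disallow.
LEAKY = """\
User-agent: GPTBot
Allow: /

User-agent: *
Disallow: /private/
"""

# Repeating the Disallow inside the named group closes the gap.
EXPLICIT = """\
User-agent: GPTBot
Disallow: /private/
Allow: /

User-agent: *
Disallow: /private/
"""


def allowed(robots_txt: str, agent: str, url: str) -> bool:
    """Parse robots.txt text and report whether `agent` may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


private_url = "https://example.com/private/report"
print(allowed(LEAKY, "GPTBot", private_url))     # the named bot is not blocked here
print(allowed(EXPLICIT, "GPTBot", private_url))  # blocked once the rule is repeated
```

Real crawlers may resolve rule precedence differently from this parser, so treat the result as a sanity check rather than a guarantee of crawler behavior.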

14) Why include a compact FAQ and TL;DR near the top?

Answer engines and AI Overviews often extract from concise summaries and Q&A blocks. A TL;DR (50–70 words) plus a short FAQ mirrors those patterns, improving both UX and machine reuse.

- How to implement

  - Add a TL;DR after the H1.

  - Include 3–6 FAQs with 40–60 word answers; consider FAQPage schema.

- Evidence: AEO playbooks highlight TL;DR and FAQs as useful structures for inclusion in AI results (Inbound Design Partners, 2024; Big Human, 2024).

15) How do you write for answer engines (AEO) without hurting human UX?

Provide direct answers, use long‑tail phrasing that matches conversational queries, and surface trust signals (author bios, citations, dates). Keep prose first and use compact lists to avoid “over‑structuring.”

- How to implement

  - Start sections with the answer; follow with bullets and evidence.

  - Add author/publisher schema and visible dates.

  - Maintain natural language and avoid keyword stuffing.

- Evidence: 2025 AEO guidance emphasizes clear answers, schema, and freshness for AI inclusion (AiMultiple, 2025).

E. Measurement and iteration

16) How should you track AI citations and mentions across engines?

Treat AI citations in Perplexity and AI Overviews as leading indicators. Ensure you’re indexable by Bing/IndexNow, then monitor how and when your pages are cited—and which snippets get reused.

- How to implement

  - Check that Bing indexing and robots access are healthy.

  - Track Perplexity citations on target queries; review snippet text and links.

  - Record changes when you adjust structure or schema.

- Evidence: Visibility in Perplexity depends on crawl sources and freshness; citations are shown inline and in footnotes (WebFX, 2025; Perplexity Pages overview, 2025).

Tools and resources for measurement (neutral)

- Geneo — Monitor AI search visibility across ChatGPT/Perplexity/AI Overviews, analyze sentiment, keep history, and get optimization suggestions; supports multi‑brand collaboration. Disclosure: Geneo is our product.

- Alternatives (selection; capabilities change over time)

- Rankability AI Analyzer — Track inclusion in AI answers; better when you prioritize simple dashboards over multi‑brand support.

- LLMrefs — Catalogs LLM citations; useful for research teams validating references across engines.

- General telemetry: Google Search Console and Bing Webmaster Tools for crawl/index health, a prerequisite to AI visibility.

17) Why re‑date sections and add “subject to change” where needed?

Freshness and transparency matter for answer engines. When you make substantive updates, reflect them in the visible date and in JSON‑LD (datePublished/dateModified), and consider a brief change log. Add “subject to change” to volatile facts like pricing.

- How to implement

  - Update dates only for meaningful edits; avoid superficial “date gaming.”

  - Maintain dateModified in schema and visible UI.

  - Add a short “Changes” note when you alter steps or code.

- Evidence: Structured data docs and SEO analyses advise aligning visible and structured dates to signal genuine freshness (Google Developers—Structured data intro, 2025; ClickRank, 2024).
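Keeping the visible date and the structured date aligned can be automated. The following is a minimal sketch, assuming both dates are date-only ISO 8601 strings (YYYY-MM-DD); `date_problems` is a hypothetical helper.

```python
from datetime import date


def date_problems(jsonld: dict, visible_updated: str) -> list[str]:
    """Compare JSON-LD dates with the date shown on the page."""
    problems = []
    published = date.fromisoformat(jsonld["datePublished"])
    modified = date.fromisoformat(jsonld["dateModified"])
    if modified < published:
        problems.append("dateModified is earlier than datePublished")
    if modified != date.fromisoformat(visible_updated):
        problems.append("visible date does not match dateModified")
    return problems


print(date_problems({"datePublished": "2025-01-15", "dateModified": "2025-03-02"}, "2025-02-20"))
```

Pages that publish full timestamps with time zones would need `datetime.fromisoformat` and a comparison rule that tolerates small offsets.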

18) How do you run periodic AI response audits and adapt your structures?

Auditing how AI summarizes your pages reveals hallucinations, omissions, or misattribution. Standardize prompts, track KPIs (accuracy, relevance, concision), and iterate headings, bullets, and schema based on findings.

- How to implement

  - Weekly prompts: “Summarize this page in 100 words; list 3 key facts with citations.”

  - Compare AI output vs. your TL;DR/FAQs; log issues and fixes.

  - Monthly: Regenerate chunks if the structure changed, add anchors for new sections while keeping existing IDs stable, and validate schema.

- Evidence: AEO workflows highlight monitoring and iteration as critical to sustaining visibility in AI results (CXL traffic analysis, 2025).
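A first-pass accuracy score for these audits can be as simple as checking whether the AI summary mentions each key fact. The sketch below assumes you maintain a small map from fact labels to the keywords that must appear; keyword matching is a crude proxy for accuracy, and `fact_coverage` and `coverage_rate` are illustrative names.

```python
def fact_coverage(summary: str, facts: dict[str, list[str]]) -> dict[str, bool]:
    """Map each fact label to True when all of its keywords appear in the summary."""
    text = summary.lower()
    return {
        label: all(keyword.lower() in text for keyword in keywords)
        for label, keywords in facts.items()
    }


def coverage_rate(coverage: dict[str, bool]) -> float:
    """Share of key facts found in the summary (0.0 when there are no facts)."""
    return sum(coverage.values()) / len(coverage) if coverage else 0.0


facts = {"answer_length": ["40", "60"], "overlap": ["overlap"]}
result = fact_coverage("Use a 40-60 word answer first.", facts)
print(result, coverage_rate(result))
```

Logging this rate per page and per week gives a trend line; missed facts still need a human read to tell an omission from a hallucination.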

Suggested weekly/monthly SOP

- Weekly

  - Audit 5–10 priority pages in Perplexity and AI Overviews; capture citations.

  - Spot‑check answer accuracy; flag hallucinations and unclear bullets.

  - Ship minor fixes (headings, bullet atomicity, TL;DR clarity).

- Monthly

  - Review chunking parameters and anchors; adjust overlap if retrieval drifts.

  - Validate schema with Google Rich Results Test; fix property gaps.

  - Update dates and change logs for substantive edits.

Bonus: before/after list snippet for LLM parse‑ability

Before (hard to parse)

- Best practices

- Overview of several approaches including schema, markup, formatting, and more for better AI performance in search engines and tools like ChatGPT.

- Additional notes with examples and considerations for future updates.

After (LLM‑friendly)

How should I structure a best‑practices list for LLMs? In 40–60 words, give the direct answer first. Follow with shallow, atomic bullets, then add schema and stable anchors. Keep consistent terminology and include a compact FAQ/TL;DR. Chunk content by tokens with overlap and rerank retrieved chunks.

- Use Markdown bullets; avoid deep nesting.

- Add FAQPage/HowTo/ItemList JSON‑LD.

- Assign stable IDs to sections for citations.

Quick implementation checklist

- Question‑led H2s with 40–60 word direct answers

- Shallow, atomic bullets in Markdown

- Semantic HTML5 hierarchy; stable anchors/IDs

- JSON‑LD: FAQPage, HowTo, ItemList; Organization/Person with sameAs and @id

- High property completion; about/mentions links

- Chunk ~800–1200 tokens; 10–20% overlap; rerank and cap top‑K

- Robots.txt explicit for AI crawlers; avoid accidental blocks

- TL;DR and compact FAQ near the top

- Track AI citations; iterate monthly; re‑date substantive updates

Key takeaways

- Put answers first, then structure for machines without sacrificing human flow.

- Treat schema and entity linking as your content’s API to answer engines.

- Align chunks and anchors with how retrieval actually works.

- Monitor citations and iterate—AI visibility is a moving target in 2025.