How AI Systems Select Brand Examples in Answers

Learn how AI engines choose brand examples in answers vs. citations. Explore key selection signals and ways to optimize your brand's inclusion.

When an AI answer highlights three brands as “examples,” why were those three chosen—and not yours? That choice shapes audience perception, click‑throughs, and even category leadership in AI search. This article explains how “AI brand example selection” works across the major answer engines, what signals you can influence, and how to measure progress without falling for silver bullets.

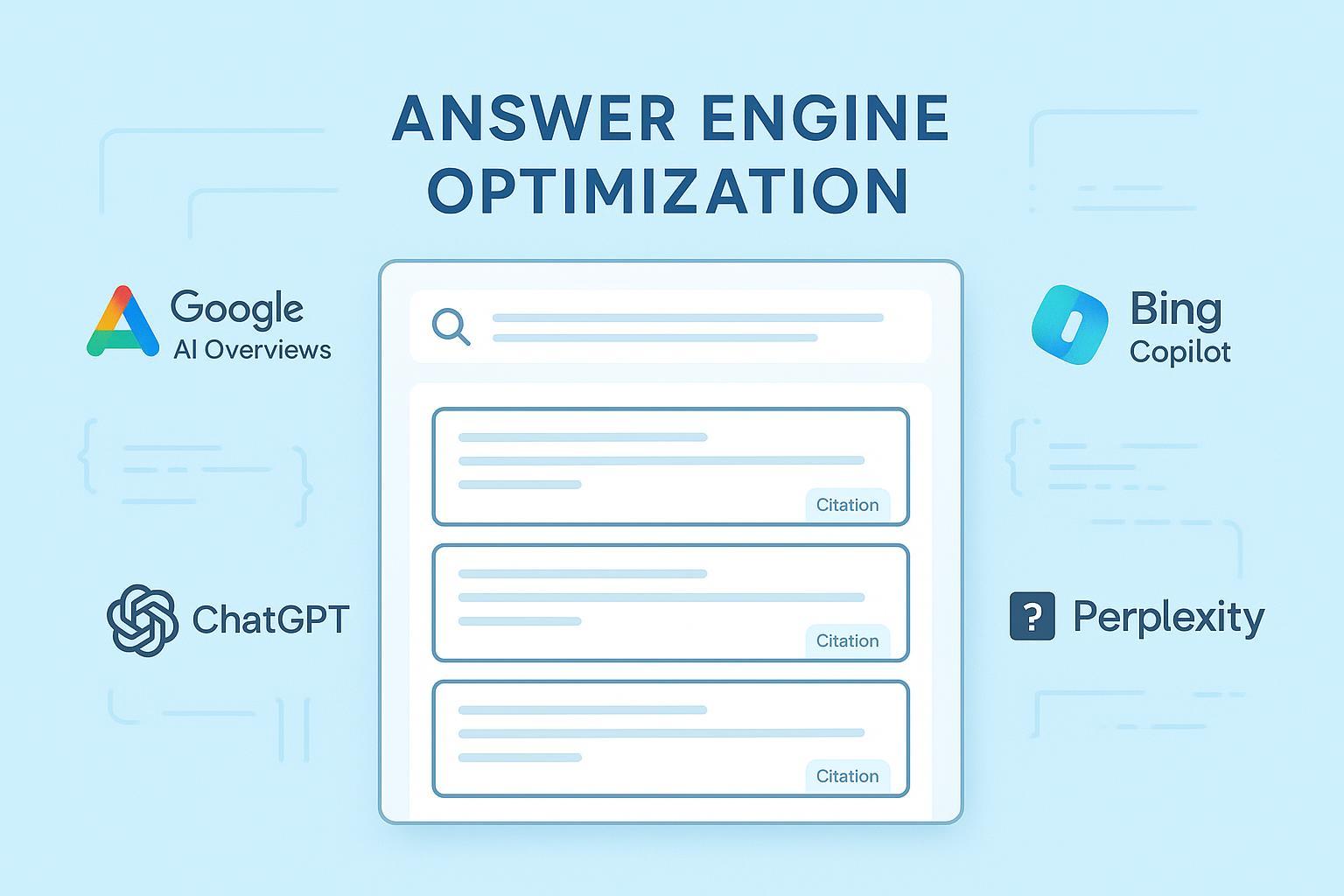

What “brand example selection” means (and how it differs from citations)

A brand example is the entity the AI names in the body of its answer—e.g., “For email platforms, consider Brand A, Brand B, and Brand C.” A citation, by contrast, is a supporting link or footnote showing where the AI sourced evidence. You can be cited without being named, and you can be named without earning the most prominent citation slot. The difference matters because being named puts your brand in the spotlight; a buried footnote might still pass authority, but it rarely drives attention.

Think of it this way: citations are the bibliography; brand examples are the panelists on stage. Your goal isn’t only to appear in the footnotes—it’s to be the example the model reaches for when it needs to make the answer concrete.

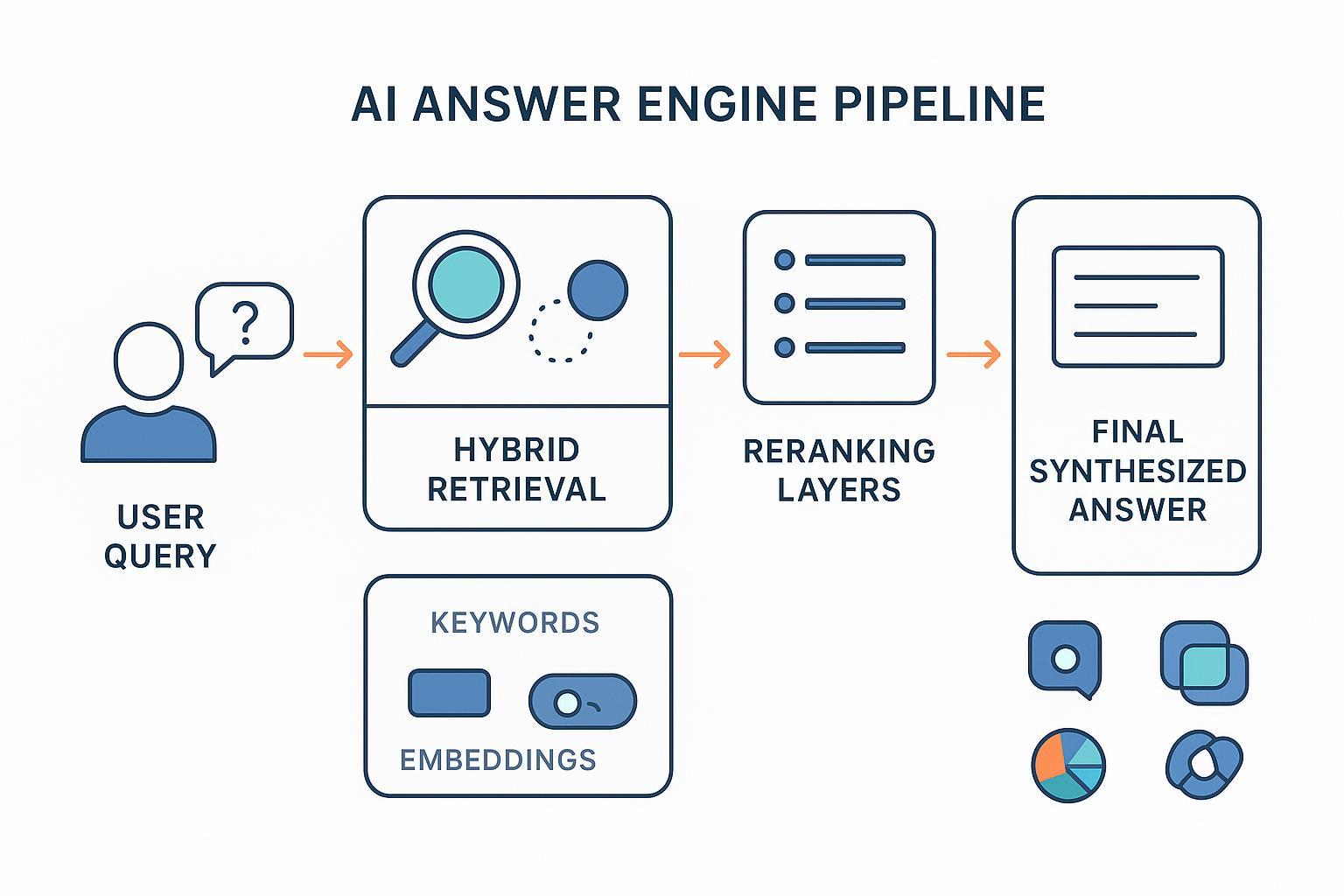

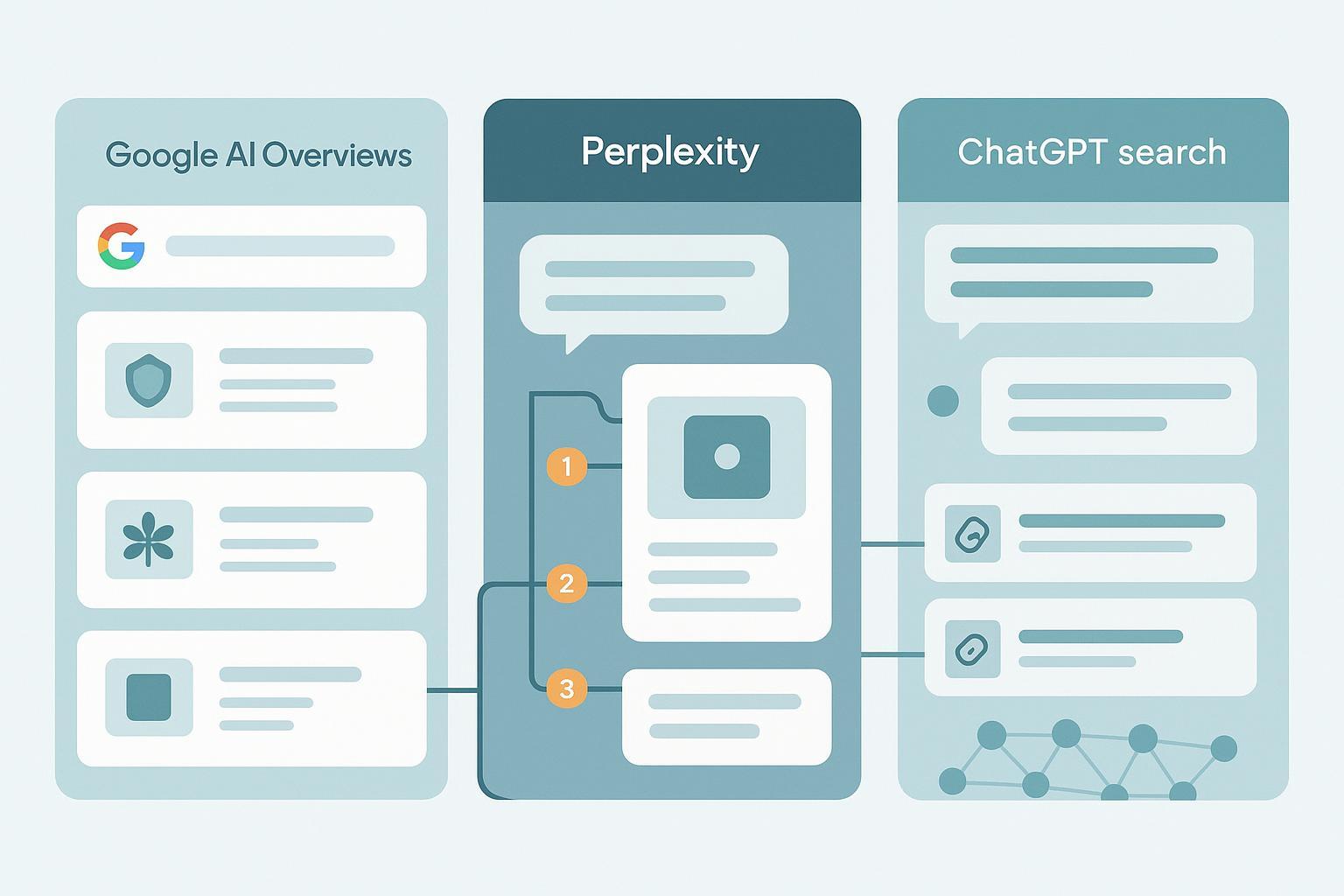

How the major engines source and cite brands

Different platforms retrieve information and attribute sources in different ways. Those mechanics affect which brands show up as examples.

- Google AI Overviews and AI Mode synthesize an answer and show “supporting links.” Google notes that eligibility is tied to standard Search: pages must be indexed and able to appear with a snippet; there are no extra technical requirements unique to AI Overviews. See Google Search Central’s guidance in AI features and your website, and Google’s 2025 post Succeeding in AI search, which describes broader link discovery via query fan‑out and a focus on helpful, diverse sources.

- Perplexity retrieves from the live web and places explicit, numbered citations in the answer. The company explains that transparency and credit are core behaviors, and modes like Deep Research affect depth and breadth of retrieval. Reference: Perplexity’s Help Center article How does Perplexity work?

- ChatGPT with web search (SearchGPT) can search the web and include in‑line attributions and links to relevant sources; behavior varies by model and partner search providers. Reference: OpenAI’s announcement Introducing ChatGPT Search.

Below is a quick comparison of what this implies for brands.

| Engine | Retrieval behavior | Citation behavior | Implication for brands |
|---|---|---|---|
| Google AI Overviews / AI Mode | Synthesizes from web pages eligible in standard Search; expands queries (fan‑out) to find supportive pages | Displays multiple supporting links near the answer | Standard SEO fundamentals and entity clarity are prerequisites. Clear, snippet‑friendly content improves chances of being cited and named. |
| Perplexity | Live web retrieval with modes that affect depth | Always shows explicit, numbered citations | Concise claim‑evidence pairs and up‑to‑date pages tend to be cited; freshness and clear sourcing help. |
| ChatGPT with search | Web retrieval via partner search providers | In‑line attributions/links within the chat turn | Copy‑ready facts and well‑titled, authoritative pages raise the odds of being referenced and named. |

For definitive details, see Google’s AI features and your website and Succeeding in AI search, Perplexity’s Help Center How does Perplexity work?, and OpenAI’s Introducing ChatGPT Search.

Cross‑platform signals you can influence (probabilistic, not guaranteed)

No platform publishes a deterministic formula for which brands will be named as examples. Still, several well‑documented and widely observed signals correlate with inclusion. Treat them as levers—not certainties.

- Authority and helpfulness. Answer engines draw on source pools shaped by search quality systems that reward people‑first, well‑sourced content. Demonstrate real expertise with bylines, methods, and original insights. Google reiterates this in its 2025 guidance Succeeding in AI search.

- Indexability and snippet eligibility. If a page can’t be indexed or can’t show a snippet (due to blocking or restrictive directives), it’s unlikely to appear as a supporting link in Google’s AI features. See Google’s AI features and your website.

- Entity clarity and structured data. Make your brand easy to disambiguate. Use Organization schema, consistent naming, and authoritative sameAs profiles; structured data helps Search understand entities. See Google’s Introduction to structured data.

- Recency and comprehensiveness. For time‑sensitive topics, updated pages are more likely to be retrieved (especially in Perplexity). For evergreen queries, depth and coverage can win.

- Geographic and intent alignment. If the query implies a location, emphasize local relevance. Match your content format to the intent (definition, how‑to, comparison, pros/cons) to improve answerability.

- Source diversity and policy compliance. Engines prefer a range of trustworthy sources and filter out content that conflicts with safety and quality policies. Avoid scaled, low‑value pages and manipulative tactics.
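To make the entity‑clarity lever more concrete, here is a minimal sketch that builds schema.org Organization markup as JSON‑LD. The brand name, URLs, and profile links are placeholders rather than real entities, and the choice of properties is an assumption about what a typical brand page might include; schema.org and Google's structured data documentation remain the authoritative references.

```python
import json


def organization_jsonld(name, url, logo, same_as):
    """Build a schema.org Organization object and return it as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,             # use the exact brand name used everywhere else
        "url": url,
        "logo": logo,
        "sameAs": list(same_as),  # authoritative profiles that refer to the same entity
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = organization_jsonld(
    name="Example Brand",
    url="https://www.example.com",
    logo="https://www.example.com/logo.png",
    same_as=[
        "https://www.linkedin.com/company/example-brand",
        "https://en.wikipedia.org/wiki/Example_Brand",
    ],
)

# The JSON-LD is typically embedded in the page inside a script tag.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Generating the markup from one function is a simple way to keep the name and sameAs links consistent across pages, which is the point of the entity‑clarity signal.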

Platform‑aware content patterns

If you want to be the “example” a system reaches for, present information in shapes the engines can easily reuse.

- Google AI Overviews / AI Mode. Use clear headings, short definition blocks, step‑by‑step sections, and concise pros/cons. Surface the answer near the top, then add depth. Make distinct, snippet‑ready segments that can be quoted or summarized.

- Perplexity. Write claim–evidence pairs: make a specific statement and support it with a cited source on the same page. Keep summaries tight so your page is quotable without extra interpretation.

- ChatGPT with search. Place copy‑ready facts close to their sources and use descriptive titles and meta text so your page looks credible in the attribution preview. Include stat lines and definitions that are easy to lift.

Measurement: verify inclusion and track progress

How do you know whether you’re being named, merely cited, or missing entirely? And if an answer mentions a rival instead of you, what changed since last month?

Key KPIs to track across engines:

- Mention frequency by query cluster (named example vs. passing mention vs. not present)

- Citation prominence (appears near the top vs. footnote area; number of competing sources cited)

- Recommendation type (explicit positive recommendation, neutral inclusion, or negative warning)

- Sentiment and rationale expressed in the answer

- Share of voice within intent‑defined clusters (e.g., “best,” “compare,” “how to”)

- Freshness lag (time from page update to appearance in answers)
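As a rough illustration of how two of these KPIs could be computed, the sketch below derives share of voice and a named/cited/absent breakdown from logged observations. The row layout, status labels, brand names, and function names are all assumptions made for this example, not a standard format.

```python
from collections import Counter, defaultdict

# Hypothetical log rows: (query_cluster, engine, brand, status),
# where status is "named", "cited", or "absent".
observations = [
    ("best", "perplexity", "YourBrand", "named"),
    ("best", "perplexity", "RivalA", "named"),
    ("best", "chatgpt", "RivalA", "named"),
    ("best", "chatgpt", "YourBrand", "cited"),
    ("compare", "google", "YourBrand", "absent"),
    ("compare", "google", "RivalA", "named"),
]


def share_of_voice(rows):
    """Per cluster, each brand's share of all named-example slots."""
    named = defaultdict(Counter)
    for cluster, _engine, brand, status in rows:
        if status == "named":
            named[cluster][brand] += 1
    return {
        cluster: {brand: n / sum(counts.values()) for brand, n in counts.items()}
        for cluster, counts in named.items()
    }


def status_breakdown(rows, brand):
    """Per cluster, how often one brand was named, cited, or absent."""
    out = defaultdict(Counter)
    for cluster, _engine, row_brand, status in rows:
        if row_brand == brand:
            out[cluster][status] += 1
    return {cluster: dict(counts) for cluster, counts in out.items()}


sov = share_of_voice(observations)
breakdown = status_breakdown(observations, "YourBrand")
print(sov)
print(breakdown)
```

With this sample data, YourBrand holds one third of the named slots in the "best" cluster and none in "compare". Counting only named examples toward share of voice is a design choice here; a team could just as reasonably weight citations too.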

A tool‑agnostic workflow you can run today:

- Curate a high‑value query set. Group by intent (definitions, comparisons, how‑tos) and by funnel stage. If you’re new to the concept, see this primer on AI visibility in AI search.

- Run manual spot checks in each engine. In Google AI Overviews/AI Mode, Perplexity, and ChatGPT with search, capture screenshots of answers and the cited links. Log timestamps and the exact prompt.

- Record mentions and sentiment. Keep a simple spreadsheet of whether you were named, merely cited, or absent, and note the tone (positive/neutral/negative) with a short rationale.

- Compare against competitors. Track which rivals are being named for the same query cluster and how often.

- Repeat monthly and after updates. Re‑test following major content updates or reported algorithm shifts.
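The logging steps in this workflow could be captured with a small script like the sketch below, which validates each spot check and writes it as a spreadsheet‑friendly CSV row. The field names, allowed labels, engine identifiers, and file paths are assumptions for illustration; adapt them to whatever your team already records.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import datetime, timezone

VALID_STATUS = {"named", "cited", "absent"}
VALID_SENTIMENT = {"positive", "neutral", "negative"}


@dataclass
class SpotCheck:
    checked_at: str       # ISO 8601 timestamp in UTC
    engine: str
    prompt: str           # the exact prompt used
    brand: str
    status: str           # "named", "cited", or "absent"
    sentiment: str        # "positive", "neutral", or "negative"
    rationale: str        # short note explaining the sentiment call
    screenshot: str = ""  # optional path to the saved screenshot


def make_check(engine, prompt, brand, status, sentiment, rationale, screenshot=""):
    """Validate one observation and stamp it with the current UTC time."""
    if status not in VALID_STATUS:
        raise ValueError(f"unknown status: {status!r}")
    if sentiment not in VALID_SENTIMENT:
        raise ValueError(f"unknown sentiment: {sentiment!r}")
    stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
    return SpotCheck(stamp, engine, prompt, brand, status, sentiment, rationale, screenshot)


def write_log(checks, stream):
    """Write checks as CSV rows with a header line."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(SpotCheck)])
    writer.writeheader()
    for check in checks:
        writer.writerow(asdict(check))


checks = [
    make_check("perplexity", "best email platforms for startups", "YourBrand",
               "named", "positive", "listed first with pricing noted",
               "shots/perplexity-001.png"),
    make_check("chatgpt-search", "best email platforms for startups", "YourBrand",
               "cited", "neutral", "linked as a source but not named"),
]

# An in-memory buffer keeps the example self-contained; to save a file,
# use open("spot_checks.csv", "w", newline="") instead.
buffer = io.StringIO()
write_log(checks, buffer)
print(buffer.getvalue())
```

Rejecting unknown status or sentiment labels at entry time keeps month‑over‑month comparisons clean, since a typo would otherwise create a phantom category.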

Practical example (optional automation). Disclosure: Geneo is our product. Teams that want less manual overhead can use it to monitor mentions and citations across Google AI Overviews/AI Mode, Perplexity, and ChatGPT search, log sentiment automatically, and export histories for audits. For deeper prompt‑level insights and benchmarking patterns, see our review of prompt‑level visibility and competitor benchmarking.

Risks, ethics, and remediation

- Avoid manipulative tactics. Scaled low‑value content and misleading markup can reduce eligibility and erode trust. Focus on readable, sourced, people‑first pages.

- Disclose conflicts and avoid absolutes. When referencing your own tools or clients, add a clear disclosure. Do not promise inclusion; model behavior changes.

- Address hallucinations or misattributions. If an engine misnames your brand or repeats an error, publish a correction page, strengthen entity signals (Organization schema, consistent sameAs), and provide clear, source‑backed clarifications. Use product feedback channels where available.

- Mind regulated topics. If you operate in sensitive categories, cite primary sources carefully and avoid prescriptive claims outside your expertise.

A compact next‑step checklist

- Fix eligibility basics: ensure indexability, snippet‑friendly pages, and clean on‑page structure.

- Tighten entity signals: Organization schema with sameAs, consistent naming, and authoritative profiles.

- Shape content to intent: definition blocks, steps, and comparison tables that are easy to cite.

- Prove expertise: bylines, methods, data, and citations on‑page; refresh time‑sensitive pages.

- Measure what matters: run monthly checks, track sentiment and share of voice, and iterate based on gaps you find.
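For the eligibility item on this checklist, a quick first pass could look like the sketch below, which scans a page's HTML for robots meta directives that block indexing or snippets. It is deliberately narrow: it reads only `robots` and `googlebot` meta tags, and it does not check robots.txt, `X-Robots-Tag` HTTP headers, or `data-nosnippet` attributes. The class and function names are made up for this example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            for token in attr.get("content", "").split(","):
                token = token.strip().lower()
                if token:
                    self.directives.add(token)


def eligibility_flags(html):
    """Report whether meta directives block indexing or snippets."""
    parser = RobotsMetaParser()
    parser.feed(html)
    found = parser.directives
    return {
        # "none" is shorthand for "noindex, nofollow"
        "noindex": "noindex" in found or "none" in found,
        "snippet_blocked": "nosnippet" in found or "max-snippet:0" in found,
    }


blocked_page = '<html><head><meta name="robots" content="noindex, max-snippet:0"></head></html>'
open_page = '<html><head><meta name="robots" content="index, follow"></head></html>'

blocked_flags = eligibility_flags(blocked_page)
open_flags = eligibility_flags(open_page)
print(blocked_flags)
print(open_flags)
```

A page that passes this check is not guaranteed to be eligible; the check only rules out the most common self‑inflicted blocks before you look further.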

—

According to Google’s guidance in AI features and your website and its 2025 post Succeeding in AI search, eligibility and helpfulness drive the pool of sources for AI Overviews. Perplexity explains its citation behavior in How does Perplexity work?, and OpenAI summarizes ChatGPT’s retrieval and attribution in Introducing ChatGPT Search.