AI Backlink Loops in 2025: How Answer Engines Build Self-Sustaining SEO

Discover how AI backlink loops are reshaping SEO in 2025—get the latest data, key pitfalls, and actionable tactics for citation-worthiness.

The quick take

AI answer engines are concentrating citations on a relatively narrow set of sources. When a domain gets cited repeatedly across engines and queries, that visibility tends to compound—shaping perceived authority even as outbound clicks shrink. In Q4 2025, this dynamic is accelerating and requires a measurement‑first, policy‑aware response.

What we mean by “AI backlink loops”

“AI backlink loops” isn’t a formal industry term yet. In practice, it describes a feedback effect where repeated citations from AI answer engines (e.g., Google’s AI Overviews, Perplexity, Bing Copilot) reinforce a site’s visibility and likelihood of being cited again—without traditional manual outreach. Unlike classical link building, these loops are powered by:

- Engine sourcing habits and historical prominence signals

- Cross‑platform entity strength (brand, author, organization)

- Content that’s easy to excerpt and quote

- Community signals (e.g., discussions and embeds) that engines often favor

This loop can raise an entity’s perceived authority—even as overall referral traffic may stagnate due to zero‑click UX.

The evidence the loop is forming

- Prevalence of AI Overviews: In July 2025, Semrush reported that Google’s AI Overviews appeared in 13.14% of U.S. desktop searches, with strong growth in informational categories. See the methodology and category trends in the Semrush analysis: Semrush 2025 AI Overviews study.

- Click‑through rate impacts: Multiple 2025 studies summarized by Search Engine Land found that when AI Overviews are present, top organic listings lose clicks (typical declines range from mid‑teens to roughly one‑third depending on query sets and methodologies). For a synthesis of Ahrefs and Amsive findings, see Search Engine Land’s 2025 CTR analysis.

- Cross‑engine citation patterns: Longitudinal research from TryProfound shows distinct and sometimes divergent citation ecosystems across platforms, which helps explain why specific engines repeatedly surface certain domains. Their public methodology and findings are summarized in TryProfound’s AI platform citation patterns (2024–2025).

- Misattribution risk: Independent testing by the Tow Center/Columbia Journalism Review in March 2025 documented that AI search engines often err in attributing news sources, including broken or fabricated URLs and incorrect publishers. That is an important reason not to trust the loop blindly. See the study write‑up: Tow Center/CJR’s 2025 evaluation of AI news citations.

Taken together, these data points support a world where some entities gain durable visibility via repeated citations, while overall click volume can be suppressed and attribution can be imperfect.
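To see how prevalence and CTR decline combine for a single site, here is a back-of-envelope sketch in Python. It rests on a simplification of our own, not a finding of the cited studies: an AI Overview cuts a query's organic CTR by a fixed fraction and leaves other queries untouched. The query names, impressions, and CTRs are hypothetical placeholders for your own Search Console data; the 15% and 33% declines are rough bounds taken from the range above.

```python
from dataclasses import dataclass


@dataclass
class QueryStats:
    query: str
    impressions: int
    baseline_ctr: float      # organic CTR (0-1) when no AI Overview is shown
    has_ai_overview: bool    # whether an AI Overview appears for this query


def estimated_clicks(queries: list[QueryStats], ctr_decline: float) -> tuple[float, float]:
    """Return (baseline clicks, clicks after applying the assumed CTR decline)."""
    baseline = 0.0
    adjusted = 0.0
    for q in queries:
        clicks = q.impressions * q.baseline_ctr
        baseline += clicks
        # Simplifying assumption: the decline applies only where an Overview is shown.
        adjusted += clicks * (1 - ctr_decline) if q.has_ai_overview else clicks
    return baseline, adjusted


# Hypothetical query set; replace with your own data.
sample = [
    QueryStats("what is an ai backlink loop", 1000, 0.20, True),
    QueryStats("example co seo pricing", 500, 0.40, False),
]

for decline in (0.15, 0.33):  # rough bounds: mid-teens to about one-third
    base, adj = estimated_clicks(sample, decline)
    print(f"decline={decline:.0%}: {base:.0f} -> {adj:.0f} clicks ({1 - adj / base:.1%} lost)")
```

Because the 13.14% figure is an average across U.S. desktop searches, your own exposure depends on how many of your tracked queries actually trigger an Overview; a site weighted toward informational queries may see a larger share affected.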

How these loops form—and where they can break

-

Input signals: Strong rankings, clean entity signals (organization schema, consistent naming, credible author profiles), and original, quotable data increase inclusion odds. Engines lean on sources they can parse, verify, and excerpt cleanly.

-

Amplification mechanics: Once cited, a domain accrues a form of “answer‑engine familiarity.” Engines and aggregators (including newsletters and AI‑driven curations) may re‑surface the same sources, creating a compounding effect across related queries.

-

Propagation channels: AI snippets get embedded in programmatic posts, roundups, and community threads; those mentions feed back into web prominence signals—sometimes yielding more citations.

-

Break points and risks:

- Zero‑click keeps users on the platform, limiting traffic lift even as visibility grows.

- Misattributions can send credit elsewhere or reference outdated artifacts.

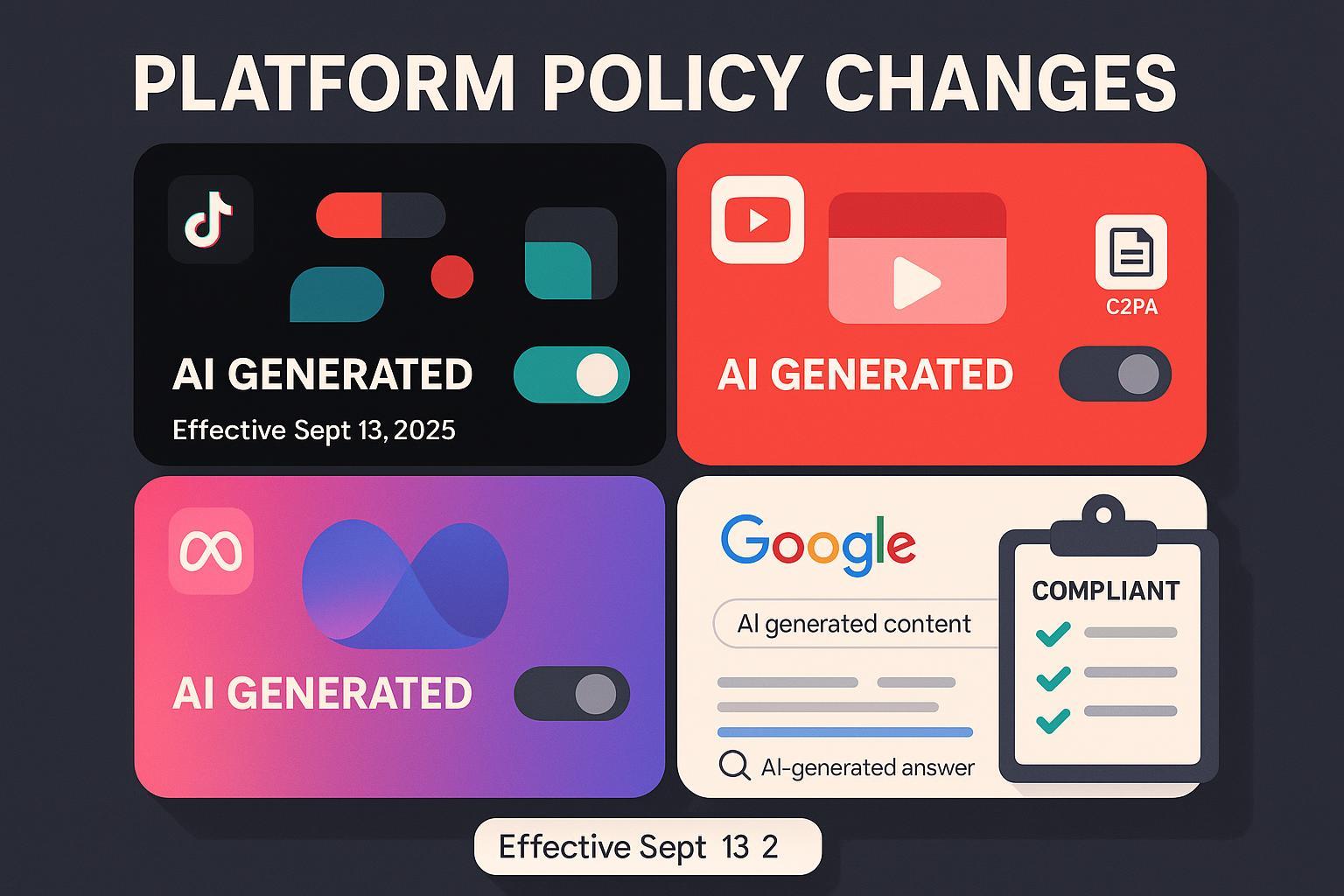

- Policy headwinds matter: Google’s March 2024 updates explicitly target scaled content abuse, site reputation abuse, and expired domain abuse. If you try to “manufacture” the loop with thin, mass‑generated pages or synthetic link schemes, you risk penalties. See the official guidance in Google’s March 2024 spam policies.

-

An academic analog: While not the same as web SEO, a 2025 case study on preprint platforms documented “citation inflation” among AI‑generated papers, showing how reciprocal references can artificially lift metrics. It’s a cautionary analogy for feedback loops in web ecosystems; see arXiv 2025 citation inflation case study.
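The compounding described above resembles a rich-get-richer (preferential attachment) process. The toy simulation below is our own illustration, not a model of how any engine actually selects sources: it assumes each new citation goes to a domain with probability proportional to its existing citations plus a constant `prior`, a hypothetical stand-in for baseline content quality. Domain names and parameters are illustrative.

```python
import random
from collections import Counter


def simulate_citation_loop(domains, rounds, prior=1.0, seed=0):
    """Toy Pólya-urn model: each round, one domain receives a new citation.

    Selection weight = prior + citations so far. This is a hypothetical
    assumption for illustration, not documented engine behavior.
    """
    rng = random.Random(seed)
    counts = Counter({d: 0 for d in domains})
    for _ in range(rounds):
        weights = [prior + counts[d] for d in domains]
        winner = rng.choices(domains, weights=weights, k=1)[0]
        counts[winner] += 1
    return counts


def top_share(counts):
    """Fraction of all citations captured by the most-cited domain."""
    total = sum(counts.values())
    return max(counts.values()) / total if total else 0.0


domains = [f"site{i}.example" for i in range(10)]
for prior in (0.1, 10.0):
    counts = simulate_citation_loop(domains, rounds=1000, prior=prior, seed=42)
    print(f"prior={prior}: top domain holds {top_share(counts):.0%} of citations")
```

With ten domains an even split would give each 10%. A small `prior` tends to let early random wins dominate, so citations concentrate; a larger `prior` dampens the loop. Exact shares vary with the seed.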

Engineering citation‑worthiness (without manual outreach)

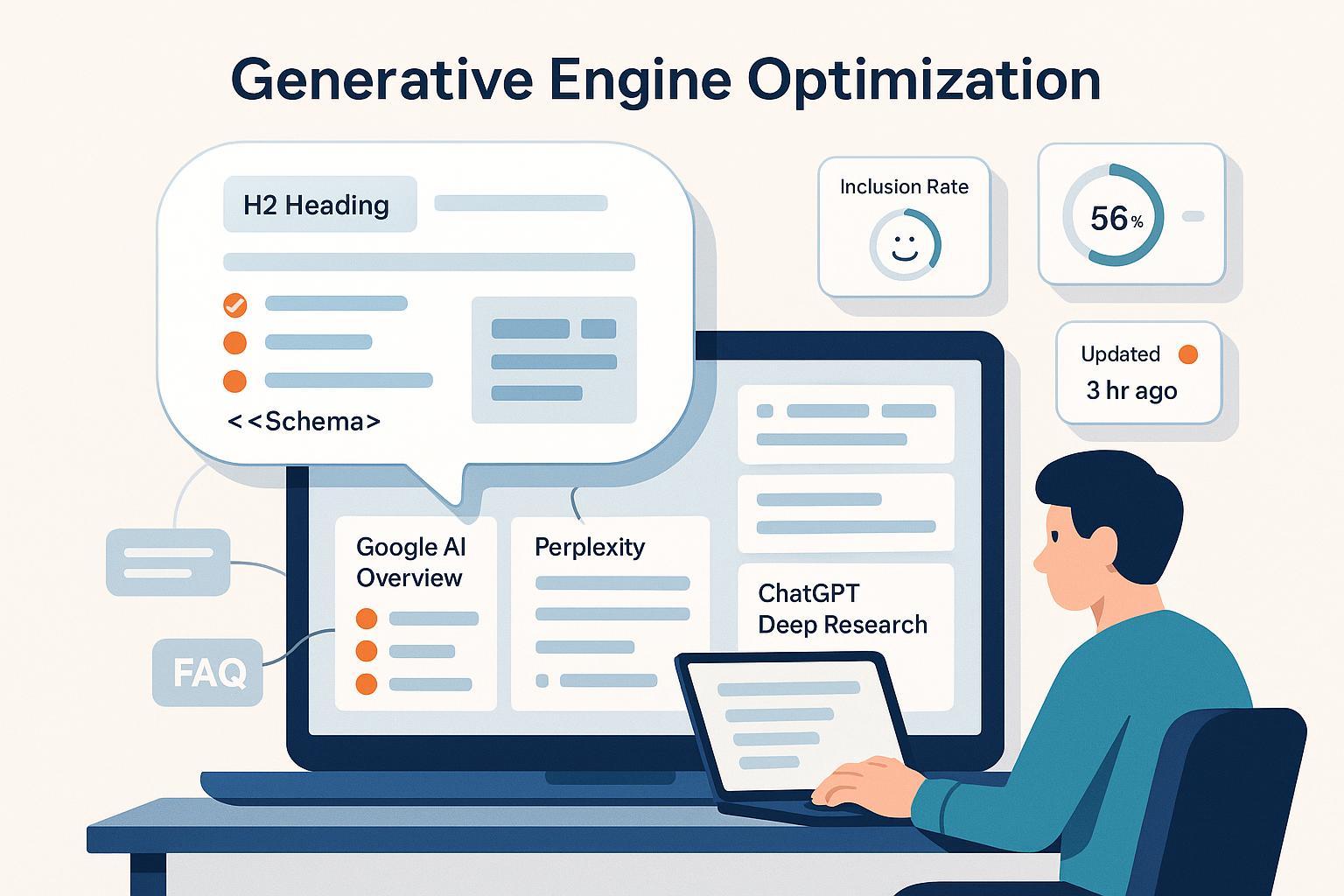

If you want answer engines to repeatedly cite your pages, design for it upfront. Here’s a practitioner checklist that aligns with 2024–2025 evidence and policy constraints:

- Publish original, quotable artifacts
  - Build proprietary datasets with year‑stamped methodology, clearly labeled charts, and succinct summary stats. Engines favor scannable, verifiable facts.
  - Include a brief “How we measured” section and a change‑log on your most cited assets.

- Strengthen entity signals and bylines
  - Use Organization and Person schema; align author bios with real expertise, affiliations, and social proof.
  - Maintain a consistent brand name and NAP (name, address, phone number) across your site and major profiles. Add transparent “About” and “Contact” pages.

- Design for excerpting and answer UX
  - Structure pages with short summaries, FAQs, pull‑quotes, and clear H2/H3s. Provide concise definitions and numbered steps that can be lifted verbatim.
  - Add companion formats where engines source heavily (e.g., YouTube videos with written synopses, image alt text, and captions).

- Cultivate community signals where engines look
  - Participate in relevant Reddit threads and publish useful, non‑promotional summaries or benchmarks tied to your pages. For a deeper tactical walkthrough on nurturing community proof points, see this practical guide on Reddit communities and AI search citation best practices.

- Ensure technical accessibility for AI crawlers
  - Allow and log AI bot access; prefer clean HTML over heavy client‑side rendering that obscures content.
  - Validate structured data and minimize render‑blocking scripts that can hide key excerpts.
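For the entity-signal items, a minimal sketch of schema.org Organization and Person markup generated in Python is shown below. Every name, URL, and profile is a hypothetical placeholder, and the `entity_jsonld` helper is our own illustration. The `@graph`/`@id` pattern links the author to the organization so both entities are described consistently; check the output with a structured-data validator before shipping.

```python
import json


def entity_jsonld(org_name, site_url, logo_url, org_profiles,
                  author_name, author_title, author_profiles):
    """Build a schema.org JSON-LD @graph with an Organization and its author (Person)."""
    org_id = f"{site_url}/#organization"  # stable identifier reused by the Person node
    return {
        "@context": "https://schema.org",
        "@graph": [
            {
                "@type": "Organization",
                "@id": org_id,
                "name": org_name,       # keep identical to the name used on major profiles
                "url": site_url,
                "logo": logo_url,
                "sameAs": org_profiles,
            },
            {
                "@type": "Person",
                "name": author_name,
                "jobTitle": author_title,
                "worksFor": {"@id": org_id},  # ties the byline to the organization
                "sameAs": author_profiles,
            },
        ],
    }


# Hypothetical values for illustration only.
data = entity_jsonld(
    org_name="Example Co",
    site_url="https://www.example.com",
    logo_url="https://www.example.com/logo.png",
    org_profiles=["https://www.linkedin.com/company/example-co"],
    author_name="Jane Doe",
    author_title="Head of Research",
    author_profiles=["https://www.linkedin.com/in/janedoe-example"],
)

# Embed this output in the page inside <script type="application/ld+json">.
print(json.dumps(data, indent=2))
```

Generating the markup from one source of truth, rather than hand-editing it per page, makes it easier to keep brand and author names consistent site-wide.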

A neutral monitoring workflow (with an example stack)

You won’t manage what you don’t measure. A simple, repeatable workflow:

- Define tracked questions: List 25–50 core queries and 10–15 emerging questions per product line or editorial beat.

- Capture cross‑engine snapshots: Each month, record which sources Google AI Overviews, Perplexity, and Bing Copilot cite for each query.

- Log sentiment and misattribution: Note tone, gaps, and whether the link lands on the correct artifact.

- Signal freshness: Maintain a public change‑log on your flagship pages so engines (and readers) see updates.

- Compare narrative differences: Where engines diverge, annotate why and adjust your artifacts accordingly.
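One way to store the monthly snapshots is one record per month, query, and engine, listing cited domains in display order. The sketch below assumes that record shape (our own, hypothetical) and computes the Jaccard overlap between two engines' cited domains per query; low overlap flags the divergences worth annotating. Engine keys and domains are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Snapshot:
    month: str    # e.g. "2025-10"
    query: str
    engine: str   # hypothetical keys, e.g. "google_aio", "perplexity", "copilot"
    cited_domains: list[str] = field(default_factory=list)  # in display order


def jaccard(a, b):
    """Overlap of two domain sets: |A ∩ B| / |A ∪ B| (1.0 when both are empty)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def engine_overlap(snapshots, engine_a, engine_b, month):
    """Per-query Jaccard overlap between two engines' cited domains for one month."""
    by_key = {(s.query, s.engine): s.cited_domains for s in snapshots if s.month == month}
    queries = {q for (q, _) in by_key}
    return {
        q: jaccard(by_key[(q, engine_a)], by_key[(q, engine_b)])
        for q in sorted(queries)
        if (q, engine_a) in by_key and (q, engine_b) in by_key
    }


snaps = [
    Snapshot("2025-10", "gpu shortage prices", "google_aio", ["a.example", "b.example"]),
    Snapshot("2025-10", "gpu shortage prices", "perplexity", ["b.example", "c.example"]),
]
print(engine_overlap(snaps, "google_aio", "perplexity", "2025-10"))
```

Here the two engines share one of three distinct domains, so the overlap is one third. Tracking this value month over month is one way to quantify the "overlap across engines" mentioned later.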

For example, you can centralize these observations in Geneo to monitor cross‑engine brand visibility, citations, and sentiment over time. Disclosure: Geneo is our product.

If you want to see how citations differ across engines on real queries, scan one of our annotated examples such as this cross‑engine query report on GPU shortage price trends in 2025. Use it as a template for your own monthly snapshots.

What to measure next month

- Inclusion rate: % of your tracked queries where your domain appears in AI Overviews/answer engines.

- Citation placement: Presence in the first citation chip or within the first visible set of sources.

- Excerpt velocity: Number of fresh, quotable stats added this month; count of pages with updated methods sections and change‑logs.

- Community corroboration: Count of high‑quality Reddit threads or YouTube descriptions that reference your latest artifact.

- Misattribution incidents: Instances where engines cite the wrong URL or publisher; number successfully corrected.
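Several of these metrics fall straight out of the monthly observations. The sketch below assumes a hypothetical record per query and engine (our own shape, not any tool's export format). It computes inclusion rate and top placement over query-engine pairs, so filter by engine for per-engine rates. `first_n=1` checks the first citation chip; raise it to match the number of sources visible without expanding.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    query: str
    engine: str
    cited_domains: list[str] = field(default_factory=list)  # in display order
    misattributed: bool = False  # engine credited the wrong URL or publisher
    corrected: bool = False      # misattribution fixed after a correction request


def monthly_metrics(observations, our_domain, first_n=1):
    """Inclusion rate, top-placement rate, and misattribution counts for one month."""
    total = len(observations)
    if total == 0:
        return {"inclusion_rate": 0.0, "top_placement_rate": 0.0,
                "misattributions": 0, "corrected": 0}
    included = [o for o in observations if our_domain in o.cited_domains]
    top = [o for o in observations if our_domain in o.cited_domains[:first_n]]
    misattributed = [o for o in observations if o.misattributed]
    return {
        "inclusion_rate": len(included) / total,
        "top_placement_rate": len(top) / total,
        "misattributions": len(misattributed),
        "corrected": sum(o.corrected for o in misattributed),
    }


# Hypothetical observations for illustration.
obs = [
    Observation("ai backlink loops", "perplexity", ["ours.example", "other.example"]),
    Observation("ai backlink loops", "google_aio", ["other.example", "ours.example"]),
    Observation("citation worthiness", "google_aio", ["other.example"],
                misattributed=True, corrected=True),
]
print(monthly_metrics(obs, "ours.example"))
```

Excerpt velocity and community corroboration are simple counts, so they are left out of this sketch.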

Ethical guardrails and policy alignment

- Avoid scaled, thin AI content designed solely to bait citations. It falls squarely under scaled content abuse and puts trust in your whole site at risk.

- Don’t buy or fabricate citation lists. Focus on verifiable artifacts, real subject‑matter expertise, and transparent methods.

- When misattributions occur, request corrections politely and document the fix in your change‑log.

Bottom line and next steps

AI backlink loops are real enough to plan for, even if the term is still emerging. The playbook is not about shortcuts; it’s about engineering citation‑worthiness and proving relevance where answer engines look. If you put a measurement program in place and refresh your artifacts monthly, you’ll be positioned to compound visibility in the channels that matter in 2025, without leaning on manual outreach.

If you’re formalizing your monitoring, consider piloting a lightweight stack that logs citations, sentiment, and overlap across engines over a 90‑day window. You can include Geneo as part of that stack to centralize observations while keeping your workflow vendor‑neutral.

Updated on 2025-10-07

Change‑log

- Initial publication with 2025 citations: Semrush AI Overviews prevalence; Search Engine Land CTR synthesis; TryProfound citation patterns; Tow Center/CJR misattribution study; Google March 2024 spam policies; arXiv 2025 analog on citation inflation.