Best Practices for AI Answer Extraction & GEO Performance (2025)

Practical methods for structuring content to maximize AI answer extraction and GEO performance in 2025. Includes actionable workflows, schema examples, and monitoring tactics for practitioners.

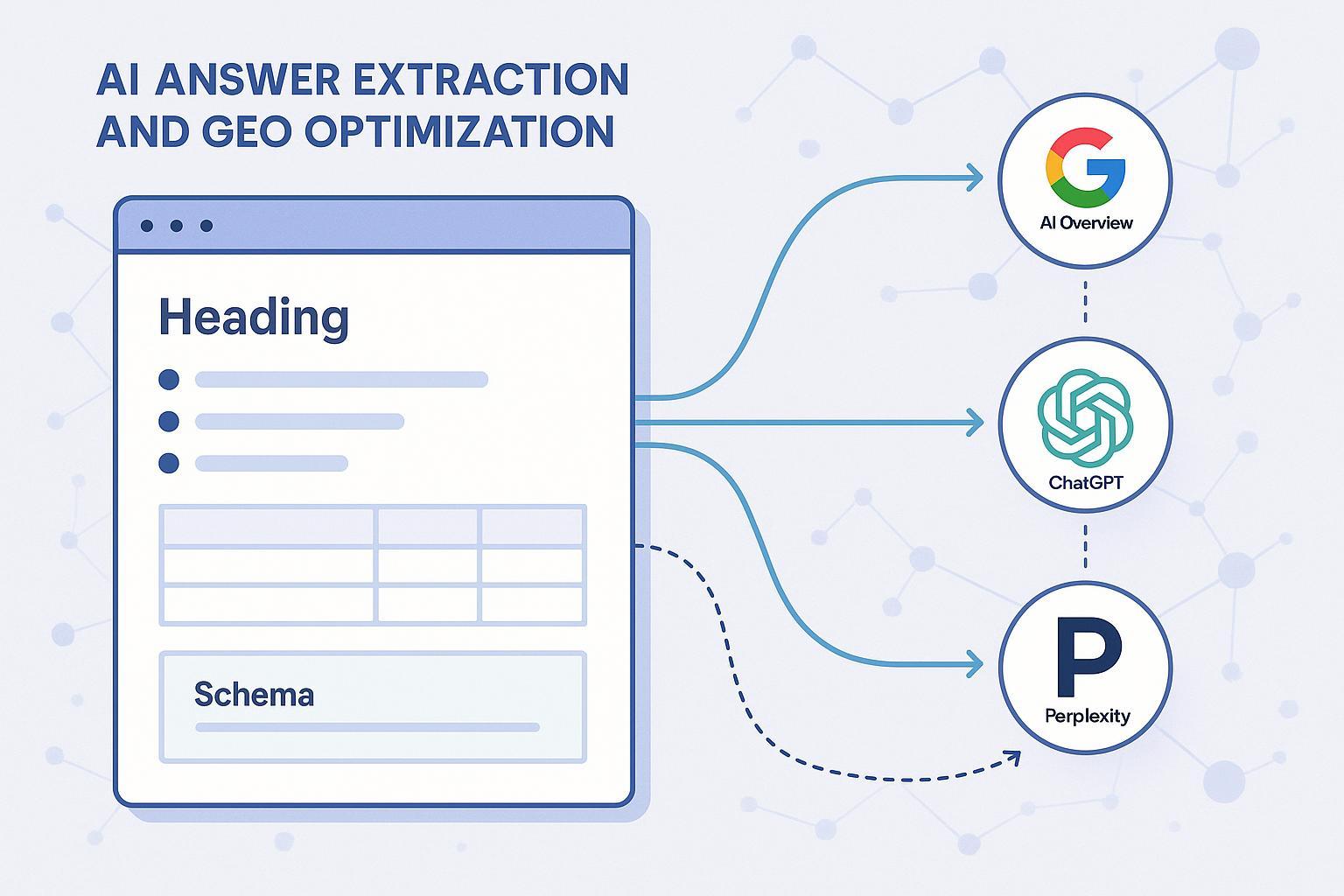

One practical way to get cited by AI search engines is to make your content easy to extract. Concretely, that means answer-first sections, question-based headings, semantic HTML, and robust structured data; then you monitor citations and iterate.

Why extractability now matters

Google explains that AI Overviews and AI Mode aim to summarize complex queries and surface a wider, more diverse set of helpful links via a “query fan-out,” creating new opportunities for publishers (Google, 2025). See Google's official guidance, “AI features and your website,” and its 2025 blog post “Succeeding in AI search.”

OpenAI confirms that ChatGPT's search experience cites sources: its announcement “Introducing ChatGPT search” (October 2024) describes how answers include links to sources.

Perplexity documents its crawler and publisher controls in its PerplexityBot guidelines (2025), noting robots.txt compliance and how sources are linked in results.

Multiple industry studies track how often AI Overviews (AIOs) appear and how they affect clicks. Semrush's AI Overviews study reported AIOs on around 13% of queries in March 2025, up from January 2025; BrightEdge's year-one analysis (May 2025) found AIOs on over 11% of queries, alongside rising overall usage and shifts in click-through rate (CTR). Treat these figures as directional signals and measure your own data.

Structure pages answer-first

If AI systems can identify the exact answer and the supporting evidence rapidly, your odds of being cited increase.

- Lead with a 2–3 sentence summary that directly answers the core question.

- Use question-based H2/H3: “What is X?”, “How do I do Y?”, “Which Z is best for…?”

- Provide a one-sentence direct answer immediately under each heading, followed by concise elaboration.

- Format steps as ordered lists, comparisons as tables, and definitions as definition lists. These formats are machine-friendly and also support accessibility.
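As a rough self-check of the answer-first pattern, the following Python sketch (standard library only) pairs each H2/H3 in a page's HTML with the first paragraph after it, then reports whether the heading is phrased as a question and whether the paragraph opens with a short sentence. The "ends with a question mark" test and the 30-word limit are hypothetical heuristics, not thresholds any AI engine publishes.

```python
import re
from html.parser import HTMLParser


class AnswerFirstAudit(HTMLParser):
    """Pair each H2/H3 heading with the text of the first <p> that follows it."""

    def __init__(self):
        super().__init__()
        self.sections = []    # [heading_text, first_paragraph_text or None]
        self._capture = None  # "heading" or "para" while inside those elements
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self._capture, self._buf = "heading", []
        elif tag == "p" and self.sections and self.sections[-1][1] is None:
            self._capture, self._buf = "para", []

    def handle_endtag(self, tag):
        text = " ".join("".join(self._buf).split())  # collapse whitespace
        if tag in ("h2", "h3") and self._capture == "heading":
            self.sections.append([text, None])
            self._capture = None
        elif tag == "p" and self._capture == "para":
            self.sections[-1][1] = text
            self._capture = None

    def handle_data(self, data):
        if self._capture:
            self._buf.append(data)


def audit(html, max_words=30):
    """Return (heading, is_question, has_short_answer) for each H2/H3 section."""
    parser = AnswerFirstAudit()
    parser.feed(html)
    parser.close()
    report = []
    for heading, para in parser.sections:
        # Naive sentence split: break after ".", "!" or "?" followed by whitespace.
        first_sentence = re.split(r"(?<=[.!?])\s+", para)[0] if para else ""
        short = bool(first_sentence) and len(first_sentence.split()) <= max_words
        report.append((heading, heading.endswith("?"), short))
    return report
```

A section with no paragraph after its heading (for example, one that jumps straight into a list) is reported as lacking a short answer, which is usually the case worth fixing first.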

Semantic HTML patterns that help

- Headings: Use a logical H1–H3 hierarchy with descriptive text. Refer to MDN heading elements.

- Lists and definition lists: Use `<ol>`, `<ul>`, and `<dl>`/`<dt>`/`<dd>` for steps and glossaries, per MDN list elements and definition lists.

- Tables: Structure comparisons and specs with proper `<thead>`, `<tbody>`, `<th>`, and `<caption>`, as outlined in MDN tables.

- Figures: Wrap diagrams in `<figure>` + `<figcaption>`; see MDN figure.

- Accessibility: Align with WCAG 2.2 criteria such as descriptive headings and programmatic relationships; see WCAG 2.2.
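To make the table guidance concrete, here is a small Python sketch that renders comparison rows into a table using the elements MDN describes (`<caption>`, `<thead>`, `<tbody>`, `<th>`). The function name and the choice to treat each row's first cell as a row header (`scope="row"`) are illustrative assumptions, not a required pattern.

```python
from html import escape


def comparison_table(caption, headers, rows):
    """Render rows as a semantic <table>; every cell is HTML-escaped."""
    head = "".join(f'<th scope="col">{escape(h)}</th>' for h in headers)
    body_rows = []
    for label, *cells in rows:
        # The first cell names the row, so it becomes a row header.
        row = f'<th scope="row">{escape(label)}</th>'
        row += "".join(f"<td>{escape(c)}</td>" for c in cells)
        body_rows.append(f"<tr>{row}</tr>")
    return (
        f"<table><caption>{escape(caption)}</caption>"
        f"<thead><tr>{head}</tr></thead>"
        f"<tbody>{''.join(body_rows)}</tbody></table>"
    )


if __name__ == "__main__":
    print(comparison_table(
        "Schema types",
        ["Type", "Use"],
        [["FAQPage", "Q&A sections"], ["HowTo", "Step-by-step guides"]],
    ))
```

The caption and header cells give both screen readers and parsers an explicit label for every value, which is the property the table advice relies on.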

Implement structured data for extractable answers

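Use JSON-LD and make sure the markup mirrors visible content. Validate with Google’s Rich Results Test. Note that in 2023 Google limited FAQ rich results to well-known government and health sites and removed HowTo rich results, so that tool may not report every type shown here; the Schema Markup Validator checks any schema.org type.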

FAQPage (for Q&A sections)

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What is Generative Engine Optimization (GEO)?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "GEO is the practice of optimizing content so generative AI systems can discover, understand, and cite it in their answers."
      }
    },
    {
      "@type": "Question",
      "name": "How do I structure content for AI answer extraction?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Lead with direct answers, use question-based headings, semantic HTML, and JSON-LD (FAQPage/HowTo/Article). Validate markup and monitor citations."
      }
    }
  ]
}
```
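One way to keep FAQPage markup mirroring the visible text is to generate both from a single list of question/answer pairs. The Python sketch below assumes such a list exists in your publishing pipeline (`FAQS`, `faq_html`, and `faq_jsonld` are hypothetical names); it emits the visible `<h3>`/`<p>` pairs and the matching `<script type="application/ld+json">` block.

```python
import json
from html import escape

# Hypothetical single source of truth: the same pairs render the visible FAQ
# section and the JSON-LD, so the markup cannot drift from what users see.
FAQS = [
    (
        "What is Generative Engine Optimization (GEO)?",
        "GEO is the practice of optimizing content so generative AI systems "
        "can discover, understand, and cite it in their answers.",
    ),
    (
        "How do I structure content for AI answer extraction?",
        "Lead with direct answers, use question-based headings, semantic HTML, "
        "and JSON-LD (FAQPage/HowTo/Article). Validate markup and monitor citations.",
    ),
]


def faq_html(faqs):
    """Visible Q&A: each question as an <h3>, its answer as the next <p>."""
    return "\n".join(f"<h3>{escape(q)}</h3>\n<p>{escape(a)}</p>" for q, a in faqs)


def faq_jsonld(faqs):
    """FAQPage JSON-LD wrapped in a <script> tag, built from the same pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": q,
                "acceptedAnswer": {"@type": "Answer", "text": a},
            }
            for q, a in faqs
        ],
    }
    # Escape "</" so text inside the payload cannot close the <script> early.
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

Because both outputs come from `FAQS`, editing an answer updates the page and the markup together, which is the "mirror visible content" rule in code form.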

HowTo (for step-by-step guides)

```json
{
  "@context": "https://schema.org",
  "@type": "HowTo",
  "name": "Monitor and improve AI citations",
  "totalTime": "PT30M",
  "step": [
    {"@type": "HowToStep", "text": "Audit pages for answer-first structure and schema."},
    {"@type": "HowToStep", "text": "Publish SSR-rendered HTML with visible Q&A content."},
    {"@type": "HowToStep", "text": "Track citations across Google AI Overviews, ChatGPT, and Perplexity."},
    {"@type": "HowToStep", "text": "Iterate monthly based on sentiment and citation gaps."}
  ]
}
```
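The `totalTime` value above is an ISO 8601 duration (`PT30M` means 30 minutes). A minimal Python sketch for generating HowTo markup from an ordered list of steps follows; the helper names are hypothetical, and adding a `position` to each `HowToStep` is an optional choice (HowToStep inherits `position` from schema.org's ListItem) rather than something the example above requires.

```python
import json


def iso8601_minutes(minutes):
    """Format whole minutes as an ISO 8601 duration, e.g. 30 -> 'PT30M', 90 -> 'PT1H30M'."""
    hours, mins = divmod(minutes, 60)
    duration = "PT"
    if hours:
        duration += f"{hours}H"
    if mins or not hours:
        duration += f"{mins}M"
    return duration


def howto_jsonld(name, minutes, steps):
    """HowTo JSON-LD with numbered HowToStep items in the order given."""
    return {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": name,
        "totalTime": iso8601_minutes(minutes),
        "step": [
            {"@type": "HowToStep", "position": i, "text": text}
            for i, text in enumerate(steps, start=1)
        ],
    }


if __name__ == "__main__":
    steps = [
        "Audit pages for answer-first structure and schema.",
        "Publish SSR-rendered HTML with visible Q&A content.",
    ]
    print(json.dumps(howto_jsonld("Monitor and improve AI citations", 30, steps), indent=2))
```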

Article + Organization (E-E-A-T signals and entity context)

```json
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "How to Structure Your Content for AI Answer Extraction and Better GEO Performance",
  "datePublished": "2025-09-30",
  "author": {
    "@type": "Person",
    "name": "Editorial Team"
  },
  "mainEntityOfPage": "https://example.com/ai-answer-extraction-geo",
  "about": "Generative Engine Optimization",
  "mentions": ["AI Overviews", "FAQPage", "HowTo", "Entity hubs"]
}
```

```json
{
  "@context": "https://schema.org",
  "@type": "Organization",
  "name": "Your Company",
  "url": "https://example.com",
  "logo": "https://example.com/logo.png",
  "sameAs": [
    "https://www.wikidata.org/wiki/Q12345",
    "https://en.wikipedia.org/wiki/Your_Company"
  ]
}
```
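Before publishing, a quick lint can catch an Article object that is missing the E-E-A-T-related properties used in the example above. This Python sketch checks `headline`, `author` (and its `name`), `datePublished`, and `mainEntityOfPage`; that field list is an assumption drawn from this guide's own advice, not Google's list of required Article properties.

```python
from datetime import datetime

# Properties this sketch checks, taken from the E-E-A-T advice in this guide.
# This is not Google's list of required Article properties.
CHECKED_FIELDS = ("headline", "author", "datePublished", "mainEntityOfPage")


def article_problems(doc):
    """Return a list of problems found in one Article JSON-LD object (dict)."""
    if doc.get("@type") != "Article":
        return []
    problems = [field for field in CHECKED_FIELDS if not doc.get(field)]
    author = doc.get("author")
    if isinstance(author, dict) and not author.get("name"):
        problems.append("author.name")
    published = doc.get("datePublished")
    if published:
        try:
            # Accepts dates like "2025-09-30"; Pythons before 3.11 reject a trailing "Z".
            datetime.fromisoformat(published)
        except ValueError:
            problems.append("datePublished (not ISO 8601)")
    return problems
```

Run it over every Article object extracted from a page; an empty list means the checked fields are present and the date parses.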

Implementation notes:

- Only mark up content that is visible to users; Google reiterates this in its intro to structured data.

- Keep identifiers consistent: use `@id` to connect Organization, Person, and WebSite entities across pages.

- Validate changes in a staging environment and re-check after deployment.
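Inconsistent identifiers are easy to introduce when templates differ between sections of a site. The Python sketch below assumes you have already extracted the JSON-LD nodes from each crawled page into a dict keyed by URL (`id_report` and its input shape are hypothetical); it flags an `@id` reused with different names, and a named entity published under more than one `@id`.

```python
from collections import defaultdict


def id_report(pages):
    """pages maps a URL to the list of JSON-LD nodes (dicts) found on it.

    Flags @id values reused with different names, and named entities that
    appear under more than one @id across the site.
    """
    names_by_id = defaultdict(set)
    ids_by_entity = defaultdict(set)
    for nodes in pages.values():
        for node in nodes:
            node_id, name = node.get("@id"), node.get("name")
            if not (node_id and name):
                continue
            node_type = node.get("@type")
            if isinstance(node_type, list):  # @type may be an array; make it hashable
                node_type = tuple(node_type)
            names_by_id[node_id].add(name)
            ids_by_entity[(node_type, name)].add(node_id)
    return {
        "one_id_many_names": {k: v for k, v in names_by_id.items() if len(v) > 1},
        "one_entity_many_ids": {k: v for k, v in ids_by_entity.items() if len(v) > 1},
    }
```

For example, an Organization declared as `https://example.com/#org` on the home page but as `https://example.com/blog/a#org` on a blog post shows up under `one_entity_many_ids`.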

Build entity hubs and topical clusters

AI systems rely on entity understanding. Make your pillar pages the canonical hubs, then interlink into clusters.

- Disambiguate with `sameAs` references to authoritative profiles (Wikipedia, Wikidata), and clarify each page's focus with `about` and `mentions`.

- Use `@id` to create a consistent internal graph linking your Organization, authors, and key topics.

- Interlink cluster articles with descriptive anchors; avoid orphan pages.
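A single `@graph` is one common way to express this internal graph: each node gets a stable `@id`, and other nodes point to it with `{"@id": ...}` instead of repeating its data. The Python sketch below builds such a graph for one article. The `#organization`/`#website`/`#author-…` fragment convention, the author name, and the function signature are hypothetical; `example.com` and the Wikidata URL are the placeholders used earlier in this guide.

```python
import json

SITE = "https://example.com"  # placeholder domain, as in the examples above


def entity_graph(article_url, headline, author_name, topic, mentions, same_as):
    """One @graph whose nodes reference each other by @id instead of repeating data."""
    org_id = f"{SITE}/#organization"
    site_id = f"{SITE}/#website"
    # Hypothetical @id convention for authors; any stable URL works.
    author_id = f"{SITE}/#author-{author_name.lower().replace(' ', '-')}"
    return {
        "@context": "https://schema.org",
        "@graph": [
            {"@type": "Organization", "@id": org_id, "name": "Your Company",
             "url": SITE, "sameAs": same_as},
            {"@type": "WebSite", "@id": site_id, "url": SITE,
             "publisher": {"@id": org_id}},
            {"@type": "Person", "@id": author_id, "name": author_name,
             "worksFor": {"@id": org_id}},
            {"@type": "Article", "@id": f"{article_url}#article",
             "headline": headline, "mainEntityOfPage": article_url,
             "isPartOf": {"@id": site_id}, "author": {"@id": author_id},
             "publisher": {"@id": org_id},
             "about": {"@type": "Thing", "name": topic},
             "mentions": [{"@type": "Thing", "name": m} for m in mentions]},
        ],
    }


if __name__ == "__main__":
    graph = entity_graph(
        "https://example.com/ai-answer-extraction-geo",
        "How to Structure Your Content for AI Answer Extraction and Better GEO Performance",
        "Jane Doe",  # hypothetical author
        "Generative Engine Optimization",
        ["AI Overviews", "FAQPage", "HowTo"],
        ["https://www.wikidata.org/wiki/Q12345", "https://en.wikipedia.org/wiki/Your_Company"],
    )
    print(json.dumps(graph, indent=2))
```

Reusing the same `org_id` and `site_id` strings on every page is what makes the graph consistent across the site.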

For deeper context on GEO fundamentals and definitions, see our GEO explainer.

For a primer on entity SEO, see Search Engine Land's February 2025 overview of knowledge graphs and entities. Schema App discusses practical schema linking in its piece on the measurable impact of entity linking (2024–2025).

Render accessibly; avoid client-only content for key sections

Google describes dynamic rendering as a workaround rather than a long-term solution and recommends server-side rendering, static rendering, or hydration instead. See JavaScript SEO basics, and track broader guidance changes in the Google Search documentation updates log. Ensure that:

- Critical answers and structured data are present in the initial HTML.

- Core Web Vitals (INP, LCP, CLS) stay healthy; see Google's March 2024 core update and spam policies announcement.

- Avoid heavy client-side rendering for Q&A or HowTo content.
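You can approximate what a non-rendering crawler sees by inspecting the raw server response without executing any JavaScript. This Python sketch (standard library only) separates visible text from JSON-LD blocks, then reports a missing or invalid JSON-LD block, required phrases absent from the visible text, and FAQ answers that appear in the markup but not on the page. The function names and the `required_phrases` input are hypothetical.

```python
import json
from html.parser import HTMLParser


class InitialHTML(HTMLParser):
    """Separate raw server HTML into visible text and JSON-LD payloads.

    Nothing is executed: content injected later by JavaScript is simply absent.
    """

    def __init__(self):
        super().__init__()
        self.text, self.jsonld_raw = [], []
        self._in_jsonld = False
        self._in_other_script = False
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld, self._buf = True, []
        elif tag in ("script", "style"):
            self._in_other_script = True

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.jsonld_raw.append("".join(self._buf))
            self._in_jsonld = False
        elif tag in ("script", "style"):
            self._in_other_script = False

    def handle_data(self, data):
        if self._in_jsonld:
            self._buf.append(data)
        elif not self._in_other_script:
            self.text.append(data)


def audit_initial_html(html, required_phrases):
    page = InitialHTML()
    page.feed(html)
    page.close()
    visible = " ".join(" ".join(page.text).split())  # normalize whitespace
    problems, docs = [], []
    for raw in page.jsonld_raw:
        try:
            docs.append(json.loads(raw))
        except json.JSONDecodeError:
            problems.append("invalid JSON-LD block")
    if not page.jsonld_raw:
        problems.append("no JSON-LD in initial HTML")
    for phrase in required_phrases:
        if phrase not in visible:
            problems.append(f"missing visible text: {phrase!r}")
    # Every FAQ answer in the markup should also appear in the visible text.
    for doc in docs:
        if isinstance(doc, dict) and doc.get("@type") == "FAQPage":
            for question in doc.get("mainEntity", []):
                answer = question.get("acceptedAnswer", {}).get("text", "")
                if " ".join(answer.split()) not in visible:
                    problems.append(f"marked-up answer not visible: {question.get('name')!r}")
    return problems
```

To audit a live page, fetch it with `urllib.request.urlopen(url).read().decode("utf-8")` and pass the result in; a client-rendered shell typically comes back with both "no JSON-LD" and "missing visible text" problems.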

Robots and access controls for AI crawlers

Use the official robots.txt directives; proposed files like llms.txt aren’t standard.

- Robots.txt primer and directives: Google robots intro.

- GPTBot (OpenAI) controls: GPTBot docs. Example robots.txt rules to allow GPTBot:

```
User-agent: GPTBot
Allow: /
```

Or, to block it:

```
User-agent: GPTBot
Disallow: /
```

- PerplexityBot behavior and controls: PerplexityBot guidelines. Independent observers have reported stealth crawling; Cloudflare discusses this in its August 2025 post on Perplexity's undeclared crawlers. If this concerns you, monitor server logs and consider WAF rules.
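Before deploying rules like these, you can check how a robots.txt file resolves for specific crawlers with Python's standard `urllib.robotparser`. The rules and URL in this sketch are hypothetical. Note that this parser applies the first matching rule in a group, whereas Google documents most-specific-match precedence, so results can differ for files that mix overlapping Allow and Disallow paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: block GPTBot, allow PerplexityBot and everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: PerplexityBot
Allow: /

User-agent: *
Allow: /
"""


def crawler_access(robots_txt, url, agents=("GPTBot", "PerplexityBot", "Googlebot")):
    """Return {user_agent: allowed?} for one URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    print(crawler_access(ROBOTS_TXT, "https://example.com/ai-answer-extraction-geo"))
```

Robots rules only bind crawlers that honor them, which is why log monitoring remains necessary.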

Monitoring and iteration: a repeatable workflow

Track how often and where your pages are being cited, the sentiment of mentions, and referral traffic.

- KPIs: citation count/rate per engine, sentiment of AI answers, AI referral traffic, schema validation pass rate, CWV health.

- Review cadence: monthly against a dashboard; iterate based on gaps.
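The first two KPIs can be computed from a simple log of test queries. This Python sketch assumes a hypothetical record shape: one `Observation` per query per engine, noting whether the answer cited one of your pages and a sentiment score in [-1, 1] from whatever classifier or manual review you use. It reports query count, citation rate, and average sentiment per engine.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Observation:
    engine: str       # e.g. "Google AI Overviews", "ChatGPT", "Perplexity"
    query: str
    cited: bool       # did the answer link to one of our pages?
    sentiment: float  # hypothetical score in [-1, 1] for how the answer describes us


def kpis(observations):
    """Per-engine query count, citation rate, and mean sentiment."""
    by_engine = defaultdict(list)
    for obs in observations:
        by_engine[obs.engine].append(obs)
    report = {}
    for engine, rows in by_engine.items():
        cited = sum(1 for r in rows if r.cited)
        report[engine] = {
            "queries": len(rows),
            "citation_rate": cited / len(rows),
            "avg_sentiment": sum(r.sentiment for r in rows) / len(rows),
        }
    return report
```

Keeping the query set fixed from month to month makes these rates comparable across review cycles.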

Example workflow: cross-platform AI citation tracking

Use a structured workflow to instrument and improve citations.

- Centralize queries and target pages; tag sections with question-based headings and one-sentence answers.

- Validate structured data with Rich Results Test; confirm SSR/static output embeds markup.

- Track citations and sentiment across engines; compare before/after changes.
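For the before/after comparison, a small helper can list the engines whose citation rate moved meaningfully between two periods. In this Python sketch, the input shape (`{engine: citation_rate}`), the 0.05 threshold, and treating an engine missing from one period as a rate of 0 are all assumptions; with small query sets, a change of a few points may be noise.

```python
def compare_periods(before, after, min_change=0.05):
    """Return {engine: rate change} for engines that moved by at least min_change."""
    changes = {}
    for engine in sorted(set(before) | set(after)):
        # An engine missing from one period is treated as a rate of 0.0.
        delta = after.get(engine, 0.0) - before.get(engine, 0.0)
        if abs(delta) >= min_change:
            changes[engine] = round(delta, 3)
    return changes


if __name__ == "__main__":
    before = {"ChatGPT": 0.20, "Perplexity": 0.35}
    after = {"ChatGPT": 0.30, "Perplexity": 0.34, "Google AI Overviews": 0.10}
    print(compare_periods(before, after))
```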

We use Geneo to monitor cross-platform AI citations and sentiment in practice. Disclosure: Geneo is our product.

Troubleshooting playbook: common failures and fixes

- Symptom: Your page is authoritative but rarely cited.
  - Fix: Add a concise, direct answer under the relevant H2/H3; ensure FAQPage/HowTo markup mirrors the visible text; add `sameAs` links for entity clarity.

- Symptom: AI answers paraphrase competitors despite your better guide.
  - Fix: Move critical facts into lists or tables; add a definition list for terminology; improve internal linking from hub to cluster pages; verify that your content is fully accessible without JavaScript.

- Symptom: Markup validates, but citations don’t change.
  - Fix: Strengthen E-E-A-T signals in Article/Organization schema (author, datePublished, mainEntityOfPage); add references to authoritative external profiles; expand Q&A coverage with more specific questions.

- Symptom: Unexpected bots are crawling your site or ignoring robots.txt.
  - Fix: Confirm robots.txt rules for GPTBot and PerplexityBot; monitor server logs; consider WAF rate limiting for non-compliant patterns.
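Log monitoring can start with a short Python sketch that parses access-log lines in the Apache/Nginx "combined" format, counts requests per declared AI user agent, and lists hits on path prefixes your robots.txt disallows. The regex, the `AI_AGENTS` tuple, and the `disallowed_prefixes` argument are assumptions to adapt to your setup. User-agent strings can be spoofed, and undeclared crawlers will not match them at all, so treat these counts as a first pass before writing WAF rules.

```python
import re
from collections import Counter

# "combined" log format; adjust the pattern if your server logs differently.
LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

AI_AGENTS = ("GPTBot", "PerplexityBot")  # declared crawler tokens to look for


def ai_bot_hits(lines, disallowed_prefixes=()):
    """Count requests per AI user agent and list hits on disallowed path prefixes."""
    hits = Counter()
    violations = []
    for line in lines:
        m = LINE.match(line)
        if not m:
            continue  # skip lines that are not in the expected format
        agent = next((a for a in AI_AGENTS if a in m["agent"]), None)
        if agent is None:
            continue
        hits[agent] += 1
        if any(m["path"].startswith(p) for p in disallowed_prefixes):
            violations.append((agent, m["ip"], m["path"]))
    return hits, violations
```

Pass it an open log file (for example, `ai_bot_hits(open("access.log"), ("/private/",))`) and review any violations alongside the IP ranges the crawler operators publish.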

Implementation checklist

- Draft answer-first sections with question-based headings and one-sentence answers.

- Convert steps, comparisons, and definitions into `<ol>`, `<table>`, and `<dl>`.

- Add JSON-LD: FAQPage, HowTo, Article, Organization; mirror visible content.

- Disambiguate entities using `sameAs`, `about`, `mentions`, and a consistent `@id` across pages.

- Ship SSR/static pages so answers and schema are present at first render.

- Validate markup; check CWV; confirm indexability.

- Configure robots.txt for GPTBot/PerplexityBot as desired.

- Monitor citations, sentiment, and referrals monthly; iterate with structured tests.

For broader strategy context and real outcomes, explore 2025 AI search strategy case studies.

Next steps

Start with one pillar page: add answer-first structure, FAQPage and HowTo schema, and entity disambiguation. Then instrument a monthly review cycle against your KPIs. If you want a streamlined way to track cross-engine citations and sentiment, consider Geneo for dashboards and iteration support.