AEO Best Practices 2025: Executive Guide to Measuring AI Voice


When AI summaries show up, fewer people click. In a Pew Research Center study of 900 U.S. adults (fielded March 2025), users who saw a Google AI summary clicked a traditional search result 8% of the time, versus 15% when no summary appeared, or roughly half as often. For executives defending organic budgets, that means you can’t judge success on classic blue-link CTR alone.

Updated on 2025-12-26

2025-12-26: Added late-2025 data on AI Overviews’ pullback and Pew Research click behavior; noted Google’s expansion of ads in AI Overviews/AI Mode.

1) Why classic SEO alone won’t defend your organic influence in 2025

AI Overviews surged through mid-2025 and then pulled back in Q4. A large-scale dataset shows appearance rates rising from single digits in January to roughly one-quarter of queries at the July peak, then falling meaningfully by November. The intent mix shifted too—informational share dropped while commercial and navigational shares rose. See the late-2025 summary in Search Engine Land.

If fewer users click, what are you optimizing for? Beyond sessions, you need an AI-first visibility lens: appearances in AI results, how you’re referenced, whether links point to your canonical pages, and whether you’re framed as a top recommendation. Think of it this way: if an engine summarizes the category and lists three “top picks,” your goal is to be there with correct attribution—even if total page clicks dip.

2) What answer engines actually show (and why it matters for reporting)

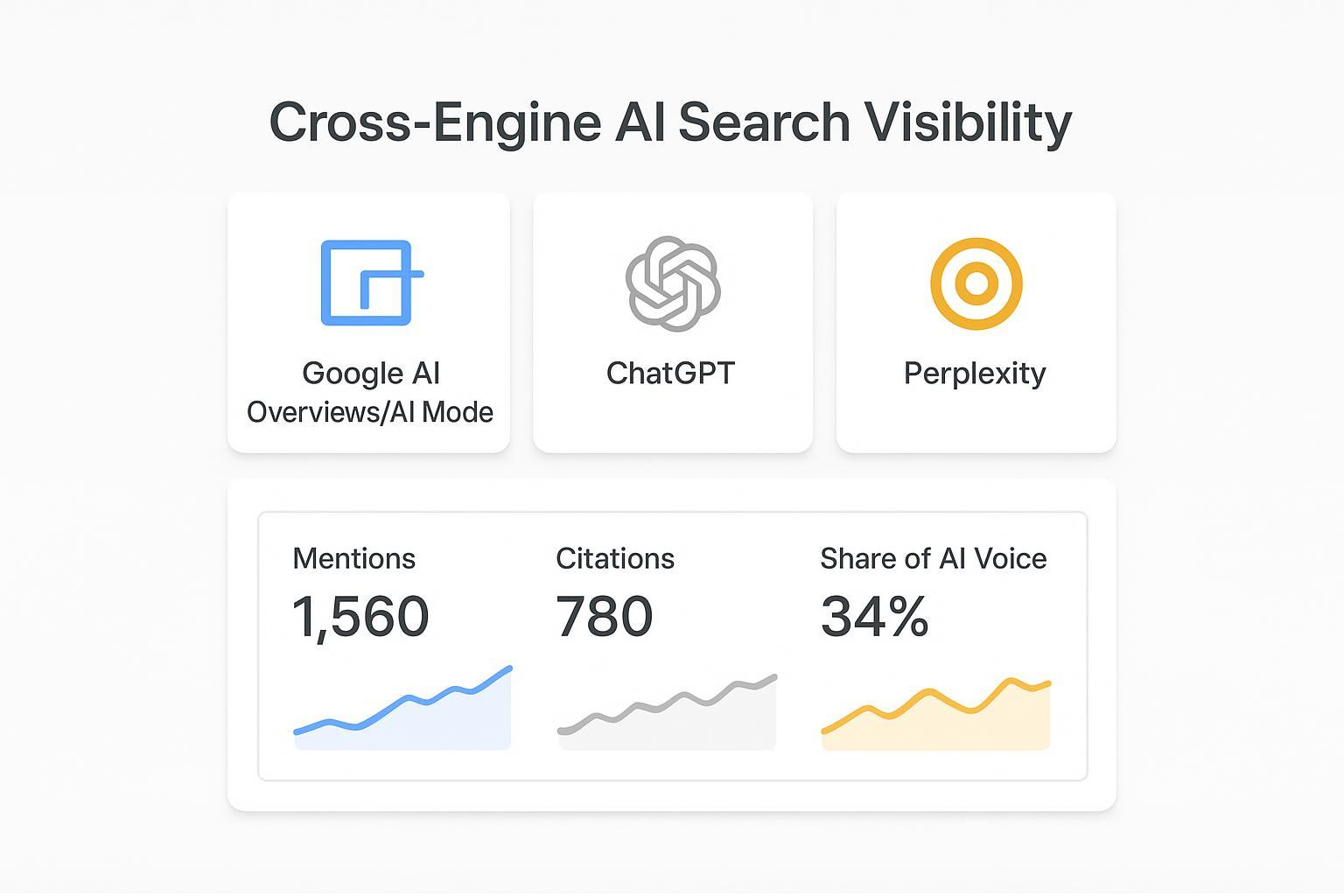

These aren’t identical surfaces, so your reporting needs to account for their differences.

Google AI Overviews and AI Mode: Throughout 2025, Google emphasized prominent links within AI surfaces and broadened availability. The company also began expanding Search and Shopping ads into AI Overviews and AI Mode. See Google’s AI Mode update (May 20, 2025).

Perplexity: Answers carry explicit, numbered citations linking to source pages, with modes like Web or Academic and a Deep Research option. Their documentation details the source panels and inline [1], [2] references; see the quickstart in Perplexity’s docs.

Microsoft Copilot: Citations can point to web results or Microsoft Graph items depending on context and permissions. A high-level overview is in Microsoft’s Copilot documentation.

ChatGPT: OpenAI doesn’t publish a universal citation rule across all contexts. Releases note ongoing changes to tools and search behavior, and attribution may require prompting. See ChatGPT release notes.

Why does this matter? Because your KPIs must normalize for: where links appear (inline, footnotes, panels), whether your official domain is the one attributed, and how prominent the recommendation is. Otherwise, you could misread visibility gains or losses.

3) The KPI framework CMOs can standardize today

A dual-lens dashboard—AI visibility plus classic outcomes—keeps your org aligned. Here are the core metrics and how to use them.

AI impressions/appearance rate: The proportion of tracked prompts/queries where your brand is referenced in an AI answer, by engine and cohort.

Brand mentions (share of answer): Count of prompts with a brand reference divided by total prompts for the cohort; segment informational vs. commercial.

Citations and link attribution: Of those mentions, how often does the answer link to your official domain or the correct page? Track misattributions separately.

Recommendation type (prominence): Classify each appearance as a neutral mention, a list inclusion, or a top-pick/primary recommendation. Weight them accordingly.

Freshness/recency: Median age of cited sources and the percentage of your cited pages updated in the last X months.

Sentiment mix: Ratio of positive/neutral/negative framing in generated answers.

Position-weighted Share of AI Voice (SOV): An engine-normalized score that reflects both frequency and prominence. A simple example:

SOV = sum(weight_position × appearance) ÷ total_opportunities, normalized across engines with different UI densities.

Example weights: top-pick = 3; list item = 1; footnote-only mention = 0.5. Adjust in your governance doc as surfaces change.
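To make the weighting concrete, here is a minimal Python sketch of a position-weighted score per engine. The labels (`top_pick`, `list_item`, `footnote`, `none`), the function name, and the choice to scale by the maximum weight so scores fall between 0 and 1 are assumptions for illustration. That scaling does not by itself correct for UI density; engine-specific adjustment factors would still need to come from your governance doc.

```python
from collections import defaultdict

# Example weights from the text above; "none" (no appearance) is an assumed label.
WEIGHTS = {"top_pick": 3.0, "list_item": 1.0, "footnote": 0.5, "none": 0.0}


def share_of_ai_voice(observations):
    """Return {engine: score in [0, 1]}.

    observations: iterable of (engine, recommendation_type) pairs,
    one pair per tracked prompt run (each run is one opportunity).
    """
    weighted = defaultdict(float)
    opportunities = defaultdict(int)
    for engine, rec_type in observations:
        weighted[engine] += WEIGHTS[rec_type]
        opportunities[engine] += 1

    # Assumed normalization: divide by the best possible score per opportunity.
    max_weight = max(WEIGHTS.values())
    return {
        engine: weighted[engine] / (opportunities[engine] * max_weight)
        for engine in opportunities
    }


runs = [
    ("perplexity", "top_pick"),
    ("perplexity", "list_item"),
    ("perplexity", "none"),
    ("perplexity", "footnote"),
]
print(share_of_ai_voice(runs)["perplexity"])  # -> 0.375
```

Keeping the weights in one dictionary means a weighting change is a one-line edit that can be recorded in the change-log.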

For measurement logic that maps to business outcomes (accuracy, relevance, personalization), see LLMO Metrics: Measuring Accuracy, Relevance, Personalization.

4) A 90–120 day operating plan your org can run now

Weeks 1–2: Baseline and governance

Build a prioritized query and prompt set spanning informational, commercial, and brand queries. Log engine, mode, date, and locale for every run. Capture mentions, citations, recommendation type, position, sentiment, and cited domains. Assign an owner in SEO or Growth Ops.

Establish a change-log convention so weighting or UI assumptions can be updated transparently.
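One way to keep the baseline log consistent is to fix a record shape before the first run. The sketch below is a hypothetical schema, not a required format: the class name `PromptRun`, the field names, and the JSON-lines output are all assumptions chosen to cover the fields listed above (engine, mode, date, locale, mentions, citations, recommendation type, position, sentiment, cited domains).

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import date
from typing import List, Optional


@dataclass
class PromptRun:
    """One observation: a single prompt executed on a single engine."""
    prompt: str
    intent: str                     # "informational", "commercial", or "brand"
    engine: str
    mode: str
    run_date: date
    locale: str
    mentioned: bool
    recommendation_type: str = "none"
    position: Optional[int] = None  # rank within a list, if the answer has one
    sentiment: str = "neutral"
    cited_domains: List[str] = field(default_factory=list)

    def to_json_line(self) -> str:
        """Serialize to one JSON line so runs can be appended to a log file."""
        record = asdict(self)
        record["run_date"] = self.run_date.isoformat()
        return json.dumps(record, sort_keys=True)


run = PromptRun(
    prompt="best crm for small agencies",
    intent="commercial",
    engine="perplexity",
    mode="web",
    run_date=date(2025, 12, 26),
    locale="en-US",
    mentioned=True,
    recommendation_type="list_item",
    position=2,
    cited_domains=["example.com"],
)
print(run.to_json_line())
```

An append-only log of such lines makes the Weeks 10–14 re-run a like-for-like comparison, provided the field names stay stable or changes are noted in the change-log.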

Weeks 3–6: Refactor and structure content

Add answer-first blocks (40–60 words), clarify Q&A headings, and upgrade schema (FAQPage, HowTo, Article, Organization/LocalBusiness). Maintain author/entity consistency and cite primary sources.

Commission one small original data asset per priority topic (survey, benchmark, or pricing table) with clear methodology and dates. Engines tend to favor authoritative, recent references.
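As an illustration of the answer-first block and FAQPage markup mentioned in this phase, the sketch below checks an answer against the 40–60 word target and emits schema.org FAQPage JSON-LD. The function names and the sample question are hypothetical; the `@type` and property names follow the schema.org vocabulary, and the output should still be validated with your usual structured-data testing tool before publishing.

```python
import json


def in_answer_range(text, low=40, high=60):
    """True if the answer block has between low and high words (inclusive)."""
    return low <= len(text.split()) <= high


def faq_jsonld(pairs):
    """Build FAQPage JSON-LD from (question, answer) pairs."""
    doc = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(doc, indent=2)


# Placeholder copy; replace with a real 40-60 word answer.
answer = " ".join(["word"] * 50)
if in_answer_range(answer):
    print(faq_jsonld([("What is answer engine optimization?", answer)]))
```

The JSON string would typically be embedded in the page inside a `script` element of type `application/ld+json`.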

Weeks 7–10: Distribute and earn authority signals

Run targeted digital PR to earn mentions in publications and communities that engines frequently cite in your vertical. Where you earn a mention, ask that it name your brand correctly and link to your primary (canonical) pages.

Package assets for reuse (quotes, charts, explainer snippets) to increase the likelihood of being cited.

Weeks 11–14: Measure deltas and iterate

Re-run the baseline. Compare mentions, link attribution, SOV, and sentiment. Note shifts by engine and intent cohort. Update your change-log and adjust weights if UI density changed.
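The comparison step can be as simple as subtracting baseline values from re-run values per engine and intent cohort. This is a minimal sketch under assumed inputs: both snapshots are dictionaries keyed by `(engine, intent)`, and the metric names (`mention_rate`, `sov`) and numbers are placeholders, not real results.

```python
def metric_deltas(baseline, rerun):
    """Return rerun minus baseline for every cohort and metric present in both.

    baseline, rerun: {(engine, intent): {metric_name: value}}
    """
    deltas = {}
    for cohort in sorted(set(baseline) & set(rerun)):
        deltas[cohort] = {
            metric: round(rerun[cohort][metric] - baseline[cohort][metric], 4)
            for metric in baseline[cohort]
            if metric in rerun[cohort]
        }
    return deltas


# Placeholder numbers for illustration only.
baseline = {("perplexity", "commercial"): {"mention_rate": 0.20, "sov": 0.10}}
rerun = {("perplexity", "commercial"): {"mention_rate": 0.35, "sov": 0.15}}

for cohort, changes in metric_deltas(baseline, rerun).items():
    print(cohort, changes)
```

Cohorts that appear in only one snapshot are skipped here on purpose; a new or dropped engine is better handled as a change-log entry than as a delta.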

For a practical baseline approach, see How to perform an AI visibility audit for your brand.

5) Practical workflow example (tool-agnostic with a neutral platform reference)

Here’s what an enterprise-ready loop looks like end to end:

Audit: Run a fixed prompt set weekly across Google AI Overviews/AI Mode, Perplexity, Copilot, and ChatGPT; store snapshots and attributions. Normalize engine-specific fields so results are comparable across engines.

Analyze: Compute appearance rate, mentions, correct link attribution, and position-weighted SOV by engine and intent. Flag misattributions and gaps.

Act: Prioritize fixes (e.g., build a 50-word answer block, add FAQ schema, publish a fresh statistic with methodology, pitch a targeted outlet). Track a hypothesis per action.

Report: Roll up monthly for executives with trendlines and a brief narrative: what changed, why it matters, and what’s next.
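The Analyze step in this loop can be sketched as a small aggregation over stored runs. Everything named here is an assumption for illustration: the record keys (`engine`, `intent`, `mentioned`, `cited_domains`), the `OFFICIAL_DOMAIN` placeholder, and the rule that an attribution is "correct" when the official domain appears among the cited domains.

```python
from collections import defaultdict

OFFICIAL_DOMAIN = "example.com"  # placeholder; set to your canonical domain


def summarize(runs):
    """Compute appearance and correct-attribution rates per (engine, intent)."""
    totals = defaultdict(lambda: {"runs": 0, "mentions": 0, "correct_links": 0})
    for run in runs:
        bucket = totals[(run["engine"], run["intent"])]
        bucket["runs"] += 1
        if run["mentioned"]:
            bucket["mentions"] += 1
            if OFFICIAL_DOMAIN in run["cited_domains"]:
                bucket["correct_links"] += 1

    report = {}
    for cohort, t in totals.items():
        report[cohort] = {
            # Share of tracked prompts where the brand was referenced.
            "appearance_rate": t["mentions"] / t["runs"],
            # Of those mentions, share that cite the official domain.
            "attribution_rate": (
                t["correct_links"] / t["mentions"] if t["mentions"] else None
            ),
        }
    return report


sample_runs = [
    {"engine": "perplexity", "intent": "commercial", "mentioned": True,
     "cited_domains": ["example.com"]},
    {"engine": "perplexity", "intent": "commercial", "mentioned": True,
     "cited_domains": ["reseller.example.net"]},
    {"engine": "perplexity", "intent": "commercial", "mentioned": False,
     "cited_domains": []},
    {"engine": "perplexity", "intent": "commercial", "mentioned": False,
     "cited_domains": []},
]
print(summarize(sample_runs))
```

Mentions that cite some other domain are the misattributions to flag; listing them separately gives the Act step a concrete fix queue.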

A cross-engine platform can reduce manual effort here. For example, Geneo supports multi-engine monitoring and competitive benchmarking, and offers white-label reporting for agencies. Disclosure: Geneo is our product. For a capabilities overview suited to this workflow, see Geneo Review 2025.

6) Risk management: Don’t chase one engine; keep evidence fresh; keep a change-log

Avoid single-engine optimization. Surfaces change quickly—AI Overviews’ appearance rate and intent mix shifted materially during 2025. See the late-2025 summary in Search Engine Land. Balance investment across engines where your buyers search.

Treat policies and UI as volatile. Google’s AI Mode expanded globally across 2025, and ads began rolling into AI Overviews and AI Mode. Re-verify monthly against Google’s AI Mode blog.

Maintain a visible mini change-log tied to your reporting deck. Document data refreshes, model/UI shifts, and weighting changes. Who owns it? Usually the Head of SEO with a data partner.

7) What to do next

Stand up your dual-lens dashboard (AI visibility + classic outcomes). Assign ownership and a monthly executive review.

Run a 90–120 day sprint and commit to publishing one original data asset per priority topic.

If you want to consolidate cross-engine monitoring and executive/agency reporting, consider using Geneo for AEO/GEO measurement and white-label dashboards. See the platform at geneo.app.