2025 Best Practices: Build Content Workflows for Human & AI Success

Discover actionable 2025 best practices to create content workflows that engage humans and optimize AI analyzer citations. Includes technical SEO, compliance, and real-world governance.

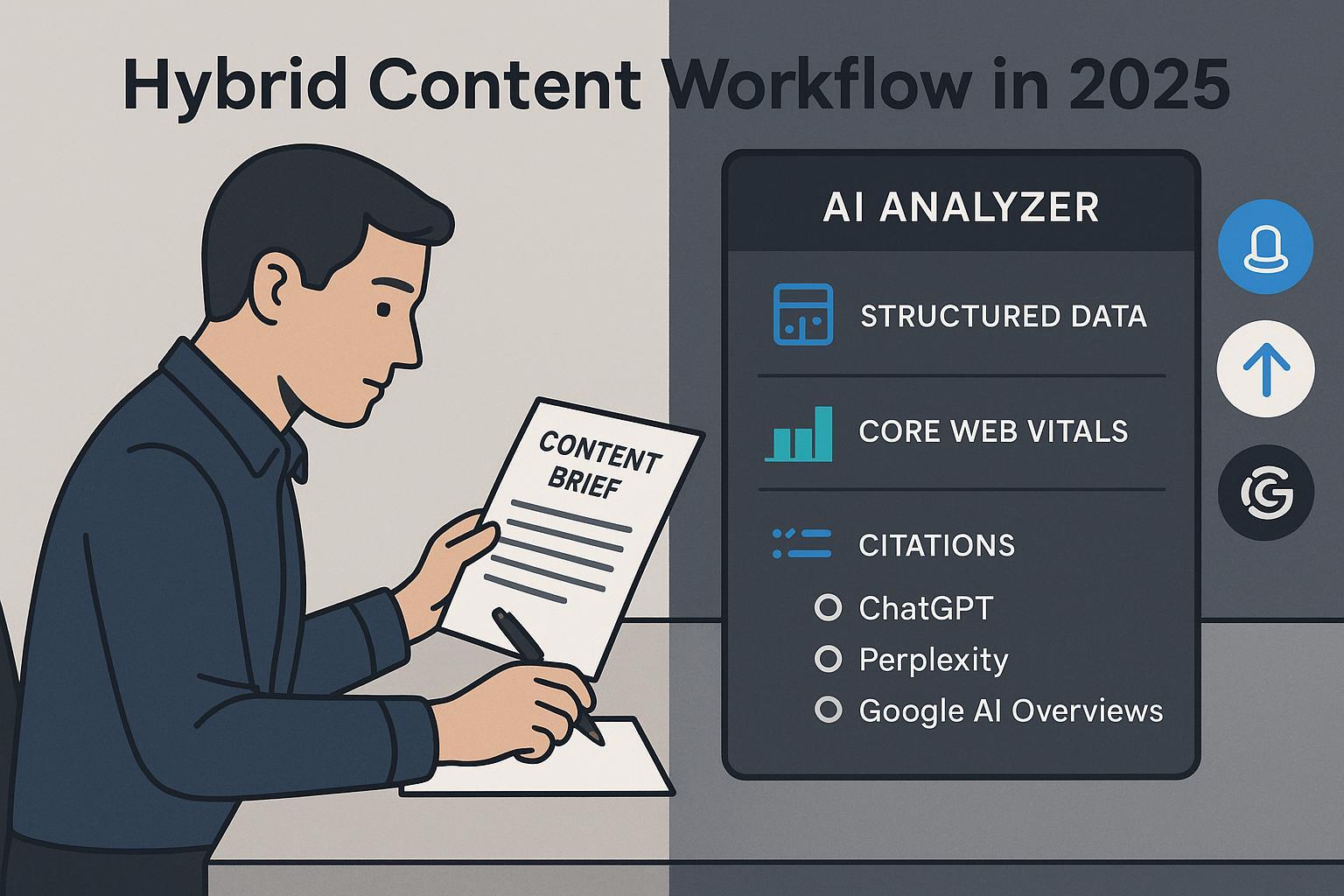

Why a hybrid workflow is now non‑negotiable

In 2025, the content that wins does two things at once: it delights human readers and gets reliably cited by AI systems like ChatGPT, Perplexity, and Google’s AI features. Google continues to emphasize helpfulness, clarity, and technical soundness for AI surfaces. Its 2025 documentation on AI features and your website states that eligibility flows from high‑quality content and robust technical foundations, not tricks or special tags (Google Search Central, 2025). Google’s May 2025 update further underscores that AI Mode appears on complex queries and links back to sources (Google Blog AI in Search update, 2025).

At the same time, accessibility, compliance, and performance standards have tightened. WCAG 2.2 adds concrete focus and input requirements that content teams must bake into their process (W3C WCAG 2.2 Recommendation, 2023–2024). The FTC’s 2024 final rule on deceptive reviews and endorsements brings explicit disclosure and authenticity expectations into marketing operations (FTC final rule on fake reviews, 2024). On the performance side, INP replaced FID as a Core Web Vitals metric in 2024, and the accepted “good” thresholds remain tight in 2025 (web.dev INP explainer, 2024–2025).

The implication isn’t “do everything.” It’s to design a hybrid workflow where AI boosts speed and coverage, and humans assert judgment, originality, and compliance. Below is a battle‑tested, seven‑phase flow we’ve used across enterprise and mid‑market teams.

The 7‑phase workflow that consistently works in 2025

Think of this as a production line with clear roles, gates, and artifacts. Most teams ship faster and with higher quality by formalizing SLAs like: drafting ≤5 days, review ≤2 days, compliance ≤2 days.
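To make the SLA idea concrete, here is a minimal sketch of a check that flags stages running over the limits quoted above. The stage names, the `sla_breaches` helper, and the use of calendar days (rather than business days) are assumptions for illustration, not part of any particular tool.

```python
from datetime import date

# SLA limits in days, taken from the example targets above.
SLA_DAYS = {"drafting": 5, "review": 2, "compliance": 2}

def sla_breaches(stages):
    """Return the stage names whose elapsed calendar days exceed the SLA.

    `stages` maps a stage name to a (start, end) pair of dates.
    """
    breaches = []
    for name, (start, end) in stages.items():
        elapsed = (end - start).days
        if elapsed > SLA_DAYS[name]:
            breaches.append(name)
    return breaches

# Hypothetical timeline for one article.
example = {
    "drafting": (date(2025, 3, 3), date(2025, 3, 7)),      # 4 days
    "review": (date(2025, 3, 7), date(2025, 3, 10)),       # 3 days
    "compliance": (date(2025, 3, 10), date(2025, 3, 12)),  # 2 days
}
print(sla_breaches(example))  # ['review']
```

A team that counts business days would swap the elapsed-days line for its own calendar logic.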

1) Strategy and briefing

- Inputs: audience jobs‑to‑be‑done, intent clusters, topical map, risk/compliance constraints, and your E‑E‑A‑T plan (which experts, quotes, proof points, and credentials you’ll include). Remember: E‑E‑A‑T informs quality assessments; it’s not a direct ranking factor per Google’s Quality Rater Guidelines (2025), but it guides what “helpful” looks like in practice.

- Outputs: a rigorous brief that sets angle, POV, primary sources, target questions to answer, extractable facts (numbers/dates), and success metrics.

- Roles: strategist (owns the brief), SME (experience and examples), editor (narrative and cohesion), compliance partner (disclosures, claims standards).

- Tips that matter:

- Define “AI visibility” goals (e.g., be cited in Perplexity and Google AI mode for 10 priority queries this quarter) alongside human KPIs (scroll depth, conversion proxies).

- Establish a source credibility ladder: official docs/regulators > primary studies > recognized expert orgs > reputable media.
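The credibility ladder can be encoded so that research tooling sorts candidate sources consistently. The sketch below assumes four tier labels of our own choosing that mirror the ladder above; the source titles in the example are placeholders.

```python
# Tiers in descending credibility, mirroring the ladder above.
# The label strings are hypothetical; use whatever your team agrees on.
LADDER = ["official_or_regulator", "primary_study", "expert_org", "reputable_media"]

def rank_sources(sources):
    """Sort (title, tier) pairs from most to least credible.

    sorted() is stable, so sources within the same tier keep their input order.
    """
    return sorted(sources, key=lambda s: LADDER.index(s[1]))

candidates = [
    ("Trade press explainer", "reputable_media"),
    ("Regulator final rule", "official_or_regulator"),
    ("Industry survey report", "primary_study"),
]
for title, tier in rank_sources(candidates):
    print(tier, "-", title)
```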

2) Research and source curation

- AI‑assist the collection of primary sources, but have humans vet authority and resolve conflicts.

- Build an annotated bibliography with the key claim, date, and what you’ll cite. Keep a conflict log where sources disagree.

- Target extractable facts that AI systems can lift cleanly: definitions, thresholds, dates, and stats—each with a canonical source. For example, Core Web Vitals “good” targets (LCP ≤2.5s, INP ≤200ms, CLS ≤0.1) are summarized by Google’s developer materials in 2025 (web.dev vitals hub).
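One way to keep the annotated bibliography and conflict log in sync is to store each claim as a record and derive the conflicts from the data. This is a sketch under assumptions: the `Entry` fields and the claim key are invented for illustration, and the second source in the example is deliberately hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Entry:
    claim: str      # short key for the fact, e.g. "inp_good_threshold_ms"
    value: str      # the value this source states
    publisher: str
    year: int

def conflict_log(entries):
    """Return {claim: [entries]} for claims where sources state different values."""
    by_claim = defaultdict(list)
    for e in entries:
        by_claim[e.claim].append(e)
    return {c: es for c, es in by_claim.items() if len({e.value for e in es}) > 1}

bibliography = [
    Entry("inp_good_threshold_ms", "200", "web.dev", 2025),
    Entry("inp_good_threshold_ms", "250", "Hypothetical roundup blog", 2024),
    Entry("cls_good_threshold", "0.1", "web.dev", 2025),
]
for claim, es in conflict_log(bibliography).items():
    print(claim, [(e.publisher, e.value) for e in es])
```

A human still resolves each conflict; the log only surfaces where sources disagree.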

3) Drafting and expert review

- Let AI draft outlines, suggest section scaffolds, and generate tables/checklists. Then have SMEs inject lived experience, concrete examples, and failure modes.

- Editor responsibilities:

- Trim redundancy; add transitions; maintain a consistent, human voice.

- Ensure citations wrap the fact phrase and include publisher/year in proximity.

- Confirm the “extractability” pattern: short paragraphs, descriptive headings, bolded key stats, and a concise summary near the top.

- E‑E‑A‑T proofing checklist: author bylines with credentials, expert quotes or interviews, primary-source citations, and a short “methods” note when synthesizing.

4) Technical packaging and accessibility

- Structured data: implement JSON‑LD for relevant types (Article, Organization, Product, VideoObject, and FAQPage if you include FAQs; QAPage is meant for pages where users submit answers to a single question). Google’s 2025 article structured data guidance remains the reference for properties and eligibility (Google Article structured data, 2025).

- Information architecture: single H1; logical H2/H3 hierarchy; scannable lists; descriptive alt text; strong summaries and FAQs.

- Performance: budget for Core Web Vitals; audit scripts and images; focus especially on INP, which measures how quickly the page responds to user interactions, per Google’s guidance (web.dev INP explainer, 2024–2025).

- Freshness and change signaling: last‑updated stamps, changelogs for major edits, and clean canonicalization.

- Accessibility (WCAG 2.2): visible focus indicators; keyboard navigability; predictable interaction patterns (W3C WCAG 2.2).
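As an illustration of the structured data step, the sketch below renders a minimal Article JSON-LD block from a template function. The helper name and the example values are assumptions; the properties shown (`headline`, `author`, `datePublished`, `dateModified`) are standard schema.org Article properties, but check Google's current documentation for which ones matter for your page type.

```python
import json

def article_jsonld(headline, author_name, date_published, date_modified):
    """Return a <script> tag containing minimal Article JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": [{"@type": "Person", "name": author_name}],
        "datePublished": date_published,  # ISO 8601 date strings
        "dateModified": date_modified,
    }
    # Escape "<" so a stray "</script>" in a field cannot close the tag early.
    body = json.dumps(data, indent=2).replace("<", "\\u003c")
    return '<script type="application/ld+json">\n' + body + "\n</script>"

# Placeholder values for illustration only.
snippet = article_jsonld(
    "Build Content Workflows for Human & AI Success",
    "Jane Example",
    "2025-03-01",
    "2025-04-15",
)
print(snippet)
```

Generating the markup from the same fields that drive the visible byline and last-updated stamp helps keep the two consistent.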

5) Compliance gate

- Add required disclosures for endorsements, affiliate relationships, and AI assistance. The FTC’s 2024 rule bans deceptive or AI‑generated fake reviews; double‑check your reviews/UGC process and ensure clear, proximate disclosures in relevant content (FTC rule on fake reviews, 2024).

- For EU audiences and regulated contexts, apply AI transparency statements and keep an approval audit log in line with the EU AI Act’s staged rollout (2024–2026) using the European Commission overview as a baseline reference (European Commission AI Act overview, 2024).

- Verify copyright and media rights for any third‑party assets.

6) Publication and distribution

- Publish primary content; repurpose into Q&A snippets, checklists, and short‑form updates for social/email.

- Submit updated sitemaps; annotate last‑updated in the UI; and use IndexNow to notify participating search engines such as Bing of changes for faster discovery, per Microsoft’s guidance (2024–2025) on when and how to use it (Bing Webmaster Blog on IndexNow, 2024).

- Add Open Graph/Twitter cards, canonical tags, and meta descriptions tuned for CTR.
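For the IndexNow step, a submission is a small JSON POST. The sketch below only builds the request so it can be inspected; the host, key, and URLs are placeholders, and it assumes the key file is hosted at the site root as `<key>.txt`. Confirm the endpoint and field names against the IndexNow documentation before relying on it.

```python
import json
import urllib.request

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"

def build_indexnow_request(host, key, urls):
    """Build (but do not send) an IndexNow bulk-submission request."""
    payload = {
        "host": host,
        "key": key,
        # Assumption: the key file lives at the site root.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )

# Placeholder host, key, and URL for illustration.
req = build_indexnow_request(
    "www.example.com",
    "hypothetical-key-123",
    ["https://www.example.com/guides/content-workflows"],
)
print(req.get_method(), req.full_url)
# To actually submit: urllib.request.urlopen(req)
```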

7) Monitoring and continuous optimization

Monitor three layers weekly/biweekly:

- Search visibility: indexing, clicks, CTR by query class.

- AI visibility: how often your pages are cited or linked in AI answers for target queries and on which platforms; what the sentiment looks like; and which facts or summaries get lifted.

- Experience/engagement: scroll depth, time on page, bounce patterns; CWV pass rates.

In practice, teams centralize these signals to decide what to refresh next. A platform like Geneo can unify AI citation visibility across ChatGPT, Perplexity, and Google AI features, track sentiment of AI answers, and keep historical query snapshots so your editors can prioritize updates with the highest upside. Disclosure: We use Geneo in our own workflow.

Operationalize improvements on a tight cadence:

- 7–14 days post‑publish: iterate headlines/meta, expand concise answers/FAQs, and add missing primary citations if AI systems aren’t picking up your page.

- 30–60 days: refresh stats, add SME quotes, improve INP by trimming blocking scripts, and prune/merge thin pages.

- Quarterly: revisit topical maps, re‑assess structured data opportunities, and re‑baseline CWV budgets.

Data packaging that AIs and humans both reward

Your biggest wins usually come from how you “package” facts. Four patterns consistently help AI systems cite you while improving human comprehension:

- Declarative, scannable facts near the top

- Include a 2–4 sentence summary that states the conclusion and the key numbers/dates readers came for.

- Follow with a short FAQ that answers one question per bullet.

- Clear headings and semantic structure

- One H1, descriptive H2/H3s, and compact paragraphs. This supports both readers and AI summarizers that chunk content by headings. Google’s 2025 guidance on AI features stresses clarity and helpfulness rather than tricks (Google Search Central AI features, 2025).

- Structured data and fact containers

- Use Article and FAQ schema where the page content genuinely matches the type, but avoid over‑markup where the corresponding search features are limited. The goal is machine understanding, not just rich results. Keep properties accurate per Google’s 2025 article schema guidance (Google Article structured data, 2025).

- Freshness and provenance

- Stamp last‑updated, link to primary sources, and include author credentials. When you cite adoption trends, prefer primary surveys. For instance, McKinsey reported in 2024–2025 that generative AI adoption reached roughly the mid‑60s to low‑70s percent of organizations, with benefits but uneven EBIT impact (McKinsey State of AI, 2024/2025).

- Excessive FAQs can dilute focus and rarely earn additional rich results; include only what clarifies ambiguous queries.

- Schema coverage is valuable, but prioritize accuracy and consistency over breadth; incorrect properties can erode trust with both AI systems and readers.

KPIs, dashboards, and ROI: measuring what matters

Define a KPI model before you change the workflow. Track deltas after each iteration, not just absolute numbers.

- Cycle time: days from brief to publish. Target a 30–50% reduction with AI‑assisted drafting and templating.

- Velocity: pieces per month per FTE, normalized by quality (engagement and AI citation rates).

- AI visibility: percent of target queries where your page is cited or linked in AI responses; growth per quarter; platform mix.

- Sentiment: net sentiment of AI answers mentioning your brand; aim for quarter‑over‑quarter improvement.

- Experience: percent of pageviews on URLs that meet “good” Core Web Vitals thresholds (LCP ≤2.5s, INP ≤200ms, CLS ≤0.1).
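Two of these KPIs reduce to simple ratios. The sketch below computes the AI citation rate over a target query set and the share of pageviews on URLs that meet the "good" thresholds listed above. The data shapes and field names are assumptions, and the per-URL metric values are presumed to be 75th-percentile field measurements pulled from your own analytics.

```python
# "Good" thresholds as listed above.
GOOD = {"lcp_s": 2.5, "inp_ms": 200, "cls": 0.1}

def is_good(page):
    """True when every Core Web Vitals metric is at or under its threshold."""
    return all(page[metric] <= limit for metric, limit in GOOD.items())

def pct_pageviews_good(pages):
    """Percent of pageviews that landed on URLs passing all three metrics."""
    total = sum(p["pageviews"] for p in pages)
    good = sum(p["pageviews"] for p in pages if is_good(p))
    return 100.0 * good / total if total else 0.0

def citation_rate(cited_by_query):
    """Percent of target queries where the page was cited; input is {query: bool}."""
    if not cited_by_query:
        return 0.0
    return 100.0 * sum(cited_by_query.values()) / len(cited_by_query)

# Hypothetical sample data.
pages = [
    {"url": "/a", "pageviews": 600, "lcp_s": 2.1, "inp_ms": 180, "cls": 0.05},
    {"url": "/b", "pageviews": 400, "lcp_s": 2.4, "inp_ms": 260, "cls": 0.02},
]
queries = {"q1": True, "q2": False, "q3": False, "q4": False}

print(pct_pageviews_good(pages))  # 60.0
print(citation_rate(queries))     # 25.0
```

Tracking the change in these numbers after each iteration matters more than the absolute values.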

Suggested dashboard tiles and cadence:

- Weekly: AI citations by platform; top pages cited; missing‑citation opportunities; sentiment swings.

- Biweekly: CWV pass rates; slowest interactions (INP) by template; quick fixes.

- Monthly: Topic cluster health; pages to prune/merge; schema validation errors; compliance disclosures audit.

Evidence hygiene:

- Prefer primary sources for claims: Google documentation for AI features and structured data, W3C for WCAG, web.dev for vitals, Bing blog for IndexNow, and McKinsey for adoption stats.

- Where vendor case studies suggest outsized gains, replicate via your own A/B pilots over 8–12 weeks on a defined cluster before scaling.

Advanced techniques most teams miss

- Prompt engineering for research and drafting

- Research prompts: “List 8–10 primary, canonical sources for [topic], prioritizing official docs and regulators from 2023–2025; return with publisher, year, and the specific fact each supports.”

- Drafting prompts: “Produce a section outline that prioritizes definitions, thresholds, dates, and examples. Insert [3] call‑outs where expert quotes should land. Flag where structured data fits.”

- Fact‑check prompts: “Given these two conflicting sources, summarize the disagreement and recommend the canonical reference, with a justification.”
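Teams that reuse these prompts often store them as templates so the wording stays consistent across writers. A minimal sketch, assuming plain string templates with the placeholder names `topic` and `n_callouts` (both our own naming), and no particular AI vendor or SDK:

```python
# Prompt wording taken from the examples above; placeholders are assumptions.
RESEARCH = (
    "List 8-10 primary, canonical sources for {topic}, prioritizing official docs "
    "and regulators from 2023-2025; return with publisher, year, and the specific "
    "fact each supports."
)
DRAFTING = (
    "Produce a section outline that prioritizes definitions, thresholds, dates, and "
    "examples. Insert {n_callouts} call-outs where expert quotes should land. "
    "Flag where structured data fits."
)

def research_prompt(topic):
    """Fill the research template for one topic."""
    return RESEARCH.format(topic=topic)

def drafting_prompt(n_callouts=3):
    """Fill the drafting template with the number of expert call-outs."""
    return DRAFTING.format(n_callouts=n_callouts)

print(research_prompt("Core Web Vitals"))
print(drafting_prompt())
```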

- Governance and change management

- Use a RACI for each phase; publish SLAs and hold weekly standups focused on blockers.

- Maintain an approvals log: who reviewed for accuracy, accessibility, and compliance; date/time; changes requested.

- Train editors on disclosure language and accessibility patterns; rotate “accessibility champions” per squad.
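The approvals log can double as a publish gate. The sketch below models one log entry per review and reports which gates still lack a clean approval. The record fields follow the list above; the gate names, the `missing_gates` helper, and the rule that an entry with open change requests does not count as approved are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime

GATES = ("accuracy", "accessibility", "compliance")

@dataclass
class Approval:
    gate: str
    reviewer: str
    reviewed_at: datetime
    changes_requested: str = ""  # empty string means approved as-is

def missing_gates(log):
    """Return gates with no clean approval (none logged, or changes still open)."""
    approved = {a.gate for a in log if not a.changes_requested}
    return [g for g in GATES if g not in approved]

# Hypothetical log for one article.
log = [
    Approval("accuracy", "sme@example.com", datetime(2025, 3, 10, 9, 30)),
    Approval("accessibility", "a11y@example.com", datetime(2025, 3, 10, 14, 0),
             changes_requested="Add alt text to chart"),
]
print(missing_gates(log))  # ['accessibility', 'compliance']
```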

- Multi‑brand scaling

- Centralize your topical maps and style system, but localize examples, quotes, and compliance rules per brand or region.

- Create a shared “extractable facts” library (numbers, definitions, thresholds) with source/date to prevent drift.

-

- Add “In brief” boxes with 3–4 bullet facts; use tight definitions with years and sources.

- Where appropriate, add FAQ sections (marked up as FAQPage) that mirror the top intents you’re targeting. Keep answers 40–80 words for liftability.
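The 40-80 word guideline is easy to lint before publishing. A minimal sketch, assuming whitespace-separated word counting and a hypothetical `liftable` helper:

```python
def liftable(answer, lo=40, hi=80):
    """True when the answer's word count falls within the target range (inclusive)."""
    return lo <= len(answer.split()) <= hi

def flag_answers(faq):
    """Return the questions whose answers fall outside the target length."""
    return [q for q, a in faq.items() if not liftable(a)]

# Hypothetical FAQ content: one answer too short, one within range.
faq = {
    "What is INP?": "A responsiveness metric.",
    "What counts as a good INP?": " ".join(["word"] * 50),
}
print(flag_answers(faq))  # ['What is INP?']
```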

Pitfalls and how to avoid them

- Over‑producing thin pages: Consolidate overlapping pieces; prioritize depth and originality over volume.

- Weak sourcing: If a stat originates from a secondary roundup, hunt for its primary source or drop the claim.

- Accessibility as an afterthought: Retrofits are costlier. Build WCAG 2.2 checks into your drafting and QA templates.

- Compliance misses: Disclosures must be clear and proximate. Keep an audit trail for endorsements and AI assistance per FTC guidance (2024) and consider EU AI transparency when relevant.

- Technical debt: Track INP regressions after each design change; set performance budgets for scripts/images.

A 60‑day rollout plan you can actually run

- Week 1

- Define target query clusters, human KPIs, and AI visibility goals.

- Draft the production RACI and SLAs. Set up dashboards for AI citations, sentiment, and CWV.

- Weeks 2–3

- Build briefs for 4–6 core articles. Curate primary sources and quotes. Prepare schema templates.

- Align compliance and accessibility checklists; finalize disclosure language.

- Weeks 4–5

- Draft with AI assistance; run SME and editorial passes.

- Package technically (schema, meta, alt text); accessibility QA; publish two pieces.

- Submit sitemaps; trigger IndexNow notifications; annotate last‑updated.

- Weeks 6–8

- Publish remaining pieces; repurpose into FAQs and snippets.

- Monitor AI citations and engagement weekly. Refresh headlines and FAQs for underperformers.

- Present findings and decide whether to scale or adjust prompts/templates.

Further reading and internal enablement

For a deeper dive into monitoring AI visibility and sentiment across engines, see this curated set of tactics and case write‑ups in our AI visibility monitoring best practices. For vendor‑landscape due diligence, review this concise comparative analysis of AI brand monitoring tools, then design your stack around integrations and governance, not just features.

The bottom line

Hybrid workflows in 2025 aren’t about choosing humans or AI—they’re about designing a system where each does what it’s best at. Put extractable facts up front, anchor them to canonical sources, package them for machines and humans, and measure everything from cycle time to AI citation rate and sentiment. Tighten governance and accessibility, notify search engines promptly, and iterate relentlessly.

If you want a single place to track AI citations, sentiment, and query history as you iterate, consider adding Geneo to your workflow.

Sources cited inline

- Google’s guidance on AI features and eligibility (2025) anchors the packaging approach.

- Google’s 2025 AI mode update explains linking back to sources on complex queries.

- W3C WCAG 2.2 provides accessibility gates.

- web.dev covers INP and broader Core Web Vitals thresholds.

- FTC’s 2024 rule and guidance shape disclosure and reviews policy.

- Bing Webmaster Blog outlines IndexNow usage for faster change signaling.

- McKinsey’s 2024/2025 State of AI informs adoption context and ROI expectations.

- Perplexity’s publisher program confirms clickable citations as a discovery surface (Perplexity Publishers’ Program, 2024–2025).