How to Optimize for Perplexity Results (2025) – Best Practices

Unlock expert Perplexity optimization with 2025's latest best practices: AI search ranking, technical SEO, content strategy, and entity authority insights for professionals.

Perplexity has shifted how people discover answers: it reads widely, cites sources prominently, and rewards content that is fresh, well-structured, and trustworthy. The stakes are growing fast: Perplexity handled about 780 million queries in May 2025, according to CEO Aravind Srinivas, a figure multiple outlets reported as part of its rapid month-over-month growth. TechCrunch quoted the CEO’s numbers in its June 2025 coverage: Perplexity processed 780M queries last month.

Perplexity doesn’t publish official ranking weights, but practitioners have observed consistent patterns in what gets cited. This guide distills those patterns, clearly separating observation from official statements, and turns them into a practical playbook you can put to work today.

How Perplexity Chooses Sources (What We Know vs. What We Observe)

What’s official: Perplexity positions itself as a citation-forward answer engine. Its Deep Research feature runs many searches, reads hundreds of sources, and grounds summaries with links to what it deems the best evidence. You can read the platform’s own explanation in the announcement: Perplexity Deep Research (2025). Perplexity also offers a Search API with configurable retrieval parameters and a continuously refreshed index, documented in the Perplexity Search API guide (2025).

What’s observed by marketers and technical SEOs in 2024–2025:

- Freshness and time decay matter. Updated pages (with visible “last updated” and meaningful changes) tend to surface more often, especially on time-sensitive queries.

- Authority and trust help. Reputable domains and expert-authored content are commonly cited; academic/public-interest sources appear prominently in Academic Focus.

- Clear, answer-first structure wins. Pages that directly answer the question, use descriptive headings, lists, tables, and include citations are easier for Perplexity to extract.

- Entities and semantic relevance count. Strong alignment between your page’s entities (Organization, Person, Product) and corroborating hubs (Wikipedia/Wikidata) supports retrieval and confidence.

These are practitioner patterns, not official weights. Still, when combined with sound technical SEO, they appear to increase the odds your page is chosen as a source.

Build Pages That Get Cited: Structure, Q&A, and Evidence

Perplexity prefers pages that “look like answers.” Think inverted pyramid: lead with the concise answer, then expand with details, examples, and credible references. Use semantic HTML that’s easy to parse. Mix short and long sentences for readability, avoid buzzwords, and make the value obvious in the first screenful.

A compact way to plan your next page is to map structure to the expected effect on Perplexity citations:

| Tactic | Why it helps Perplexity cite you |
|---|---|
| Clear H2/H3 questions mirroring user intent | Improves extraction and relevance alignment |
| Summary/answer up top (2–4 sentences) | Increases chances of being used as the quoted snippet |
| Tables for comparisons and lists for steps | Easier for LLMs to lift structured facts |
| Inline citations to authoritative sources | Signals trust; helps Deep Research corroborate claims |
| FAQ section for related intents | Captures long-tail prompts and expansions |

Example: FAQ schema JSON-LD (add to pages with real Q&A)

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "How does Perplexity choose sources?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Practitioners observe Perplexity rewards freshness, authority, clear structure, and entity alignment. Perplexity has not published official weights."
      }
    },
    {
      "@type": "Question",
      "name": "What technical SEO helps with Perplexity?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Accurate metadata, schema markup, clean canonicals, fast pages, mobile-friendly design, and clear sitemaps support retrieval and synthesis."
      }
    }
  ]
}
</script>

Cite primary sources for key claims and maintain a consistent style guide for headings and tables. For foundational technical practices that support any AI search engine, Google’s living documentation remains a solid baseline: Google’s SEO Starter Guide.

Freshness and Time-Decay Management

Perplexity’s index refreshes often. Content that signals recent, substantive updates tends to perform better. This isn’t just changing a date stamp—show the work by listing what changed and why, aligning updates to meaningful improvements like new data, updated screenshots, or clarified steps. Set a realistic refresh cadence by page type (e.g., newsy pages monthly; evergreen explainers quarterly; technical docs as features ship) and sync those updates with your product and content calendar. Keep sitemaps clean, include only canonical URLs, maintain accurate

Think of freshness like gravity: without periodic boosts, your content’s citation likelihood slowly falls.
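
One way to keep a refresh cadence honest is to check it mechanically. The sketch below is a minimal illustration, not a prescribed tool: the page records, the `REFRESH_DAYS` mapping, and the 30/90-day thresholds are assumptions loosely based on the monthly and quarterly cadences mentioned above. Pages without a time-based cadence (for example, technical docs refreshed as features ship) are skipped.

```python
from datetime import date, timedelta

# Hypothetical cadences in days: newsy pages monthly, evergreen quarterly.
REFRESH_DAYS = {"news": 30, "evergreen": 90}


def pages_due_for_refresh(pages, today):
    """Return URLs whose last substantive update is older than their cadence."""
    due = []
    for page in pages:
        max_age = REFRESH_DAYS.get(page["type"])
        if max_age is None:
            continue  # no time-based cadence, e.g. docs updated as features ship
        if today - page["last_updated"] > timedelta(days=max_age):
            due.append(page["url"])
    return due


# Illustrative inventory; URLs and dates are made up.
pages = [
    {"url": "/news/ai-search-update", "type": "news", "last_updated": date(2025, 5, 1)},
    {"url": "/guides/perplexity-basics", "type": "evergreen", "last_updated": date(2025, 5, 1)},
    {"url": "/docs/api", "type": "docs", "last_updated": date(2024, 1, 1)},
]

print(pages_due_for_refresh(pages, today=date(2025, 6, 15)))
```

The `last_updated` field should track substantive changes only, so that the check matches what readers see in the visible change log.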

Entity Clarity and Authority Signals

Perplexity and similar systems perform better when your entities are unambiguous and well connected. Use Organization, Person, and Product schema with sameAs links to authoritative profiles (official site, social, Wikipedia/Wikidata where applicable), and document relationships between entities (e.g., founder, brand, manufacturer). Support these with topic clusters (multiple interlinked articles around the same theme) and cite credible third-party research when making claims. If your team is new to AI visibility, this primer outlines the KPIs and definitions we reference throughout: AI visibility and brand exposure in AI search. For measurement design, see LLMO metrics: measuring accuracy, relevance, and personalization.
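
To make the entity relationships concrete, here is a hedged sketch that builds Organization and Product JSON-LD in Python and wraps each in a script tag. Every name, URL, and identifier is a placeholder, and `to_jsonld_script` is a hypothetical helper. The relationships use the schema.org properties `founder` (on Organization), `brand`, and `manufacturer` (on Product); the Product points back to the Organization through a shared `@id`.

```python
import json

# All names and URLs below are placeholders, not real profiles.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "@id": "https://www.example.com/#org",
    "name": "Example Co",
    "url": "https://www.example.com/",
    "sameAs": [
        "https://www.wikidata.org/wiki/Q0000000",
        "https://www.linkedin.com/company/example-co",
    ],
    "founder": {
        "@type": "Person",
        "name": "Jane Doe",
        "sameAs": ["https://www.example.com/about/jane-doe"],
    },
}

product = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example Widget",
    "brand": {"@type": "Brand", "name": "Example"},
    # Reference the Organization node by its @id instead of repeating it.
    "manufacturer": {"@id": "https://www.example.com/#org"},
}


def to_jsonld_script(data):
    """Wrap a dict in the script tag used to embed JSON-LD in HTML."""
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(to_jsonld_script(organization))
print(to_jsonld_script(product))
```

Only list sameAs profiles that genuinely describe the same entity; a wrong link undermines the disambiguation it is meant to provide.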

Technical Implementation That LLMs Parse Reliably

Most Perplexity-friendly pages also pass standard technical SEO health checks:

- Schema everywhere it fits the content model: Article, FAQPage/QAPage, HowTo, Organization, Person. Always include author, datePublished, and dateModified.

- Canonical discipline: a single, self-referencing canonical on the preferred URL; ensure canonicals match what’s in your XML sitemaps; avoid listing noindex pages in sitemaps.

- Performance and UX: fast, secure (HTTPS), mobile-friendly layouts. Clean typography and spacing help answer extraction.

- Crawl/index hygiene: monitor robots.txt, meta robots, and server logs. Keep orphan pages and parameter duplicates in check.
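
The canonical and sitemap checks above can be sketched with the Python standard library alone. This is an illustrative audit, not a full crawler: the sitemap and page HTML are inline strings, `HeadSignals` and `audit` are hypothetical names, and a real run would fetch each URL instead of reading from a dict.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


class HeadSignals(HTMLParser):
    """Collect the canonical URL and meta robots value from a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = ""

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.robots = (attrs.get("content") or "").lower()


def sitemap_urls(sitemap_xml):
    """Return every <loc> value listed in a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def audit(sitemap_xml, pages):
    """pages maps URL -> HTML. Returns a list of (url, problem) tuples."""
    problems = []
    for url in sitemap_urls(sitemap_xml):
        parser = HeadSignals()
        parser.feed(pages.get(url, ""))
        if parser.canonical != url:
            problems.append((url, "canonical is missing or points elsewhere"))
        if "noindex" in parser.robots:
            problems.append((url, "noindex page listed in sitemap"))
    return problems


# Placeholder sitemap and pages for illustration.
sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/guide</loc></url>
  <url><loc>https://www.example.com/old</loc></url>
</urlset>"""

pages = {
    "https://www.example.com/guide":
        '<head><link rel="canonical" href="https://www.example.com/guide"></head>',
    "https://www.example.com/old":
        '<head><link rel="canonical" href="https://www.example.com/guide">'
        '<meta name="robots" content="noindex, follow"></head>',
}

for url, problem in audit(sitemap, pages):
    print(url, "->", problem)
```

In this sample the `/old` URL is flagged twice: its canonical points to another page, and it carries noindex while still being listed in the sitemap.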

If you want to go deeper into how Perplexity finds and cites sources, review their developer-facing material for search behavior and parameters: Perplexity’s Search API guide. While not an SEO rulebook, it clarifies the retrieval mechanics your content ultimately meets.

Focus Modes and Multimodal Assets

Perplexity supports Focus Modes (e.g., Web, Academic, Social, Video), which can change which sources are considered. To broaden your odds, publish academic-friendly versions (whitepapers, methodology sections, references) for topics likely to be cited in Academic Focus; use tables, step lists, and visual snippets that concisely encode facts; and, when relevant, add images with descriptive alt text and captions that restate key facts. For videos, provide transcripts and chapter markers, and link the video to a canonical page that contains the structured summary and schema. Ask yourself: if a model needed to quote just two or three lines from your page, are those lines easy to find and self-contained?

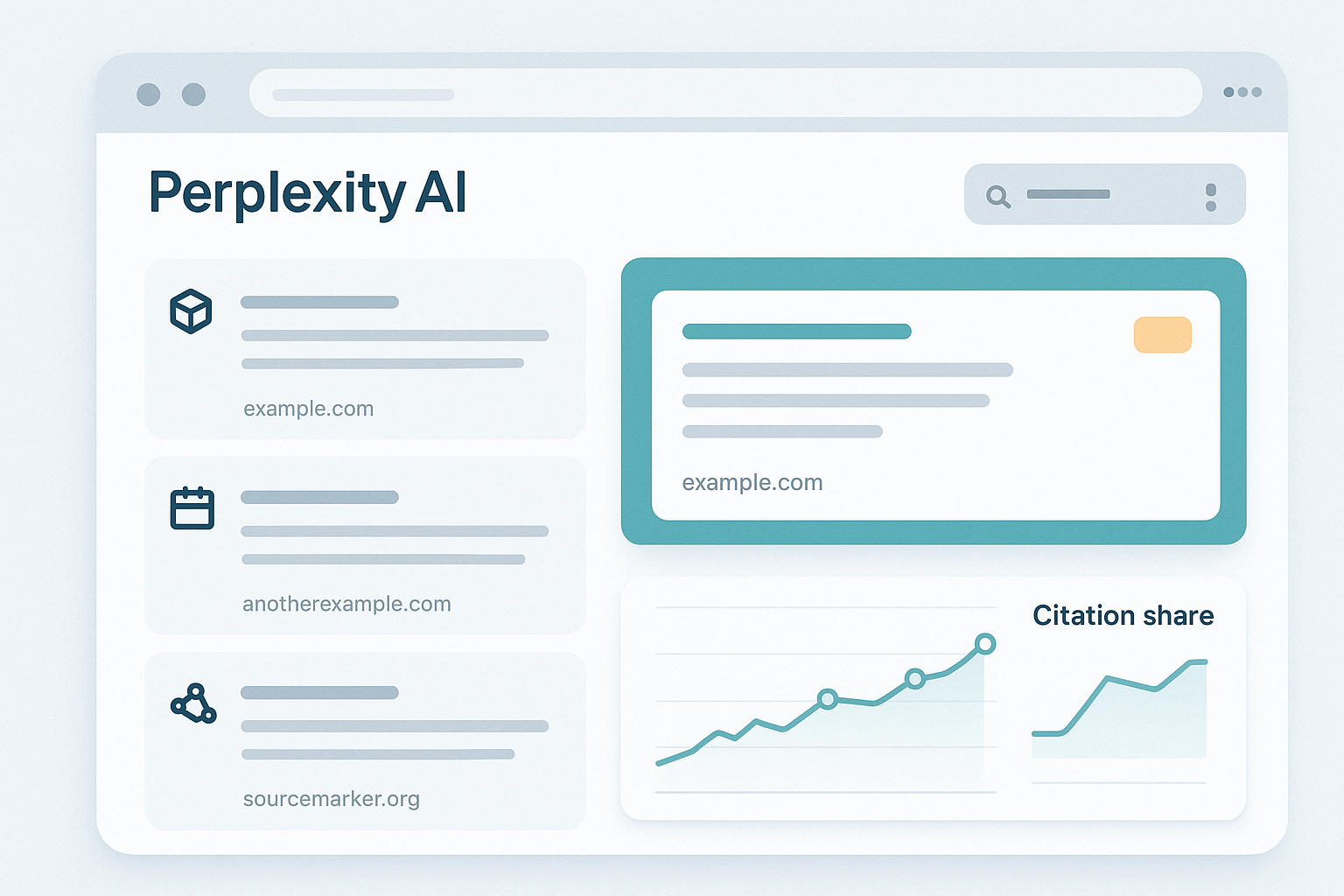

Measurement and Experimentation (with a Weekly Panel)

What you don’t measure, you can’t improve. Track Citation Share (the percentage of your tracked prompts where your domain is cited), Brand Presence (mentions in answers, even without a link), Volatility (week-over-week swings for the same prompt panel), and Referral Traffic (GA4 sessions from perplexity.ai). Run a weekly panel of 50–100 high-intent questions in your niche. Log which domains Perplexity cites, the order of citations, and whether any snippets appear to derive directly from your content. When you ship updates (schema, new tables, refreshed data), note the date and review the next two panel runs for movement.
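
The panel metrics are simple enough to compute from a plain log. The sketch below assumes each weekly run is stored as a mapping from prompt to the list of cited domains (a hypothetical format). Citation Share follows the definition above; Volatility is computed here as the share of prompts whose cited/not-cited status flipped between runs, which is one possible reading of "week-over-week swings" rather than a standard formula.

```python
def citation_share(panel_run, domain):
    """Percentage of prompts in a run whose cited domains include `domain`."""
    if not panel_run:
        return 0.0
    cited = sum(1 for domains in panel_run.values() if domain in domains)
    return 100.0 * cited / len(panel_run)


def volatility(prev_run, curr_run, domain):
    """Percentage of shared prompts whose cited/not-cited status flipped."""
    shared = prev_run.keys() & curr_run.keys()
    if not shared:
        return 0.0
    flips = sum(
        1 for prompt in shared
        if (domain in prev_run[prompt]) != (domain in curr_run[prompt])
    )
    return 100.0 * flips / len(shared)


# Illustrative panel data; prompts and domains are made up.
week_1 = {
    "how to optimize for perplexity": ["example.com", "techcrunch.com"],
    "what is entity seo": ["wikipedia.org"],
    "faq schema example": ["example.com"],
    "perplexity focus modes": ["perplexity.ai"],
}
week_2 = {
    "how to optimize for perplexity": ["techcrunch.com"],
    "what is entity seo": ["wikipedia.org", "example.com"],
    "faq schema example": ["example.com"],
    "perplexity focus modes": ["perplexity.ai"],
}

print(citation_share(week_1, "example.com"))
print(citation_share(week_2, "example.com"))
print(volatility(week_1, week_2, "example.com"))
```

In this sample Citation Share is unchanged across the two weeks while half the prompts flipped, which is why it helps to track both numbers.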

Disclosure: We build Geneo, a platform for AI search visibility monitoring. Here’s a real-world workflow example (reader utility comes first; feel free to adapt with any toolset): set up a saved panel of prompts aligned to your top themes and commercial intents, tagging each by cluster (e.g., “Perplexity optimization,” “entity SEO”). Track weekly Citation Share and Brand Presence, and trigger alerts if Citation Share drops more than 10% week-over-week for a cluster. When an alert fires, open the affected pages, refresh the lead summary, add or update a supporting table, and append an FAQ with schema. Re-run the panel after indexing to validate recovery. No single tool is required for this approach, but teams move faster when they centralize tracking and annotation alongside results.
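
The alert rule in this workflow can be expressed in a few lines with any toolset. This sketch assumes the 10% threshold is a relative drop against the previous week's Citation Share (the text does not specify relative versus percentage points), and `clusters_to_alert` is a hypothetical helper, not part of any product API.

```python
def clusters_to_alert(prev_share, curr_share, threshold_pct=10.0):
    """Return (cluster, drop_pct) pairs where Citation Share fell too far.

    The drop is relative to last week's value, which is an assumption.
    """
    alerts = []
    for cluster, prev in prev_share.items():
        curr = curr_share.get(cluster)
        if curr is None or prev <= 0:
            continue  # nothing to compare against
        drop_pct = 100.0 * (prev - curr) / prev
        if drop_pct > threshold_pct:
            alerts.append((cluster, round(drop_pct, 1)))
    return alerts


# Citation Share in percent per cluster; illustrative numbers only.
last_week = {"Perplexity optimization": 40.0, "entity SEO": 25.0}
this_week = {"Perplexity optimization": 34.0, "entity SEO": 24.0}

print(clusters_to_alert(last_week, this_week))
```

Here only the first cluster triggers an alert, since 40 to 34 is a 15% relative drop while 25 to 24 is 4%.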

Role-Based Execution in One Pass

- Content lead: Prioritize the pages that map to your revenue moments. Write answer-first, add one table, one FAQ, and link out to a primary source for any claim you wouldn’t defend in a customer meeting.

- Technical SEO: Validate schema, canonicals, sitemaps, and performance. Ensure dateModified reflects meaningful refreshes, not cosmetic edits.

- Outreach/PR: Secure citations from credible publications and expert communities. When you publish original data, pitch it with a clear methodology page that Academic Focus can trust.

A Quick Reality Check on Evidence Quality

Unlike classic SEO, independently audited, publisher-side case studies with before/after Perplexity citation metrics are still rare. Much of the current playbook rests on converging practitioner evidence. That’s fine—as long as you run your own experiments. Try an A/B refresh cadence across two similar content clusters. Document changes and compare Citation Share and GA4 referrals after two to four weeks. Small, repeated tests beat waiting for the perfect study.
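
For the two-cluster comparison, a simple difference-in-differences calculation is one way to read the results. The text does not prescribe this technique; it is swapped in here as an assumption. It subtracts the change in the untouched cluster from the change in the refreshed cluster, so that panel-wide drift is not mistaken for an effect of the refresh. The numbers are illustrative only.

```python
def share_change(before, after):
    """Change in Citation Share, in percentage points."""
    return after - before


def refresh_effect(treated, control):
    """Difference-in-differences: treated change minus control change."""
    return share_change(*treated) - share_change(*control)


# (before, after) Citation Share in percent; made-up values.
refreshed_cluster = (20.0, 28.0)
untouched_cluster = (22.0, 24.0)

print(refresh_effect(refreshed_cluster, untouched_cluster))
```

With panels of 50–100 prompts, a difference of a few points can easily be noise, so repeat the test before drawing conclusions.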

Putting It All Together

Treat Perplexity like a discerning editor. Give it a clean, answer-first page with visible updates, credible references, and clear entities. Maintain technical hygiene so your content is easy to crawl, parse, and quote. Measure weekly, adjust quickly, and keep a log so you can connect updates to results over time.

For a deeper understanding of how Perplexity composes answers and grounds them in sources, the platform’s own explainer is helpful context: Deep Research overview (Perplexity, 2025).

If you want an introductory framework for AI search visibility strategy and measurement, these primers can help you operationalize the ideas in this guide:

- AI visibility and brand exposure in AI search

- LLMO metrics: measuring accuracy, relevance, and personalization

Ready to track and improve your Perplexity visibility? You can centralize prompt panels, monitor citations and mentions, and annotate updates in one place. Try Geneo’s AI search visibility monitoring to shorten the loop between content updates and measurable results.