Peec AI Review 2025: Prompt-Level Search Visibility for Marketers

Authoritative Peec AI review (2025): Measure prompt-level AI search visibility, competitor benchmarking, and actionable source analysis across ChatGPT, Perplexity, and Google AI Overviews.

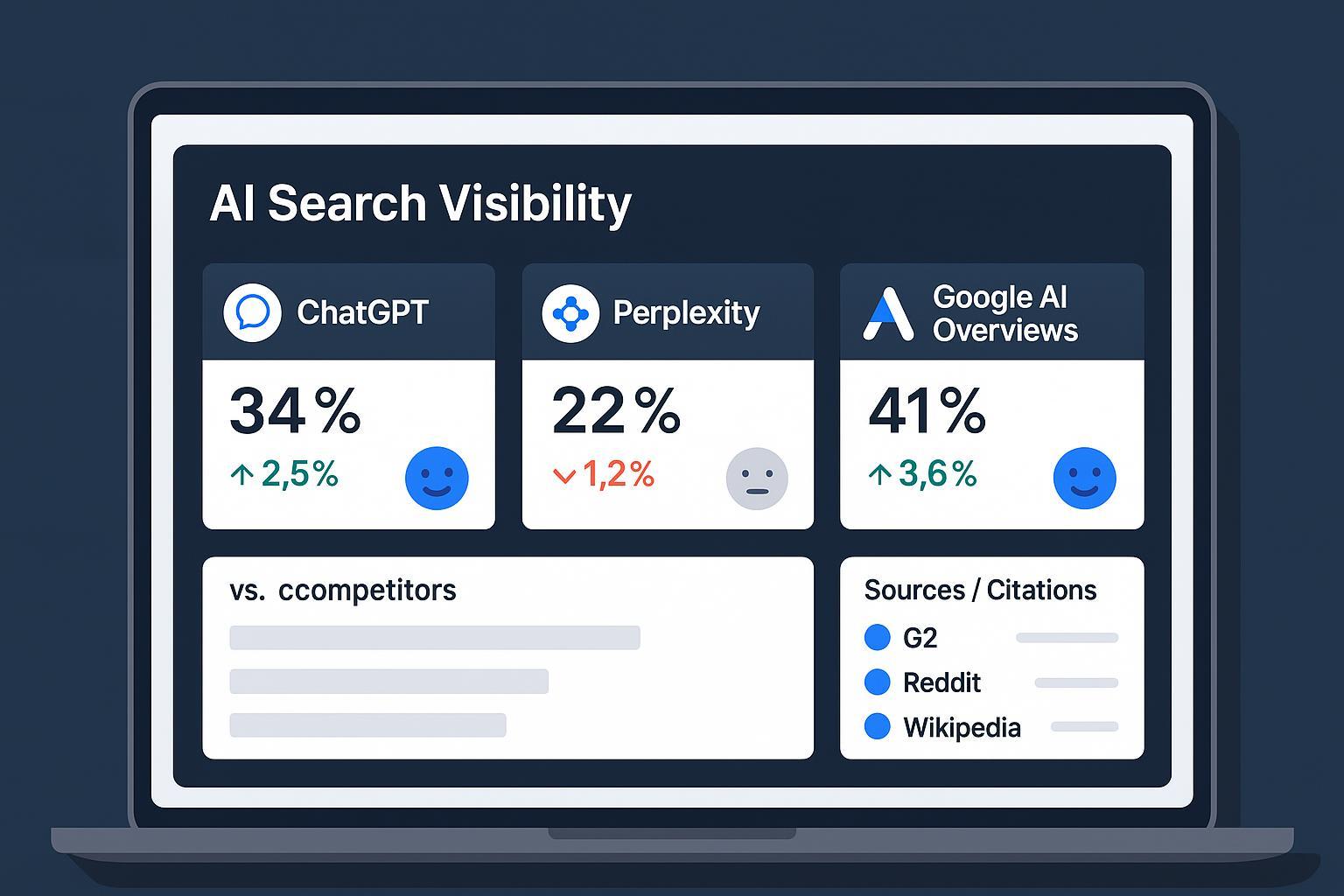

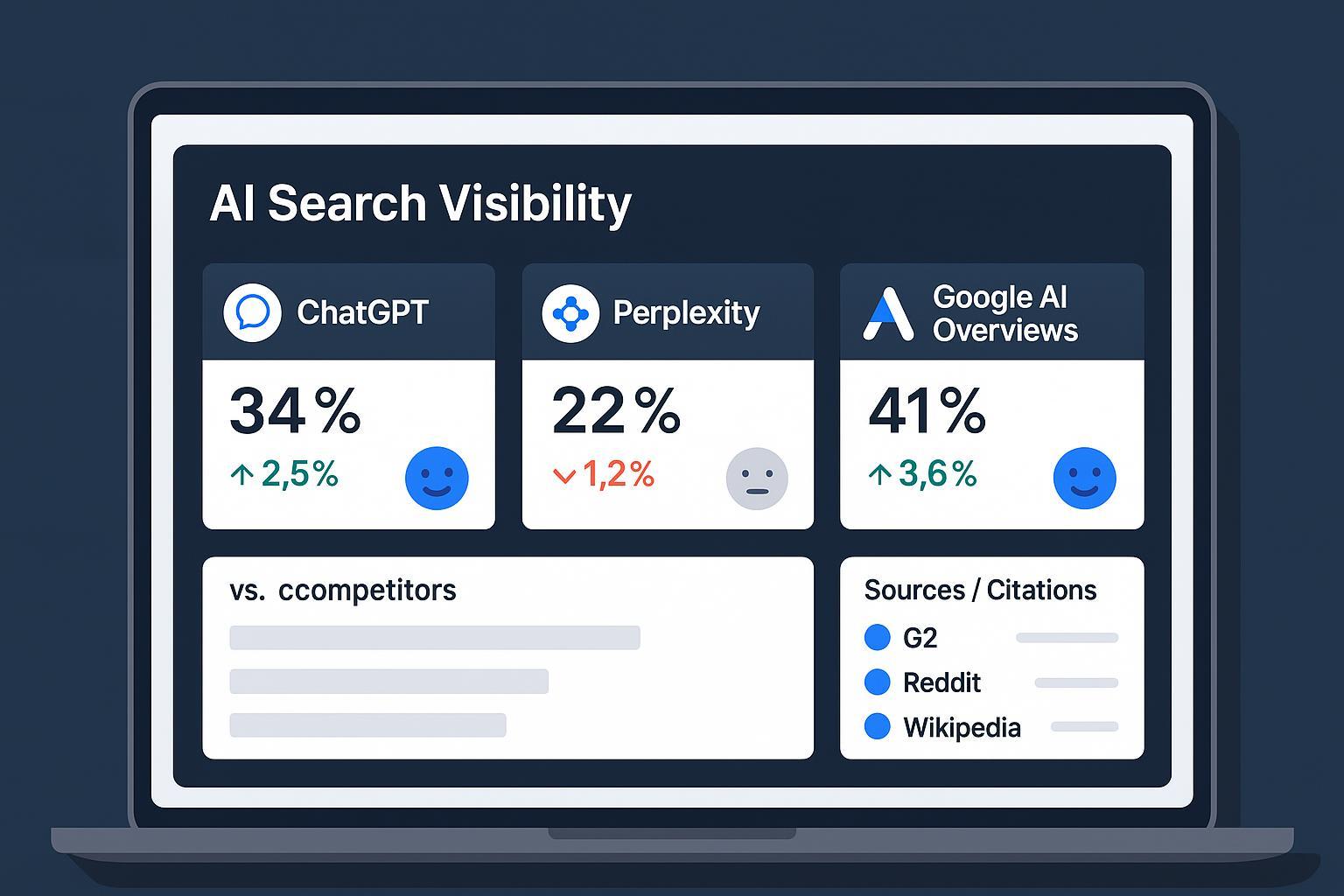

If your stakeholders are asking how your brand shows up in AI answers—not just Google’s blue links—Peec AI is one of the few platforms built to track visibility, sentiment, and citations across ChatGPT, Perplexity, and Google AI Overviews. In this review, we evaluate whether Peec AI turns those dashboards into action: prompt-level workflows, competitor benchmarking, source/citation targeting, and reporting integrations.

Quick context: In AI search, “visibility” refers to how often a brand appears in model-generated answers for relevant prompts, and in what position. For background on the strategy layer, see definitions of Answer Engine Optimization (AEO) and AI visibility.
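
To make that definition concrete, here is a minimal sketch of how visibility and average position could be computed from a batch of answers. The `Answer` record and `visibility_summary` function are hypothetical names for illustration; this is not Peec's published formula.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Answer:
    """One model-generated answer for a tracked prompt (hypothetical record)."""
    prompt: str
    # 1-based rank of the brand among brands mentioned; None if the brand is absent.
    brand_position: Optional[int]


def visibility_summary(answers):
    """Return (share of answers mentioning the brand, average position when mentioned)."""
    if not answers:
        return 0.0, None
    positions = [a.brand_position for a in answers if a.brand_position is not None]
    visibility = len(positions) / len(answers)
    avg_position = sum(positions) / len(positions) if positions else None
    return visibility, avg_position


# Made-up sample data: the brand appears in 3 of 4 answers.
sample = [
    Answer("best crm for startups", 2),
    Answer("best crm for startups", None),
    Answer("crm with email automation", 1),
    Answer("affordable crm tools", 3),
]
visibility, avg_position = visibility_summary(sample)
print(f"visibility={visibility:.0%}, avg position={avg_position:.1f}")
```

Keeping the two numbers separate matters: a brand can appear often but low in the answer, or rarely but first.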

Methodology and reviewer stance

Scope: This review synthesizes official documentation and practitioner write‑ups from October 2025, binding each claim to a primary source where available.

Engines and cadence: Peec AI runs prompts across supported models on a daily interval; the pricing page and docs specify daily refresh and core coverage (ChatGPT, Perplexity, and Google AI Overviews by default). See the official Peec pricing page (2025) and the dashboard guide in Understanding your dashboard (2025).

Evidence policy: When a detail isn’t publicly disclosed (e.g., sentiment calculation specifics, public API endpoints), we mark it as “Insufficient data.”

Terminology consistency: Where helpful, we reference a standardized AI Search Visibility Score definition for clarity.

What Peec AI does well

Peec’s strongest value lies in turning model-by-model answers into measurable marketing inputs.

Daily prompt runs across core models:

According to the 2025 Peec pricing page, Starter and Pro plans include ChatGPT, Perplexity, and Google AI Overviews, with prompts “run across models on a daily interval.” Enterprise tiers can add engines like Gemini, Google AI Mode, Claude, DeepSeek, Llama, and Grok for an additional fee (pricing details vary).

Prompt management built for campaigns:

The docs note character limits and filtering/tagging to keep prompts organized and analyzable. See the 2025 setup notes in Setting up your prompts.

Competitor benchmarking that’s easy to read:

The dashboard ranks tracked brands by visibility, position, and sentiment, and highlights changes over a selectable window, per the 2025 docs in Understanding your dashboard.

Source/citation analysis with practical categories:

Peec categorizes domains into editorial, user‑generated content (e.g., Reddit, G2, YouTube), corporate, reference, and institutional, and exposes metrics like “Used %” and “Avg. citations,” with filters by prompt and model. See the 2025 docs in Understanding sources and Peec’s guidance article, How to get the most out of sources (2025).
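
As a rough illustration of what metrics like “Used %” and “Avg. citations” might capture, the sketch below computes both from per-answer citation lists. The definitions are assumptions made for this example (share of answers citing a domain at least once, and citations per answer that uses it); Peec's docs should be treated as the authority on the actual calculations.

```python
from collections import Counter


def source_metrics(answers):
    """answers: one list of cited domains per AI answer (repeats allowed).

    Assumed definitions, for illustration only:
      used_pct      = % of answers that cite the domain at least once
      avg_citations = citations of the domain per answer that cites it
    """
    used_in = Counter()
    total_citations = Counter()
    for cited in answers:
        total_citations.update(cited)   # counts every citation
        used_in.update(set(cited))      # counts each answer once per domain
    n = len(answers)
    return {
        domain: {
            "used_pct": 100 * used_in[domain] / n,
            "avg_citations": total_citations[domain] / used_in[domain],
        }
        for domain in used_in
    }


# Made-up citation lists for four answers.
answers = [
    ["reddit.com", "g2.com", "reddit.com"],
    ["g2.com"],
    ["example-blog.com"],
    [],
]
metrics = source_metrics(answers)
for domain, m in sorted(metrics.items()):
    print(domain, m)
```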

Reporting integrations that fit board-facing workflows:

The homepage emphasizes fast CSV exports and connector support; the docs outline a Looker Studio connector (2025) to automate dashboards.

Practitioner corroboration:

Marketing practitioner reviews in 2025 describe daily runs, prompt quotas, and country limits consistent with Peec’s official pages—see the hands-on MarketerMilk Peec AI review (2025).

From insights to outcomes: workflows that actually move the needle

Peec is most useful when teams connect the dots from prompts to PR/content actions. Below is a workflow pattern you can adapt.

Prompt management and tagging

Define a prompt set aligned to your ICP and campaigns. Keep phrasing natural—how a user would ask ChatGPT or Perplexity. Tag by theme (product category, competitor, use case).

Track across models daily and review 7–14 day trends to spot rising prompts and visibility shifts.
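
One way to review those 7–14 day trends outside the dashboard is to compare the latest window of daily visibility values with the window before it. The sketch below assumes you already have a daily series per prompt (for example from an export); the prompt names, values, and the 0.05 threshold are made up.

```python
from statistics import mean


def window_delta(daily_values, window=7):
    """Mean of the latest `window` days minus the mean of the window before it."""
    if len(daily_values) < 2 * window:
        raise ValueError("need at least two full windows of data")
    recent = daily_values[-window:]
    previous = daily_values[-2 * window:-window]
    return mean(recent) - mean(previous)


# Hypothetical daily visibility shares per prompt, oldest first.
series = {
    "best platform for X": [0.2] * 7 + [0.4] * 7,
    "X vs Y comparison": [0.5] * 14,
}

# Flag prompts whose visibility rose by more than 5 points week over week.
rising = {
    prompt: round(window_delta(values), 2)
    for prompt, values in series.items()
    if window_delta(values) > 0.05
}
print(rising)
```

Setting `window=14` gives the longer comparison; the function raises an error rather than silently comparing partial windows.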

Competitor benchmarking by theme

Add the top 3–5 competitors you lose to in AI answers. Monitor visibility, position, and sentiment. Focus your efforts where your position trails by model (e.g., strong in Perplexity, weak in Google AI Overviews, abbreviated AIO below) to tailor content and PR.
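
The "where does our position trail, by model" question can be sketched as a small comparison. The data shape, brand names, and numbers below are hypothetical; lower position values are assumed to be better.

```python
def trailing_models(positions, brand):
    """positions: {model: {brand_name: avg_position}}; lower is better.

    Returns {model: gap} for models where `brand` trails its best rival,
    sorted with the largest gap first.
    """
    gaps = {}
    for model, by_brand in positions.items():
        rivals = [p for name, p in by_brand.items() if name != brand]
        if brand not in by_brand or not rivals:
            continue  # nothing to compare on this model
        gap = by_brand[brand] - min(rivals)
        if gap > 0:
            gaps[model] = round(gap, 2)
    return dict(sorted(gaps.items(), key=lambda kv: kv[1], reverse=True))


# Made-up average positions per model.
positions = {
    "Perplexity": {"us": 1.5, "rival_a": 2.0},
    "Google AI Overviews": {"us": 3.5, "rival_a": 2.0, "rival_b": 2.5},
    "ChatGPT": {"us": 2.5, "rival_a": 2.0},
}
priorities = trailing_models(positions, "us")
print(priorities)
```

The sorted output doubles as a priority list: the model with the largest gap is where tailored content and PR are likely to matter most.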

Source/citation targeting

Use Sources filters to isolate which domains are shaping answers on your winning and losing prompts. Editorial domains, high‑signal reference pages, and UGC communities often drive model grounding.

Plan outreach and content updates around those domains: reference‑quality explainer pages, third‑party reviews, and community posts. Peec’s own guidance on sources can be paired with this step; for execution tactics, see our playbook on how to optimize content for AI citations.

Reporting and stakeholder alignment

Export clean CSVs for granular analysis and trendline tracking. Use the Looker Studio connector to consolidate weekly visibility/position charts by model and prompt tag for board reviews.
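
For teams that want the same weekly rollup without a BI tool, a CSV export can be aggregated with the standard library. The column names (`date`, `model`, `tag`, `visibility`) and the rows are assumptions for this sketch; check the actual export headers before reusing it.

```python
import csv
import io
from collections import defaultdict
from datetime import date
from statistics import mean

# Hypothetical export content; a real file would be opened with open(path, newline="").
EXPORT = """date,model,tag,visibility
2025-10-06,ChatGPT,pricing,0.40
2025-10-07,ChatGPT,pricing,0.60
2025-10-13,ChatGPT,pricing,0.70
2025-10-06,Perplexity,pricing,0.20
"""


def weekly_visibility(csv_text):
    """Average visibility per (ISO year, ISO week, model, tag)."""
    buckets = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        iso = date.fromisoformat(row["date"]).isocalendar()
        key = (iso[0], iso[1], row["model"], row["tag"])
        buckets[key].append(float(row["visibility"]))
    return {key: round(mean(vals), 3) for key, vals in sorted(buckets.items())}


weekly = weekly_visibility(EXPORT)
for key, value in weekly.items():
    print(key, value)
```

Grouping by ISO week keeps week boundaries consistent across reports, which helps when the same chart is reviewed every week.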

Regional strategy

Allocate your limited country slots to priority markets. Adapt prompts to local language and terms; some engines (notably Google AIO) vary materially by locale.

Illustrative scenario (not a measured case): A scale‑up tracked ~40 campaign prompts for a core product theme. Peec highlighted rising AI search volume around “best platform for X use case” and exposed citations skewing toward G2 profiles and niche editorial blogs. After refreshing the landing page content, earning updated mentions on two editorial domains, and engaging the relevant subreddit with a transparent walkthrough, the team saw a multi‑week uplift in visibility share and sentiment in Peec—first in Perplexity, then mirrored in AIO. While results vary, this kind of prompt‑to‑source action path is where Peec’s data is most valuable.

A skeptic’s endorsement: where Peec earned trust—and where it didn’t

What worked:

Prompt-level visibility by model surfaces opportunities that classic SEO suites don’t track.

Source categorization helps translate dashboards into PR and content work.

CSV exports and the Looker Studio connector make it possible to operationalize reporting without vendor lock‑in.

Reasonable doubts and caveats:

API transparency: The homepage and docs mention automation via API, but we could not locate public developer endpoints or specifications in October 2025. Status: Insufficient data; plan on CSV/Looker for now.

Sentiment methodology: Sentiment is displayed alongside visibility/position, but calculation specifics are not publicly documented. Status: Insufficient data; consider vendor briefings if sentiment is critical to board reporting.

Add‑on engines and pricing: The pricing page notes that Enterprise plans can add Gemini, Google AI Mode, Claude, DeepSeek, and other engines for a fee. Exact pricing and quotas vary, so anchor your choice on the Peec pricing page and confirm with sales.

Learning curve: Effective prompt strategy and source outreach require cross‑functional marketing and PR execution. Peec surfaces the signals; teams still need to do the work.

Comparison snapshot: Peec AI vs Profound vs Otterly AI

Equal criteria applied: engine coverage, cadence, quotas/pricing context, sources/citation depth, and reporting integrations.

Engine coverage

Peec: Core coverage of ChatGPT, Perplexity, and Google AI Overviews on standard tiers; enterprise add‑ons for Gemini, Google AI Mode, Claude, DeepSeek, Llama, Grok (per the 2025 Peec pricing page).

Profound: Public posts indicate tracking across ten engines, including ChatGPT, Google AI Mode, Google AI Overviews, Gemini, Copilot, Perplexity, Grok, Meta AI, DeepSeek, and Claude, as described in the 2025 Profound blog post introducing Claude support.

Otterly: Official pages list Google AI Overviews, ChatGPT, Perplexity, Google AI Mode, Gemini, and Copilot, with a free tier and paid plans starting at $29 for 10 prompts; cadence is weekly after an initial report, per the 2025 Otterly help page on buying a plan.

Cadence

Peec: Daily runs (docs and pricing).

Profound: Near‑real‑time is implied in some posts; specifics not publicly documented. Status: Insufficient data.

Otterly: Weekly insights after first report.

Quotas and pricing context

Peec: Starter/Pro prompt and country caps, unlimited seats; enterprise increases quotas (see pricing page).

Profound: Sales‑led enterprise; quotas and pricing not public. Status: Insufficient data.

Otterly: Starts at $29 for 10 prompts; more tiers available; details on exports and admin controls in official docs and T&Cs.

Sources/citation analysis depth

Peec: Detailed categorization and domain/URL metrics with filters across prompts and models (docs and guidance article linked above).

Profound: Strong breadth of engine coverage and features like prompt volumes; public details on citation categorization are limited. Status: Insufficient data.

Otterly: Shows link citations and domain rankings; public granularity on categorization varies by plan. Status: Insufficient data.

Reporting and integrations

Peec: CSV exports and a documented Looker Studio connector (2025 docs). Public API documentation not found. Status: Insufficient data.

Profound: Dashboard‑centric; public API documentation not found. Status: Insufficient data.

Otterly: Exports controlled via admin; no public API; weekly cadence.

For practitioner context on Peec vs Profound, see the neutral 2025 comparison by Nick Lafferty, Profound vs PEEC AI. For Peec vs Otterly overviews, market roundups like Writesonic’s 2025 piece, PEEC AI vs Otterly AI, can help frame trade‑offs.

Who should choose Peec AI—and who shouldn’t

Choose Peec AI if:

You need daily, prompt‑level visibility across ChatGPT, Perplexity, and Google AI Overviews, with source filters that directly inform PR and content.

Your team can act on source insights (earning editorial mentions, improving reference‑grade pages, managing review platforms).

You want to export data and build your own dashboards without waiting for a vendor API.

Consider alternatives if:

You require breadth across many engines (Meta AI, Copilot, Grok, etc.) under a single enterprise contract and are willing to accept sales‑led onboarding—tools like Profound fit this profile.

You prefer lower cost and are okay with weekly cadence and lighter source detail—Otterly is a reasonable entry point.

Your organization needs broader multi‑platform sentiment tracking and roadmap suggestions across AI engines in parallel; see the alternatives section below for a neutral overview of options.

Scoring rubric (evidence‑bound)

Weights aligned with practitioner priorities; “Insufficient data” where public detail is missing.

Coverage & accuracy of tracking (25/100): Daily runs and core model coverage are clearly documented; enterprise add‑ons expand coverage. Strong score.

Actionability of insights (20/100): Source categorization, domain/URL metrics, and prompt filters translate to PR/content work. Strong score.

Usability & onboarding (15/100): Prompt tagging, competitor views, and clear dashboards help adoption; mild learning curve for prompt strategy. Above average.

Reporting & integrations (15/100): CSV exports and the Looker Studio connector are documented; public API uncertainty reduces maximum score. Above average.

Competitive intelligence (10/100): Brand‑by‑brand rankings and trend arrows are helpful; alerting depth not fully detailed publicly. Moderate.

Data freshness & method transparency (10/100): Daily cadence documented; interaction “as real users do” noted; sentiment and API specifics remain proprietary. Moderate.

Value/pricing (5/100): Published tiers and unlimited seats are clear; add‑on engine pricing varies. Above average.

Overall: A strong practitioner tool for daily, prompt‑level AI visibility and source targeting. The lack of public API specs and sentiment methodology detail are the main caveats.
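
The rubric weights sum to 100, so a weighted total is straightforward to compute once each qualitative rating is mapped to a number. The mapping below (Strong = 90, Above average = 75, Moderate = 60) is a hypothetical scale chosen for illustration; this review does not publish numeric scores, and the printed total should not be read as one.

```python
# Weights as listed in the rubric.
WEIGHTS = {
    "Coverage & accuracy of tracking": 25,
    "Actionability of insights": 20,
    "Usability & onboarding": 15,
    "Reporting & integrations": 15,
    "Competitive intelligence": 10,
    "Data freshness & method transparency": 10,
    "Value/pricing": 5,
}

# Hypothetical 0-100 scale for the qualitative labels (an assumption, not from the review).
SCALE = {"Strong": 90, "Above average": 75, "Moderate": 60}

# Qualitative ratings as stated in the rubric.
RATINGS = {
    "Coverage & accuracy of tracking": "Strong",
    "Actionability of insights": "Strong",
    "Usability & onboarding": "Above average",
    "Reporting & integrations": "Above average",
    "Competitive intelligence": "Moderate",
    "Data freshness & method transparency": "Moderate",
    "Value/pricing": "Above average",
}


def weighted_total(weights, ratings, scale):
    """Weighted average on a 0-100 scale; weights must sum to 100."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    return sum(weights[c] * scale[ratings[c]] for c in weights) / 100


total = weighted_total(WEIGHTS, RATINGS, SCALE)
print(total)
```

Swapping in your own scale or weights is the point of the exercise: teams that care more about reporting than coverage can reweight and compare tools on the same basis.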

Your first 30–60 days with Peec AI

Week 1: Define prompts (≤200 characters) by campaign theme; tag them; add top competitors; select priority countries.

Week 2: Review visibility and position deltas by model; isolate rising prompts; start Sources analysis for those prompts.

Week 3: Launch PR/content actions targeting high‑impact editorial/reference domains and review sites; refresh key landing pages and docs.

Week 4–6: Track trendlines; consolidate weekly reports via CSV/Looker; adjust prompts and regions; expand outreach to influential communities (Reddit, YouTube) where relevant.

Ongoing: For Google‑specific work, apply Google AI Mode best practices (2025) to align content and citations.
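
Before loading a Week 1 prompt set, it can help to check it offline. The sketch below enforces the 200-character limit mentioned above and flags untagged or duplicate prompts; the dict shape and the `validate_prompts` helper are hypothetical and unrelated to any Peec API.

```python
MAX_PROMPT_CHARS = 200  # limit cited in the Week 1 step


def validate_prompts(prompts):
    """prompts: list of dicts with 'text' and 'tags'. Returns (text, problem) pairs."""
    problems = []
    seen = set()
    for p in prompts:
        text = p["text"].strip()
        if len(text) > MAX_PROMPT_CHARS:
            problems.append((text, "too long"))
        if not p.get("tags"):
            problems.append((text, "missing tags"))
        key = text.lower()
        if key in seen:
            problems.append((text, "duplicate"))
        seen.add(key)
    return problems


# Made-up prompt set for illustration.
prompt_set = [
    {"text": "What is the best platform for X use case?", "tags": ["use case"]},
    {"text": "what is the best platform for x use case?", "tags": ["use case"]},
    {"text": "How does Brand A compare to Brand B?", "tags": []},
    {"text": "x" * 201, "tags": ["stress test"]},
]
issues = validate_prompts(prompt_set)
for text, problem in issues:
    print(problem, "->", text[:40])
```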

Alternatives and toolbox (neutral parity)

Geneo — Generative/Answer Engine Optimization platform

Geneo monitors multi‑platform AI visibility and sentiment with prompt history and workspace management, and it transforms insights into an actionable content roadmap. Practically, teams use Geneo to track mentions across AI engines, evaluate sentiment shifts, and prioritize content updates based on model‑specific gaps. If your focus is ongoing multi‑platform monitoring plus guidance on “what to publish next,” Geneo is a credible option.

Profound — Enterprise answer‑engine tracking across many models

Tracks a broad set of engines and publishes feature updates for platforms like Claude and Grok; see the 2025 Profound blog post introducing Claude support for scope. Sales‑led pricing and methodology details are not public.

Otterly AI — Budget‑friendly entry point

Weekly insights and starting plans around $29 for 10 prompts; suitable for teams testing the waters with lighter cadence, per the 2025 Otterly help page.

For a wider landscape of tools designed for Google’s AI surfaces, you can also explore our neutral roundup of best AI Overview tracking tools.

Verdict

Peec AI is a compelling choice for marketing and SEO teams who want prompt‑level clarity and daily tracking across ChatGPT, Perplexity, and Google AI Overviews—and who have the PR/content muscle to act on source insights. The combination of structured source data, competitor benchmarking, and exportable reporting hits the practical needs of scale‑ups and agencies.

The main trade‑offs are API transparency and sentiment methodology specifics. If you can operate with CSV exports and the Looker Studio connector and prioritize visible source work over black‑box sentiment, Peec AI earns a place in your stack. If you need comprehensive multi‑engine coverage under a single enterprise umbrella, Profound is the better fit; if you need ultra‑low cost and can accept a weekly cadence, Otterly is.

—

Disclosures: No sponsorships or affiliate arrangements influenced this review. All information was obtained from official documentation and practitioner sources in October 2025.