What is a Large Language Model (LLM)? Definition, Key Components & Applications

Discover what a Large Language Model (LLM) is, including its definition, core principles, and real-world applications in AI search optimization, brand management, and content generation. Learn how LLMs like GPT and BERT power modern AI, and how platforms like Geneo use LLMs to boost brand visibility and content performance. This guide explains the definition, meaning, and use cases of LLMs for marketers, businesses, and tech professionals.

One-Sentence Definition

A Large Language Model (LLM) is an advanced artificial intelligence system, built on deep learning and transformer architectures, that understands and generates human-like text by processing vast amounts of data. (IBM, EM360Tech)

Detailed Explanation

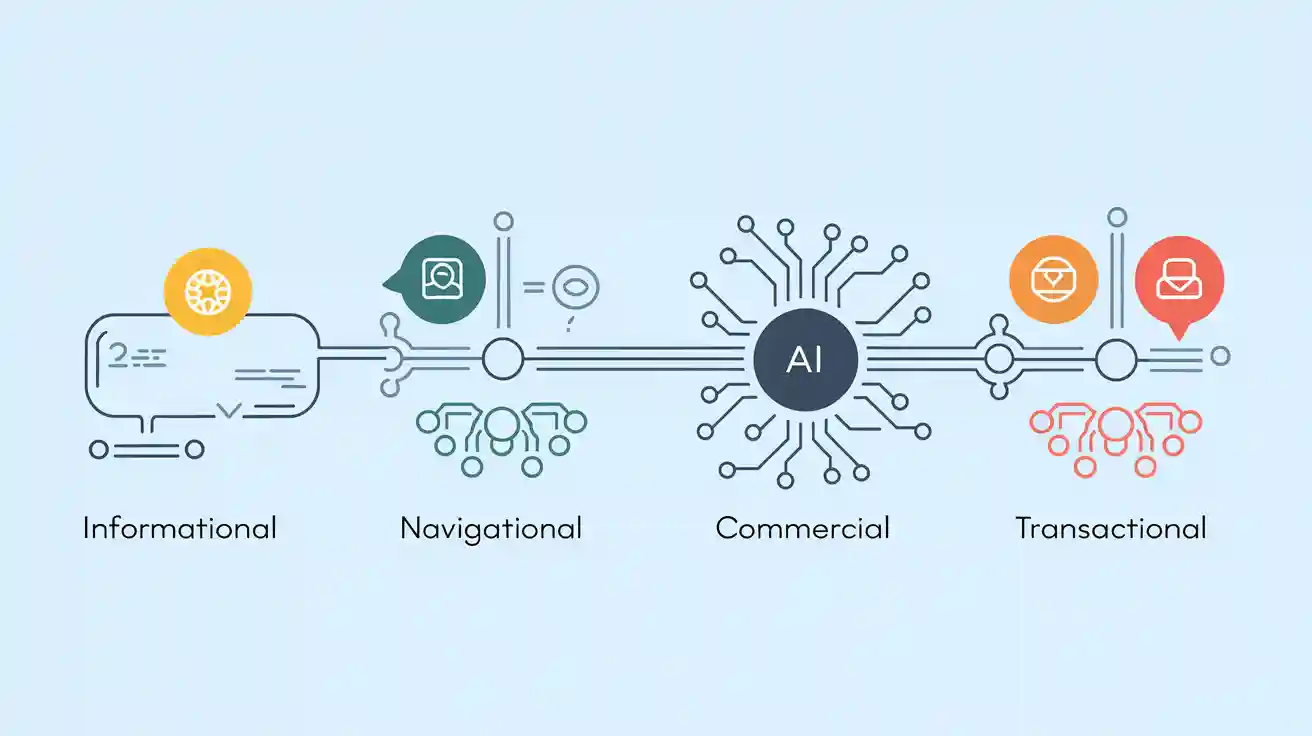

LLMs are foundational models in AI, trained on massive datasets that often contain billions to trillions of words from books, articles, and the web. Using self-supervised learning, they learn language patterns, context, and meaning without explicit human labeling. The core technology behind LLMs is the transformer architecture, which uses self-attention mechanisms to weigh the relationships between all words in a sequence, regardless of how far apart they are. This enables LLMs to generate coherent, contextually relevant text, answer questions, summarize content, translate languages, and more. While LLMs can mimic human conversation and creativity, they do not "understand" language as humans do; instead, they predict the most likely next token (a word or word fragment) based on learned patterns.
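To make the self-attention idea above more concrete, here is a minimal sketch in plain Python. It is a simplification under stated assumptions, not how any production model is implemented: the two-dimensional word vectors are invented for illustration, and the same vectors serve as queries, keys, and values, whereas a real transformer first applies learned projection matrices.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Simplification: real transformers derive separate queries, keys,
    and values from learned projection matrices.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word in the sequence, near or far.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The new representation is a weighted average of all word vectors.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for a three-word sequence.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(embeddings)
```

In this toy input the first and third vectors are identical, so the first word attends to the third exactly as strongly as to itself even though they are not adjacent. That is the sense in which attention ignores position.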

Key Components of LLMs

Massive Training Data: LLMs are trained on diverse, large-scale text corpora, enabling them to capture a wide range of language nuances.

Transformer Architecture: The backbone of modern LLMs, transformers use self-attention to process input sequences efficiently and capture long-range dependencies.

Billions of Parameters: These models have from hundreds of millions to hundreds of billions of adjustable weights (parameters), allowing them to model complex language relationships.

Pre-training and Fine-tuning: LLMs are first pre-trained on general data, then fine-tuned for specific tasks or industries (e.g., legal, healthcare, marketing).

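Self-Supervised Learning: LLMs learn by predicting words that are hidden from them, either the next word in a sequence (as in GPT) or masked words within a sentence (as in BERT). The training signal comes from the text itself, so no manual labeling is required.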

Prompt Engineering: Users can guide LLM outputs by crafting specific prompts, improving relevance and accuracy.
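The self-supervised learning component listed above can be illustrated with a deliberately tiny sketch. It assumes a toy word-count (bigram) model in place of a neural network, and the corpus and function names are hypothetical. The point is only that the training targets are taken from the raw text itself, with no human-written labels.

```python
from collections import Counter, defaultdict

def make_training_pairs(text):
    """Derive (context word, next word) pairs directly from raw text."""
    words = text.lower().split()
    return list(zip(words, words[1:]))

def train_bigram_model(pairs):
    """Count how often each word follows each context word."""
    counts = defaultdict(Counter)
    for context, target in pairs:
        counts[context][target] += 1
    return counts

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical miniature corpus; real models train on vastly more text.
corpus = "the cat sat on the mat and the cat slept"
pairs = make_training_pairs(corpus)
model = train_bigram_model(pairs)
prediction = predict_next(model, "the")  # "cat" follows "the" twice, "mat" once
```

An actual LLM replaces the count table with a transformer holding billions of parameters and conditions on the whole preceding context instead of a single word, but the objective of predicting the next token from what came before is the same in spirit.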

Real-World Applications

LLMs are transforming industries by automating and enhancing language-related tasks:

Content Generation: Automatically create blog posts, marketing copy, product descriptions, and social media content.

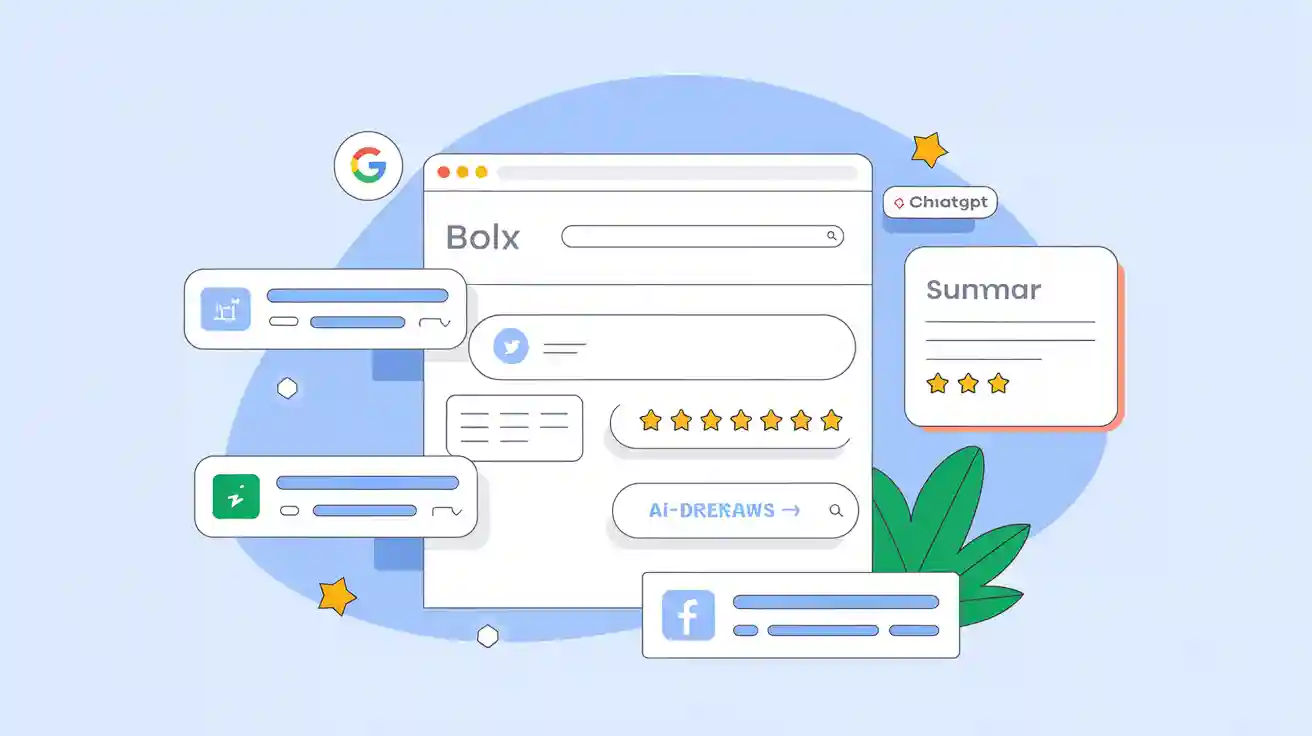

AI Search Optimization: Platforms like Geneo leverage LLMs to monitor and optimize brand visibility in AI-driven search engines (e.g., ChatGPT, Perplexity, Google AI Overviews), helping brands improve their ranking and exposure.

Sentiment Analysis: Analyze customer feedback, reviews, and social media to gauge public sentiment and inform brand strategy.

Customer Support: Power chatbots and virtual assistants that provide instant, context-aware responses.

Language Translation: Deliver accurate, context-sensitive translations across multiple languages.

Trend and Competitive Analysis: Identify emerging topics, track competitors, and generate actionable insights from unstructured data.

Personalized Recommendations: Enhance user experiences in e-commerce, entertainment, and education by tailoring content and suggestions.

Related Concepts

Natural Language Processing (NLP): The broader field of AI focused on understanding and generating human language.

Transformer: The neural network architecture that powers most LLMs, enabling efficient processing of sequential data.

Generative AI: AI systems that create new content, such as text, images, or code, based on learned patterns.

Pre-trained Models: LLMs like GPT, BERT, LLaMA, and Gemini are pre-trained on large datasets and then fine-tuned for specific uses.

Prompt Engineering: The practice of designing input prompts to elicit desired outputs from LLMs.
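As a rough illustration of prompt engineering, the sketch below assembles a few-shot prompt: an instruction, a couple of worked examples, and the new input. The function name, the "Input:/Output:" layout, and the sample reviews are all assumptions made for this example; no specific LLM API is called, and the resulting string would simply be passed to whichever model you use.

```python
def build_prompt(task, examples, query):
    """Assemble a few-shot prompt: instruction, worked examples, new input."""
    lines = [task.strip(), ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # End with an open "Output:" so the model continues with its answer.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

# Hypothetical reviews, invented for illustration.
prompt = build_prompt(
    task="Classify the sentiment of each customer review as positive or negative.",
    examples=[
        ("The checkout was fast and easy.", "positive"),
        ("My order arrived broken.", "negative"),
    ],
    query="Support resolved my issue within minutes.",
)
```

Showing the model the expected format through examples tends to make its output more consistent than an instruction alone, which is one common way prompts are refined.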

Limitations and Challenges

Despite their power, LLMs have notable limitations:

Computational Demands: Training and running LLMs require significant computing resources and energy.

Bias and Hallucinations: LLMs can reflect biases in their training data and sometimes generate inaccurate or misleading information (ProjectPro).

Privacy Risks: Sensitive information in training data can lead to privacy concerns.

Knowledge Staleness: LLMs do not update their knowledge in real time and may provide outdated information.

Complex Reasoning: They may struggle with multi-step logic or nuanced understanding beyond pattern recognition.

Further Reading

Geneo helps brands and enterprises optimize their presence in AI-powered search and content platforms using advanced LLM-driven analytics and recommendations. Learn more about Geneo and boost your brand’s visibility in the AI era.