How to Transform SEO with Vectors: Practical Guide for Marketers

Discover how to use vectors for SEO, boost AI answer visibility, and benchmark competitors in this step-by-step guide for agencies.

Single-keyword reporting can’t explain why competitors appear in AI answers when you don’t. Modern search blends exact terms with meaning. Vectors—dense numerical representations of language—make that possible by turning text into coordinates in a semantic space. Engines then retrieve passages that sit “closest” to a query’s intent, even if the wording differs.

This guide keeps vector talk practical for agency teams. We’ll translate hybrid retrieval and passage-level ranking into workflows your SEO leads can run and your client-success teams can explain, with a competitive intelligence lens for founders and growth leaders.

Vectors and embeddings, in marketer terms

A vector is a GPS pin for meaning; an embedding model maps text onto that coordinate system. That’s why “pricing” content can match a query for “cost” without using the exact word.
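
To make the "GPS pin" idea concrete, here is a minimal sketch of cosine similarity, a common way to measure how close two vectors are. The three-dimensional vectors below are hypothetical, hand-picked values for illustration only; real embedding models return hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings" (illustrative values, not model output).
toy_vectors = {
    "pricing": [0.90, 0.10, 0.05],
    "cost": [0.85, 0.15, 0.10],
    "careers": [0.05, 0.20, 0.95],
}

# Score every other term against the query term "cost".
query = toy_vectors["cost"]
scores = {
    term: cosine_similarity(query, vector)
    for term, vector in toy_vectors.items()
    if term != "cost"
}
best_match = max(scores, key=scores.get)
```

With these toy values, "pricing" scores far higher than "careers" against "cost", which is the behavior that lets a pricing page match a cost query without sharing the word.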

Major platforms document this shift. Google’s Vertex AI Vector Search overview explains hybrid search that combines keyword and semantic signals. Microsoft’s Azure AI Search semantic ranking documentation describes lexical retrieval followed by an L2 (second-stage) semantic re-ranker derived from Bing models. Elastic’s “Hybrid Search with multiple embeddings” shows BM25 and vector k‑NN results fused via reciprocal rank fusion (RRF). IBM’s “What is Vector Embedding?” clarifies the concept for similarity, clustering, and retrieval.

Why it matters: vectors reduce vocabulary mismatch and elevate answerable passages. For reporting, you can move from “we targeted synonyms” to “we built semantic coverage for the full intent.”

Hybrid retrieval and passage-level ranking, in practice

Most stacks mix sparse methods (e.g., BM25) with dense similarity, then fuse or re-rank results.

Passage-level retrieval is now table stakes. Vendors advise chunking content and retrieving segments rather than full pages; Google highlights this in its Vertex AI architectures for RAG with Vector Search. Elastic and Databricks document hybrid fusion patterns in which BM25 and k‑NN results are merged via RRF, which improves recall without hand-tuned weights (see Elastic’s guide and Databricks’ hybrid search GA).
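
The RRF step can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: each document earns 1 / (k + rank) from every ranked list it appears in, and the sums decide the fused order. The constant k=60 is a commonly used default and is an assumption here, and the page IDs are hypothetical.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one list.

    Each document scores 1 / (k + rank) per list it appears in (rank starts at 1).
    Ties are broken alphabetically so the output is deterministic.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results for one query from a keyword (BM25) and a vector (k-NN) retriever.
bm25_ranking = ["sku-page", "pricing-page", "blog-post"]
vector_ranking = ["pricing-page", "faq-page", "sku-page"]

fused = reciprocal_rank_fusion([bm25_ranking, vector_ranking])
```

Because RRF uses only rank positions, it does not need the BM25 and similarity scores to share a scale, which is why no hand-tuned weights are required.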

What to do differently on-page: structure pages so the most answerable passages are easy to extract. Use precise lexemes for product names and SKUs to satisfy lexical matching, then expand with adjacent concepts to satisfy semantic matching.

From keywords to semantic fields: a replicable workflow

You can run a low-lift analysis to reveal gaps without building full infrastructure.

Start with a semantic field. Take 50–200 seed queries across services, normalize them into question forms and intents, and embed both queries and your top URLs with a consistent model family. Cluster into 10–30 intent groups using nearest-neighbor similarity, name groups with specific entities (product models, standards), and list missing coverage.
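
As a rough sketch of the grouping step, the snippet below assigns each embedded query to the most similar existing group or starts a new one. It is a deliberately simple greedy approach under stated assumptions: the two-dimensional vectors are hypothetical stand-ins for real embeddings, the 0.8 threshold is arbitrary, and a production workflow would more likely use a dedicated clustering library.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def group_by_similarity(embedded_queries, threshold=0.8):
    """Greedy grouping: join the most similar group's seed vector, or start a new group."""
    groups = []  # each group: {"seed": vector, "queries": [text, ...]}
    for text, vector in embedded_queries:
        best_group, best_score = None, threshold
        for group in groups:
            score = cosine(vector, group["seed"])
            if score >= best_score:
                best_group, best_score = group, score
        if best_group is None:
            groups.append({"seed": vector, "queries": [text]})
        else:
            best_group["queries"].append(text)
    return [group["queries"] for group in groups]


# Hypothetical (query, embedding) pairs; real vectors come from your embedding model.
embedded_queries = [
    ("how much does X cost", [0.9, 0.1]),
    ("X pricing tiers", [0.85, 0.2]),
    ("how to install X", [0.1, 0.9]),
    ("X setup steps", [0.2, 0.85]),
]
intent_groups = group_by_similarity(embedded_queries)
```

Here the four queries fall into a cost group and a setup group. Naming each group and checking which ones lack a matching URL gives you the missing-coverage list.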

Draft passage plans for priority URLs: outline 3–6 answer-first passages per page, each leading with a crisp 2–4 sentence response to the centroid question, followed by supporting detail. Include critical lexemes (model numbers, specs) while writing to the semantic centroid—the “average meaning” of the cluster.

Baseline guardrails: start with 300–600 token chunks and 15–20% overlap, then validate recall on a benchmark set; Google emphasizes chunking quality in its Vertex AI architectures. Embed documents and queries with the same embedding model family; Microsoft’s Azure docs note the importance of this pipeline consistency.
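
A minimal chunking sketch under those guardrails might look like the following. It assumes the text is already split into tokens; the placeholder tokens below are hypothetical, and real token counts depend on your embedding model's tokenizer.

```python
def chunk_tokens(tokens, chunk_size=400, overlap_pct=15):
    """Split a token list into fixed-size chunks that overlap by overlap_pct percent."""
    if chunk_size <= 0 or not 0 <= overlap_pct < 100:
        raise ValueError("chunk_size must be positive and overlap_pct in [0, 100)")
    overlap = chunk_size * overlap_pct // 100
    step = max(1, chunk_size - overlap)
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the last chunk already reaches the end of the document
    return chunks


# Hypothetical 1,000-token page, using placeholder tokens w0..w999.
tokens = [f"w{i}" for i in range(1000)]
chunks = chunk_tokens(tokens)
```

With a 400-token chunk and 15% overlap, each new chunk starts 340 tokens after the previous one, so the last 60 tokens of one chunk reappear at the start of the next.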

Content and internal links: optimize for intent, not just terms

Write answer-first passages and link by semantic adjacency. Instead of generic “learn more,” use anchors that reflect intents (“compare X vs Y power consumption”). Disambiguate entities with schema.org types (Product, Organization, Person, CreativeWork) and add FAQPage/HowTo where appropriate. These practices make your content easier to retrieve and cite in answer surfaces.
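
For the FAQPage markup, a small helper can generate the JSON-LD from your answer-first passages. This is a sketch: the property names follow the schema.org FAQPage, Question, and Answer types, while the helper name and the sample question and answer are hypothetical.

```python
import json


def faq_jsonld(question_answer_pairs):
    """Build a schema.org FAQPage object from (question, answer) text pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in question_answer_pairs
        ],
    }


# Hypothetical passage content for illustration.
pairs = [
    ("How does X compare to Y on power consumption?",
     "X draws less power than Y at idle; see the comparison table for details."),
]
markup = json.dumps(faq_jsonld(pairs), indent=2)
```

The resulting string can be placed in a script tag of type application/ld+json on the page. Validate it with your preferred structured data testing tool before shipping.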

Measuring AI answer visibility: panels, KPIs, and trends

There isn’t an official Google dashboard for AI Overviews visibility, so agencies use audit panels and practitioner KPIs to describe “share of answers.” Track inclusion rate (where you appear), citation share (how much of the citations are yours), source prominence (position/visibility), and trends over time.
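
One way to operationalize these KPIs from an audit panel is sketched below. The formulas are our working assumptions rather than an industry standard: inclusion rate counts answered queries that cite you, citation share divides your citations by all citations, and prominence is approximated by the average position of your first citation. The panel rows and domains are hypothetical.

```python
def answer_visibility_kpis(panel, brand_domain):
    """Compute panel-level KPIs from rows of {"query": str, "citations": [domain, ...]}."""
    # Only queries that actually produced an AI answer with citations are counted.
    answered = [row for row in panel if row["citations"]]
    included = [row for row in answered if brand_domain in row["citations"]]
    total_citations = sum(len(row["citations"]) for row in answered)
    brand_citations = sum(row["citations"].count(brand_domain) for row in answered)
    first_positions = [row["citations"].index(brand_domain) + 1 for row in included]
    return {
        "inclusion_rate": len(included) / len(answered) if answered else 0.0,
        "citation_share": brand_citations / total_citations if total_citations else 0.0,
        "avg_first_position": (
            sum(first_positions) / len(first_positions) if first_positions else None
        ),
    }


# Hypothetical audit panel: cited domains per query, in the order they appear.
panel = [
    {"query": "q1", "citations": ["example.com", "rival.com"]},
    {"query": "q2", "citations": ["rival.com", "other.org", "example.com"]},
    {"query": "q3", "citations": ["rival.com"]},
    {"query": "q4", "citations": []},  # no AI answer shown for this query
]
kpis = answer_visibility_kpis(panel, "example.com")
```

Run the same function per engine and per date window to build the trend lines, and report the query set alongside the numbers so clients can see how the sample was designed.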

Interpret CTR impacts with caution; they vary by query type and timeframe. For context on measurement approaches and observed effects, see Semrush’s AI Overviews study (2024) and agency briefings like Seer Interactive’s AIO impact update (2025). Build per-engine dashboards, log sample design (query set, country/device, date windows), and disclose limitations—Google Search Console doesn’t expose AI Overviews filters.

For monitoring options and extended reading, explore 10 Best Google AI Overview Tracking Tools for China — GEO 2025.

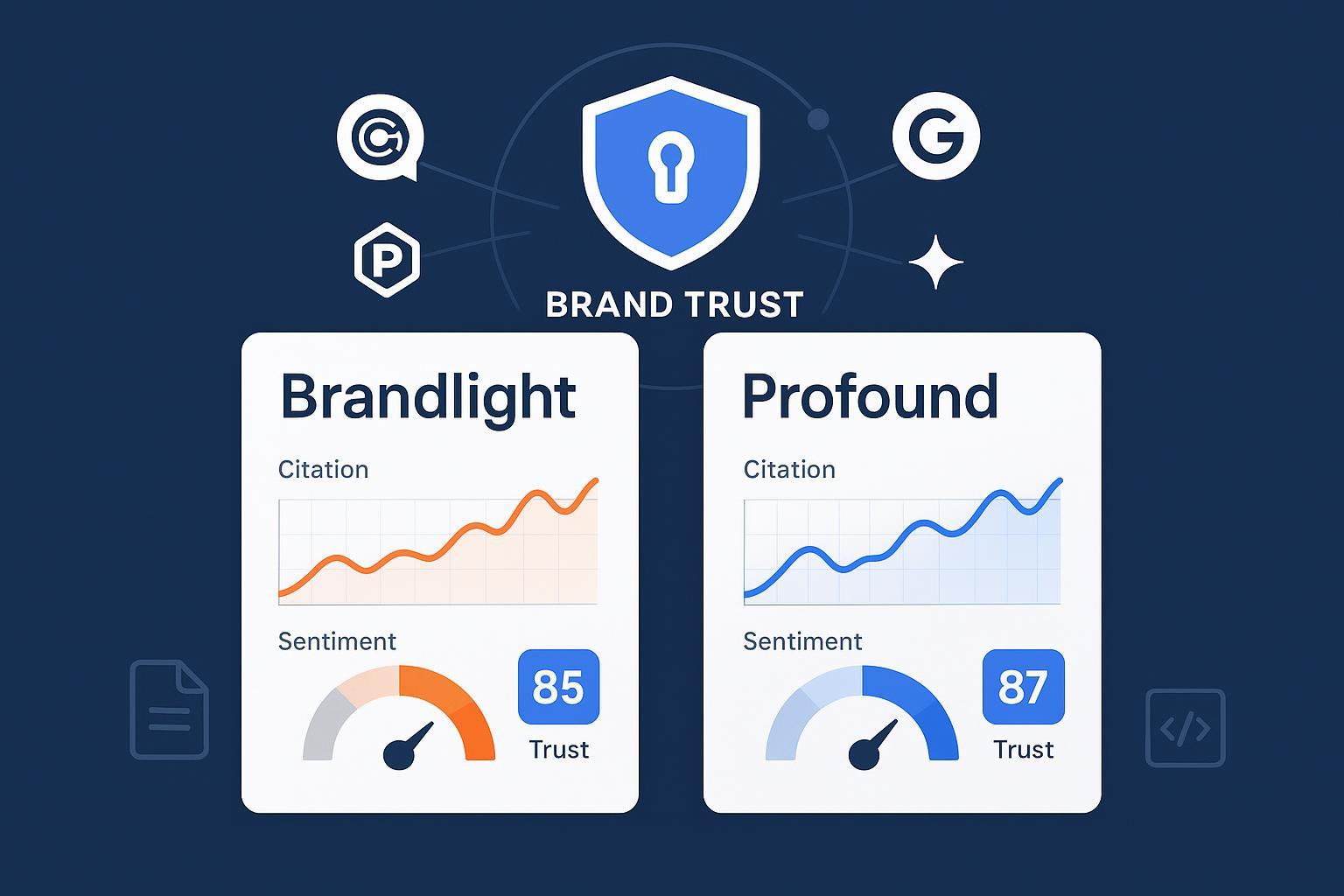

Competitive intelligence: map coverage to citations

Turn embeddings into a client-facing benchmark.

Build intent centroids by averaging query embeddings per cluster and measure how close each passage is to the centroid. Gaps are clusters where competitors sit closer—or where their content is cited in AI answers and yours isn’t. Overlay per-engine KPIs (inclusion, citation share, prominence) to show “coverage-to-citation” effectiveness.
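
The centroid comparison can be sketched as follows. This is an illustrative example with hypothetical two-dimensional vectors and site labels; in practice the vectors come from your embedding model and the passages from your own and competitors' crawled pages.

```python
import math


def centroid(vectors):
    """Element-wise average of a list of equal-length vectors."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def closeness_by_site(query_vectors, passages_by_site):
    """For each site, similarity of its best passage to the cluster's intent centroid."""
    center = centroid(query_vectors)
    return {
        site: max(cosine(center, passage) for passage in passages)
        for site, passages in passages_by_site.items()
    }


# Hypothetical cluster: two query embeddings and passage embeddings per site.
query_vectors = [[0.9, 0.1], [0.8, 0.2]]
passages_by_site = {
    "you": [[0.5, 0.5], [0.3, 0.7]],
    "competitor": [[0.85, 0.15]],
}
closeness = closeness_by_site(query_vectors, passages_by_site)
is_gap = closeness["competitor"] > closeness["you"]
```

In this toy cluster the competitor's passage sits almost exactly on the centroid while yours does not, so the cluster is flagged as a gap. Repeating the check per cluster yields the gap list to overlay with citation KPIs.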

Practical example (disclosure: Geneo is our product). You can monitor cross-engine inclusion and citation logs using Geneo and connect results back to your clusters. For a reference on the query-level visibility format, see the example for “8k video streaming bandwidth requirements.” Equivalent tooling works; the key is consistent measurement.

Troubleshooting and fine-tuning

Model mismatch/domain drift: index and query with the same embedding family; re-embed when your corpus changes. Azure’s docs call out this pipeline consistency.

Entity ambiguity: reinforce with schema and metadata; filter k‑NN results by entity/type where your platform supports it.

Chunk strategy: tiny chunks lose context; giant chunks dilute relevance. Start with 300–600 tokens and 15–20% overlap; validate recall@K. Guidance on chunking quality is highlighted in Vertex AI architectures.
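
Validating recall@K only needs a small benchmark of queries with known relevant chunks. The sketch below assumes hypothetical chunk IDs and hand-labeled relevance; the retrieved lists would come from your own retrieval setup.

```python
def recall_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the relevant chunks that appear in the top-k retrieved results."""
    if not relevant_ids:
        raise ValueError("each benchmark query needs at least one relevant chunk")
    top_k = set(retrieved_ids[:k])
    return len(top_k & set(relevant_ids)) / len(relevant_ids)


def mean_recall_at_k(benchmark, k):
    """Average recall@k across all benchmark queries."""
    return sum(
        recall_at_k(row["retrieved"], row["relevant"], k) for row in benchmark
    ) / len(benchmark)


# Hypothetical benchmark: ranked retrieval output and labeled relevant chunks per query.
benchmark = [
    {"retrieved": ["c1", "c7", "c3", "c9"], "relevant": ["c1", "c3"]},
    {"retrieved": ["c4", "c2", "c8", "c5"], "relevant": ["c5", "c6"]},
]
recall_at_3 = mean_recall_at_k(benchmark, k=3)
recall_at_4 = mean_recall_at_k(benchmark, k=4)
```

Re-run the same benchmark after each change to chunk size or overlap, and keep the setting that improves recall without inflating chunk counts beyond what your index can serve.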

Fusion balance: overweighting dense similarity can miss exact brand terms; overweighting sparse can miss paraphrases. Hybrid fusion patterns are documented by Elastic and Databricks.

Index parameters: HNSW/IVF settings trade off recall and latency; start with defaults, then A/B test changes against a benchmark set. See OpenSearch k‑NN docs.

Quick-start resources and a 30–60 minute pilot

Embeddings: OpenAI Embeddings, Google Vertex AI text embeddings.

Vector stores: Pinecone quickstart, Qdrant quick start.

Pilot plan (Colab): embed a 500‑URL sample and 100–300 queries; upsert into Pinecone/Qdrant; compute top‑K nearest neighbors by cluster centroid; export gap lists to CSV; brief writers on new/updated passages; schedule weekly AI answer audits.

What to do next

If you want to see where your brand shows up in AI answers—and where competitors beat you—start by measuring. Sign up for a free AI visibility analysis to establish your baseline and prioritize fixes.